The history of quantum computing: A complete timeline

The theories behind quantum computing go back decades, but progress has accelerated since the 2000s. Learn the key milestones to quickly understand how quantum computing developed.

Quantum computing has made considerable progress since theoretical ideas were first formally explored in the 1970s. It promises a dramatic leap in our ability to understand the world, improve business processes, and build better AI and machine learning systems. Yet it could also make it possible to crack the public key cryptography algorithms that underpin the digital economy.

Quantum computers encode and process information using information primitives called qubits, which are analogous to the bits used in classical computing. The key difference is that, while a bit can only be in one of two states, a qubit can exist in a superposition of both states at once. Combined with entanglement and interference, this lets quantum algorithms solve certain problems far more efficiently than classical computers can.
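To make the idea of superposition more concrete, here is a minimal pure-Python sketch (not a real quantum SDK) that represents one qubit as a pair of complex amplitudes. The helper names `hadamard` and `probabilities` are hypothetical, chosen for illustration. It shows a qubit placed in an equal superposition, interference returning it to a definite state, and why describing n qubits classically takes 2**n numbers.

```python
import math

# A qubit's state is a pair of complex amplitudes [alpha, beta] for the
# basis states |0> and |1>, with |alpha|^2 + |beta|^2 = 1.
ZERO = [1 + 0j, 0 + 0j]

def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]

def probabilities(state):
    """Born rule: the chance of measuring each basis state is |amplitude|^2."""
    return [abs(amp) ** 2 for amp in state]

plus = hadamard(ZERO)
print(probabilities(plus))  # approximately [0.5, 0.5]

# Applying the Hadamard gate again makes the amplitudes interfere,
# cancelling the |1> component and returning the qubit to |0>.
back = hadamard(plus)
print(probabilities(back))  # approximately [1.0, 0.0]

# A classical description of n qubits needs 2**n amplitudes.
for n in (1, 10, 50):
    print(n, "qubits ->", 2 ** n, "amplitudes")
```

Measuring the superposed qubit still yields a single 0 or 1. Any speedup comes from algorithms that arrange for interference to amplify the right answers, not from reading out every state at once.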

Considerable progress has been made in overcoming challenges in quantum computing hardware, algorithms and error correction to make quantum computing fault tolerant. Quantum computers built on a variety of physical phenomena, such as superconductivity, nuclear magnetic resonance (NMR) and trapped ions, have scaled from a few qubits in the 2000s to thousands of qubits today. Quantum algorithms, which first drew wide attention in the mid-1990s, are being scaled and tuned for specific hardware. Researchers are also discovering ways to reduce the overhead of transforming physical qubits into the robust, long-lived logical qubits required to solve problems.

Quantum computing was first proposed to improve physics simulation in the 1980s. Many other applications will also benefit from special-purpose quantum simulators that might be easier to scale for certain problems. For example, quantum simulators have already been built with a much larger number of physical qubits than general-purpose quantum computers, but the gap is starting to narrow.

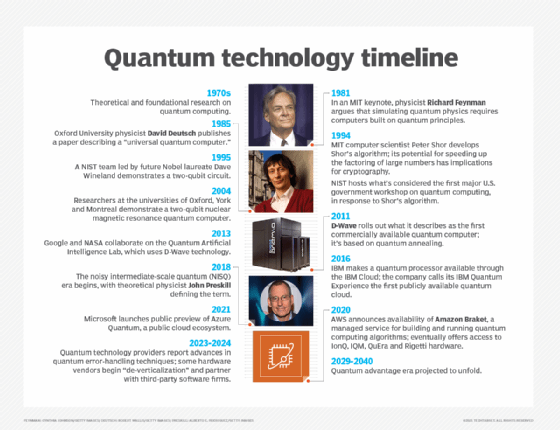

A timeline of quantum computing history

Here are the major milestones in the development of quantum computing.

Early history

In the early 1900s, scientists began developing a theory of quantum mechanics to explain new experimental observations that classical physics could not account for. In the 1920s and 1930s, researchers proposed new theories describing how particles and waves of matter and energy behave, including how particles could become entangled, prompting considerable debate and discovery.

In 1959, physicist Richard Feynman suggested the notion of quantum computing in a talk titled "There's Plenty of Room at the Bottom." Most of the talk was about expanding the limits of classical computing approaches using different physical principles. Feynman said, "When we get to the very, very small world -- say circuits of seven atoms -- we have a lot of new things that would happen that represent completely new opportunities for design. Atoms on a small scale behave like nothing on a large scale, for they satisfy the laws of quantum mechanics."

By the 1970s, research began into the possible relationship between quantum mechanics and information theory, the mathematical study of encoding and communicating information.

1980s

Around 1980, scientists began laying the theoretical foundation for how quantum computers might be constructed. In 1980, physicist Paul Benioff described a quantum mechanical model of a Turing machine, showing that a computer could, in principle, operate according to the laws of quantum mechanics.

In 1981, Feynman delivered a keynote at MIT titled "Simulating physics with computers," in which he argued that computers built on quantum principles would be required to efficiently simulate quantum physical processes. This sparked widespread excitement in the scientific community. In 1982, he published a paper with the same title elaborating on problems where quantum computers might excel.

In 1985, David Deutsch of Oxford University introduced the notion of a universal quantum computer, which helped conceptualize how quantum computers might evolve beyond simulating the world to support general-purpose computing. It included the concepts of quantum logic gates and qubits for quantum computing and established the theoretical framework for quantum algorithms and processors. Deutsch wrote, "Computing machines resembling the universal quantum computer could, in principle, be built and would have many remarkable properties not reproducible by any Turing machine."

1990s

In 1994, Peter Shor at Bell Labs introduced a quantum algorithm for factoring integers, which came to be known as Shor's algorithm. It showed how a sufficiently large quantum computer could factor integers, and compute discrete logarithms, superpolynomially faster than any known classical method, which would let it crack the public key (asymmetric) cryptography algorithms widely used for digital signatures, authentication and key exchange. The algorithm spurred research on implementing quantum computers and new post-quantum cryptography (PQC) algorithms.
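Shor's algorithm works by reducing factoring to finding the period r of a^x mod N; only that period-finding step needs a quantum computer. The sketch below, assuming an odd composite N that is not a prime power, keeps the classical reduction but replaces the quantum step with a brute-force loop, so it illustrates the structure of the algorithm rather than its speedup. The function names are hypothetical.

```python
import math
import random

def find_period(a, n):
    """Find the order r of a modulo n: the smallest r > 0 with a**r % n == 1.

    This brute-force loop is a classical stand-in. It takes time exponential
    in the bit length of n, and it is exactly the step that Shor's algorithm
    performs efficiently on a quantum computer via the quantum Fourier transform.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_factor(n, seed=0):
    """Return a nontrivial factor of an odd composite n that is not a prime power."""
    rng = random.Random(seed)
    while True:
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:
            return g  # lucky guess: a already shares a factor with n
        r = find_period(a, n)
        if r % 2 == 1:
            continue  # an odd period is unusable; try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # a**(r/2) = -1 mod n yields only trivial factors
        # y*y = 1 mod n with y != +/-1, so gcd(y - 1, n) is a proper factor.
        return math.gcd(y - 1, n)

print(shor_factor(15))
print(shor_factor(21))
```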

In 1995, Dave Wineland and Christopher Monroe at NIST demonstrated a simple two-qubit quantum logic gate using a trapped ion. Meanwhile, Shor and other researchers devised the first quantum error-correction codes, which offered a way to overcome the noise and stability issues inherent in qubits. These developments showed that the basic building blocks for scalable, fault-tolerant quantum computers were feasible.
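Quantum error correction builds on a simple idea: spread one logical bit of information across several physical carriers so that a few errors can be detected and undone. As a hedged illustration only, the sketch below simulates the classical analogue of the three-qubit bit-flip code (a three-bit repetition code decoded by majority vote) under independent flips with probability p. It is not Shor's nine-qubit code and ignores phase errors, but it shows why redundancy helps: the logical error rate 3p^2(1 - p) + p^3 falls below p whenever p < 0.5. The function names are hypothetical.

```python
import random

def logical_error_rate_exact(p):
    """Majority vote fails when 2 or 3 of the 3 copies flip: 3p^2(1-p) + p^3."""
    return 3 * p**2 * (1 - p) + p**3

def logical_error_rate_sampled(p, trials=100_000, seed=1):
    """Monte Carlo estimate: encode bit 0 as 000, flip each copy with
    probability p, decode by majority vote, and count wrong decodings."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # a majority of copies now read 1, so decoding fails
            failures += 1
    return failures / trials

for p in (0.01, 0.1, 0.3):
    print(p, logical_error_rate_exact(p), logical_error_rate_sampled(p))
```

Real quantum codes must detect errors without directly measuring the encoded state, which is why they rely on syndrome measurements using extra qubits, but the same payoff from redundancy motivates them.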

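In 1996, Lov Grover at Bell Labs introduced an algorithm that uses quantum principles to search unstructured data with quadratically fewer queries than a classical search: on the order of √N queries instead of N for N items. It also provides a quadratic speedup for brute-force key search against symmetric ciphers, effectively halving a key's security level in bits.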
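The quadratic speedup can be seen in a small classical simulation of Grover's algorithm. This sketch, with a hypothetical `grover_search` helper and a single marked item chosen for illustration, starts from a uniform superposition over N = 2**n indices and repeats an oracle phase flip followed by a reflection about the mean roughly (π/4)·√N times. A classical search would need about N/2 guesses on average.

```python
import math

def grover_search(n_qubits, marked):
    """Simulate Grover's algorithm on a classical state vector.

    Returns the number of oracle calls used and the probability of
    measuring the marked index afterward.
    """
    size = 2 ** n_qubits
    # Uniform superposition over all indices; real amplitudes suffice here.
    amps = [1 / math.sqrt(size)] * size
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        amps[marked] = -amps[marked]         # oracle: flip the marked item's sign
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]  # diffusion: reflect about the mean
    return iterations, amps[marked] ** 2

for n in (4, 8, 12):
    calls, prob = grover_search(n, marked=3)
    print(f"N={2**n}: {calls} oracle calls, success probability {prob:.3f}")
```

The same scaling is why a 128-bit symmetric key offers only about 64 bits of security against an idealized Grover attack.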

Meanwhile, David DiVincenzo at IBM proposed practical criteria for building quantum computers and supporting quantum communication between them to guide research efforts. His five conditions for quantum computers were the following:

- Scalable physical qubits.

- The ability to initialize qubits.

- Long quantum coherence duration.

- Universal quantum gates.

- A qubit measurement capability.

Connecting quantum computers together requires two further conditions for quantum communication, according to DiVincenzo's criteria: the ability to convert between stored and traveling qubits, and the ability to maintain qubit integrity during transit.

In 1998, IBM researchers ran Grover's search algorithm on two-qubit quantum hardware based on NMR. In 1999, NEC researchers demonstrated superconducting qubits built from Josephson junction circuits, which could be scaled using chip fabrication techniques and were later used for much larger quantum computers.

2000s

In 2000, Michael Nielsen and Isaac Chuang published the seminal textbook, Quantum Computation and Quantum Information, which established quantum information as a maturing discipline. Meanwhile, researchers in the U.S. and Germany made progress in scaling NMR quantum computers to seven qubits capable of running Shor's algorithm on simple problems.

In 2003, the field began to diversify as researchers demonstrated quantum computers built on trapped ions and photons. Research expanded to weigh the pros and cons of implementing quantum computers with these different approaches. Benchmarks based on the DiVincenzo criteria compared relative merits across physical platforms as labs competed to build better qubits and quantum circuits.

In 2007, D-Wave Systems demonstrated a 28-qubit quantum computer for solving optimization problems. While it was not a universal quantum computer, it illustrated how simpler approaches might be scaled more easily for certain kinds of problems.

2010s

In 2011, D-Wave released the 128-qubit D-Wave One, the first commercial quantum computer for use outside research settings. It sped up certain optimization problems and guided research on quantum annealing algorithms for simulation, but it did not show cost or performance advantages over classical computers.

In 2013, Google and NASA established the Quantum Artificial Intelligence Lab at NASA’s Ames Research Center, starting with the 512-qubit D-Wave Two, then the largest quantum computer in the world. The partnership explored how quantum computers might be applied to machine learning, scheduling and space exploration.

The cybersecurity industry began seriously considering the risks of cryptographically relevant quantum computers in 2015. Michele Mosca published a paper outlining the risks of having to protect confidential information for long periods, past the time quantum-enabled decryption becomes available, an argument that came to be known as Mosca's theorem (or Mosca's inequality). He initially predicted a 1-in-2 chance of breaking the popular Rivest-Shamir-Adleman (RSA) encryption scheme by 2031. In 2017, Microsoft researchers estimated that a quantum computer with 4,098 logical qubits could break an RSA-2048 encryption key.
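Mosca's argument is usually summarized as an inequality: if x + y > z, where x is how long data must stay confidential, y is how long migrating to quantum-safe cryptography will take and z is how long until a cryptographically relevant quantum computer (CRQC) exists, then data encrypted today is already at risk. The check below is a minimal sketch of that rule; the function name and the example figures are hypothetical, not Mosca's own estimates.

```python
def mosca_at_risk(shelf_life_years: float, migration_years: float,
                  years_to_crqc: float) -> bool:
    """Return True when x + y > z, i.e. the data outlives the safe window.

    x (shelf_life_years): how long the data must remain confidential
    y (migration_years): how long moving to quantum-safe crypto will take
    z (years_to_crqc): estimated time until a CRQC exists
    """
    return shelf_life_years + migration_years > years_to_crqc

# Hypothetical organization: records must stay secret for 10 years,
# migration will take 5 years, and a CRQC is assumed to be 12 years away.
print(mosca_at_risk(10, 5, 12))  # True, because 10 + 5 = 15 > 12
```

Because x and y are largely within an organization's control while z is uncertain, the inequality argues for starting migration early.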

In 2015, Australian researchers constructed the first two-qubit quantum logic gate in silicon, demonstrating that all the physical building blocks for a silicon quantum computer had been successfully built. It used existing semiconductor chip production processes that promised a viable alternative for scaling quantum computers more cost-effectively.

D-Wave scaled its quantum annealers to 1,000 qubits in 2015 and 2,000 qubits in 2017. IBM connected a 5-qubit computer to the cloud in 2016 to catalyze interest in experimenting with quantum technology. Other major cloud providers, including AWS and Microsoft, later launched similar quantum-as-a-service offerings.

In 2017, Intel and IBM extended superconducting approaches to about 50 qubits. The following year, John Preskill coined the term noisy intermediate-scale quantum (NISQ) to denote machines with fewer than 1,000 qubits that lack the capacity and fault tolerance needed to achieve a broad, practical "quantum advantage," a term often used interchangeably with quantum supremacy. The current state of quantum computing is often described as the NISQ era, and NISQ algorithms have been developed to make computation practical on these error-prone systems.

In 2019, Google claimed to have achieved quantum supremacy by using its 53-qubit superconducting Sycamore chip to complete a contrived random-circuit sampling task in about 200 seconds, a task it estimated would take a classical supercomputer 10,000 years. Growing excitement spurred multi-billion-dollar government research programs and coordination efforts in the U.S., Europe and China.

2020s

In 2020, Chinese researchers used a photon-based quantum computer called Jiuzhang to perform a boson sampling task that they estimated would take a classical supercomputer billions of years. The next year, they demonstrated a 66-qubit superconducting processor that solved a more complex variant of Google's 2019 quantum supremacy problem.

Vendors also made considerable progress scaling the number of physical qubits. IBM achieved 127 qubits in 2021, 433 qubits in 2022 and more than 1,000 qubits in late 2023. Many startups and spinoffs from universities and established vendors continued to make progress.

The focus began shifting from simply scaling physical qubits, which suffer from noise and limited coherence times, to building logical qubits that address these limitations. Such efforts included improving the quality of physical qubits, as well as developing more efficient error-correction codes and novel connection approaches. For example, researchers at QuEra and Harvard University demonstrated a computer with 48 logical qubits in 2023. Google revealed a way to extend the duration of error correction to support over 10 billion cycles without an error. In 2025, Microsoft unveiled its Majorana chip, which is based on topological qubits expected to be more resilient than other types of physical qubits.

Meanwhile, the cybersecurity community made progress in developing a roadmap for migrating to asymmetric encryption algorithms considered safe from quantum attacks. In August 2024, NIST published the first three PQC standards (FIPS 203, 204 and 205), and it later set out a timeline for migrating all government systems to the new algorithms.

Today, researchers and vendors continue to advance quantum key distribution networks. These networks use quantum principles to detect attempts to eavesdrop on symmetric encryption keys. They differ from the kinds of quantum networks required to connect quantum computers.

Where is quantum computing development headed next?

Considerable progress has been made in developing quantum computers and algorithms in the last several decades. Despite this progress, there is widespread debate on when and how quantum computing will deliver clear advantages over classical computing approaches.

In the short term, the earliest advantages will likely come from special-purpose quantum computers designed for problems in optimization, search and simulation. These systems could prove useful in areas like supply chain optimization, AI algorithm development, materials R&D and physics research.

Expanding into other quantum computing uses will require improvements in numerous areas of quantum technology. This includes improving the quality and duration of physical qubits, reducing the overhead of error-correction algorithms, and developing quantum memory and networks for storing and sharing qubits.

In the long run, emphasis will shift away from physical qubits toward logical qubits and the number of gate operations that can run on them. For example, IBM's roadmap took it from 53 physical qubits in 2019 to 1,092 physical qubits in 2025, able to run circuits of about 5,000 gates. The roadmap targets a modest expansion to 2,000 qubits by 2033, but these will be error-corrected logical qubits able to run circuits of a billion gates.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.