9 quantum computing challenges IT leaders should know

While the theory of quantum mechanics is complex, the technology might offer practical uses for organizations. Learn about the challenges and opportunities of quantum computing.

Quantum computing might seem like a technology that belongs in the distant future, but there are potential real-world applications today. Still, to take advantage of those, organizations must address the challenges quantum computing poses.

Many organizations are investigating practical use cases of quantum computing, and for good reason. Quantum computing has the potential to solve certain computational problems significantly faster than existing classical computing approaches. Businesses might want to use quantum computing to optimize investments, improve cybersecurity or discover new paths for value creation.

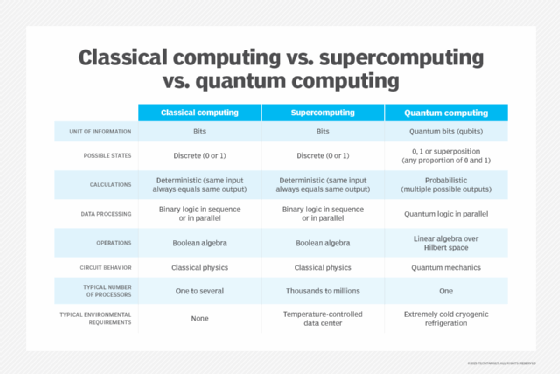

Whereas classical computers encode data into bits, implemented with transistors, that each hold a definite value of 0 or 1 at any moment, quantum computers encode problems into basic units of information called quantum bits (qubits), which can exist in a superposition of multiple states simultaneously. This property makes quantum computers potentially well suited to certain complex optimization problems and to cracking some widely used encryption schemes.
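To make "multiple states simultaneously" a little more concrete, the following minimal Python sketch models one qubit as a pair of complex amplitudes and applies a Hadamard gate, the standard textbook gate for creating an equal superposition. It is a toy model on a classical computer, not how any vendor's hardware or SDK works, and the function names are hypothetical. Note that superposition alone does not produce a speedup; useful quantum algorithms also rely on interference, which the last step hints at by returning the qubit to a definite state.

```python
import math

# A single qubit is modeled as a pair of complex amplitudes (alpha, beta)
# for the basis states |0> and |1>, with |alpha|^2 + |beta|^2 = 1.
Qubit = tuple[complex, complex]

def hadamard(state: Qubit) -> Qubit:
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def measurement_probabilities(state: Qubit) -> tuple[float, float]:
    """Born rule: the chance of reading 0 or 1 is the squared magnitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

zero: Qubit = (1 + 0j, 0j)           # behaves like a classical 0
plus = hadamard(zero)                # (|0> + |1>) / sqrt(2)

print(measurement_probabilities(zero))            # always reads 0
print(measurement_probabilities(plus))            # roughly 50/50
print(measurement_probabilities(hadamard(plus)))  # interference: back to 0
```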

Despite some excitement that quantum computing can solve obscure problems faster than classical computers, enterprises must address the existing challenges of quantum computing before they can broadly adopt practical tools and systems.

"Quantum computing has the potential to be seismically disruptive, and the businesses that are experimenting now stand to amplify business value and innovation," said Carl Dukatz, tech innovation strategy executive at Accenture, an IT services and consulting firm headquartered in Dublin. "Quantum could change the world, but there are numerous factors to consider in getting this right."

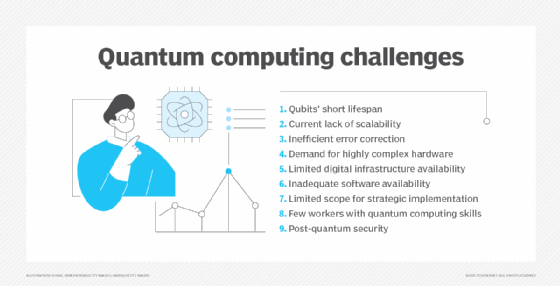

Here are nine quantum computing challenges business and IT leaders should understand.

1. Qubits' short lifespan

One major challenge to widespread quantum computing adoption is the qubits' fragile state.

Quantum computers encode information into qubits using ions, light or magnetic fields. Existing technologies can only keep the information in a quantum state for brief periods, limiting the duration of calculations.

The slightest interference from outside the computer, such as radio waves, mechanical vibration or magnetic fields, can cause the quantum state to break down, further reducing how long calculations can run.

Decoherence is the process that occurs when qubits become entangled with their environment, causing them to lose the delicate quantum properties that quantum computing relies on. This drawback currently limits quantum computers to relatively small computational problems.
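One way to see why decoherence caps the size of a calculation is a rough time budget: divide the coherence time by the time each gate takes. The Python sketch below does that arithmetic and uses a simple exponential-decay model of coherence, a common simplification. The 100-microsecond coherence time and 50-nanosecond gate time are illustrative assumptions, not figures for any specific machine.

```python
import math

def gate_budget(coherence_time_ns: int, gate_time_ns: int) -> int:
    """Rough count of sequential gates that run before one coherence time elapses."""
    return coherence_time_ns // gate_time_ns

def remaining_coherence(elapsed_ns: float, coherence_time_ns: float) -> float:
    """Simple exponential-decay model: fraction of coherence left after elapsed_ns."""
    return math.exp(-elapsed_ns / coherence_time_ns)

# Illustrative assumptions only.
T2_NS = 100_000   # 100 microseconds of coherence
GATE_NS = 50      # 50 nanoseconds per gate

budget = gate_budget(T2_NS, GATE_NS)
print(budget)                                         # 2000 gates
print(remaining_coherence(budget * GATE_NS, T2_NS))  # about 0.37 left by then
```

In practice, a useful calculation has to finish well before the budget runs out, because by the end of one coherence time only about a third of the coherence remains under this model.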

Researchers are exploring different designs that might be more resilient to noise or that can last longer. For example, light-based approaches are less susceptible to electrical noise but have yet to scale as quickly.

"One of the most striking developments in quantum computing hardware recently is the transition from pure academic research to a more commercially driven, competitive race among major tech companies and specialized startups," said Anders Indset, philosopher, best-selling author and chairman of venture capital firm Njordis Group. Industry giants Amazon, Google and Microsoft have each announced ambitious projects, such as Google's Willow chip and Microsoft's work on topological qubits with its Majorana 1 chip.

"We see an explosion of new hardware models and architectures: superconducting qubits [favored by IBM and Google], trapped ions [IonQ], photonic qubits [PsiQuantum], and more advanced or specialized approaches," Indset said.

2. Current lack of scalability

Quantum computers need to be scalable to solve real-world challenges -- and researchers have a way to go.

Quantum computers require specialized techniques and materials, which face challenges around fabrication precision, materials quality and minimizing defects.

Scalability can also require integrating multiple qubits, quantum gates and other components. Each component comes with different error rates, noise characteristics and operational requirements.

Qubits also need to interact with each other. However, maintaining connectivity and enabling interactions between qubits are challenging. Special quantum gates operating in sequence are required to manipulate qubits, and it's critical to control errors and decoherence as these processes are chained together.
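Why chaining gates makes error control so critical can be shown with simple arithmetic: if each gate succeeds independently with probability f, a circuit of n gates runs error-free with probability f to the power n. The Python sketch below assumes that independent-error model, which is a simplification, and the fidelity values are illustrative rather than measurements from any vendor.

```python
import math

def circuit_success(gate_fidelity: float, num_gates: int) -> float:
    """Probability that no gate fails, assuming independent errors per gate."""
    return gate_fidelity ** num_gates

def max_gates(gate_fidelity: float, target: float) -> int:
    """Largest gate count whose success probability stays at or above target."""
    # Solve fidelity**n >= target for n: n <= log(target) / log(fidelity).
    return math.floor(math.log(target) / math.log(gate_fidelity))

# Illustrative per-gate fidelities; 0.99 allows only 68 gates above 50% success.
for fidelity in (0.99, 0.999, 0.9999):
    print(fidelity, max_gates(fidelity, 0.5))
```

Each additional "9" of gate fidelity buys roughly ten times as many gates, which is one reason vendors report fidelity improvements so prominently.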

"Additional qubit types, like neutral atom and topological, are showing more viability than before as compared to superconducting, ion trap and photonic, but it's still unclear which modality will be the first to provide advantages for computation over classical," Dukatz said.

Despite numerous recent breakthroughs, more progress will be required to turn these into practical commercial solutions. "Despite significant milestones being reached in scalability, fault tolerance and error reduction with [Microsoft's] Majorana and Google's Willow chips, there's still a lot of work to be done to get to broad adoption readiness," said Zack Moore, senior product manager of cybersecurity at InterVision, an IT services and consulting firm.

Quantum processing is susceptible to minor variances in operational conditions, making it challenging to maintain stable, consistent systems. Error checking and correction in current top-end chips take significant resources, and chip development costs are still market-prohibitive at scale.

"Once that [major] hurdle is overcome, there's still the task of integrating quantum computing capabilities into existing systems and/or developing applications, operating systems and security that work at quantum speed," Moore said.

3. Inefficient error correction

Qubits are much more prone to computational faults than the transistor-based bits of classical computers. A bit in a CPU stays stable as long as the power stays on, and data written to magnetic disks or solid-state drives can persist far longer.

However, current qubit technology tends to lose the coherence required for calculations relatively quickly, and there's no easy way to store qubit states. "To push the current hardware further, there has been [an] additional focus on error mitigation using techniques like noise reduction, post-processing and optimization, in conjunction with error correction, which focuses on redundancy across multiple qubits," Dukatz said.

Researchers are developing technology to organize multiple groups of these physical qubits into what's known as logical qubits so they operate more reliably. This is akin to the way RAID in classical computers connects multiple physical drives to create one larger and more reliable logical drive. However, qubits are more complicated than classical bits stored in RAID.

There are ways to approach error correction in quantum computers, said James Sanders, principal analyst for cloud and infrastructure at CCS Insight, a London-based industry analyst firm.

One such approach that could minimize this drawback is quantum error correction. In simple terms, the process joins multiple physical qubits into one longer-lived logical qubit.
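The core idea of redundancy can be illustrated with the simplest possible scheme, a three-copy repetition code decoded by majority vote. The Python sketch below is a classical analogy only: real quantum error correction cannot copy qubit states and must detect errors through indirect syndrome measurements. It does show why redundancy pays off when the physical error rate is low. The 1% physical error rate is an assumption.

```python
import random

def logical_error_rate(p: float) -> float:
    """Exact failure rate of a 3-copy majority vote when each copy flips with probability p."""
    # The vote gives the wrong answer only if 2 or 3 of the 3 copies flip.
    return 3 * p**2 * (1 - p) + p**3

def simulate(p: float, trials: int, seed: int = 1) -> float:
    """Monte Carlo estimate of the same failure rate, for comparison."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:           # majority of copies corrupted -> wrong answer
            failures += 1
    return failures / trials

p = 0.01  # assumed physical error rate
print(logical_error_rate(p))    # about 0.0003, far below p
print(simulate(p, 200_000))     # should land close to the exact value
```

The benefit only appears when the physical error rate is low enough; for this toy code, at p = 0.5 the vote is no better than a single copy.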

As with RAID, logical qubits rely on redundancy: each logical qubit is represented by multiple physical qubits. In a mirrored RAID 1 array, each logical bit is stored on two physical drives, and schemes that prioritize reliability, such as three-way mirroring, store three or more copies of each bit.

Current quantum error correction schemes require far more overhead.

The most optimistic approaches suggest it might be possible to transform 100 physical qubits into one logical qubit, Sanders said. Approaches that require 10,000 physical qubits to create one logical qubit are more realistic.
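The gap between the 100:1 and 10,000:1 ratios Sanders describes is easiest to appreciate with a quick calculation. The Python sketch below computes how many physical qubits a given number of logical qubits would need, and how many logical qubits a given machine could offer. The 1,000-logical-qubit target and the 1,000-physical-qubit machine are hypothetical values chosen for illustration.

```python
def physical_qubits_needed(logical_qubits: int, overhead: int) -> int:
    """Physical qubits required when each logical qubit costs `overhead` physical ones."""
    return logical_qubits * overhead

def logical_qubits_available(physical_qubits: int, overhead: int) -> int:
    """Whole logical qubits a machine can offer at a given overhead ratio."""
    return physical_qubits // overhead

for overhead in (100, 10_000):
    need = physical_qubits_needed(1_000, overhead)        # hypothetical target
    have = logical_qubits_available(1_000, overhead)      # hypothetical machine
    print(f"{overhead:,}:1 -> {need:,} physical needed; {have} logical from 1,000 physical")
```

At the more realistic ratio, a hypothetical 1,000-physical-qubit machine would not yield even one logical qubit.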

4. Demand for highly complex hardware

Quantum computing requires highly specialized computer hardware to build qubits successfully. The challenge is that there aren't enough physical resources to quickly manufacture high-quality, quantum-ready components at a reasonable cost for enterprise use.

The current crop of quantum computers also requires ultracold temperatures, often close to absolute zero, to maintain their quantum state. The energy and specialized cooling equipment this involves could complicate an enterprise's long-term environmental, social and governance (ESG) strategy.

However, some academic labs are beginning to experiment with different types of computer hardware to minimize these challenges.

Early commercially available quantum systems are likely to rely on superconducting qubits. Superconductivity is the property of certain materials to conduct an electric current with zero resistance when cooled below a critical temperature.

Another possibility is a trapped-ion quantum computer, in which the qubits are ions held in place by electric fields and manipulated with lasers. Trapped ions typically have longer coherence times than superconducting qubits, which means longer-lived qubits.

Further examples include Intel's experimentation with building qubits on top of the company's existing investment in semiconductor technology. Some startups are exploring other approaches that build qubits from cold atoms, electrons on helium and photons.

5. Limited digital infrastructure availability

Many organizations might balk at the initial investment required for a single quantum computer. Company leaders might decide that the better move is to find a service provider that grants access to a quantum computer's capabilities.

Although vendors are beginning to offer remote access to the latest quantum computing hardware, widespread availability remains limited.

Cloud services, such as Amazon Braket and Azure Quantum, provide early access to quantum computers from multiple vendors. These cloud services also offer interfaces to store algorithms and results calculated by a quantum computer in the same environment enterprises might already use for high-performance computing in the cloud.

Cloud vendors are also starting to provide access to quantum simulators, which emulate quantum algorithms on classical computers so developers can build and test them, although at a much lower speed.
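A big reason simulators slow down is memory: a full state-vector simulation stores one complex amplitude for every possible combination of qubit values, so each added qubit doubles the work. The Python sketch below is a toy state-vector simulator that builds a two-qubit entangled (Bell) state. It is far less optimized than the simulators cloud vendors offer, the function names are hypothetical, and the 16-bytes-per-amplitude figure assumes double-precision complex numbers.

```python
import math

s = 1 / math.sqrt(2)
Gate = tuple[tuple[complex, complex], tuple[complex, complex]]
H: Gate = ((s, s), (s, -s))  # Hadamard gate

def apply_1q(state: list[complex], gate: Gate, target: int) -> list[complex]:
    """Apply a 2x2 gate to one qubit of a state vector (qubit 0 = lowest index bit)."""
    new = state[:]
    bit = 1 << target
    for i in range(len(state)):
        if i & bit == 0:  # visit each (target=0, target=1) amplitude pair once
            a0, a1 = state[i], state[i | bit]
            new[i] = gate[0][0] * a0 + gate[0][1] * a1
            new[i | bit] = gate[1][0] * a0 + gate[1][1] * a1
    return new

def apply_cnot(state: list[complex], control: int, target: int) -> list[complex]:
    """Flip the target qubit in every basis state where the control qubit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i] = state[i ^ (1 << target)]
    return new

def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector: 2**n amplitudes."""
    return (2 ** num_qubits) * bytes_per_amplitude

n = 2
state = [1 + 0j] + [0j] * (2 ** n - 1)          # start in |00>
state = apply_1q(state, H, target=0)
state = apply_cnot(state, control=0, target=1)
print([round(abs(a) ** 2, 3) for a in state])   # [0.5, 0.0, 0.0, 0.5]
print(state_vector_bytes(30) // 2**30, "GiB for 30 qubits")
```

Under the same assumption, 50 qubits would need 16 PiB, which is why full simulation stops being practical long before real hardware does.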

Enterprises should think about experimenting with different types of quantum computers using these cloud services to find the best fit for their applications and goals.

6. Inadequate software availability

You don't need to be a quantum physicist to write programs for quantum computers. However, there's a considerable lack of software for quantum computing systems.

Cross-compatible software is especially scarce: almost no software works well across different quantum computers. Quantum algorithms might need fine-tuning to run effectively on other vendors' machines, whether those use a different or a similar type of quantum hardware.

Industry groups, such as the QIR Alliance, are developing intermediate representations for quantum software. Such representations would make it easier to port quantum software between systems.
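The idea behind a shared intermediate representation is that a program is written once in a hardware-neutral form, and each vendor's toolchain then rewrites it into the gates its hardware supports natively. The Python sketch below uses a hypothetical toy format, a list of gate names and qubit indices; it is not QIR, which is based on LLVM IR. It lowers CNOT gates for a hypothetical device whose native two-qubit gate is CZ, using the standard identity CNOT = (I x H) CZ (I x H), and checks that identity numerically.

```python
import math

# Toy hardware-neutral circuit: a list of (gate name, qubit indices). Hypothetical format.
Circuit = list[tuple[str, tuple[int, ...]]]

def lower_for_cz_backend(circuit: Circuit) -> Circuit:
    """Rewrite CNOTs for a hypothetical device whose native two-qubit gate is CZ."""
    lowered: Circuit = []
    for name, qubits in circuit:
        if name == "cnot":
            control, target = qubits
            # CNOT equals a Hadamard on the target, then CZ, then another Hadamard.
            lowered += [("h", (target,)), ("cz", (control, target)), ("h", (target,))]
        else:
            lowered.append((name, qubits))
    return lowered

bell: Circuit = [("h", (0,)), ("cnot", (0, 1))]
print(lower_for_cz_backend(bell))
# [('h', (0,)), ('h', (1,)), ('cz', (0, 1)), ('h', (1,))]

# Numerical check of the identity (basis index = 2 * control + target).
def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

s = 1 / math.sqrt(2)
I_H = [[s, s, 0, 0], [s, -s, 0, 0], [0, 0, s, s], [0, 0, s, -s]]  # H on target qubit
CZ = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, -1]]
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]
product = matmul(matmul(I_H, CZ), I_H)
print(all(abs(product[i][j] - CNOT[i][j]) < 1e-12 for i in range(4) for j in range(4)))
```

The program author never has to know which native gates a given machine uses; only the lowering step changes from vendor to vendor.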

7. Limited scope for strategic implementation

Organizations must have a strategic roadmap before adopting quantum computing for the enterprise.

"Quantum computing has such vast potential that it can be difficult to know where to start," Dukatz said.

A haphazard approach to quantum computing produces innovative sparks without lasting value. Examples include researching only a single use case or deploying quantum computing in isolated pockets across the enterprise.

A better approach would be to analyze how quantum computing could broadly affect the enterprise across industries, functions, R&D, IT and security practices. Such an approach could help guide discussions across business and IT teams to lay the foundations for integrating existing enterprise data and services with new quantum cloud services.

8. Few workers with quantum computing skills

Finding potential employees with specific skills in quantum computing remains a key issue. Early quantum research was conducted in research labs and academic institutions. However, quantum research skills had limited use outside of those specific settings.

Indset observed that new quantum-inspired algorithms can demonstrate value today without needing full-blown quantum hardware. These can run on classical hardware with new quantum-inspired heuristics to speed up optimization and sampling problems compared to traditional algorithms.

Also, annealing techniques are starting to deliver practical results, both quantum annealing on specialized processors, such as D-Wave's quantum annealers, and its classical counterpart, simulated annealing. "Simulated annealing is widely available today and is used in a number of different areas related to AI and [machine learning], such as for tuning neural networks," Moore said.
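Simulated annealing is simple enough to sketch in a few lines. The Python example below minimizes a tiny, hypothetical QUBO (quadratic unconstrained binary optimization) problem, the problem form quantum annealers such as D-Wave's accept. It flips one bit at a time, always accepts improvements and sometimes accepts worse moves, with that chance shrinking as a "temperature" cools. The cooling schedule and step count are assumptions, and this is not D-Wave's software.

```python
import itertools
import math
import random

# Hypothetical 4-variable QUBO: minimize sum of Q[i, j] * x[i] * x[j] over bits x.
# Diagonal terms reward setting a bit; off-diagonal terms penalize neighboring pairs.
Q = {(0, 0): -1.0, (1, 1): -1.0, (2, 2): -1.0, (3, 3): -1.0,
     (0, 1): 2.0, (1, 2): 2.0, (2, 3): 2.0, (0, 3): 2.0}

def energy(x: list[int]) -> float:
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def simulated_annealing(n: int, steps: int = 5_000, t_start: float = 2.0,
                        t_end: float = 0.01, seed: int = 0) -> tuple[list[int], float]:
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    e = energy(x)
    best_x, best_e = x[:], e
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1                         # propose flipping one bit
        new_e = energy(x)
        delta = new_e - e
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            e = new_e                     # accept: always if better, sometimes if worse
            if e < best_e:
                best_x, best_e = x[:], e
        else:
            x[i] ^= 1                     # reject: undo the flip
    return best_x, best_e

best_x, best_e = simulated_annealing(4)
brute = min(energy(list(bits)) for bits in itertools.product((0, 1), repeat=4))
print(best_x, best_e, brute)
```

On a four-variable problem, brute force is trivial; annealing becomes interesting when the search space is far too large to enumerate.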

Organizations should invest in quantum computing literacy, pilot programs with quantum technology providers, approaches to emerging quantum tech, and quantum-compatible infrastructure and talent. "The key is to view these approaches not as optional but as central components of a future-proofed IT strategy," he said.

Enterprises should also explore how AI can complement these efforts. "AI is everywhere, and that's true for quantum computing as well," Dukatz said. AI is driving improvements in quantum programming, system design and algorithm development.

Speeding up AI through quantum computation -- typically labeled as quantum machine learning -- was under consideration before generative AI took off. "The synergies between quantum and AI are top of mind for most [observers] today, especially with GPU-accelerated quantum toolkits," Dukatz said.

9. Post-quantum security

Quantum computing systems pose potential security threats to existing data protection systems. For example, a sufficiently large, fault-tolerant quantum computer could break the public-key encryption schemes, such as RSA and elliptic curve cryptography, that underpin most of the security protocols protecting modern business systems and communications.

To combat this, researchers are developing post-quantum cryptography, which uses classical algorithms designed to resist attacks from quantum computers, as well as quantum cryptography techniques that use quantum effects to protect data. As enterprise use of quantum technology matures, these methods can help secure sensitive data from potential threats and risks.
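The threat to RSA comes from Shor's algorithm, which reduces factoring a number to finding the period of modular exponentiation. The Python sketch below shows the classical bookkeeping around that step on the toy number 15. Here the period is found by brute force, which takes exponential time for real RSA moduli of 2,048 bits or more; that period-finding step is the part a large quantum computer could perform efficiently. The function names are hypothetical.

```python
import math
from typing import Optional

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1. Brute force here; this is the step
    Shor's algorithm would perform efficiently on a quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a: int, n: int) -> Optional[tuple[int, int]]:
    """Classical post-processing used in Shor's algorithm."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g                  # lucky guess already shares a factor
    r = find_period(a, n)
    half = pow(a, r // 2, n)
    if r % 2 == 1 or half == n - 1:
        return None                       # this choice of a fails; try another
    return math.gcd(half - 1, n), math.gcd(half + 1, n)

print(find_period(7, 15), factor_from_period(7, 15))   # 4 (3, 5)
```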

NIST released the first set of post-quantum cryptography standards in August 2024, and they're being deployed to protect systems from "steal now, decrypt later" attacks, in which adversaries collect encrypted data today in order to decrypt it once quantum computers are capable enough. In March 2025, NIST selected an additional algorithm, HQC, for standardization, meaning crypto-agility -- the ability to switch between new cryptographic algorithms easily -- is becoming a higher priority for businesses.
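In code, crypto-agility usually means two things: the algorithm choice lives in one configurable place rather than being hardcoded throughout an application, and every protected artifact records which algorithm produced it, so old and new data can coexist during a migration. The Python sketch below illustrates that pattern with message authentication codes from the standard library. HMAC-SHA256 and HMAC-SHA3-256 are stand-ins, not the NIST post-quantum standards, and the registry names and policy setting are hypothetical.

```python
import hashlib
import hmac

# Registry of interchangeable algorithms. Stand-ins only, not post-quantum standards.
ALGORITHMS = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha3-256": lambda key, msg: hmac.new(key, msg, hashlib.sha3_256).digest(),
}

CURRENT_ALGORITHM = "hmac-sha3-256"  # hypothetical policy setting; change here to migrate

def protect(key: bytes, msg: bytes) -> tuple[str, bytes]:
    """Tag the output with the algorithm name so verifiers know how to check it later."""
    return CURRENT_ALGORITHM, ALGORITHMS[CURRENT_ALGORITHM](key, msg)

def verify(key: bytes, msg: bytes, algorithm: str, tag: bytes) -> bool:
    """Use the algorithm recorded with the tag, so old and new tags both verify."""
    if algorithm not in ALGORITHMS:
        return False
    return hmac.compare_digest(ALGORITHMS[algorithm](key, msg), tag)

key, msg = b"demo-key", b"quarterly report"
legacy = ("hmac-sha256", ALGORITHMS["hmac-sha256"](key, msg))  # created before migration
current = protect(key, msg)
print(verify(key, msg, *legacy), verify(key, msg, *current))    # True True
```

With this structure, adopting a new algorithm means adding a registry entry and changing one setting, while data protected under the old algorithm remains verifiable until it is re-protected.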

"Non-quantum cryptography will be deprecated in 2030, news which has motivated a great deal of activity [from] executives and subsequently IT and software development teams," Dukatz said.

Ultimately, quantum security readiness shouldn't be an isolated upgrade, Indset said. It should form part of a broader, modernized cryptographic strategy that blends classical and quantum-safe methods. That way, organizations can protect sensitive data now and as quantum computing technology continues to evolve.

Editor's note: This article was updated in April 2025 to reflect quantum technology advancements and improve the reader experience.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.