
Establishing AI governance in a business

It's hard to ethically manage data for AI models, but AI governance, as well as a strong ethics framework, can help enterprises effectively manage data and models.

Getting data to power AI models is easy. Using that data responsibly is a lot harder. That's why enterprises need to implement a framework for AI governance.

"With great data comes great responsibility," said Monark Vyas, managing director of applied intelligence strategy at Accenture, alluding to the proverb made popular by comic book hero Spider-Man.

Making AI governance policies a priority

Speaking during a panel at the AI Summit Silicon Valley conference, Vyas noted just how easy it is for companies to mishandle data.

"You're now sitting on so much data, that it's so easy to start using it, misusing it and abusing it," he said.

A company could create ethical and legal problems by, for example, collecting personal data outside the scope of a user agreement. It could also misuse that data in model development by using it to train a machine learning model. Building a model on improperly collected data can expose a company to serious legal problems and may force it to scrap the entire model if it can't separate the data from the model.

A strong AI governance structure can help prevent data misuse.

"Governance is the process of establishing a decision-making framework," said Salim Teja, a partner at Toronto-based venture capital firm Radical Ventures. AI ethics and governance, he noted, guide his investing decisions.

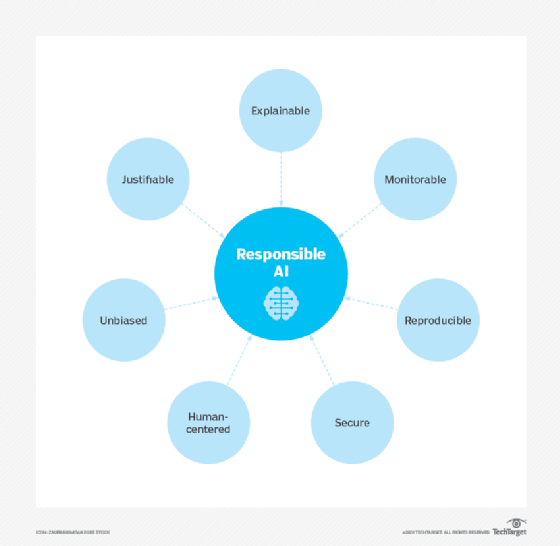

Themes related to AI governance include explainability, transparency and ethical alignment, Teja continued. An organization's AI ethics should reflect the business ethics laid out in its business plan.

Enterprise leaders need to make AI governance and accountability a priority, he added.

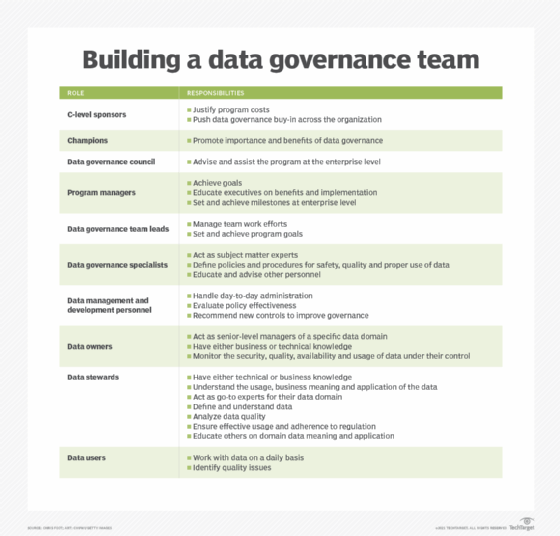

Governance touches on all aspects of a business, including the work of the board, which considers business risks, as well as the role of the CFO, who considers financial risks, and the CTO, who considers technological risks.

"Companies that do this well really try to map out who should be accountable for which decisions, and what then is the data that we need to use to move decisions forward," Teja said.

It's important, however, that enterprises quantify their AI governance results.

"Unless we're measuring something, we don't necessarily know whether a governance approach is working or not," Teja said.

Enterprises are still in the early stages of determining exactly what to measure, he added, although he has seen some measure the value and cost of data, as well as AI model bias, security and auditability.

"They're trying to develop a framework to have this discussion and make sure they are including all of the critical decision-makers in the organization to have a wholesome discussion," he said.

Limited AI governance: Potential risks

One of the biggest challenges enterprises face, however, is looking at a problem through either too much of a technical lens or too much of a business lens. Organizations, Teja continued, should consider exactly what outcomes they are trying to measure.

A strong system for AI governance and ethics also plays an important role in hiring. Job candidates, Teja said, increasingly look at prospective employers' morals, values and ethics, as well as the purpose of their products.

"If startup companies are not focused on a place where they are creating positive impact, it makes it really, really tough to find the best talent," he said.

Still, not many companies are completely open about their products, largely for competitive reasons.

Merve Hickok, founder of aiethicist.org and the consulting firm Lighthouse Career Consulting, said she has seen some companies hold themselves responsible for the technology they put into the world, namely by opening their tech to third-party reviews. This, she noted, enables them to better understand their risks and see their blind spots.

By embedding ethics into their AI products and establishing strong AI governance practices, she added, companies "can create a value and [they] can create a difference amongst [their] competitors in the marketplace."

The conference was held virtually this year on Sept. 30 - Oct. 1.

James Brusseau, a philosophy professor at Pace University and director of the AI Ethics site at Pace, moderated the panel "The Million Dollar Question -- How Do You Build an Ethical, AI-Augmented Organization That People Want to Work For?" with Hickok, Teja and Vyas.