Getty Images/iStockphoto

Intel launches Xeon 6 for AI data centers

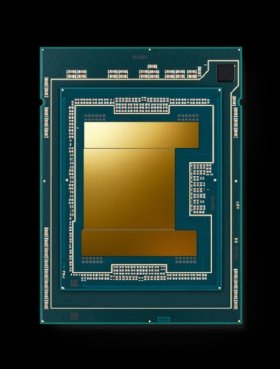

This month, Intel will launch the first of its Xeon 6 data center chips, a line that offers two microarchitectures: one for performance and the other for power efficiency.

Intel has responded to the demands of AI in cloud providers and enterprise data centers with a new generation of chips that offer options for performance and power efficiency.

The Xeon 6 line, the first of which launches this month, comes in two separate microarchitectures: the Performance Core (P-Core) for AI applications and high-performance computing, and the Efficient Core (E-Core) for power efficiency when running less-demanding workloads. Intel will make the chips available through cloud providers and data center server providers.

Intel's first release, the Xeon 6700E, is a system-on-chip (SoC) that comprises a CPU and GPU revamped for power efficiency, the company said this week at the Computex computer expo in Taiwan. In the third quarter, Intel plans to launch the Xeon 6900P, which comprises a CPU, GPU and neural processing unit (NPU), a built-in AI accelerator.

"At IDC, we expect vendors' lines of CPUs, GPUs, AI-specific chips, and other kinds of accelerators to proliferate as end-users continue to search for the right combination of processing and acceleration for their specific workloads," IDC analyst Shane Rau said.

In the first quarter of 2025, Intel plans to expand the two product lines to give enterprises more options for matching a chip's performance and price to the workload. For less-demanding AI applications, Intel will have the Xeon 6700P, 6500P and 6300P. At the same time, Intel plans to launch the 6900E for workloads that need more processing power than the 6700E provides.

Intel's Xeon 6 P-Core chips will compete with AMD EPYC processors and the custom-designed chips offered by each of the major cloud providers: AWS, Google and Microsoft. AMD and Intel offer x86 processors, while the cloud providers offer Arm-based chips.

"It's the Wild West show out there right now," said Jack Gold, principal analyst for J.Gold Associates. "Everyone is trying to position optimally for a particular workload."

For enterprises, the competition means more choice and better pricing on chips, Gold said. However, how well Intel measures up to the competition will depend on the results of independent testing following the products' release.

The market for AI chips used in data center servers or offered by cloud providers will total $21 billion this year, increasing to $33 billion by 2028, according to Gartner.

AMD, Intel and Nvidia

In general, analysts expect companies to use Intel and AMD products for inference, the process of running a trained generative AI model on a specific task, such as using natural language to search corporate data.

Nvidia, which makes the industry's most powerful AI chips, dominates today's market for training the most advanced large language models (LLMs), including OpenAI's GPT-4 and Google's PaLM 2.

In March, Nvidia launched the Blackwell B200, its next generation of AI GPUs for highly advanced LLMs. The Blackwell architecture succeeds Nvidia's Hopper series, which included the H100 and H200 GPUs.

In the third quarter, Intel plans to introduce its Gaudi 3 line of AI accelerators, which the company claims outperforms Nvidia's older H100 chip. Gaudi 3 will also compete with the new AMD Instinct MI325X accelerator, which is due in the fourth quarter of 2024.

Chips and software development tools for AI applications are in the early stages of development, making it difficult to pick the best technology for a specific use case.

"It gets super complicated to a point where sometimes I'm like, wait, how does this fit?" said Bob O'Donnell, principal analyst of TECHnalysis Research.

The AI frenzy in the tech industry started less than 24 months ago with OpenAI's launch of ChatGPT in November 2022.

Today's AI chipmakers include cloud providers; incumbents AMD, Intel and Nvidia; and startups such as Cerebras Systems, Mythic and SambaNova Systems.

In other Intel news, the chipmaker released technical details of its upcoming mobile AI processor, codenamed Lunar Lake. The x86 SoC includes a compute tile of E-Cores, P-Cores, an Xe2 GPU rated at 60 tera operations per second (TOPS) and an NPU rated at 48 TOPS.

Intel plans to release Lunar Lake this year. It will compete with AMD Ryzen processors and the Qualcomm Snapdragon X Plus.

Antone Gonsalves is an editor at large for TechTarget Editorial, reporting on industry trends critical to enterprise tech buyers. He has worked in tech journalism for 25 years and is based in San Francisco.