A history of wireless for business and a look forward

This history of enterprise wireless traces WLAN development inside the enterprise and cellular data services outside its walls, the two paths that together made wireless-first a possibility.

Anyone who joined an enterprise IT team in the 21st century has always worked in a world where wireless is an option -- and more recently, a necessity. But enterprise wireless hasn't always been broadly available, affordable and reliable, and the world of wireless-first and wireless-only enterprises has been 50 years in the making.

Here's a look at how we got here.

The birth of wireless LANs

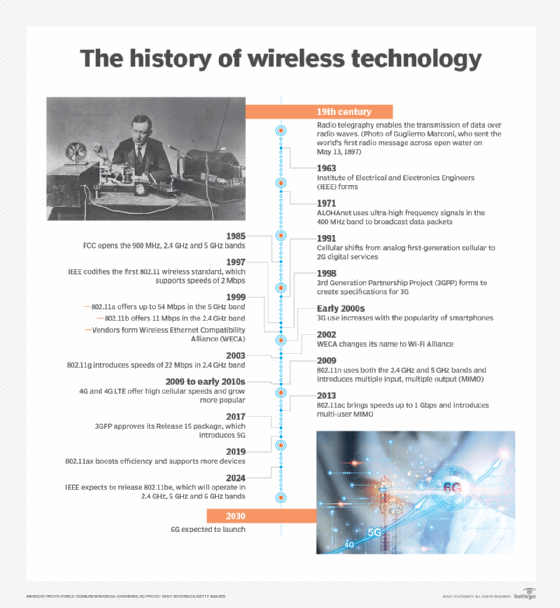

Technologically, the idea of transmitting data (not voice) over radio waves goes back to the 19th century and the birth of radio telegraphy, which meant sending Morse code over the air. But the origins of modern wireless computer networking only go back to the creation of ALOHAnet at the University of Hawaii in 1971.

ALOHAnet used ultra-high frequency signals in the 400 MHz band to broadcast packetized data among the Hawaiian Islands, which technically made it a WAN technology. ALOHAnet was important to the creation of Ethernet, which began in 1973 and was eventually codified in the IEEE 802.3 standard. ALOHAnet was also an important ancestor of 802.11, the first IEEE standard for wireless LANs and a sort of "Ethernet without the wires."

Chaos in the airwaves

Although 802.11 wasn't codified until 1997, commercial activity took place before that as different vendors and service providers brought wireless networking products and services to market to address the needs of retailers, logistics companies and others.

Commercially, enterprise wireless was mostly a non-starter until 1985, when the Federal Communications Commission (FCC) relaxed its licensing requirements for three distinct sections of the industrial, scientific and medical (ISM) spectrum: the 900 MHz, 2.4 GHz and 5 GHz bands. From then on, enterprises no longer needed an FCC license for low-power, limited-range transmissions in those bands.

Vendors suddenly had a huge potential market for high-speed wireless data networking. Companies including AT&T, Lucent Technologies (now part of Nokia), NCR and Proxim Wireless introduced products targeting different use cases. Out of this stew of competing and incompatible products, the IEEE 802.11 working group pulled together the first version of the 802.11 protocol. In its first incarnation, the standard supported speeds of only 2 Mbps.

Enter Wi-Fi branding

In 1999, about two years after releasing the original 802.11 wireless LAN standard, IEEE ratified two amendments to it: 802.11a and 802.11b. The 802.11a standard used the 5 GHz band to deliver up to 54 Mbps, and 802.11b could deliver 11 Mbps in the 2.4 GHz band.

Despite the presence of standards, however, many allegedly standards-compliant products didn't interoperate with each other. So, in the same year, a group of vendors banded together to form the Wireless Ethernet Compatibility Alliance (WECA), a trade group focused on promoting standards compliance and interoperability to drive enterprise wireless adoption.

The alliance began certifying products under the Wi-Fi brand, and broad enterprise adoption took off with 802.11b-compliant gear from networking vendors and PC makers. In 2002, WECA changed its name to the Wi-Fi Alliance, which now has more than 800 member organizations globally.

Since 1999, the IEEE has continued to issue updates to the 802.11 wireless LAN standard. These updates take advantage of new antenna designs and materials, compression technologies, chip densities and the availability of more spectrum, making possible higher speeds, higher densities, better security, better reliability and lower power consumption.

Over the next two decades, 802.11a and 802.11b were followed by these standards:

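- 802.11g. Released in 2003, this standard brought 802.11a's top speed of 54 Mbps to the 2.4 GHz band, nearly five times the speed of 802.11b, while remaining backward compatible with 802.11b gear.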

- 802.11n, also known as Wi-Fi 4. Released in 2009, 802.11n supported operation in both the 2.4 GHz and 5 GHz bands and introduced multiple input, multiple output (MIMO) technology to support more users and higher throughput per access point.

- 802.11ac, also known as Wi-Fi 5. Released in 2013, 802.11ac boosted possible top speeds to more than 1 Gbps and improved wireless networks' ability to handle dense groups of devices, while also delivering more reliable communications. It also introduced multi-user MIMO.

- 802.11ax, also known as Wi-Fi 6. Released in 2019, Wi-Fi 6 focused on efficiency, delivering higher aggregate throughput within a given area and supporting more devices and traffic with high reliability.

Wi-Fi becomes the default option

Until 802.11ax, each new generation of the technology was marketed simply under the generic Wi-Fi brand. Improvements in reliability, speed, reach and service density broadened the enterprise use of wireless LANs (WLANs). Since 2000, WLANs have gone from luxuries used only where wires were impossible, to broadly available adjuncts to wired LANs, to complete replacements for them.

Wireless is now usually the default way to connect to an enterprise network, not only for mobile devices of all sorts but also for laptops, desktops, printers and IoT devices. In a major change, a growing number of offices and buildings are not cabled for end-user wired LAN access at all.

The Wi-Fi Alliance decided to refer to 802.11ax as Wi-Fi 6 partly to distinguish the latest and most comprehensive versions of Wi-Fi from those that came before, and partly to parallel the simple branding of mobile data services (3G, 4G and 5G). Each new generation takes the next number: when IEEE codifies 802.11be, for example, the standard will be marketed as Wi-Fi 7.

Outside enterprise walls: Cellular data services

In parallel with the development of WLAN technology, telecommunications carriers developed mobile broadband technologies. These mobile technologies power data access on mobile phones and similar handheld devices. They also serve cellular modems for laptops and other devices, and they provide fixed data access to devices or sites for which wired connections are impractical or prohibitively expensive.

Mobile broadband became possible in the 1990s with the shift from first-generation consumer cellular service (1G), which was analog, to 2G digital services. Although they are being phased out by most carriers, 2G data services enabled texting (SMS) and early media services, such as the ability to download ringtones.

Demand for mobile data increased so quickly and broadly that carriers eased into a new generation of mobile data service in the late 1990s. Built like voice services on a circuit-switched architecture, 2G services gave way to 3G, with more LAN-like packet-switching systems. These systems extended to mobile networks the kind of technology base serving wired and wireless enterprise networks (harking back to ALOHAnet).

Mobile technology generations are messy. Each generation evolves internally, producing interim extensions such as 2.5G, before the next generation is codified. Because of equipment lifecycles, carriers can add support for a new generation only over the course of several years, and they typically continue to support older generations for many years after a new one is in service.

Although business use of mobile data occurred before 3G, it was 3G that made business-centric smartphones such as the BlackBerry, along with personal hotspots and cellular modems, broadly practical. For the first time, mobile access to enterprise systems became widely available and genuinely useful. Speeds, starting above 200 Kbps and reaching as high as 15 Mbps, were far better than those of dial-up modems, which were still in broad use in the early 2000s but were already becoming unworkably slow for new applications.

4G ushers in widespread data access and 4G WANs

Late in the 2000s and into the early 2010s, carriers and device makers shifted to 4G and then 4G long-term evolution (LTE) technologies.

4G brought higher speeds, with peak rates between 100 Mbps and 1 Gbps. In the 2010s, 4G LTE service became nearly ubiquitous in the U.S., letting remote and traveling employees work from almost anywhere, at any time. Typical speeds at or above 10 Mbps also made 4G data services a reasonable WAN choice.

5G and cellular data for the IoT age

With 5G, carriers and device makers introduced a broad set of service improvements aimed at supporting data service for several orders of magnitude more devices in a service zone. 5G is also designed to support very-low-latency, ultra-high-reliability services, such as connectivity for self-driving cars. As with earlier generational shifts, 5G also raises top speeds by roughly an order of magnitude, targeting eventual peak download rates of 10 Gbps.

Ultra-high speeds combined with low latency mean 5G will be able to serve extremely demanding real-time use cases, such as e-sports. Notably, 5G also has an array of features aimed at supporting undemanding devices. Environmental sensors, for example, might send fewer than a hundred bytes of data per second and aren't sensitive to latency, but they often must be extremely frugal with power.

Converging wireless paths address challenges

5G and Wi-Fi 6 use many of the same technologies and techniques to address the same set of challenges related to density, speed, reliability and power use. It is becoming more common for enterprises to use 5G instead of Wi-Fi, for example, to eliminate the need for a local network in small branch offices by having staff connect over mobile service.

With higher top speeds, and with network slicing expected to let service providers offer and meet performance guarantees, 5G also widens the range of site sizes for which wireless WAN is a viable option. Private 5G, meanwhile, lets enterprises deploy their own 5G networks -- for example, to provide coverage across a large campus or an airport.

The day is coming when the main distinction between wireless technologies will center on power and reach -- the threshold between needing a license to use spectrum (5G and what follows) and not (Wi-Fi 6 and what follows).

Enterprise users should eventually be able to expect seamless, transparent transitions between Wi-Fi on premises and 6G elsewhere. They can also expect endpoint technology that better supports "work from anywhere" by invisibly switching among services based not just on location but also on current performance, delivering the best user experience without sacrificing security.