
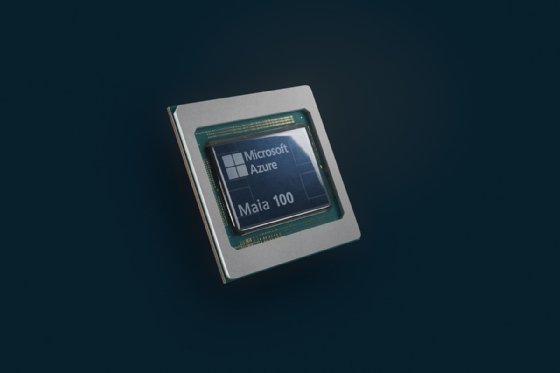

Microsoft launches custom AI, server chips for Azure

Maia is for AI inferencing, while the Arm-based Cobalt is for general-purpose computing. The chips could compete with AMD, Nvidia and Intel offerings eventually.

Microsoft introduced a custom AI chip on Wednesday that could compete with AMD and Nvidia for low- and mid-tier workloads on Azure while giving the company more control over its cloud infrastructure.

Microsoft unveiled Maia for accelerating AI inferencing and a custom Arm-based chip, Cobalt, for general-purpose computing at its Ignite conference. The chips will be available on Microsoft Azure alongside options from partners AMD, Intel and Nvidia.

Microsoft plans to start rolling out the chips early next year, initially to power services such as Microsoft Copilot or Azure OpenAI Service.

Maia is not equal to Nvidia's highly coveted H100 Tensor Core GPU for the most demanding AI workloads. However, Microsoft's hardware could compete with lower-end offerings from Nvidia and AMD for AI inferencing, said Jack Gold, principal analyst at J.Gold Associates.

More competition could eventually mean lower prices on Azure.

"Competition always lowers prices," Gold said. "It's just kind of the law of finance."

Besides intensifying competition, Maia and Cobalt also provide Microsoft with control over chip features that optimize its cloud infrastructure, similar to how rivals AWS and Google use custom chips.

"If I build my chip, I can optimize it for whatever I want and not have to go with what Nvidia or AMD gives me," Gold said.

Maia and Cobalt represent the "last puzzle piece" in Microsoft's effort to build its cloud infrastructure from top to bottom, the company wrote in a blog post. Microsoft-designed infrastructure on Azure ranges from software and servers to racks and cooling systems.

"At the scale we operate, it's important for us to optimize and integrate every layer of the infrastructure stack to maximize performance, diversify our supply chain and give customers infrastructure choice," said Scott Guthrie, executive vice president of Microsoft's Cloud and AI Group, in the joint blog with other executives.

In developing Maia, Microsoft worked with partner OpenAI to refine and test the chip for the company's LLMs, said OpenAI CEO Sam Altman. Microsoft has invested $13 billion in OpenAI.

"Azure's end-to-end AI architecture, now optimized down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers," Altman said.

Design changes for Maia

Microsoft built racks from scratch to meet Maia's requirements, making them wider to support more power and networking cables for AI workloads.

Microsoft developed "sidekick" cooling hardware that sits next to the Maia rack and works much like a car radiator. Cold liquid flows from the sidekick to cold plates attached to the surface of the Maia chips.

Each plate has channels through which the liquid circulates, absorbing heat from the chip and carrying it away. The warmed liquid returns to the sidekick, which removes the heat and sends the cooled liquid back to the rack to absorb more.

For Cobalt, Microsoft chose the Arm architecture because its performance-per-watt ratio, typically better than that of x86 designs, will help reduce power consumption in Azure.

"Multiply those efficiency gains in servers across all our data centers, it adds up to a pretty big number," said Wes McCullough, corporate vice president of hardware product development at Microsoft.

While hyperscalers such as Microsoft continue to reduce power consumption, experts warn that AI's power demands in data centers will exceed the capacity of the nation's electrical grids despite the addition of renewable sources such as wind and solar power. To close the gap, data center operators are exploring the use of small nuclear reactors.

A second generation of Maia and Cobalt chips is under development, Microsoft said. The company did not provide a release date.

At Ignite, Microsoft introduced Azure Boost. The technology makes storage and networking faster by offloading the processing from servers' CPUs to other hardware and software.

The company also unveiled AI hardware from AMD and Nvidia.

AMD's Instinct MI300X generative AI accelerator is for AI inferencing on Azure N-series virtual machines. Nvidia's H100 Tensor Core GPU is available with the ND H100 V5-series virtual machine.

Microsoft plans to release the ND H200 V5-series for Nvidia's new and more powerful H200 Tensor Core GPU at an unspecified date.

Antone Gonsalves is an editor at large for TechTarget Editorial, reporting on industry trends critical to enterprise tech buyers. He has worked in tech journalism for 25 years and is based in San Francisco.