
How to identify and manage AI model drift

The training data and algorithms used to build AI models have a shelf life. Detecting and correcting model drift helps keep these systems accurate, relevant and useful.

An AI model that is deployed and then left unchanged will inevitably drift over time as the data around it changes. This makes continuous monitoring and model drift mitigation vital to any ongoing AI strategy.

AI systems are built from algorithms that learn patterns from a comprehensive set of training data and then apply those patterns to new operational data. How closely production data resembles the training data is crucial, because that resemblance is what lets AI models recognize patterns and take informed actions amid an ever-rising flood of business information.

Unfortunately, real-world data conditions can change unexpectedly or shift gradually over months or even years, and training data does not adapt on its own to keep pace with these changes. As the deviations between production and training data grow, they can impair a model's accuracy and predictive capabilities, causing its performance to decline. This phenomenon is known as model drift.

What is AI model drift?

AI model drift occurs when the real-world data a model encounters deviates from the data it was trained to recognize or handle. As a result of this discrepancy, the model gradually loses its ability to accurately spot trends, identify issues or make decisions, as it continues to apply outdated patterns learned during its initial training.

For example, consider an email filtering model trained to identify spam by flagging certain words or phrases commonly found in such emails. Over time, language changes, and spammers adopt new tactics for attracting readers' attention. This could include new buzzwords, phrases, references and tactics like spear phishing that the model was not originally trained to handle.

As a result, the elements the model learned to look for might become rarer and be replaced by new ones. Because the model was never taught how to handle these new data elements, its ability to function degrades. This drift reduces, or even erases, the model's value to the business. To combat it, AI and machine learning teams can update the training data and possibly integrate adaptive learning mechanisms capable of adjusting to new spamming behaviors.
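
To make the idea of an adaptive mechanism concrete, here is a minimal Python sketch of a toy spam filter that keeps learning from newly labeled messages. It is a hypothetical illustration rather than a production design: the `IncrementalSpamFilter` class, its whitespace tokenization and the sample messages are all assumptions. It uses multinomial Naive Bayes with add-one smoothing, a classic text-classification technique whose word counts can be updated one message at a time without retraining from scratch.

```python
import math
from collections import Counter


class IncrementalSpamFilter:
    """Toy multinomial Naive Bayes filter whose counts can be updated over time (hypothetical)."""

    LABELS = ("spam", "ham")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def update(self, text: str, label: str) -> None:
        # Fold one newly labeled message into the running counts; no full retrain.
        self.word_counts[label].update(text.lower().split())
        self.doc_counts[label] += 1

    def predict(self, text: str) -> str:
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        # Words never seen in training carry no evidence and are skipped.
        words = [w for w in text.lower().split() if w in vocab]
        total_docs = sum(self.doc_counts.values())

        def log_score(label: str) -> float:
            total_words = sum(self.word_counts[label].values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for w in words:
                # Add-one (Laplace) smoothing keeps unseen word/label pairs nonzero.
                score += math.log((self.word_counts[label][w] + 1) / (total_words + len(vocab)))
            return score

        return max(self.LABELS, key=log_score)


spam_filter = IncrementalSpamFilter()
for text in ["win free money now", "free prize claim now"]:
    spam_filter.update(text, "spam")
for text in ["meeting agenda for monday", "project update attached", "lunch on monday"]:
    spam_filter.update(text, "ham")

phish = "verify your account password urgently"
print(spam_filter.predict(phish))  # ham: no word in the message was seen in training

# Feed back a few labeled examples of the new tactic.
spam_filter.update("verify your account password now", "spam")
spam_filter.update("urgently verify your password", "spam")
print(spam_filter.predict(phish))  # spam
```

Before the feedback, only the class priors decide, so the phishing message slips through as ham; two labeled examples are enough for this toy model to adapt.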

Drift has no permanent impact on models themselves -- if production data and variables returned to the expected parameters, a model's behavior and output would be restored. However, drift does affect a model's ability to deliver accurate and predictable outputs, compromising its value. The severity of this impact depends on the amount of deviation between production and training data.

Causes of AI model drift

There are two principal causes of model drift:

- Data drift occurs when the distribution, scope or nature of the incoming production data changes over time. For example, a model used to make trend predictions for a retail business might have been impaired by the unexpectedly high shipping volumes and costs during the COVID-19 pandemic, which fell well outside the patterns in its training data.

- Functional drift, also commonly called concept drift, occurs when the fundamental underlying behaviors or relationships among variables change, making the parameters the model learned during training less suited to the operational environment. For example, a model used by a financial services provider might experience functional drift if shifts in the economy alter how loan defaults relate to credit scores.
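
One common way to formalize the distinction, stated here as an assumption since the article keeps it informal, treats a model's inputs as $X$ and its target as $Y$, with $P_0$ the distribution at training time and $P_t$ the distribution in production at time $t$:

```latex
\begin{aligned}
\text{Data drift:} &\quad P_t(X) \neq P_0(X) \\
\text{Functional (concept) drift:} &\quad P_t(Y \mid X) \neq P_0(Y \mid X)
\end{aligned}
```

Because $P(X, Y) = P(Y \mid X)\,P(X)$, a change in either factor alters the joint distribution the model faces, and both kinds of drift can occur at once.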

Other factors can also result in forms of model drift, undermining reliability and accuracy:

- Poor data quality. Incorrect measurements, missing values, lack of normalization and other data errors reduce model effectiveness. For example, a sales prediction model that is fed incorrect transaction amounts will not produce reliable results.

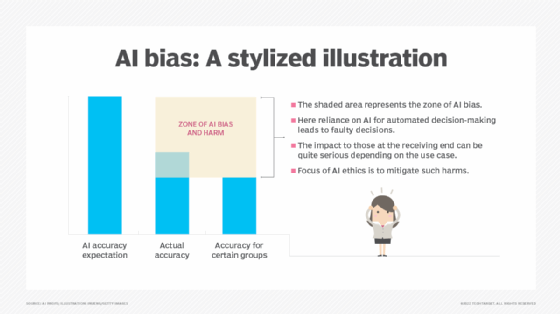

- Training data bias. Data bias occurs when the distribution of data in a set is skewed and does not represent the true distribution. If a model is trained on biased data, it will perform poorly in production when it encounters real-world data that differs from its training set.

- External events. Models employed for tasks such as user experience or sentiment analysis can face a deluge of unexpected data from external sources such as political events, economic changes and natural disasters. For example, a regional war might affect user sentiment analysis for a product or service, causing previously positive indicators to suddenly and broadly decline. This form of data drift sometimes resolves quickly, but it can also persist for an extended period and have a prolonged impact on the model.
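
Basic validation can catch some of the data quality problems described above before they reach a model. The following Python sketch is illustrative only: the `Transaction` record, the `data_quality_report` function and its three rules are hypothetical, and the rule that amounts must be positive would need adjusting for data sets that legitimately contain refunds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transaction:
    transaction_id: str
    amount: Optional[float]  # None models a missing value


def data_quality_report(rows: list[Transaction]) -> dict[str, int]:
    """Count basic data quality problems in a batch before it is used by a model."""
    report = {"missing_amount": 0, "non_positive_amount": 0, "duplicate_id": 0}
    seen_ids: set[str] = set()
    for row in rows:
        if row.amount is None:
            report["missing_amount"] += 1
        elif row.amount <= 0:
            report["non_positive_amount"] += 1
        if row.transaction_id in seen_ids:
            report["duplicate_id"] += 1
        seen_ids.add(row.transaction_id)
    return report


batch = [
    Transaction("t1", 19.99),
    Transaction("t2", None),
    Transaction("t3", -5.00),
    Transaction("t3", 42.50),
]
print(data_quality_report(batch))
# {'missing_amount': 1, 'non_positive_amount': 1, 'duplicate_id': 1}
```

A team could refuse to score or retrain on any batch whose report exceeds agreed limits, so bad records are fixed at the source rather than absorbed by the model.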

How to monitor and detect AI model drift

Detecting model drift can be tricky, and businesses that have already invested time in building and training a model might be reluctant to spend more checking its results. However, business environments and data change over time, putting any model at risk of drift. Undetected drift, in turn, can inhibit accurate decision-making within the organization and lead to worse ROI from AI initiatives.

Detecting model drift requires a comprehensive suite of methods:

- Direct comparison. The most straightforward method of detecting model drift is to compare predicted values with actual values. For example, if a model is designed to help forecast revenue for the upcoming quarter, regularly comparing the predicted revenue with the actual revenue for each quarter will make drift evident as the two values diverge over time. This method depends on actual outcomes, which often become available only after a delay.

- Model performance monitoring. There are numerous ways to measure model performance, including confusion matrices, F1 score, and gain and lift charts, among others. Statistical tests such as the two-sample Kolmogorov-Smirnov test can also compare the distribution of a model's recent inputs or outputs with a reference distribution, such as that of the training data, and indicate whether the two differ significantly. Model engineers should select the metrics that are most appropriate for the model, its intended purpose and the characteristic under review.

- Data and feature assessments. Data and the features used in models change over time. Model engineers should periodically assess the data being delivered to the model, consider the training data used to prepare the model, and reevaluate the algorithms and assumptions used to construct the model. This can help teams determine whether changes in data quality have occurred and whether the existing features still have predictive power.

- Comparative models. When two or more similar models are available, it might be worth comparing the output of multiple models to understand their variability and sensitivity to different data sets. For example, teams could develop parallel models that use slightly different training data or production data, then compare outputs to help determine drift in one or more models.
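
As an illustration of the statistical approach, the following sketch computes the two-sample Kolmogorov-Smirnov statistic in plain Python: the largest vertical gap between the empirical cumulative distribution functions of a reference sample (for example, one feature's training values) and a production sample. The function names, the 0.05 significance level and the synthetic data are assumptions, and the threshold uses the standard large-sample critical value, so it is only a rough guide for small samples. In practice, a library routine such as SciPy's `scipy.stats.ks_2samp`, which also returns a p-value, is usually preferable.

```python
import math
from bisect import bisect_right


def ks_statistic(reference: list[float], current: list[float]) -> float:
    """Largest absolute gap between the two samples' empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # Both empirical CDFs are step functions that only jump at observed values,
    # so checking every observed value finds the maximum gap.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def ks_drift_detected(reference: list[float], current: list[float], alpha: float = 0.05) -> bool:
    """Flag drift when the KS statistic exceeds the asymptotic critical value at level alpha."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.358 for alpha = 0.05
    critical_value = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical_value


# Synthetic example: a feature whose production values have shifted upward by 30.
training_values = [float(v) for v in range(100)]
production_values = [float(v + 30) for v in range(100)]
print(ks_statistic(training_values, production_values))  # roughly 0.3
print(ks_drift_detected(training_values, production_values))  # True
print(ks_drift_detected(training_values, [float(v + 2) for v in range(100)]))  # False
```

The test is one-dimensional, so a team would typically run it per feature (or on the model's output scores) and investigate the features that are flagged.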

Regardless of the method used, drift detection should be treated as a regular process to ensure accurate outputs over time.
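
Taking the direct-comparison method as an example, a regular check might compare each period's forecast with the actual outcome and raise an alert when recent errors climb well above the error measured when the model was deployed. In this Python sketch, the absolute percentage error metric, the three-period window, the 0.05 tolerance, the 0.03 baseline and the revenue figures are all hypothetical choices.

```python
from statistics import mean


def percentage_errors(predicted: list[float], actual: list[float]) -> list[float]:
    """Absolute percentage error per period; assumes every actual value is nonzero."""
    return [abs(p - a) / abs(a) for p, a in zip(predicted, actual)]


def drift_alert(errors: list[float], baseline_error: float,
                window: int = 3, tolerance: float = 0.05) -> bool:
    """True when the mean error over the latest `window` periods exceeds the
    baseline (deployment-time) error by more than `tolerance`."""
    if len(errors) < window:
        return False  # not enough history to judge yet
    return mean(errors[-window:]) > baseline_error + tolerance


# Hypothetical quarterly revenue forecasts versus actuals, in millions.
predicted_revenue = [100.0, 105.0, 110.0, 115.0, 120.0, 125.0]
actual_revenue = [98.0, 104.0, 100.0, 98.0, 96.0, 95.0]
errors = percentage_errors(predicted_revenue, actual_revenue)

print(drift_alert(errors[:3], baseline_error=0.03))  # False: first three quarters
print(drift_alert(errors, baseline_error=0.03))      # True: forecasts and actuals diverging
```

Averaging over a window rather than alerting on a single bad quarter trades a little detection speed for fewer false alarms from one-off surprises.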

How to correct AI model drift

To correct model drift, businesses can employ machine learning workflows that include a recurring process of data quality assurance, drift monitoring and mitigation. This includes establishing strong data governance practices, proactively designing models to ensure that they can adjust over time, and regularly auditing models for accuracy and reliability.

Specific drift mitigation strategies include the following:

- Model retraining. Periodic retraining is one of the simplest ways to mitigate model drift, and it can be performed as needed, whether at regular intervals or when triggered by detected drift. Retraining on fresh, accurate, complete and valid data enables the model to evolve in response to new data and features.

- Adaptive techniques. Traditional machine learning models employ initial training and retraining as needed, but advanced model design can implement feedback loops within the model that enable it to actively learn from and adapt to incoming data. One such technique might include user scoring or other human feedback that enables the model to tailor its decision-making dynamically to produce more desirable outputs. Other incremental or continuous learning techniques can also be integrated into the model for regular training updates.

- Multiple models. Another way to guard against model drift is to employ several related models simultaneously, using different models to capture different aspects of the problem or data. Evaluating the same issue from different perspectives establishes a more holistic approach to analytics and decision-making. Using multiple models can also serve as a safeguard against the failure of the entire system if one model starts to drift.
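
Retraining can be triggered on a schedule or by detected drift, as described in the retraining strategy above. A minimal Python sketch of that decision follows; the `retrain_reason` function and its 90-day default interval are hypothetical, and the `drift_detected` flag would come from whichever monitoring method a team uses.

```python
from datetime import date, timedelta
from typing import Optional


def retrain_reason(last_trained: date, today: date, drift_detected: bool,
                   max_age: timedelta = timedelta(days=90)) -> Optional[str]:
    """Return why the model should be retrained now, or None if it can wait.

    Combines the two triggers: a drift signal from monitoring and a fixed schedule.
    """
    if drift_detected:
        return "drift detected"
    if today - last_trained >= max_age:
        return "scheduled interval elapsed"
    return None


print(retrain_reason(date(2024, 1, 1), date(2024, 2, 1), drift_detected=False))  # None
print(retrain_reason(date(2024, 1, 1), date(2024, 2, 1), drift_detected=True))   # drift detected
print(retrain_reason(date(2024, 1, 1), date(2024, 4, 1), drift_detected=False))  # scheduled interval elapsed
```

Checking the drift signal first means a detected problem is never left waiting for the next scheduled run.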

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 20 years of technical writing experience in the PC and technology industry.