What is SAP S/4HANA?

SAP S/4HANA is an enterprise resource planning (ERP) business suite based on the SAP HANA in-memory database that allows companies to perform transactions and analyze business data in real time.

S/4HANA is the centerpiece -- or digital core -- of SAP's strategy for enabling digital transformation, a broadly defined undertaking in which organizations modify existing business processes and models or create new ones. SAP S/4HANA integrates core processes and relevant data into a single system. For example, use cases for SAP S/4HANA include managing and unifying sales, finance, operations and customer relations departments under a single database and platform. This allows companies to be more flexible, responsive and resilient to changing business requirements, customer demands and environmental conditions. SAP refers to this S/4HANA-centered business environment as the intelligent enterprise.

SAP S/4HANA can be deployed on-premises, in the cloud or in a hybrid model.

S/4HANA features

S/4HANA includes the following features:

- Designed for speed and ease of use. S/4HANA was designed to make ERP more modern, faster and easier to use through a simplified data model, lean architecture and a new user interface (UI) built on the tile-based SAP Fiori UX.

- Integration with other technologies. S/4HANA includes or is integrated with several advanced technologies, including AI, machine learning (ML), the internet of things (IoT) and advanced analytics. The SAP HANA in-memory database architecture and the integration of advanced technologies enable S/4HANA to help solve complex problems in real time and analyze more information faster than previous SAP ERP products.

- Several deployment options. S/4HANA offers on-premises, public cloud, private cloud or hybrid deployment models. There's also a multi-tenant software as a service (SaaS) version, SAP S/4HANA Cloud, whose modules and features differ from those of the on-premises version.

- Suitable for various industries. S/4HANA offers various industry-specific functionalities tailored to areas such as manufacturing, retail, financial services and healthcare.

History of S/4HANA

SAP released S/4HANA in February 2015 to much fanfare, with then-CEO Bill McDermott calling it the most important product in the company's history. S/4HANA stands for "Suite for HANA," as the product was written to take advantage of SAP's HANA offering. HANA, or High-Performance Analytic Appliance, is SAP's in-memory database and application development platform, which debuted in 2011.

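S/4HANA was completely rewritten for HANA, which differentiates it from its predecessor, SAP ERP Central Component (ECC). ECC was originally built for conventional databases and gained the ability to run on HANA in 2013, when SAP released SAP Business Suite powered by SAP HANA.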

SAP S/4HANA required rethinking the database concept and rewriting 400 million lines of code. According to SAP, the changes make the ERP system simpler to understand and use and more agile for developers. SAP sees S/4HANA as an opportunity for businesses to reinvent business models and generate new revenue by taking advantage of IoT and big data to connect people, devices and business networks.

Also, because S/4HANA doesn't require batch processing, businesses can simplify their processes and execute them in real time. According to SAP, this means users can get insight into data from anywhere in real time for planning, execution, prediction and simulation.

Until 2023, SAP released a new version of S/4HANA every year. Starting with the 2023 release, however, SAP moved to a two-year release cycle for its on-premises version.

Differences between S/4HANA and SAP ECC

S/4HANA shares many of the characteristics of previous SAP ERP products, including ECC, but because S/4HANA was a redesign, it differs considerably from ECC in several areas. Fundamentally, S/4HANA is designed to take advantage of capabilities not available for ECC, such as advanced analytics and real-time processing.

The following are some of the main areas where S/4HANA differs from ECC:

- Database. S/4HANA only runs on HANA, whereas ECC can run on many databases, including DB2, Oracle, SQL Server and SAP MaxDB.

- Deployment options. S/4HANA has a wider array of deployment options, including on-premises, public cloud, private cloud, hosted cloud and hybrid environments. ECC is primarily deployed on-premises and can run in hosted public-cloud environments, but there's no specific public cloud edition.

- User experience. S/4HANA uses the modern SAP Fiori UX, while ECC uses the older, standard SAP GUI, though a limited number of Fiori apps are available for it. Fiori presents commonly used S/4HANA functions as apps in a simple, consumer-grade tile design that can be accessed across various devices, including desktops, tablets and mobile devices.

- Advanced functions. S/4HANA is designed to use advanced technologies, including embedded analytics, robotic process automation, ML, AI and the SAP CoPilot digital assistant. These advanced capabilities aren't available in ECC.

S/4HANA lines of business

In its earliest versions, S/4HANA comprised modules that each contained functionality for a distinct business process. The first module was Simple Finance, which streamlined financial processes and enabled real-time analysis of financial data. Later renamed SAP Finance, it helped companies align their financial and nonfinancial data into what SAP refers to as a single source of truth. SAP Business Suite users often deployed SAP Finance as the first step to S/4HANA.

SAP added modules and functionality in later releases, such as the following:

- S/4HANA 1511, released in November 2015, introduced a logistics module called Materials Management and Operations.

- S/4HANA 1610, released in October 2016, included modules for supply chain management, including Advanced Available-to-Promise; Inventory Management; Material Requirements Planning; Extended Warehouse Management; and Environment, Health and Safety (EHS).

SAP subsequently reorganized the SAP ECC ERP modules into S/4HANA lines of business (LOBs), each consisting of functions for specific business processes. The first LOB was SAP S/4HANA Finance, and other LOBs were added in later releases. The main LOBs are the following:

S/4HANA Finance focuses on all a business's financial aspects, including financial accounting, controlling, treasury and risk management, financial planning, financial close and consolidation.

S/4HANA Logistics is a collection of LOB modules centered around processes for supplier relationship management and supply chain management, including the following:

- S/4HANA Sourcing and Procurement centers on capabilities needed to source and obtain raw materials for fulfilling production orders, including extended procurement, operational purchasing and supplier and contract management.

- S/4HANA Manufacturing focuses on the processes required to manufacture products, including responsive manufacturing, production operations, scheduling and delivery planning, and quality management.

- S/4HANA Supply Chain focuses on end-to-end business planning and logistics processes, from preproduction to distribution to end purchasers, including production planning, batch traceability, warehousing, inventory and transportation management.

- S/4HANA Asset Management focuses on maintenance processes for a company's fixed assets, from machine tools to plants, warehouses and other buildings, including plant maintenance and EHS monitoring.

S/4HANA Sales focuses on processes required to fulfill sales orders, including pricing, sales inquiries and quotes, promise checks, incompletion checks, repair orders, individual requirements, return authorizations, credit and debit memo requests, picking and packing, billing and revenue recognition.

S/4HANA R&D and Engineering focuses on the product lifecycle, including defining the product structure and bills of materials, product lifecycle costing, project and portfolio management, innovation management, management of chemicals or other sensitive materials used in development, and health and safety regulatory compliance.

SAP later extended the digital core LOB capabilities to meet specific industry requirements. As of 2022, the industry segments were Consumer, Discrete Manufacturing, Energy and Natural Resources, Financial Services, Public Services and Services.

Each industry segment includes functionality for the following industries:

Consumer

- Agribusiness.

- Consumer products.

- Fashion.

- Life sciences.

- Retail.

- Wholesale distribution.

Discrete manufacturing

- Aerospace and defense.

- Automotive.

- High tech.

- Industrial manufacturing.

Energy and natural resources

- Building products.

- Chemicals.

- Mill products.

- Mining.

- Oil, gas and energy.

- Utilities.

Financial services

- Banking.

- Insurance.

Public services

- Defense and security.

- Federal and national government.

- Future cities.

- Healthcare.

- Higher education and research.

- Regional, state and local government.

Services

- Cargo transportation and logistics.

- Engineering, construction and operations.

- Media.

- Passenger travel and leisure.

- Professional services.

- Sports and entertainment.

- Telecommunications.

Advantages and drawbacks of S/4HANA

Advantages

Using S/4HANA can provide the following benefits:

- Good fit for large organizations. S/4HANA is a complex ERP system best suited for large, complex organizations. It enables them to standardize business processes across multiple geographic locations and corporate entities.

- Industry-specific capabilities. S/4HANA includes a broad package of capabilities focused on the complex business requirements of industries such as manufacturing, distribution, retail and financial services, as well as cross-industry functions such as procurement and supply chain.

- Ability to innovate. SAP invests heavily in research and development for S/4HANA, which has kept it at the leading edge of ERP functionality through the integration of advanced technologies, such as AI, ML, industrial IoT, blockchain and advanced analytics.

- Real-time performance. S/4HANA is built on the HANA in-memory database. This greatly improves processing speed and enables real-time analytics and transactions, which can be important for organizations that require immediate financial reporting.

- Multiple deployment options. S/4HANA can be deployed either on-premises or through a cloud offering. The cloud options offer more flexibility in scaling and cost.

Disadvantages

Implementing S/4HANA also comes with its own set of challenges, however, including the following:

- Complexity. S/4HANA's complexity can make it unsuitable for organizations with relatively simple requirements. It's expensive to implement and run, so it's best suited for businesses with the resources to deploy it effectively.

- Skills requirement. Because S/4HANA's architecture, data model and capabilities differ significantly from those of previous SAP ERP systems like ECC, there might be a shortage of developers and administrators with advanced S/4HANA skills and experience. However, this was a bigger issue in the early years.

- Cost and risk. Many S/4HANA customers must engage with third-party systems integrators to deploy and manage the environment, which can increase costs. The complexity of S/4HANA also increases the risk of failed implementations if the projects aren't managed properly or requirements aren't properly defined.

S/4HANA deployment

S/4HANA was developed as an on-premises software system but can also be deployed in various cloud scenarios. Most customers who move to the cloud choose to deploy SAP S/4HANA Cloud, which is essentially a different product than standard S/4HANA. The initial versions of S/4HANA Cloud had significantly fewer capabilities than S/4HANA, but the feature sets have become more similar with subsequent releases.

The standard on-premises S/4HANA can also be deployed in a private cloud environment hosted on a cloud service provider's servers. Companies usually choose this option when they want some of the advantages of cloud computing, such as no longer needing to manage infrastructure, without sharing the cloud environment with other customers' instances of the ERP system.

S/4HANA can also be deployed in hybrid environments, in which some instances run on hosted cloud infrastructure and others run on-premises. Companies with security or data governance requirements usually choose this deployment option.

How to implement S/4HANA

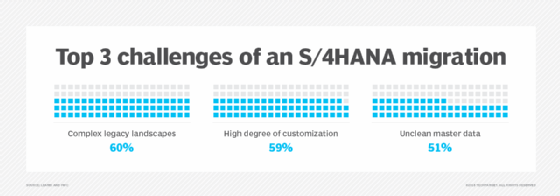

Whatever deployment method a company chooses, implementing S/4HANA can be a complex, time-consuming and costly process. Most S/4HANA customers replace existing SAP ECC systems, but migrating to S/4HANA differs from a standard version upgrade. Indeed, in many cases, an S/4HANA migration is more like a new software implementation than an upgrade.

Because S/4HANA has a simplified data model and includes many functions that differ from those in SAP ECC, a company must rethink and redesign its business processes to take advantage of S/4HANA's advanced capabilities.

Most SAP ECC systems have been heavily customized, with thousands of specialized functions developed to meet a company's internal requirements or those of its particular industry. Many of these custom functions aren't needed in S/4HANA because equivalent functionality comes standard. This means that before beginning an S/4HANA implementation, companies should thoroughly examine all their processes to understand how they can best be designed for S/4HANA and to eliminate functions that might not be needed.

When embarking on an S/4HANA implementation, organizations can take either a brownfield or greenfield approach. In a brownfield implementation, a company transfers its existing SAP landscape largely wholesale to S/4HANA. This means that the company continues to use at least some legacy functions. A brownfield implementation is usually less disruptive and time-consuming than a greenfield approach, but the company might not get all of the transformational value of moving to S/4HANA.

A greenfield implementation involves installing and configuring S/4HANA in a completely new environment. Companies must redesign entire processes for a greenfield approach, making it more disruptive, costly and time-consuming than a brownfield approach. However, when completed, it provides all of the advantages of S/4HANA's modern ERP capabilities.

Managing data is a significant part of any S/4HANA implementation, regardless of the company's approach. Data being moved into the new system must be prepared for S/4HANA's simplified data model.
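One common example of this preparation: S/4HANA manages customers and vendors through a single business partner object, whereas ECC keeps separate customer and vendor master records, so legacy records that describe the same organization typically have to be reconciled before migration. The Python sketch below illustrates one way such a consolidation might work. It is a minimal sketch under stated assumptions: the record layouts, the `consolidate` function, the generated partner numbers and the rule of matching records by tax ID are all hypothetical and are not part of any SAP migration tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical, heavily simplified legacy master record. Real ECC customer
# and vendor data is spread across many tables and fields.
@dataclass
class LegacyParty:
    legacy_id: str
    role: str  # "customer" or "vendor"
    name: str
    tax_id: str


# Hypothetical target record: one business partner that can hold several roles.
@dataclass
class BusinessPartner:
    bp_id: str
    name: str
    tax_id: str
    roles: set[str] = field(default_factory=set)
    legacy_ids: list[str] = field(default_factory=list)


def consolidate(parties: list[LegacyParty]) -> list[BusinessPartner]:
    """Merge legacy records that share a tax ID into one business partner.

    Matching on tax ID is an illustrative assumption; the name of the first
    record seen for each tax ID is kept.
    """
    by_tax_id: dict[str, BusinessPartner] = {}
    for party in parties:
        bp = by_tax_id.get(party.tax_id)
        if bp is None:
            # Assign sequential, hypothetical business partner numbers.
            bp = BusinessPartner(
                bp_id=f"BP{len(by_tax_id) + 1:05d}",
                name=party.name,
                tax_id=party.tax_id,
            )
            by_tax_id[party.tax_id] = bp
        bp.roles.add(party.role)
        bp.legacy_ids.append(party.legacy_id)
    # Dicts preserve insertion order, so partners come out in first-seen order.
    return list(by_tax_id.values())


if __name__ == "__main__":
    legacy = [
        LegacyParty("C100", "customer", "Acme Corp", "DE123"),
        LegacyParty("V200", "vendor", "Acme Corp", "DE123"),
        LegacyParty("C101", "customer", "Globex", "US456"),
    ]
    for bp in consolidate(legacy):
        print(bp.bp_id, bp.name, sorted(bp.roles), bp.legacy_ids)
```

In this example, the customer record C100 and the vendor record V200 for the same company become a single partner holding both roles. Real projects would use SAP's own migration tooling and much richer matching and cleansing rules.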

SAP S/4HANA Cloud

In February 2017, SAP released S/4HANA Cloud, a multi-tenant SaaS version of S/4HANA. S/4HANA Cloud is best suited for organizations with 1,500 employees or more that want to run a two-tiered ERP system. In this scenario, a company runs a full business suite -- such as ECC or S/4HANA -- on-premises and implements S/4HANA Cloud at the division or subsidiary level.

S/4HANA Cloud includes next-generation technology, such as ML, through a tool called SAP Clea and a conversational digital assistant bot called CoPilot.

A new version of S/4HANA Cloud Public Edition is released twice a year in February and August.

SAP S/4HANA embedded analytics

S/4HANA includes embedded analytics software that enables users to perform real-time analytics on live transactional data. This is done through Virtual Data Models (VDMs), which are prebuilt models and reports based on Core Data Services (CDS) views that analyze HANA operational data without requiring a data warehouse. The analytics functions come with S/4HANA software and don't require separate installations or licenses. Other features include multidimensional reports, KPI management, a query browser and analytics Fiori apps.
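The idea of analyzing live transactional data directly, rather than a copy loaded into a separate warehouse, can be shown with a small Python analogy. It is only an illustration under stated assumptions: `LineItem`, `post` and `balance_by_account` are hypothetical names, and real VDMs are defined as CDS views inside SAP's own tooling, not in Python. What the sketch shows is that the aggregate is computed from the stored line items at query time, so a new posting appears in the next query without any batch load.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


# Hypothetical journal line item; the field names are illustrative only and
# do not correspond to the columns of any real SAP table.
@dataclass(frozen=True)
class LineItem:
    company_code: str
    gl_account: str
    period: int
    amount: float


# The only persisted data: every posting is stored as a line item.
journal: list[LineItem] = []


def post(item: LineItem) -> None:
    """Record a transaction. No separate totals table is updated."""
    journal.append(item)


def balance_by_account(company_code: str, period: int) -> dict[str, float]:
    """A 'virtual' view: totals are derived from the line items on every
    query, so they always reflect the latest postings."""
    totals: defaultdict[str, float] = defaultdict(float)
    for item in journal:
        if item.company_code == company_code and item.period == period:
            totals[item.gl_account] += item.amount
    return dict(totals)


if __name__ == "__main__":
    post(LineItem("1000", "400000", 3, 250.0))
    print(balance_by_account("1000", 3))  # {'400000': 250.0}
    post(LineItem("1000", "400000", 3, 100.0))
    print(balance_by_account("1000", 3))  # {'400000': 350.0}
```

In this toy version, every query scans all line items. S/4HANA relies on the HANA in-memory database to make this kind of on-the-fly aggregation fast enough for real-time use.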

SAP S/4HANA roadmap

Prior to 2023, a major release of the on-premises S/4HANA came out once a year. The product-naming convention combined the year and month of release until the 2020 release -- when it was changed to only the year.
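The naming rule can be expressed as a short Python sketch. The `describe_release` helper is hypothetical and only encodes the convention described above: releases before 2020 use a four-digit YYMM code, so 1511 is November 2015 and 1610 is October 2016, and from the 2020 release on, the name is simply the year.

```python
import calendar


def describe_release(name: str) -> str:
    """Interpret an on-premises S/4HANA release name (hypothetical helper).

    Before 2020: YYMM code, e.g. "1610" means October 2016.
    From 2020 on: the four-digit year alone, e.g. "2023".
    """
    if len(name) != 4 or not name.isdigit():
        raise ValueError(f"unexpected release name: {name!r}")
    if name.startswith("20"):
        # Year-only naming, used from the 2020 release onward.
        return f"{name} release"
    year, month = 2000 + int(name[:2]), int(name[2:])
    if not 1 <= month <= 12:
        raise ValueError(f"invalid month in release name: {name!r}")
    return f"{calendar.month_name[month]} {year} release"
```

For example, `describe_release("1610")` returns `"October 2016 release"`, while `describe_release("2023")` returns `"2023 release"`. Checking for a leading "20" works because no YYMM-style name was used after the switch in 2020.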

The most recent version of S/4HANA on-premises is SAP S/4HANA 2023, which was released in October 2023. In 2024, new versions of SAP S/4HANA Cloud were released.

SAP's ongoing 2025 roadmap includes a variety of improvements across the SAP ecosystem. For S/4HANA, SAP is planning to enable the refurbishment of spare parts with subcontractors using newer Fiori apps. SAP is also planning to improve S/4HANA Cloud tracking and decision-making capabilities through digital twin-based visualization of time and financial data.

Each S/4HANA release includes new capabilities and extensions to the software's functionality. In addition to the new functional capabilities, recent S/4HANA releases have added cloud integration with SAP cloud-based products -- such as SAP Ariba for procurement, SuccessFactors for human capital management, Fieldglass for contingent workforce management, and Concur for travel and expense management -- as well as with other enterprise systems. SAP has also embedded emerging technologies, including AI, ML, IoT, advanced analytics and blockchain, in more LOB modules.

As S/4HANA has matured, it's become an extremely flexible tool.