Azure Kubernetes Service (AKS)

What is Azure Kubernetes Service (AKS)?

Azure Kubernetes Service (AKS) is a managed container orchestration service based on the open source Kubernetes system, which is available on the Microsoft Azure public cloud. Although Kubernetes is useful for managing containerized workloads and services, it can be complex to install and maintain. AKS helps to reduce the complexity, enabling organizations to handle critical functionality, such as deploying, scaling and managing Docker containers and container-based applications.

What is the difference between AKS and Kubernetes?

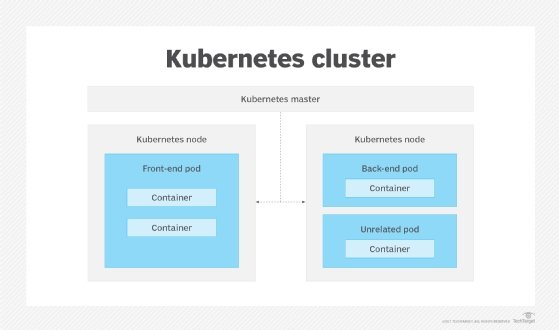

Kubernetes is a popular platform for container orchestration and containerized workload management. It is extensible and highly configurable, meaning Kubernetes clusters can be customized with different configurations and extensions to suit the needs of different work environments. Kubernetes provides numerous capabilities for running containerized workloads, including deployment patterns, storage orchestration, service discovery, load balancing, automated rollouts and rollbacks, and container self-healing.
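As an illustration of a few of these capabilities, the following Python sketch builds a minimal Kubernetes Deployment manifest as a plain dictionary. The replica count relates to scaling, the rolling update strategy to automated rollouts, and the liveness probe to container self-healing. The application name, image, port and probe path are placeholder assumptions, not values from any particular environment.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many pod replicas running.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Replace pods gradually when the pod template changes.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                            # The container is restarted if this probe fails.
                            "livenessProbe": {
                                "httpGet": {"path": "/", "port": 80},
                                "periodSeconds": 10,
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder name and image, chosen only for illustration.
    print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.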

In sum, Kubernetes is a platform. In contrast, AKS is a service.

Specifically, AKS is a managed service that enables organizations and their teams to simplify the deployment, scaling and management of Kubernetes clusters so they can quickly deploy cloud-native apps in their chosen environment -- Azure cloud, data centers, on the edge, etc. AKS helps manage the overhead involved in using Kubernetes, reducing the complexity of deployment and management tasks in Kubernetes. AKS is designed for organizations that want to build scalable applications with Docker and Kubernetes while using the Azure architecture.

Kubernetes has been open source since 2014. AKS became generally available in June 2018. Both Kubernetes and AKS are commonly used by software developers and IT operations staff.

Kubernetes and AKS are both free to use. However, organizations do incur costs when using these technologies. With AKS, they pay for the underlying cloud resources -- virtual machines (VMs), storage, networking, etc. Kubernetes users may have to pay for third-party consultants or paid platforms to make full use of Kubernetes and its capabilities.

Azure Kubernetes Service features and benefits

As a managed service provided by Microsoft, AKS automatically provisions and configures the Kubernetes control plane, which controls and manages the worker nodes, during deployment. It also handles other tasks, including governance, cluster scalability, connections to monitoring services and configuration of advanced networking features. Users can monitor a cluster directly or view all clusters with Azure Monitor. The primary benefits of AKS are flexibility, automation and reduced management overhead.

AKS also helps to speed up the development and deployment of cloud-native apps. It provides prebuilt cluster configurations for Kubernetes, built-in code-to-cloud pipelines and guardrails that make it easy to do the following:

- Spin up managed Kubernetes clusters.

- Develop and debug microservices applications.

- Simplify runtime and portability.

- Set up a test deployment strategy.

- Detect failures.

AKS node configurations can be customized to adjust operating system (OS) settings or kubelet parameters, depending on the workload. Also, node pools can be scaled up or down to accommodate fluctuations in resource demands. For additional processing power -- say, to run resource-intensive workloads -- AKS supports graphics processing unit (GPU)-enabled node pools. In addition, users can define user node pools to support applications with different compute or storage requirements and apply custom tags to label and organize resources.
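As a rough sketch of how a user node pool might be added and later scaled, the Python snippet below assembles Azure CLI commands as argument lists and prints them without running anything. The resource group, cluster and pool names are hypothetical, and the GPU VM size shown is only an example; available sizes vary by region and subscription.

```python
import shlex


def nodepool_add_command(resource_group, cluster, pool, vm_size,
                         node_count, mode="User"):
    """Return the `az aks nodepool add` invocation as an argument list."""
    return [
        "az", "aks", "nodepool", "add",
        "--resource-group", resource_group,
        "--cluster-name", cluster,
        "--name", pool,
        "--node-vm-size", vm_size,
        "--node-count", str(node_count),
        "--mode", mode,
    ]


def nodepool_scale_command(resource_group, cluster, pool, node_count):
    """Return the `az aks nodepool scale` invocation as an argument list."""
    return [
        "az", "aks", "nodepool", "scale",
        "--resource-group", resource_group,
        "--cluster-name", cluster,
        "--name", pool,
        "--node-count", str(node_count),
    ]


if __name__ == "__main__":
    # All names below are placeholders for illustration only.
    add = nodepool_add_command("demo-rg", "demo-aks", "gpupool",
                               "Standard_NC6s_v3", 1)
    scale = nodepool_scale_command("demo-rg", "demo-aks", "gpupool", 3)
    print(shlex.join(add))
    print(shlex.join(scale))
```

Building the command as a list, rather than a single string, means it could be passed to `subprocess.run` without shell quoting problems once the values are confirmed.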

AKS integrates with Azure Container Registry to create and manage container images and related artifacts, although an authentication mechanism must be established between the two first. Also, since AKS supports Azure Disks, users can dynamically create persistent volumes in AKS for use with Kubernetes pods.
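Dynamic provisioning of an Azure Disk is typically requested through a PersistentVolumeClaim. The Python sketch below builds such a claim as a dictionary. It assumes the cluster offers a storage class named `managed-csi`; the claim name and size are hypothetical, and the storage class should be checked with `kubectl get storageclass` before use.

```python
import json


def disk_claim_manifest(name: str, size_gib: int,
                        storage_class: str = "managed-csi") -> dict:
    """Build a PersistentVolumeClaim that asks for a dynamically created disk."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # A disk is attached to one node at a time, so the claim
            # requests single-node read/write access.
            "accessModes": ["ReadWriteOnce"],
            # Assumed storage class name; verify it exists on the cluster.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gib}Gi"}},
        },
    }


if __name__ == "__main__":
    # "data-disk" and 32 GiB are placeholder values.
    print(json.dumps(disk_claim_manifest("data-disk", 32), indent=2))
```

A pod would then reference the claim by name in its `volumes` section to mount the disk.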

Accessing Kubernetes resources from the Azure portal

The Azure portal is a web-based console that enables users to manage their Azure subscription and related services, including AKS. The portal provides a view into all the Kubernetes resources in a user's AKS cluster, including deployments, pods and replica sets. The portal also simplifies cluster upgrades as new versions of Kubernetes become available.

The Kubernetes resources view on the portal also shows the live status of individual deployments, while in-depth information about specific nodes and containers can be viewed from Azure Monitor.

Azure Kubernetes Service architecture

With AKS, users can create Kubernetes clusters from the Azure portal, Azure PowerShell, Azure Command-Line Interface (CLI) or Azure Resource Manager templates. An AKS cluster has at least one node. However, the clusters can run multiple node pools to support mixed OSes and Windows Server containers, and they can be upgraded with the Azure portal, Azure CLI or Azure PowerShell.

Regardless of the creation method used, when an AKS cluster is created, a control plane is also automatically created and configured. The control plane is a managed Azure resource that the user cannot directly access. In addition to the control plane, AKS also configures Kubernetes nodes once the user deploys the cluster and specifies the node number and size.
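For the Azure CLI route, cluster creation comes down to naming the cluster and stating the node count and VM size. The Python sketch below only assembles and prints the commands; it does not call Azure. The resource group, cluster name and VM size are hypothetical placeholders.

```python
import shlex


def cluster_create_command(resource_group, name, node_count, vm_size):
    """Return an `az aks create` invocation as an argument list."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--node-count", str(node_count),
        "--node-vm-size", vm_size,
        "--generate-ssh-keys",
    ]


def get_credentials_command(resource_group, name):
    """Return the command that merges cluster credentials into kubeconfig."""
    return [
        "az", "aks", "get-credentials",
        "--resource-group", resource_group,
        "--name", name,
    ]


if __name__ == "__main__":
    # Placeholder values; the resource group must already exist.
    print(shlex.join(cluster_create_command("demo-rg", "demo-aks", 3,
                                            "Standard_DS2_v2")))
    print(shlex.join(get_credentials_command("demo-rg", "demo-aks")))
```

The control plane does not appear anywhere in these arguments because AKS creates and manages it on the user's behalf; only the worker nodes are specified.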

All AKS nodes run on Azure VMs. Both single-GPU and multi-GPU VMs are available. AKS also provides the Azure Linux container host, a lightweight and hardened OS image for running container workloads on AKS.

All Kubernetes development and management tools work with AKS. Azure also provides multiple tools to streamline Kubernetes.

Azure Kubernetes Service use cases

Organizations can use AKS to automate and streamline the migration of applications into containers. AKS is also useful to deploy, scale and manage diverse groups of containers, which helps with the launch and operation of microservices-based applications.

AKS usage can complement Agile software development practices, such as continuous integration/continuous delivery (CI/CD) and DevOps. For example, developers could place a new container build into a repository like GitHub, move those builds into Azure Container Registry (ACR) and then use AKS to launch the workload into operational containers.
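The flow from build to registry to cluster can be sketched as an ordered list of commands. The Python snippet below only generates the commands a pipeline step might run; the registry, repository, deployment and container names are hypothetical, and a real pipeline would normally rely on its CI system's own tasks for authentication.

```python
import shlex


def release_commands(registry, repository, tag, deployment, container):
    """Return the build, push and rollout commands for one image version."""
    # Azure Container Registry login servers take the form <name>.azurecr.io.
    image = f"{registry}.azurecr.io/{repository}:{tag}"
    return [
        ["docker", "build", "-t", image, "."],
        ["az", "acr", "login", "--name", registry],
        ["docker", "push", image],
        # Point the running Deployment at the new image, which
        # triggers a rollout on the cluster.
        ["kubectl", "set", "image", f"deployment/{deployment}",
         f"{container}={image}"],
    ]


if __name__ == "__main__":
    # All names here are placeholders for illustration.
    for cmd in release_commands("demoregistry", "web", "1.0.1", "web", "web"):
        print(shlex.join(cmd))
```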

Data streaming can be made easier with AKS as well. It can be used to process real-time data streams to perform quick analyses and enable fast decision-making.

AKS can also be used for internet of things (IoT) applications. It can ensure that adequate compute resources are available to process data from large numbers of discrete IoT devices. Similarly, AKS can help ensure adequate compute resources for big data tasks or compute-intensive workloads, such as machine learning model training, visualization and so on.

Azure Kubernetes Service security, monitoring and compliance

AKS includes numerous security features to secure and control access to Kubernetes clusters. One is Kubernetes role-based access control (RBAC) that enables administrators to limit resource access to users, groups or service accounts based on their roles. Microsoft Entra ID and Azure RBAC can also be used to enhance security and simplify user permission management.
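To make the Kubernetes RBAC idea concrete, the Python sketch below builds a Role that grants read-only access to pods in one namespace and a RoleBinding that assigns it to a user. The namespace, role name and user identifier are hypothetical; with Entra ID integration, the subject would usually be an Entra user or group identifier instead.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str, name: str = "pod-reader") -> dict:
    """Build a Role allowing read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["pods"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """Build a RoleBinding granting the named Role to a single user."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        "subjects": [
            {"kind": "User", "name": user, "apiGroup": RBAC_API_GROUP}
        ],
        "roleRef": {
            "kind": "Role",
            "name": role_name,
            "apiGroup": RBAC_API_GROUP,
        },
    }


if __name__ == "__main__":
    # Placeholder namespace and user for illustration.
    role = pod_reader_role("dev")
    binding = bind_role_to_user("dev", role["metadata"]["name"],
                                "dev-user@example.com")
    print(json.dumps([role, binding], indent=2))
```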

AKS is a trusted platform with integrations to Azure Policy and Entra ID. Entra ID integration provides fine-grained identity and access control, while Azure Policy enables organizations to enforce regulatory compliance controls. In addition, just-in-time cluster access can be used to grant privileged access, and Microsoft Defender for Containers can be used to maintain and monitor security.

Azure Kubernetes Service availability and costs

As of March 2024, AKS is available in 60-plus regions in North America, Africa, East Asia, South Asia, Europe and South America. AKS is a free Azure service, so there is no charge for Kubernetes cluster management. AKS users are, however, billed for the underlying compute, storage, networking and other cloud resources consumed by the containers that constitute the application running within the Kubernetes cluster.
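The billing model described above can be expressed as simple arithmetic: no charge for cluster management, plus the cost of whatever resources the nodes consume. The Python sketch below uses made-up rates purely for illustration; actual prices depend on VM size, region and pricing options and should be taken from the Azure pricing pages.

```python
HOURS_PER_MONTH = 730  # common approximation for monthly billing estimates


def estimate_monthly_cost(node_count: int,
                          vm_hourly_rate: float,
                          disk_monthly_rate: float = 0.0,
                          management_fee: float = 0.0) -> float:
    """Estimate a cluster's monthly cost from per-node resource rates.

    All rates are hypothetical inputs supplied by the caller.
    """
    compute = node_count * vm_hourly_rate * HOURS_PER_MONTH
    storage = node_count * disk_monthly_rate
    return round(management_fee + compute + storage, 2)


if __name__ == "__main__":
    # Hypothetical rates: 0.10 per VM hour and 5.00 per disk per month.
    print(estimate_monthly_cost(node_count=3,
                                vm_hourly_rate=0.10,
                                disk_monthly_rate=5.0))
```

The estimate scales linearly with node count, so reducing the number of nodes during quiet periods lowers the bill even though the service itself carries no management charge.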

Kubernetes vs. Docker

Docker technology is used to create and run containers. Docker is made up of the client CLI tool and the container runtime. The CLI tool sends instructions to the Docker runtime, which creates containers and runs them on the OS. Docker is also used to build container images, the packaged templates from which containers are started.

Comparatively, Kubernetes is a container orchestration technology. Kubernetes groups the containers that support a single application or microservice into a pod. Docker used to be the default container runtime used by Kubernetes; however, other container runtime choices are also available now.
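As a small illustration of the pod concept, the Python sketch below builds a Pod manifest that groups two containers serving one application: the application itself and a helper that runs alongside it. Both container names, both images and the helper's command are placeholders.

```python
import json


def two_container_pod(name: str, app_image: str, helper_image: str) -> dict:
    """Build a Pod manifest with an app container and a helper container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Containers in the same pod are scheduled together and
            # share the pod's network namespace.
            "containers": [
                {"name": "app", "image": app_image},
                {
                    "name": "helper",
                    "image": helper_image,
                    # Placeholder command that keeps the helper running.
                    "command": ["sh", "-c", "while true; do sleep 30; done"],
                },
            ]
        },
    }


if __name__ == "__main__":
    # Placeholder images for illustration only.
    print(json.dumps(two_container_pod("web", "nginx:1.25", "busybox:1.36"),
                     indent=2))
```

Kubernetes schedules and restarts the pod as a unit, while the container runtime on each node is what actually starts the two containers.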

The important distinction between Docker and Kubernetes is that Docker is a technology for defining and running containers, while Kubernetes is a container orchestration framework that represents and manages containers. Kubernetes does not make containers.

What are the differences between AKS and Azure Service Fabric?

Azure Service Fabric is a distributed systems platform -- platform as a service -- that facilitates the development, deployment and management of microservices and containers on the Azure cloud. These microservices communicate with each other through service application programming interfaces. The platform is beneficial for creating stateful services, although it also provides a lightweight runtime for stateless services.

Clusters in Service Fabric can be created in a variety of environments, including Windows Server and Linux on premises, Azure and other public clouds. The platform supports the full application lifecycle and CI/CD for cloud applications and integrates with numerous CI/CD tools, such as Jenkins and Azure Pipelines.

The biggest difference between AKS and Service Fabric is that AKS only works with Docker-first applications using Kubernetes. Service Fabric is geared toward microservices and supports a number of different runtime strategies. Service Fabric can deploy both Docker containers on Linux and Windows Server containers.

Both Service Fabric and AKS offer integrations with other Azure services, but Service Fabric integrates with other Microsoft products and services more deeply because it was wholly developed by Microsoft.

Unless the application under development relies heavily on the Microsoft technology stack, a cloud-agnostic orchestration service, like Kubernetes, is more appropriate for most containerized apps.

Azure Kubernetes Service vs. Azure Container Service

Prior to the release of AKS, Microsoft offered Azure Container Service (ACS), which supported numerous open source container orchestration platforms, including Docker Swarm and Mesosphere Data Center Operating System, as well as Kubernetes. With AKS, the focus is exclusively on the use of Kubernetes. ACS users with a focus on Kubernetes can potentially migrate from ACS to AKS.

However, AKS differs from ACS in several ways that a user must address before migrating. For example, AKS uses managed disks, so a user must convert unmanaged disks to managed disks before assigning them to AKS nodes. Similarly, a user must convert any persistent storage volumes or customized storage class objects associated with Azure disks to managed disks.

In addition, stateful applications can be affected by downtime and data loss during a migration from ACS to AKS, so developers and application owners should perform due diligence before making a move.
