
How an AI governance framework can strengthen security

Learn how AI governance frameworks promote security and compliance in enterprise AI deployments with essential components such as risk analysis, access control and incident response.

Deploying AI systems isn't just about coding and algorithms; it involves integrating a transformative technology into the very fabric of an organization.

As AI comes to play an ever-larger role in business operations, concerns surrounding the security of AI technologies are paramount. Like any other technology, AI has its vulnerabilities and is open to manipulation, misuse and exploitation if not governed appropriately.

An AI governance framework is fundamental for any organization looking to deploy AI safely and effectively. By outlining clear policies, processes and best practices, an AI governance framework can provide a roadmap for secure AI deployment.

A blueprint for strengthening AI security

Structured AI governance frameworks fortify enterprise security by helping organizations implement AI safely, ethically and responsibly. From risk analysis and access controls to monitoring and incident response, an AI governance framework should address all facets of AI security.

Risk analysis

Before deploying any AI system, it's essential to conduct a thorough risk assessment. This involves identifying potential threats to the AI system, assessing the likelihood of their occurrence and estimating their potential impact. By understanding the AI system's vulnerabilities, organizations can prioritize security measures and allocate resources efficiently.

Risk assessment is becoming more complex as business processes integrate not just with internal systems but also with third-party SaaS offerings. Increasingly, that third-party software has AI components of its own that the enterprise doesn't directly control.
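The likelihood-and-impact assessment described above is often captured in a simple risk register. The following is a minimal sketch, assuming a 1-to-5 rating scale for both likelihood and impact and a score equal to their product; the `Threat` class, `prioritize` function and the example threats and ratings are hypothetical illustrations, not part of any particular governance standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Qualitative risk score: likelihood x impact, ranging from 1 to 25.
        return self.likelihood * self.impact


def prioritize(threats: list[Threat]) -> list[Threat]:
    """Validate ratings and return threats ordered from highest to lowest score."""
    for t in threats:
        if not (1 <= t.likelihood <= 5 and 1 <= t.impact <= 5):
            raise ValueError(f"{t.name}: ratings must be between 1 and 5")
    return sorted(threats, key=lambda t: t.score, reverse=True)


if __name__ == "__main__":
    # Illustrative entries only; real ratings come from the organization's own assessment.
    register = [
        Threat("Training data poisoning", likelihood=2, impact=5),
        Threat("Prompt injection via user input", likelihood=4, impact=4),
        Threat("Model theft through an exposed API", likelihood=2, impact=4),
        Threat("Third-party SaaS AI feature leaks data", likelihood=3, impact=4),
    ]
    for t in prioritize(register):
        print(f"{t.score:>2}  {t.name}")
```

Sorting by score gives security teams an ordered list for allocating resources; third-party AI components can be entered in the same register even though the enterprise cannot patch them directly.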

Access control

AI models and data sets are among an organization's most valuable assets. Thus, it's crucial to limit access to these resources through access control, which ensures only authorized users can interact with AI systems.

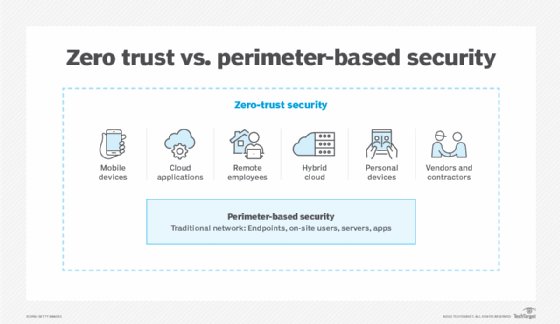

Implementing access control for AI involves employing strong authentication protocols, establishing user hierarchies and rigorously logging all interactions. Within the larger context of zero-trust architectures, these AI safeguards can reinforce strong organization-level access controls.
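To make the idea of user hierarchies and interaction logging concrete, here is a minimal role-based access control sketch. It assumes a three-level role hierarchy in which each role inherits its parent's permissions; the role names, permission strings and the `authorize` helper are hypothetical, and a production deployment would normally rely on the organization's identity provider rather than in-code tables.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("ai_audit")

# Hypothetical role hierarchy: each role inherits the permissions of its parent.
ROLE_PARENT = {
    "viewer": None,
    "data_scientist": "viewer",
    "ml_admin": "data_scientist",
}

# Permissions granted directly to each role (illustrative names).
ROLE_PERMISSIONS = {
    "viewer": {"model:query"},
    "data_scientist": {"dataset:read", "model:train"},
    "ml_admin": {"model:deploy", "dataset:write"},
}


def effective_permissions(role: str) -> set[str]:
    """Collect a role's permissions by walking up the hierarchy."""
    perms: set[str] = set()
    current = role
    while current is not None:
        perms |= ROLE_PERMISSIONS.get(current, set())
        current = ROLE_PARENT.get(current)  # unknown roles end the walk
    return perms


def authorize(user: str, role: str, action: str) -> bool:
    """Allow or deny an action and record every decision in the audit log."""
    allowed = action in effective_permissions(role)
    audit_log.info(
        "%s user=%s role=%s action=%s decision=%s",
        datetime.now(timezone.utc).isoformat(),
        user, role, action, "ALLOW" if allowed else "DENY",
    )
    return allowed


if __name__ == "__main__":
    authorize("alice", "data_scientist", "model:train")  # allowed
    authorize("bob", "viewer", "model:deploy")           # denied
```

Logging denials as well as grants matters here: a burst of denied requests against a model or data set is often the first visible sign of credential misuse.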

Monitoring

Continuous monitoring is the cornerstone of AI security. Tracking AI system operations in real time can reveal anomalies that could indicate potential security breaches or system malfunctions.

By keeping a vigilant eye on AI operations, organizations can swiftly identify and address issues before they escalate. Security professionals should ensure that any newly deployed AI systems feed into existing security information and event management (SIEM) systems.
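One simple way to spot the anomalies mentioned above is to compare each new reading of an operational metric against a rolling baseline. The sketch below assumes a z-score test over a sliding window and emits a JSON-serializable event that could be forwarded to a SIEM; the `MetricMonitor` class, the metric name, the window size and the threshold of 3 are all hypothetical choices for illustration, not a recommended configuration.

```python
import json
import statistics
from collections import deque
from datetime import datetime, timezone
from typing import Optional


class MetricMonitor:
    """Flags values that deviate sharply from a rolling baseline (z-score test)."""

    def __init__(self, metric: str, window: int = 50,
                 threshold: float = 3.0, min_samples: int = 10):
        self.metric = metric
        self.values = deque(maxlen=window)  # sliding window of recent readings
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> Optional[dict]:
        """Record a value; return a SIEM-ready event dict if it looks anomalous."""
        event = None
        if len(self.values) >= self.min_samples:
            mean = statistics.fmean(self.values)
            stdev = statistics.pstdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                event = {
                    "timestamp": datetime.now(timezone.utc).isoformat(),
                    "source": "ai-monitor",
                    "metric": self.metric,
                    "value": value,
                    "baseline_mean": round(mean, 3),
                    "z_score": round((value - mean) / stdev, 2),
                    "severity": "high",
                }
        # Every reading joins the baseline, so a lasting shift stops being flagged over time.
        self.values.append(value)
        return event


if __name__ == "__main__":
    monitor = MetricMonitor("inference_requests_per_min")
    for v in [100, 102, 98, 101, 99, 103, 97, 100, 102, 98, 450]:
        event = monitor.observe(v)
        if event:
            print(json.dumps(event))  # in practice, forward to the SIEM instead
```

In a real deployment, the event would be shipped through whatever collector the SIEM already uses (Python's standard `logging.handlers.SysLogHandler` is one option) rather than printed.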

Incident response

Despite an organization's best efforts, security breaches can still occur. But a rapid response can mitigate damage and prevent further exploitation.

Consequently, an effective AI governance framework must incorporate a robust incident response plan with predefined protocols for identifying, reporting and addressing security incidents. The MITRE ATT&CK framework, a knowledge base of adversary tactics and techniques, can be a helpful tool in developing these guidelines.
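Predefined protocols can be encoded so that responders cannot skip steps. The sketch below assumes a four-stage lifecycle (detected, reported, contained, resolved) and a lookup table of containment steps per incident type; the stage names, the `PLAYBOOKS` entries and the `Incident` class are hypothetical examples rather than steps prescribed by MITRE ATT&CK or any other framework.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    DETECTED = "detected"
    REPORTED = "reported"
    CONTAINED = "contained"
    RESOLVED = "resolved"


# Allowed transitions enforce the order: identify -> report -> address.
NEXT_STAGE = {
    Stage.DETECTED: Stage.REPORTED,
    Stage.REPORTED: Stage.CONTAINED,
    Stage.CONTAINED: Stage.RESOLVED,
}

# Hypothetical playbooks: containment steps to run for each incident type.
PLAYBOOKS = {
    "model_tampering": ["Roll back to last signed model version",
                        "Rotate deployment credentials"],
    "data_leak": ["Disable affected API keys", "Notify privacy and legal teams"],
}


@dataclass
class Incident:
    incident_type: str
    stage: Stage = Stage.DETECTED
    history: list = field(default_factory=list)

    def advance(self, note: str) -> Stage:
        """Move to the next stage in order; stages cannot be skipped."""
        if self.stage not in NEXT_STAGE:
            raise ValueError("Incident is already resolved")
        self.stage = NEXT_STAGE[self.stage]
        self.history.append((self.stage.value, note))
        return self.stage

    def containment_steps(self) -> list[str]:
        # Unknown incident types fall back to manual escalation.
        return PLAYBOOKS.get(self.incident_type, ["Escalate to security on-call"])


if __name__ == "__main__":
    inc = Incident("model_tampering")
    inc.advance("Reported to the security operations center")
    for step in inc.containment_steps():
        print(step)
    inc.advance("Model rolled back")
    inc.advance("Root cause documented")
```

Keeping a timestamped history per incident, as `history` does in simplified form, also supplies the record needed for post-incident review.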

Ethical considerations

AI governance isn't just about security; it's also about ensuring the ethical use of AI, including promoting fairness and preventing bias.

Ethical considerations must be baked into any AI governance framework, and they should shape how AI models are trained. Companies with the resources to do so might consider developing their own training data sets, which makes it easier to control for bias and fairness issues.
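Bias checks can be made measurable. As one illustration, the sketch below computes per-group selection rates for a model's binary decisions and the ratio of the lowest rate to the highest, flagging results below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment guidelines. The function names, the sample data and the choice of this particular metric are assumptions for illustration; other fairness metrics may suit a given use case better.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) for each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def check_fairness(predictions, groups, min_ratio=0.8):
    """Return (passes, ratio, rates), comparing the lowest and highest group rates."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    # If no group receives any positive outcome, treat the rates as equal.
    ratio = 1.0 if highest == 0 else min(rates.values()) / highest
    return ratio >= min_ratio, ratio, rates


if __name__ == "__main__":
    preds = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    passes, ratio, rates = check_fairness(preds, groups)
    print(rates, round(ratio, 2), "PASS" if passes else "REVIEW")
```

A failing check does not prove a model is unfair, but it gives governance reviewers a concrete trigger for examining the training data and model behavior.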

AI governance: A component of broader strategies

As AI continues to transform the business landscape, ensuring its secure and ethical use is critical. An effective AI governance framework is the first step in this ongoing journey.

But AI governance should not exist in a vacuum. It must be integrated into an organization's broader cybersecurity and AI strategies. This means aligning AI governance objectives with overall business goals, ensuring not only that AI systems are secure, but also that they effectively drive organizational growth and innovation.

Furthermore, as AI technology continues to evolve, so should its governance framework. Regularly reviewing and updating governance policies helps organizations keep pace with new AI capabilities and emerging threats.

Jerald Murphy is senior vice president of research and consulting with Nemertes Research. With more than three decades of technology experience, he has worked on a range of technology topics, including neural networking research, integrated circuit design, computer programming and designing global data centers. He was also the CEO of a managed services company.