What is biometrics?

Biometrics is the measurement and statistical analysis of people's unique physical and behavioral characteristics. The technology is mainly used for identification and access control or for identifying individuals who are under surveillance. The basic premise of biometric authentication is that every person can be accurately identified by intrinsic physical or behavioral traits. The term biometrics is derived from the Greek words bios, meaning life, and metron, meaning measure.

Biometrics is advantageous for businesses looking to protect their facilities and valuable resources. Only authorized personnel who prove their identity with unique physical attributes can access them.

However, security and privacy concerns exist as well. These include hackers stealing biometric data through insecure collection devices and businesses misusing collected data. Still, various industries find practical value in biometric identification when implemented and used correctly.

How does biometrics work?

Using biometric verification for authentication is becoming common in corporate and public security systems, consumer electronics and point-of-sale applications. One driving force behind biometric identity verification is convenience; there are no passwords to remember or security tokens to carry. Some biometric methods, such as measuring a person's gait, can operate with no direct contact with the person.

Components of biometric devices include the following:

- A reader or scanning device to record the biometric sample data being authenticated.

- Software to convert the scanned biometric data into a standardized digital format and to compare match points of the observed data with stored data.

- A database to securely store biometric data for comparison.

Biometric data is often held in a centralized database. However, modern biometric implementations often depend instead on gathering biometric data locally and then cryptographically hashing it. This helps accomplish authentication or identification without direct access to the data.
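The following Python sketch illustrates the idea of storing only a salted hash of locally captured data. It rests on a strong assumption: that the feature values can be quantized so that repeated readings from the same person produce identical bytes. Real sensors are noisy, so an exact-match hash like this one would reject many genuine readings. The function names, the bin size and the iteration count are hypothetical choices for illustration only.

```python
import hashlib
import hmac
import os

def quantize(features, step=0.25):
    """Round each feature to a coarse bin so small sensor noise maps to the same bytes.

    Illustrative assumption: features are non-negative and small enough that
    every bin index fits in one byte. Values near a bin edge can still flip.
    """
    return bytes(int(round(x / step)) for x in features)

def enroll(features):
    """Return the salt and digest to store; the raw features are not kept."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", quantize(features), salt, 100_000)
    return salt, digest

def verify(features, salt, stored_digest):
    """Re-derive the digest from a fresh reading and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", quantize(features), salt, 100_000)
    return hmac.compare_digest(candidate, stored_digest)

salt, digest = enroll([0.9, 0.1, 0.4, 0.3])
print(verify([0.88, 0.12, 0.41, 0.29], salt, digest))  # slightly noisy reading, same bins
print(verify([0.1, 0.9, 0.2, 0.7], salt, digest))      # different person, different bins
```

In this sketch, the verifier only ever holds the salt and the digest, so a stolen record doesn't directly reveal the underlying feature values.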

Biometric authentication typically involves the following seven steps:

- Capture. Biometric data is captured from an individual. This can include fingerprints, facial features, iris patterns, voice or other physiological biometric characteristics. The first time a person uses a biometric system is referred to as their enrollment.

- Extraction. The system extracts specific features or templates from the captured data. These extracted features are converted into a digital format for processing.

- Comparison. The extracted biometric data is compared with biometric templates stored in the system's database. The system uses algorithms to analyze the similarities and differences between the captured biometric data and the stored templates.

- Matching. The comparison results in a match score or a similarity score. If the match score meets a predefined threshold, the individual is authenticated. If the match score doesn't meet the threshold, the individual isn't authenticated.

- Decision. Based on the matching result, the system decides whether to grant or deny access to the individual.

- Feedback. The system provides feedback to the user indicating whether the authentication was successful. This feedback can be in the form of a green light for access granted or a red light for access denied.

- Logging. The system logs the authentication transaction, capturing details such as the time of authentication, the user's identity and the result of the authentication attempt. This data is useful for audit trails and security monitoring.
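The seven steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the feature vectors are made-up numbers standing in for sensor output, cosine similarity is just one possible comparison algorithm, and the 0.90 threshold, function names and log fields are all hypothetical.

```python
import math
from datetime import datetime, timezone

THRESHOLD = 0.90  # hypothetical similarity threshold

def extract_features(raw_sample):
    """Extraction: scale the raw readings into a unit-length feature vector."""
    norm = math.sqrt(sum(x * x for x in raw_sample))
    if norm == 0:
        raise ValueError("empty sample")
    return [x / norm for x in raw_sample]

def compare(features, template):
    """Comparison: cosine similarity of two unit-length vectors."""
    return sum(a * b for a, b in zip(features, template))

def authenticate(user_id, raw_sample, templates, audit_log):
    features = extract_features(raw_sample)        # Extraction (sample already captured)
    score = compare(features, templates[user_id])  # Comparison yields a match score
    granted = score >= THRESHOLD                   # Matching and decision
    feedback = "green" if granted else "red"       # Feedback
    audit_log.append({                             # Logging
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "score": round(score, 4),
        "granted": granted,
    })
    return granted, feedback

# Enrollment: the first capture becomes the stored template.
templates = {"alice": extract_features([0.9, 0.1, 0.4, 0.3])}
audit_log = []

print(authenticate("alice", [0.88, 0.12, 0.41, 0.29], templates, audit_log))  # (True, 'green')
print(authenticate("alice", [0.1, 0.9, 0.2, 0.7], templates, audit_log))      # (False, 'red')
```

Raising the threshold makes the system stricter, which rejects more impostors but also more genuine users whose readings vary from their template.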

Types of biometrics

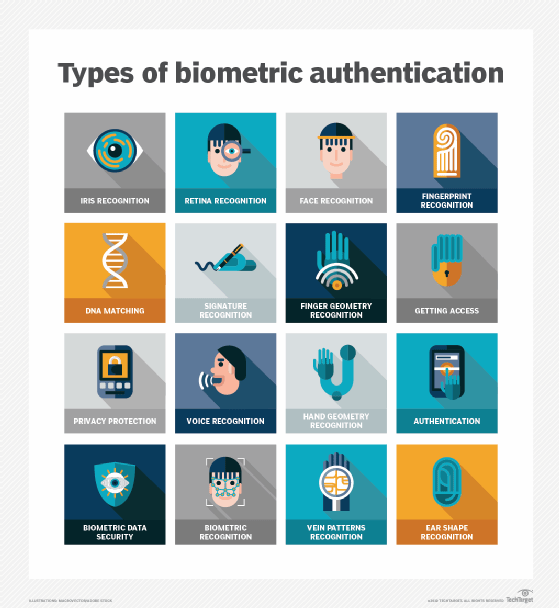

The two main types of biometric identifiers are physical characteristics and behavioral characteristics.

Physical identifiers relate to the physical makeup of the user being authenticated and include the following biometric recognition factors:

- Facial recognition.

- Fingerprints.

- Hand geometry (the size and position of fingers).

- Iris recognition.

- Vein recognition.

- Retina scanning.

- Voice recognition.

- DNA (deoxyribonucleic acid) matching.

- Digital signatures.

Behavioral identifiers include recognition of the following unique ways in which individuals act:

- Typing patterns.

- Mouse and finger movements.

- Website and social media engagement patterns.

- Walking gait.

- Other gestures.

Some of these behavioral identifiers provide continuous authentication instead of a single one-off authentication check. While behavioral identifiers are a newer method with lower reliability ratings, they have potential to grow alongside other improvements in biometric technology.
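As a rough illustration of continuous authentication, the Python sketch below re-checks a user's typing rhythm throughout a session rather than once at login. It assumes the only feature is the time between keystrokes in milliseconds and uses a simple mean-and-standard-deviation profile. The function names, the window size and the two-standard-deviation tolerance are hypothetical, and real keystroke-dynamics systems use far richer features.

```python
from statistics import mean, stdev

def build_profile(enrollment_intervals):
    """Summarize enrolled inter-key intervals (ms) as a mean and standard deviation."""
    return mean(enrollment_intervals), stdev(enrollment_intervals)

def window_is_consistent(profile, window, max_z=2.0):
    """True if the window's mean interval is within max_z standard deviations of the profile mean."""
    mu, sigma = profile
    return abs(mean(window) - mu) <= max_z * sigma

def monitor(profile, intervals, window_size=5):
    """Check each consecutive window of keystrokes instead of authenticating only once."""
    results = []
    for start in range(0, len(intervals) - window_size + 1, window_size):
        window = intervals[start:start + window_size]
        results.append(window_is_consistent(profile, window))
    return results

profile = build_profile([110, 120, 115, 125, 118, 122, 112, 119])

# The first five intervals resemble the enrolled rhythm; the next five are much slower.
session = [116, 121, 114, 123, 119, 210, 190, 230, 205, 220]
print(monitor(profile, session))  # [True, False]
```

A system built along these lines could respond to a failed window by asking for a stronger factor instead of ending the session outright.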

Biometric authentication can be used to access information on a device such as a smartphone, but there are other uses for biometrics. For example, biometric information can be held on a smart card, where a recognition system reads an individual's biometric information and compares it against the biometric information on the smart card.

Advantages and disadvantages of biometrics

Biometrics has advantages and disadvantages in terms of usability, security and cost. The advantages of the technology include the following:

- Biometric identification data is harder to fake or steal than passwords.

- It's easy and convenient to use.

- It's generally the same over the course of a user's life.

- It's nontransferable.

- It's efficient because templates take up little storage.

Biometrics also has its share of disadvantages:

- It's costly to get a system up and running.

- If the system fails to capture all the biometric data, it could fail to identify a user.

- Databases holding biometric data can be hacked.

- Errors such as false rejects and false accepts can happen.

- If a user gets injured, then a biometric authentication system might not work. For example, if a user burns their hand, a fingerprint scanner might not be able to identify them.

Examples of biometrics in use

In addition to its use in smartphones, biometric technology is used in other fields:

- Law enforcement. Biometrics is used in criminal identification systems, such as fingerprint and palm print authentication systems.

- Government. The Department of Homeland Security's Border Patrol unit uses biometrics for numerous detection, vetting and credentialing processes. U.S. Customs and Border Protection uses biometric systems for electronic passports, which store fingerprint data. It also uses facial recognition systems.

- Healthcare. Fingerprint ID is used in some national healthcare identity card systems.

- Airport security. Iris recognition and other biometrics are being used in this area.

However, not all organizations and programs opt in to using biometrics. For example, some justice systems refuse to use biometrics to avoid the risk of identification errors.

What are security and privacy issues of biometrics?

Biometric identifiers depend on the uniqueness of the factor being considered. For example, fingerprints are considered highly unique to each person. Fingerprint recognition, especially as implemented in Apple's Touch ID for iPhones, was the first widely used mass-market application of a biometric authentication factor.

Other biometric factors, such as retina and iris recognition or vein and voice scans, haven't been widely adopted. This is, in part, because there's less confidence in the uniqueness of some identifiers and because some factors are easier to spoof and use for digital identity theft and other malicious activities.

The stability of a biometric factor, or how permanent it is, also affects its acceptance. Fingerprints don't change over a lifetime, while the appearance of a facial image can change drastically with age, illness and other factors.

The most significant privacy issue of using biometrics is that physical attributes, such as fingerprints and retinal blood vessel patterns, are static and can't be modified or replaced. This is distinct from nonbiometric factors, including passwords and tokens, which can be replaced if they're breached or otherwise compromised. About 5.6 million sets of fingerprints were compromised in the U.S. Office of Personnel Management data breach that was disclosed in 2015, putting government agents at risk of being identified by the stolen fingerprints.

The increasing ubiquity of high-quality cameras, microphones and fingerprint readers in mobile devices means biometrics is a common way to authenticate users. For example, the FIDO (Fast Identity Online) Alliance has specified authentication standards that support two-factor authentication with biometric factors.

While the quality of biometric readers continues to improve, they can still produce false negatives, which occur when an authorized user isn't recognized or authenticated, as well as false positives, which occur when an unauthorized user is recognized and authenticated.
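The tradeoff between these two error types can be made concrete with a short Python sketch. The match scores below are invented for illustration, and the function name is hypothetical. The false accept rate is the share of impostor attempts that score at or above the threshold, and the false reject rate is the share of genuine attempts that score below it.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false accept rate, false reject rate) at a given match threshold."""
    false_rejects = sum(s < threshold for s in genuine_scores)    # authorized users turned away
    false_accepts = sum(s >= threshold for s in impostor_scores)  # unauthorized users let in
    far = false_accepts / len(impostor_scores)
    frr = false_rejects / len(genuine_scores)
    return far, frr

# Made-up match scores from genuine users and from impostors.
genuine = [0.95, 0.91, 0.88, 0.97, 0.79]
impostor = [0.40, 0.62, 0.85, 0.30, 0.55]

for threshold in (0.6, 0.8, 0.9):
    far, frr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold} false accepts={far:.0%} false rejects={frr:.0%}")
```

With this sample data, raising the threshold lowers the false accept rate and raises the false reject rate, which is why a threshold has to be chosen to suit the application's tolerance for each kind of error.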

Efforts to address data privacy and security concerns have expanded worldwide. Laws and regulations are constantly evolving to secure biometric data. For example, the General Data Protection Regulation (GDPR) protects the biometric data of individuals in the EU, classifying it as a special category of personal data when it's used to uniquely identify a person. As with other forms of personal data, the GDPR requires organizations to disclose exactly how and why biometric data is collected and to implement security measures to protect it from theft or misuse.

Is biometrics secure?

While high-quality cameras and other sensors are enabling the use of biometrics, they can also facilitate cyberattacks. Because people don't shield their faces, ears, hands, voice or gait, attacks are possible simply by capturing biometric data from people without their consent or knowledge.

An early attack on fingerprint biometric authentication was called the gummy bear hack. It dates to 2002, when Japanese researchers showed that an attacker could lift a latent fingerprint from a glossy surface and reproduce it in a gelatin-based confection. The capacitance of gelatin is similar to that of a human finger, so the gelatin copy could fool fingerprint scanners designed to detect capacitance.

Determined attackers can defeat other biometric factors. In 2015, Jan Krissler, also known as Starbug, a Chaos Computer Club biometric researcher, demonstrated a method for extracting enough data from a high-resolution photograph to defeat iris scanning authentication. In 2017, Krissler reported defeating the Samsung Galaxy S8 smartphone's iris scanner authentication scheme. Krissler had previously recreated a user's thumbprint from a high-resolution image to demonstrate that Apple's Touch ID fingerprinting authentication scheme was vulnerable.

After Apple released the iPhone X, it took researchers just two weeks to bypass Apple's Face ID facial recognition using a 3D-printed mask. Face ID can also be defeated by individuals related to the authenticated user, including children or siblings.

Biometric security concerns apply to the public sector as well. In November 2023, an Inspector General report found that the Department of Defense (DoD) had implemented biometric devices that were vulnerable to cyberattacks. Like other government departments, the DoD collects biometric data to authenticate personnel, but the report found two areas with issues: Devices used for biometric data collection weren't properly encrypted, and the DoD's biometrics policies didn't address encryption. In addition, those policies didn't include destruction or sanitization of data processes once devices were no longer in use. The department has since addressed these concerns.

The future of biometrics

A major biometrics technology trend is the addition of artificial intelligence (AI) and machine learning (ML) capabilities. For example, ML algorithms can analyze large volumes of biometric data to more accurately authenticate individuals. This can reduce ongoing problems with false positives and false negatives.

There's also a growing emphasis on data privacy laws and regulations. The GDPR, the Illinois Biometric Information Privacy Act and the European Union AI Act are all examples of how countries or states are taking the threats posed by biometric data breaches or misuse seriously. All three laws exist to ensure individuals' biometric data remains private and is used appropriately. The EU AI Act specifically limits how biometrics systems can use AI. More countries and states are likely to follow suit.


Michael Cobb also contributed to this article.