10 popular libraries to use for machine learning projects

Machine learning libraries expedite the development process by providing optimized algorithms, prebuilt models and other support. Learn about 10 widely used ML libraries.

Machine learning libraries offer developers and data scientists resources to build, deploy and train models that incorporate data sets to generate predictions and take specific actions. Models employ deep learning algorithms for image recognition, language processing, computer vision and data analytics. These capabilities become the basis for innovative technologies from smart robotics to AI.

Most programmers rely on libraries to develop applications for industries as diverse as manufacturing, cybersecurity, transportation, finance and healthcare. In this article, explore the evolution of ML and a survey of some of the most useful open source software (OSS) machine learning libraries available to developers.

Growth of ML libraries

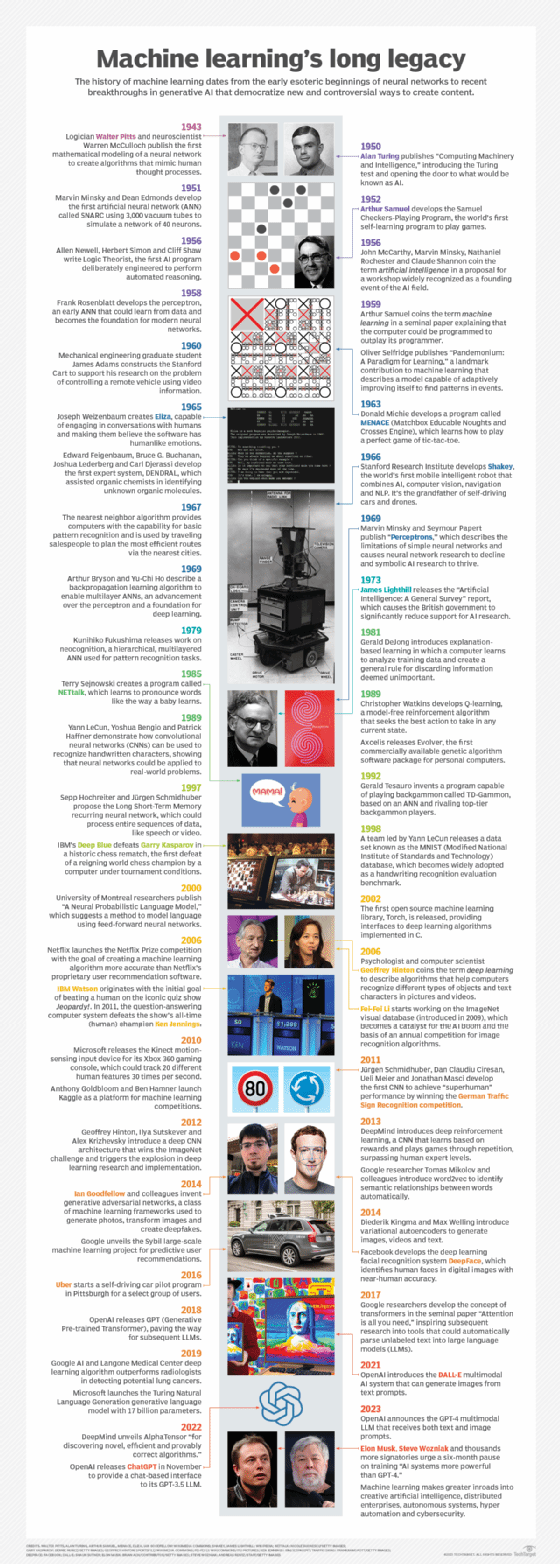

The use of algorithms and model training in machine learning was introduced in the 1950s. Applications at the time were minor. However, fundamental concepts that established the logic behind ML were proposed by a number of pioneering mathematicians and scientists, e.g., Alan Turing; Allen Newell and Herbert Simon; and Frank Rosenblatt. And ML gradually gained momentum over the decades as improvements in networking and compute performance enabled new innovations, such as natural language processing (NLP) and computer vision.

By the 1990s, developers using languages like Pascal, Fortran and Lisp could access rapidly growing ML libraries for tools to preprocess, train and monitor models.

What is the purpose of ML libraries?

Libraries, along with automation, helped eliminate complexity by providing prewritten code to accomplish multiple ML tasks. Today's libraries offer diverse tools -- i.e., code, algorithms, arrays, frameworks, etc. -- for builds and ML deployments. Machines rely on effective models to progressively learn, maturing autonomously without active mediation on the part of programmers. To that end, hundreds of different ML libraries exist that offer unique strengths and capabilities to simplify the implementation of complex algorithms and to test sophisticated models.

Getting the most out of ML libraries

In general, developers should have facility in more than one language, even though most ML libraries are written in C++. This versatility can improve outcomes by enabling them to choose the language best suited to different tasks. For example, by using Python, programmers can gain advantages, such as developing AI applications, working with GPUs or accelerating overall development times. On the other hand, coding in C++ can be more effective for certain projects, such as building small neural networks or boosting model performance.

Moreover, the business and compute problems that developers are trying to solve can also dictate the most effective language to use. Other efficient languages for ML programming include JavaScript, R, Julia, Go and Java, as well as the longtime stalwarts, C and C++.

Top OSS libraries

These 10 popular ML libraries provide key resources for designing, building and deploying effective models.

1. TensorFlow

Developed by Google, the TensorFlow open source library emphasizes deep learning -- a requirement for building neural networks, developing image recognition and creating NLP systems. Programmers can use the library's automatic differentiation to optimize model performance. Moreover, they can employ TensorFlow to improve functional gradient computations, which dictate the speed at which models can learn.

2. PyTorch

Developed by Meta, PyTorch streamlines model prototyping through the use of tensors -- i.e., multidimensional arrays -- that process data more efficiently. Tensors support automatic differentiation -- a critical prerequisite for training deep learning models for robotics, computer vision, NLP and a host of other applications. Moreover, programmers can use PyTorch's dynamic computation graph to debug and modify models in real time. Finally, the PyTorch forum community is exceptionally supportive of beginners.

3. Scikit-learn

Scikit-learn is ideal for understanding the basics of ML. Developers can learn the difference between supervised and unsupervised learning, understand linear and nonlinear model selection, and perform validation techniques. With its intuitive GUI, users can grasp the relationship between inputs and outputs, which define how data is represented, processed and transformed into predictions for consumer behavior, market trends or IT management.

4. Keras

Keras employs its seamless deep learning integration with TensorFlow to simplify model building and training. For developers working in Python, the library provides an effective interface that removes complexity for creating neural networks, as well as a simplified API, making ML development accessible to beginners.

5. Apache MXNet

In addition to supporting key ML languages -- Python, R, Scala, Julia, Matlab and JavaScript -- MXNet compiles to C++, enabling high-speed execution and overall reliability. Programmers can build convolutional neural networks (CNNs) that classify images into different predefined categories. They also gain the advantage of deploying their lightweight neural network models on low-powered devices, from desktop PCs to cloud servers.

6. Jax

Developed by Google and known for its speed, Jax offers just-in-time compilation for high performance, employs gradient descent computations for efficient model training and features dynamic scalability, making it well suited to large-scale ML operations. Moreover, developers and data scientists can use the power of hardware accelerators -- GPUs and Tensor Processing Units -- to speed up computations in deep learning models.

7. Hugging Face Transformers library

Hugging Face is known as the GitHub of ML, where developers and data scientists can build, train and deploy ML models. Programmers employ the huggingface_hub Python library client, a subset of the Hugging Face Transformers library, to access development support, numerous models, data sets and demos, as well as support for dozens of additional libraries. As an open source public repository, it’s continually growing with thousands of developers iterating and improving code. Not limited to language models, Hugging Face also offers computer vision, audio and image models.

8. ML.NET

Developed by Microsoft, the ML.NET open source framework features full integration with the .NET ecosystem and provides native tools and APIs for building and deploying ML models. It offers diverse functionality for classification, regression, clustering and anomaly detection. ML.NET employs Open Neural Network Exchange as a common format for transferring models between different ML formats. While deployed in diverse industries -- healthcare, finance, e-commerce -- ML.NET can be challenging for new developers with growing but limited community support.

9. Shogun

For data scientists, programmers and students, Shogun is both accessible and user-friendly. Designed to handle especially large data sets, this open source ML library offers a combination of ML algorithms, data structures and versatile tools for prototyping data pipelines and building models. It automatically generates build interfaces depending on the chosen development language, including Python, Java, Ruby, C#, R, Lua and others. Since it was first introduced in 1999, Shogun has featured an active and supportive community.

10. Pandas

Pandas is ideal for creating DataFrame, a data structure similar to a spreadsheet that provides flexibility when storing and working with data. The popular library is also useful for exploratory data analysis, a critical step for ensuring reliable ML implementations that can deliver required insights. Built on top of Python, knowledgeable developers can easily access resources for grouping, combining and filtering a wide range of data.

Kerry Doyle writes about technology for a variety of publications and platforms. His current focus is on issues relevant to IT and enterprise leaders across a range of topics, from nanotech and cloud to distributed services and AI.