What are machine learning algorithms? 12 types explained

What are machine learning algorithms?

A machine learning algorithm is the method by which the AI system conducts its task, generally predicting output values from given input data. The two main processes involved with machine learning (ML) algorithms are classification and regression.

An ML algorithm is a set of mathematical processes or techniques by which an artificial intelligence (AI) system conducts its tasks. These tasks include gleaning important insights, patterns and predictions about the future from input data the algorithm is trained on. A data science professional feeds an ML algorithm training data so it can learn from that data to enhance its decision-making capabilities and produce desired outputs.

ML is a subset of AI and computer science. Its use has expanded in recent years along with other areas of AI, such as deep learning algorithms used for big data and natural language processing for speech recognition. What makes ML algorithms important is their ability to sift through thousands of data points to produce data analysis outputs more efficiently than humans.

How ML algorithms work

A data scientist or analyst feeds data sets to an ML algorithm and directs it to examine specific variables within them to identify patterns or make predictions. The idea is for the algorithm to learn over time and on its own. The more data it analyzes, the better it becomes at making accurate predictions without being explicitly programmed to do so, just like humans would.

This training data is also known as input data. The data classification or predictions produced by the algorithm are called outputs. Developers and data experts who build ML models must select the right algorithms depending on what tasks they wish to achieve. For example, certain algorithms lend themselves to classification tasks that would be suitable for disease diagnoses in the medical field. Others are ideal for predictions required in stock trading and financial forecasting.

Supervised vs. unsupervised algorithms

Most ML algorithms are broadly categorized as being either supervised or unsupervised. The fundamental difference between supervised and unsupervised learning algorithms is how they deal with data. Two other categories are semi-supervised and reinforcement algorithms.

Supervised algorithms

These algorithms deal with clearly labeled data, with direct oversight by a data scientist. They have both input data and desired output data provided for them through labeling.

Supervised algorithms typically serve two purposes: classification or regression. In classification problems, an algorithm can accurately assign different data into specific categories – such as dogs and cats -- which becomes feasible with labeled data.

There are many real-world use cases for supervised algorithms, including healthcare and medical diagnoses, as well as image recognition. In both cases, classification of data is needed.

In regression problems, an algorithm is used to predict the probability of an event taking place – known as the dependent variable -- based on prior insights and observations from training data -- the independent variables. A use case for regression algorithms might include time series forecasting used in sales.

Unsupervised data

Unsupervised algorithms deal with unclassified and unlabeled data. As a result, they operate differently from supervised algorithms. For example, clustering algorithms are a type of unsupervised algorithm used to group unsorted data according to similarities and differences, given the lack of labels.

Unsupervised algorithms can also be used to identify associations, or interesting connections and relationships, among elements in a data set. For example, these algorithms can infer that one group of individuals who buy a certain product also buy certain other products.

Semi-supervised algorithms

However, many machine learning techniques can be more accurately described as semi-supervised, where both labeled and unlabeled data are used.

Reinforcement algorithms

Reinforcement algorithms – which use reinforcement learning techniques-- are considered a fourth category. They're unique approach is based on rewarding desired behaviors and punishing undesired ones to direct the entity being trained using rewards and penalties.

Types of machine learning algorithms

There are several types of machine learning algorithms, including the following:

1. Linear regression

A linear regression algorithm is a supervised algorithm used to predict continuous numerical values that fluctuate or change over time. It can learn to accurately predict variables like age or sales numbers over a period of time.

2. Logistic regression

In predictive analytics, a machine learning algorithm is typically part of a predictive modeling that uses previous insights and observations to predict the probability of future events. Logistic regressions are also supervised algorithms that focus on binary classifications as outcomes, such as "yes" or "no."

3. Decision tree

This is a supervised learning algorithm used for both classification and regression problems. Decision trees divide data sets into different subsets using a series of questions or conditions that determine which subset each data element belongs in. When mapped out, data appears to be divided into branches, hence the use of the word tree.

4. Support vector machine

SVMs are used for classification, regression and anomaly detection in data. An SVM is best applied to binary classifications, where elements from a data set are classified into two distinct groups.

5. Naïve Bayes

This algorithm performs classifications and makes predictions. However, it's one of the simplest supervised learning algorithms and assumes that all features in the input data are independent of one another; one data point won't affect another when making predictions.

6. Random forest

These algorithms combine multiple unrelated decision trees of data, organizing and labeling data using regression and classification methods.

7. K-means

This unsupervised learning algorithm identifies groups of data within unlabeled data sets. It groups the unlabeled data into different clusters; it's one of the most popular clustering algorithms.

8. K-nearest neighbors

KNNs classify data elements through proximity or similarity. An existing data group that most closely resembles a new data element is the one that element will be grouped with.

9. Artificial neural networks

ANNs, or simply neural networks, are groups of algorithms that recognize patterns in input data using building blocks called neurons. These neurons loosely resemble neurons in the human brain. They're trained and modified over time through supervised training methods.

10. Dimensionality reduction

When a data set has a high number of features, it's said to have high dimensionality. Dimensionality reduction refers to stripping down the number of features so that only the most meaningful insights or information remain. An example of this method is principal component analysis.

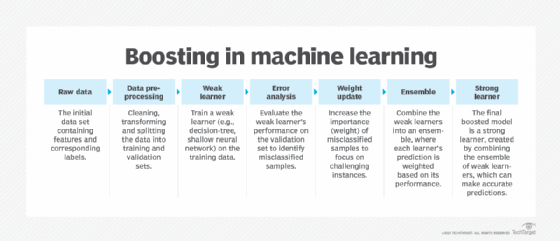

11. Gradient boosting

This optimization algorithm reduces a neural network's cost function, which is a measure of the size of the error the network produces when its actual output deviates from its intended output.

12. AdaBoost

Also called adaptive boosting, this supervised learning technique boosts the performance of an underperforming ML classification or regression algorithm by combining it with weaker ones to form a stronger algorithm that produces fewer errors.

Data scientists must understand data preparation as a precursor to feeding data sets to machine learning models for analysis. Learn the six steps involved in the data preparation process.