What is supervised learning?

Supervised learning is a subcategory of machine learning (ML) and artificial intelligence (AI) where a computer algorithm is trained on input data that has been labeled for a particular output. The model is trained until it can detect the underlying patterns and relationships between the input data and the output labels, enabling it to yield accurate labeling results when presented with never-before-seen data.

In supervised learning, the aim is to make sense of data within the context of a specific question. Supervised learning is good at regression and classification problems, such as determining what category a news article belongs to or predicting the volume of sales for a given future date. Organizations can use supervised learning in processes such as anomaly detection, fraud detection, image classification, risk assessment and spam filtering.

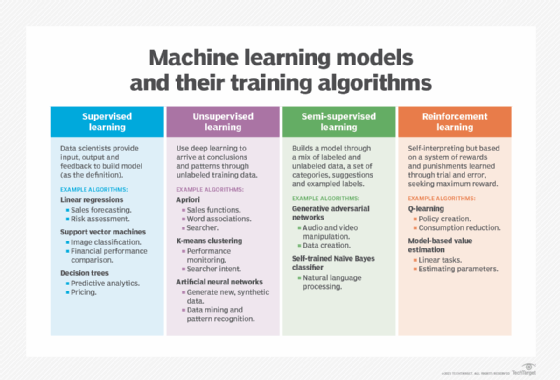

Unsupervised learning stands in contrast to supervised learning. In this approach, the algorithm is presented with unlabeled data and is designed to detect patterns or similarities on its own, a process described in more detail below.

How does supervised learning work?

Like all machine learning algorithms, supervised learning is based on training. During its training phase, the system is fed labeled data sets, which tell the system which output value is associated with each specific input. The trained model is then presented with test data. This is data that has been labeled, but the labels haven't been revealed to the algorithm. The aim of the test data is to measure how accurately the algorithm performs on data it hasn't seen before.
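To make the test step concrete, here is a minimal Python sketch. The "trained model" is a hypothetical threshold rule on a single made-up feature (a count of suspicious words in a message). The point is that the model receives only the test inputs, and the hidden labels are used afterward to score its predictions.

```python
def predict(suspicious_word_count: int) -> str:
    """Hypothetical trained model: flag a message as spam if it
    contains more than 3 suspicious words."""
    return "spam" if suspicious_word_count > 3 else "not spam"

# Test set: inputs and their labels are kept separately.
test_inputs = [0, 5, 2, 7, 4, 1]
hidden_labels = ["not spam", "spam", "not spam", "spam", "not spam", "not spam"]

# The model sees only the inputs.
predictions = [predict(x) for x in test_inputs]

# The labels are revealed only now, to measure accuracy.
correct = sum(p == y for p, y in zip(predictions, hidden_labels))
accuracy = correct / len(hidden_labels)
print(f"Test accuracy: {accuracy:.2f}")  # 0.83 (5 of 6 correct)
```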

The basic steps for setting up supervised learning include the following:

- Determine the type of training data that will be used as a training set.

- Collect labeled training data.

- Divide the labeled data into training, validation and test sets.

- Choose an algorithm to use for the ML model.

- Run the algorithm with the training data set.

- Evaluate the model's performance on the test set using metrics such as accuracy, the F1 score and logarithmic loss (log loss). A model that predicts the correct outputs for data it hasn't seen is considered accurate.

- Regularly monitor the model's performance and update it as necessary. The model might require retraining with new data to ensure its accuracy and relevance.
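Two of the steps above, splitting the labeled data and scoring the model with F1 and log loss, can be sketched in plain Python as follows. The 60/20/20 split fractions, the fixed random seed and the toy labels are assumptions chosen for illustration; libraries such as scikit-learn provide tested versions of these utilities.

```python
import math
import random

def split(rows, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle labeled examples, then divide them into training,
    validation and test sets (the fractions are assumptions)."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = int(len(rows) * train_frac)
    n_val = int(len(rows) * val_frac)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]

def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for binary labels (1 = positive)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-likelihood of the true labels; confident
    wrong predictions are penalized heavily."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # keep log() finite
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)

data = [(i, i % 2) for i in range(10)]  # placeholder labeled examples
train, val, test = split(data)
print(len(train), len(val), len(test))             # 6 2 2
print(f1_score([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))  # precision = recall = 2/3
print(round(log_loss([1, 0], [0.9, 0.2]), 4))      # 0.1643
```

The validation set is used to compare candidate algorithms or settings, while the test set is held back until the final evaluation.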

As an example, an algorithm could be trained to identify images of cats and dogs by feeding it an ample amount of training data consisting of different labeled images of cats and dogs. This training data would be a subset of photos from a much larger data set of images. After training, the model should be capable of predicting whether a given image shows a cat or a dog. Another set of images can then be run through the algorithm to validate the model.
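A real image classifier would learn from pixels, typically with a neural network. The sketch below sidesteps that by assuming each photo has already been reduced to two hypothetical numeric features (body weight in kg and snout length in cm). It uses a nearest-centroid classifier, a deliberately simple stand-in, to show the train-then-validate flow.

```python
# Hypothetical features extracted from each labeled photo:
# (body weight in kg, snout length in cm).
training_set = [
    ((4.0, 3.0), "cat"), ((3.5, 2.5), "cat"), ((5.0, 3.5), "cat"),
    ((20.0, 10.0), "dog"), ((15.0, 9.0), "dog"), ((30.0, 12.0), "dog"),
]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit_centroids(examples):
    """Training: average the feature vectors of each class."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Prediction: return the label whose class average is closest."""
    return min(centroids, key=lambda label: squared_distance(features, centroids[label]))

centroids = fit_centroids(training_set)

# Validation: images the model did not see during training.
validation_set = [((4.5, 3.2), "cat"), ((25.0, 11.0), "dog")]
for features, label in validation_set:
    print(features, "->", predict(centroids, features), "| expected:", label)
```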

How does supervised learning work in neural networks?

In neural network algorithms, the supervised learning process is improved by constantly measuring the resulting outputs of the model and fine-tuning the system to get closer to its target accuracy. The level of accuracy obtainable depends on two things: the available labeled data and the algorithm that's used. In addition, the following factors affect the process:

- Training data must be balanced and cleaned. Garbage or duplicate data skews the AI's understanding; therefore, data scientists must be careful with the data the model is trained on.

- The diversity of the data determines how well the AI performs when presented with new cases; if there aren't enough samples in the training data set, the model falters and fails to yield reliable answers.

- High accuracy, paradoxically, isn't necessarily a good sign. It could also mean the model is suffering from overfitting, i.e., it's overtuned to its particular training data set. Such a model might perform well in test scenarios that resemble its training data but fail miserably when presented with real-world challenges. To detect overfitting, it's important that the test data is different from the training data, so that the evaluation shows whether the model's inference generalizes rather than whether it simply recalls answers from its previous experience.

- The algorithm, on the other hand, determines how that data can be used. For instance, deep learning models with billions of parameters can learn complex patterns from their training data and reach very high levels of accuracy, as demonstrated by large language models such as OpenAI's GPT-4.
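The measure-and-fine-tune loop described above can be sketched with a single artificial neuron (a sigmoid unit, which is equivalent to logistic regression) trained by gradient descent in plain Python. The data, learning rate and number of epochs are illustrative assumptions; real networks stack many such units and are usually built with frameworks such as PyTorch or TensorFlow.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical labeled data: one input feature per example, binary target.
xs = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 1, 1, 1]

def mean_loss(w: float, b: float) -> float:
    """Average cross-entropy between the neuron's outputs and the labels."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = min(max(sigmoid(w * x + b), 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)

w, b = 0.0, 0.0  # parameters start untrained
learning_rate = 0.5
losses = [mean_loss(w, b)]

for epoch in range(500):
    # Measure: compare each output with its label and accumulate the gradient.
    grad_w = grad_b = 0.0
    for x, y in zip(xs, ys):
        error = sigmoid(w * x + b) - y
        grad_w += error * x
        grad_b += error
    # Fine-tune: move the parameters a small step against the gradient.
    w -= learning_rate * grad_w / len(xs)
    b -= learning_rate * grad_b / len(xs)
    losses.append(mean_loss(w, b))

print(f"loss before training: {losses[0]:.3f}, after: {losses[-1]:.3f}")
```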

Types of supervised learning

Apart from neural networks, there are many other supervised learning algorithms. These algorithms primarily generate two kinds of results: classification and regression.

Classification models

A classification algorithm aims to sort inputs into a given number of categories -- or classes -- based on the labeled data on which it was trained. Classification algorithms can be used for binary classifications, such as classifying an image as a dog or a cat, filtering email into spam or not spam, and categorizing customer feedback as positive or negative.

Examples of classification ML techniques include the following:

- A decision tree repeatedly splits data points into smaller groups based on the values of their features, moving from the tree trunk to branches and then to leaves, creating smaller categories within categories.

- Logistic regression analyzes independent variables to estimate the probability of a binary outcome that falls into one of two categories.

- A random forest is a collection of decision trees whose individual predictions are combined into a single result. It generalizes better than a single decision tree but is less interpretable.

- A support vector machine finds a line, or in higher dimensions a hyperplane, that separates the data in a particular set into specific classes during model training while maximizing the margin between the classes. These algorithms can be used to compare relative financial performance, value and investment gains.

- Naive Bayes is a widely used classification algorithm for tasks involving text classification and large volumes of data.
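As an example of the last technique, here is a minimal multinomial naive Bayes spam filter in plain Python. The four training messages are hypothetical, "ham" is the conventional label for non-spam, and add-one (Laplace) smoothing is assumed so that a word unseen in one class doesn't drive that class's probability to zero.

```python
import math
from collections import Counter

# Hypothetical labeled training messages.
train = [
    ("win cash prize now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]

def fit(examples):
    """Count words per class and documents per class."""
    word_counts = {}       # label -> Counter of words
    doc_counts = Counter()  # label -> number of messages
    for text, label in examples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Pick the class with the highest log P(class) + sum of log P(word | class)."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        score = math.log(doc_counts[label] / total_docs)
        denominator = sum(counts.values()) + len(vocab)  # add-one smoothing
        for word in text.split():
            score += math.log((counts[word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = fit(train)
print(predict(model, "free cash"))         # spam
print(predict(model, "agenda for lunch"))  # ham
```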

Regression models

Regression tasks are different, as they expect the model to predict a continuous numerical value from the input data. Examples of regression tasks in ML include predicting real estate prices based on ZIP code, predicting click rates in online ads in relation to time of day and determining how much customers would be willing to pay for a certain product based on their age.

Algorithms commonly used for supervised regression include the following:

- Bayesian methods, such as Bayesian linear regression, analyze statistical models while incorporating prior knowledge about model parameters or the model itself.

- Linear regression predicts the value of a variable based on the values of one or more other variables, assuming the relationship between them is linear.

- Nonlinear regression is used when the output can't be modeled as a linear function of the inputs. In this case, the data points share a nonlinear relationship; for example, the data might follow a curved trend.

- A regression tree is a decision tree whose target variable takes continuous values, so each leaf predicts a number rather than a class.

- Polynomial regression enables modeling more complex relationships between the input features and the output variable by fitting a polynomial equation to the data.
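Linear regression with a single input variable has a closed-form least-squares solution: the slope is the covariance of the input and output divided by the variance of the input, and the intercept makes the line pass through the two means. The sketch below applies it to hypothetical data.

```python
def fit_linear(xs, ys):
    """Ordinary least squares for one input variable: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: an input feature and a continuous target.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.9, 5.1, 7.0, 9.2, 10.8]

slope, intercept = fit_linear(xs, ys)
prediction = slope * 6.0 + intercept  # predict the target for an unseen input
print(f"y = {slope:.2f}x + {intercept:.2f}; prediction for x = 6: {prediction:.2f}")
# y = 1.99x + 1.03; prediction for x = 6: 12.97
```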

When choosing a supervised learning algorithm, there are a few considerations. The first is the bias and variance of the algorithm, as there's a fine line between a model that's flexible enough and one that's too flexible. Another is the complexity of the model or function that the system is trying to learn. The heterogeneity, accuracy, redundancy and linearity of the data should also be analyzed before choosing an algorithm.

Supervised vs. unsupervised learning

The chief difference between supervised and unsupervised learning is in how the algorithm learns.

In unsupervised learning, the algorithm is given unlabeled data as a training set. Unlike supervised learning, there are no correct output values; the algorithm determines the patterns and similarities within the data instead of relating it to some external measurement. In other words, algorithms can function freely to learn more about the data and discover interesting or unexpected findings that human beings weren't looking for.

Unsupervised learning is commonly used for clustering -- the act of uncovering groups within data -- and association -- the act of discovering rules that describe the data.
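Clustering can be illustrated with k-means, one widely used clustering algorithm. In this minimal plain-Python sketch, the one-dimensional data is hypothetical and the naive initialization (the first k points) is an assumption; production implementations typically use smarter seeding such as k-means++. No labels are involved at any step.

```python
def k_means(points, k, iterations=100):
    """Group 1-D points into k clusters without using any labels."""
    centroids = list(points[:k])  # naive initialization: first k points
    clusters = []
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new_centroids = [sum(c) / len(c) if c else centroids[i]
                         for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return centroids, clusters

# Hypothetical unlabeled measurements.
points = [1.0, 1.2, 0.8, 8.0, 8.3, 7.9]
centroids, clusters = k_means(points, k=2)
print(clusters)  # [[1.0, 1.2, 0.8], [8.0, 8.3, 7.9]]
```

The algorithm finds the two groups, but, as in the cats-and-dogs example, it has no way to name them.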

Because the ML model works on its own to discover patterns in data, the model might not make the same classifications as in supervised learning. In the cats-and-dogs example, the unsupervised learning model might mark the differences, similarities and patterns between cats and dogs, but can't label them as cats or dogs.

However, it's important to note that both approaches offer specific advantages and are frequently used together to optimize output. For example, unsupervised learning can help preprocess data or identify features that can be used in supervised learning models.

Benefits and limitations of supervised learning

Supervised learning models have some advantages over the unsupervised approach, but they also have limitations. The benefits of supervised learning include the following:

- Supervised learning systems are more likely to make judgments that humans can relate to because humans have provided the basis for decisions.

- Performance can be optimized against clear criteria because the labels encode human expertise about what the correct outputs are.

- It can perform classification and regression tasks.

- Users control the number of classes used in the training data.

- Models can make predictive outputs based on previous experience.

- The classes of objects are labeled in exact terms.

- It's suitable for tasks with clear outcomes and well-defined target variables because it involves training a model on data where both the inputs and the corresponding outcomes or labels are known.

Limitations of supervised learning include the following:

- Supervised learning systems trained on a fixed set of categories have trouble dealing with new information. If a system with categories for cats and dogs were presented with new data -- say, a zebra -- it would have to incorrectly lump the zebra into one category or the other. An unsupervised system, by contrast, might not know what the zebra is, but it could recognize that the zebra belongs to a separate category.

- Supervised learning also typically requires large amounts of correctly labeled data to reach acceptable performance levels, and such data might not always be available. Unsupervised learning doesn't suffer from this problem because it works with unlabeled data.

- Supervised models can be time-consuming to build, as they must be trained before they can be used.

- Supervised learning algorithms can't learn independently and require human intervention to validate the output variable.

Semisupervised learning

In cases where supervised learning is needed but there's a lack of quality labeled data, semisupervised learning can be the appropriate learning method. This learning model sits between supervised and unsupervised learning; it accepts partially labeled data, in which typically most of the data lacks labels.

The following are some key benefits of semisupervised learning:

- Semisupervised learning is helpful when there's a large amount of unlabeled data available, but labeling all of it is too expensive or difficult. Semisupervised learning determines the correlations between the data points -- just as unsupervised learning does -- and then uses the labeled data to mark those data points. Finally, the entire model is trained based on the newly applied labels.

- Semisupervised learning can yield accurate results and applies to many real-world problems where the small amount of labeled data would prevent supervised learning algorithms from functioning properly. As a rule of thumb, a data set with at least 25% labeled data is suitable for semisupervised learning. Facial recognition, for instance, is ideal for semisupervised learning; the vast number of images of different people is clustered by similarity and then made sense of with a labeled picture, giving identity to the clustered photos.
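The cluster-then-label procedure described above can be sketched in plain Python. Everything here is an illustrative assumption: the one-dimensional data, the labels "low" and "high", k-means as the clustering step and a nearest-centroid classifier as the final model trained on the newly applied labels.

```python
from collections import Counter

def k_means_1d(points, k, iterations=100):
    """Cluster 1-D points; returns a cluster index for each point."""
    centroids = list(points[:k])  # naive initialization: first k points
    assignment = [0] * len(points)
    for _ in range(iterations):
        assignment = [min(range(k), key=lambda i: abs(p - centroids[i])) for p in points]
        new = []
        for i in range(k):
            members = [p for p, a in zip(points, assignment) if a == i]
            new.append(sum(members) / len(members) if members else centroids[i])
        if new == centroids:
            break
        centroids = new
    return assignment

points = [1.0, 1.1, 0.9, 1.3, 5.0, 5.2, 4.8, 5.1]
known_labels = {0: "low", 4: "high"}  # only 2 of the 8 points are labeled

# Step 1: find structure in all of the data, ignoring labels.
assignment = k_means_1d(points, k=2)

# Step 2: name each cluster after the majority label of its labeled members.
cluster_label = {}
for cluster in set(assignment):
    votes = Counter(lbl for idx, lbl in known_labels.items() if assignment[idx] == cluster)
    if votes:
        cluster_label[cluster] = votes.most_common(1)[0][0]

# Step 3: propagate those labels to the unlabeled points.
pseudo_labels = [cluster_label.get(a) for a in assignment]

# Step 4: train a simple nearest-centroid classifier on the newly labeled data.
labeled = [(x, y) for x, y in zip(points, pseudo_labels) if y is not None]
means = {}
for label in {y for _, y in labeled}:
    values = [x for x, y in labeled if y == label]
    means[label] = sum(values) / len(values)

def classify(x):
    return min(means, key=lambda label: abs(x - means[label]))

print(pseudo_labels)
print(classify(1.2), classify(4.9))  # low high
```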

Examples of semisupervised learning include text classification, image classification and anomaly detection.

Key use cases and examples of supervised learning

Supervised learning has many use cases across various industries. One possible use case for supervised learning is news categorization. One approach is to determine which category each piece of news belongs to, such as business, finance, technology or sports. To solve this problem, a supervised model would be the best fit. Humans would present the model with various news articles and their categories, and have the model learn what kind of news belongs to each category. This way, the model becomes capable of recognizing the news category of any article it looks at based on its previous training experience.

However, humans might also conclude that classifying news based on the predetermined categories isn't sufficiently informative or flexible, as some news might talk about climate change technologies or the workforce problems in an industry. There are billions of news articles out there, and separating them into 40 or 50 categories might be an oversimplification. Instead, a better approach could be to find the similarities between the news articles and group the news accordingly. That would mean looking at news clusters instead, where similar articles would be grouped together, and there are no specific categories.

Other common use cases of supervised learning include the following:

- Predictive analytics. Supervised learning is used extensively in predictive analytics, as models can be trained on past data with known outcomes to make predictions on previously unseen data.

- Regression analysis. In regression analysis, supervised learning models predict a continuous output variable from one or more input variables. This approach is commonly used for tasks such as forecasting stock prices and estimating salaries based on various factors.

- Classification tasks. One of the main use cases of supervised learning is in classification tasks. Classification predicts which categories or classes new data falls into based on predefined categories or classes. Spam detection in emails, classifying images and identifying objects are a few examples of classification jobs.

- Fraud detection and risk management. The financial industry uses supervised learning for portfolio management, fraud detection and risk management. For example, it's used in banking fraud detection for identifying unusual activity and questionable online transactions that require more extensive research.

- Personalized recommendations. E-commerce and streaming platforms use supervised learning to provide users with personalized recommendations based on their past interactions and interests.

- Healthcare tasks. Supervised learning is applied in healthcare for tasks such as disease diagnosis, patient outcome prediction, and personalized treatment plans and recommendations based on patient data.

- Autonomous vehicles. Supervised learning is used in the automotive industry for autonomous vehicle functions. For example, data from vehicle-to-vehicle and vehicle-to-infrastructure communications is used to assess road conditions, traffic patterns and potential hazards.

- Speech recognition. In the context of speech recognition, supervised learning is used to understand and process human speech, integrating grammar, syntax, and the structure of audio and voice signals to comprehend spoken language. Virtual assistants such as Siri and Alexa, as well as many transcription services, are powered by supervised learning.

- Customer sentiment analysis. Organizations use supervised ML algorithms to extract and identify relevant information -- such as context, emotion and intent -- from large volumes of data for customer sentiment analysis. This can help organizations gain a better understanding of customer interactions and improve brand engagement efforts.

- Credit scoring. Supervised learning is employed to assess the creditworthiness of loan applicants. A labeled data set, which includes historical information on past applicants such as their credit history, income, employment status and other relevant factors, is used to train the ML algorithm.
