
11 emerging technologies to watch in 2026

Technology never stands still, and 2026 could see it go full sprint. See our picks for emerging and developing technologies and tell us what tech your organization is watching.

In 2026, several new, emerging and evolving technologies could transform industries -- and society -- in ways that are hard to predict.

Here are our picks of emerging technologies to watch in 2026. Some are new and waiting for the opportunity to make their mark. Others are more familiar and could evolve in the coming year. Read on and let us know in our survey what tech you're watching in 2026.

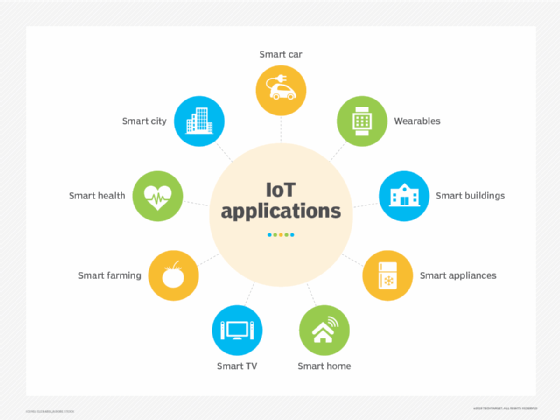

1. Advanced IoT (AIoT)

The internet of things and its proliferation of devices have brought real-world monitoring and control to every industry, including manufacturing, logistics, retail and healthcare. IoT produces enormous volumes of real-time data and is a foundational technology for ML models and AI systems.

AIoT, which adds AI to IoT devices and networks, promises tighter integration of data collection, processing and communication. It creates intelligent, autonomous systems that can share data, make decisions and learn independently to solve business problems. This evolution requires advancements in several key elements, including the following:

- Advanced devices. AIoT devices incorporate sensors and actuators that collect data and execute physical actions across various environments. These devices will enhance data quality and support on-device analytics.

- Advanced connectivity. AIoT devices will support high-performance Wi-Fi and cellular 5G and 6G connectivity options for more bandwidth with lower latency and power demands. These options include reduced capability (RedCap) 5G, which gives up 5G's peak speeds in exchange for lower device complexity and power consumption. According to research from Omdia, a division of Informa TechTarget, 84% of surveyed enterprises that use IoT devices are exploring RedCap, with 41% conducting trials and 32% planning to introduce the technology in the next two years.

- Advanced processing. AIoT can offer better support for edge and cloud computing. On-device processing brings edge computing to the IoT device itself. If a task requires more computing power, the AIoT device can offload data to an edge gateway for nearby processing.

- Advanced AI and ML. A central tenet of AIoT is its integration with ML and AI, whether that's on-device, in an edge gateway or in the cloud. The application of AI lets devices analyze massive data sets to determine patterns, spot anomalies, make predictions, enable device autonomy and learn.

- Advanced security. IoT data is often sensitive and subject to data privacy and security regulations; consider data collected from a patient's wearable device. AIoT will support strong security features like authentication, encryption and network security.
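On-device analytics and anomaly spotting, as described in the list above, can be illustrated with a minimal sketch. It assumes a single numeric sensor stream and uses a rolling z-score, one simple detector among many; `RollingDetector` and `forward_to_gateway` are hypothetical names, and a production AIoT device would more likely run a trained model.

```python
from collections import deque
import math


class RollingDetector:
    """Flags readings that deviate sharply from a recent window (rolling z-score)."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.window = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.window) >= 2:
            mean = sum(self.window) / len(self.window)
            var = sum((x - mean) ** 2 for x in self.window) / (len(self.window) - 1)
            std = math.sqrt(var)
            if std > 0 and abs(value - mean) / std > self.threshold:
                is_anomaly = True
        # Only normal readings extend the baseline, so one spike can't mask the next.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


def forward_to_gateway(readings, detector):
    """Hypothetical edge policy: analyze locally, send only anomalies upstream."""
    return [(i, v) for i, v in enumerate(readings) if detector.update(v)]
```

Feeding the temperature readings `[20.0, 20.1, 19.9, 20.2, 20.0, 35.0, 20.1]` through `forward_to_gateway` returns `[(5, 35.0)]`: only the spike leaves the device, which is the bandwidth and privacy benefit of processing at the edge.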

2. Advanced robotics

Robots have been a foundation of modern manufacturing for decades. In 2026, robotics will advance as AI and sensory technologies blend. This will result in more sophisticated and dexterous robotic devices for logistics, medicine, manufacturing and defense.

Advanced robotics goes beyond simple preset tasks and pathways, such as moving and combining parts from one conveyor to another. These devices use ML, AI and enhanced sensors to create more intelligent and versatile machines capable of perceiving, adapting, learning and performing tasks with high autonomy within complex and changing environments. Key components of an advanced robotics system include the following:

- Advanced dexterity. Advanced robots require more delicate and multifaceted mechanical systems. An advanced medical robot might provide hospital workers with numerous arms equipped with sensors and tipped with dexterous appendages such as precision medical tools or even human-like hands.

- Advanced mobility. Autonomous mobility systems let robots navigate and move within dynamic environments using sensors and AI for mapping, obstacle avoidance and positioning.

- Advanced sensors. Advanced robots use a range of precision sensors to collect data. These include cameras, gyroscopes, accelerometers, light detection and ranging (LiDAR), ultrasonic sensors, force and torque sensors, and environmental sensors for factors like light, temperature and pressure.

- Advanced ML and AI. ML and AI will enable robots to process large volumes of data, make decisions, solve problems and adapt in real time.
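Mapping and obstacle avoidance, mentioned under advanced mobility, often come down to planning a route through an occupancy map. The sketch below assumes a toy 2D grid in which `1` marks an obstacle and uses breadth-first search, a simple stand-in for the planners (such as A*) that real robots run on far richer, continuously updated maps; `plan_path` is a hypothetical name.

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal that avoids obstacle cells (1)."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through predecessors to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal unreachable


# A wall blocks the direct route, so the robot detours around it.
warehouse = [[0, 0, 0],
             [1, 1, 0],
             [0, 0, 0]]
print(plan_path(warehouse, (0, 0), (2, 0)))
```

Breadth-first search guarantees the fewest grid moves when every step costs the same; planners such as A* add a distance heuristic to search large maps faster.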

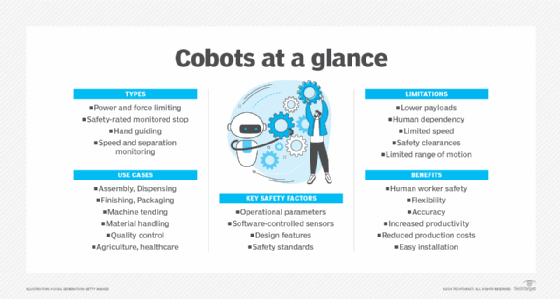

Robotics has seen steady growth over the last five years. A StartUs Insights report found the global robotics market has been growing at a modest 2.14% yearly and could reach $88.3 billion in 2026. One of the most impressive trends is the evolution of cobots, collaborative robots deployed in manufacturing, logistics and assembly environments that provide versatile automation and human-machine collaboration.

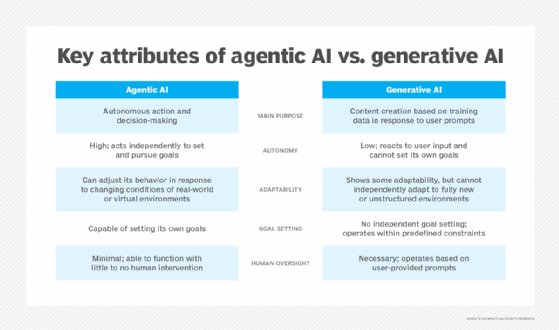

3. Agentic and edge AI

AI agents independently accomplish complex tasks by understanding context, reasoning and setting goals. They break complex goals into discrete tasks or subtasks, execute those tasks and learn from the outcomes, with little or no human intervention.
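The decompose, execute and learn cycle can be sketched as a simple loop. This is an illustration under stated assumptions, not any agent framework's API: the planner is a hard-coded stand-in for what would usually be an LLM call, "learning" is reduced to reacting to each outcome and keeping a log, and `PLANS`, `make_tools` and `run_agent` are hypothetical names.

```python
from typing import Callable, Dict, List

# Hypothetical planner output: a real agent would usually ask an LLM to
# decompose the goal; here the decomposition is hard-coded.
PLANS: Dict[str, List[str]] = {
    "publish weekly report": ["fetch_sales", "summarize", "email_team"],
}


def make_tools(transient_failures: int) -> Dict[str, Callable[[], bool]]:
    """Hypothetical tools; fetch_sales fails a set number of times, then succeeds."""
    remaining = [transient_failures]

    def fetch_sales() -> bool:
        if remaining[0] > 0:
            remaining[0] -= 1
            return False
        return True

    return {"fetch_sales": fetch_sales,
            "summarize": lambda: True,
            "email_team": lambda: True}


def run_agent(goal: str, tools: Dict[str, Callable[[], bool]],
              max_retries: int = 2) -> List[str]:
    """Decompose a goal, execute each subtask and record every outcome."""
    log: List[str] = []
    for task in PLANS[goal]:
        for _ in range(1 + max_retries):
            ok = tools[task]()
            log.append(f"{task}:{'ok' if ok else 'fail'}")
            if ok:
                break  # outcome observed: move on to the next subtask
        else:
            # Retries exhausted: stop and escalate to a human instead of guessing.
            log.append(f"escalate:{task}")
            break
    return log


print(run_agent("publish weekly report", make_tools(transient_failures=1)))
```

The escalation branch reflects the balance the section describes: the agent acts autonomously on routine failures but hands control back when outcomes stop being predictable.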

Omdia research found that 51% of surveyed organizations are deploying AI agents, while 27% are experimenting with pilot projects and another 22% are evaluating use cases. Separately, 53% of respondents use or are considering using AI agents capable of autonomous decision-making.

Future developments will provide additional support for multi-agent AI systems to enhance agent collaboration, task delegation and specialization to solve complex problems. Advancements in integration will also improve agentic use of enterprise software, database systems and other platforms. Hybrid agentic systems might combine large language models (LLMs) with traditional programming for high precision in critical tasks. Ultimately, successful agents must balance autonomy with predictable and repeatable outcomes.

Edge AI builds on edge computing by running AI workloads closer to where data is generated. This could enable faster, real-time decisions while enhancing security and reducing reliance on network connectivity.

Advances in edge AI will likely focus on performance, efficiency and security. On-chip AI accelerators, such as neural processing units (NPUs) and tensor processing units (TPUs), will let endpoint devices run more sophisticated ML models. Devices will also become more energy- and bandwidth-efficient, using less power and exchanging less data over the network. Broader adoption of edge AI will encourage keeping data on the device rather than transmitting it to the cloud, improving security and privacy.
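One widely used way to fit more capable models onto endpoint hardware, and to shrink what devices store and send, is quantization. The article doesn't name a specific technique, so treat this as an illustrative assumption: the sketch applies symmetric linear quantization, mapping float32 values to int8 with a single scale factor, which cuts storage by 4x at the cost of a small rounding error.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[array, float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q: array, scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [scale * v for v in q]


weights = [0.82, -1.27, 0.05, 0.40, -0.33, 1.10]  # made-up model weights
fp32 = array("f", weights)
q, scale = quantize_int8(weights)
print(len(fp32.tobytes()), "bytes as float32 ->", len(q.tobytes()), "bytes as int8")
```

For these six weights the script prints `24 bytes as float32 -> 6 bytes as int8`, and each dequantized value stays within half a scale step (here 0.005) of the original. Real edge toolchains typically add per-channel scales and calibration data.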

4. Autonomous systems and vehicles

It's been a long road for autonomous vehicles (AVs), and 2026 could advance them along with the broader category of autonomous systems: products and platforms that operate independently to accomplish user goals in complex and dynamic environments. Autonomous systems include warehouse robots, drones and AVs.

A report by Metastat Insight estimated that the global autonomous system market could reach over $11.3 billion by 2032, up from around $4.7 billion in 2025.

AVs are a subset of autonomous systems that have the following capabilities:

- Sense environmental variables, such as traffic, pedestrians, objects and road conditions.

- Adjust speed and direction.

- Respond to events and hazards.

- Establish a flight path or plan driving directions.

- Execute the trip without direct human intervention.
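Sensing, adjusting speed and responding to hazards can be compressed into a toy decision rule. This sketch assumes a single lead vehicle reported by the sensors and uses time-to-collision, the gap divided by the closing speed. The thresholds and the `choose_action` helper are hypothetical; production AV stacks fuse many sensors and plan full trajectories rather than apply one rule.

```python
import math


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until contact if both vehicles hold speed; inf if the gap isn't closing."""
    closing = ego_speed_mps - lead_speed_mps
    return gap_m / closing if closing > 0 else math.inf


def choose_action(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                  brake_below_s: float = 2.0, ease_below_s: float = 4.0) -> str:
    """Map time-to-collision to a coarse speed action (hypothetical thresholds)."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    if ttc < brake_below_s:
        return "brake"
    if ttc < ease_below_s:
        return "ease_off"
    return "maintain"
```

For example, a car at 25 m/s approaching a vehicle 20 m ahead that is doing 10 m/s has a time-to-collision of about 1.3 seconds, so `choose_action(20, 25, 10)` returns `"brake"`.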

All autonomous systems rely on several key components that will likely evolve in 2026. These include sensors, computing and communications capabilities, software, ML models and AI algorithms.

5. Extended reality (XR) and spatial computing

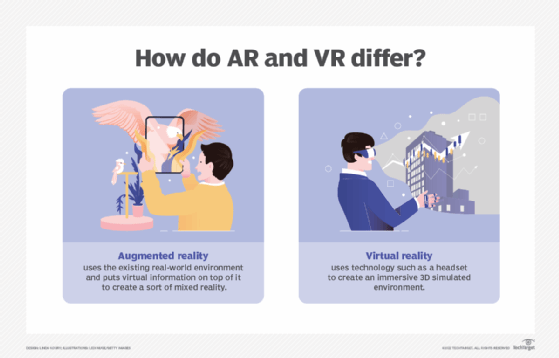

Blending digital information into the real world has long been an objective of XR technologies. Creating virtual environments and integrating them into real-world settings has potential benefits for users across industries. The following are three primary variations of XR:

- Augmented reality (AR). AR overlays digital information and objects onto our view of the real world. For example, a zoo visitor might wear an AR headset that identifies animals and highlights key facts about them while walking past the exhibits.

- Virtual reality (VR). VR replaces the real world with a fully immersive digital representation of current surroundings, or even an entirely different environment. For example, an architect might use VR to explore a newly designed building.

- Mixed reality (MR). MR lets users interact with digital and physical objects based on context and intent. For example, a medical student might use a real scalpel and other instruments to perform a virtual procedure on a digital patient for training or practice.
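Overlaying a label on a real object, as in the AR zoo example, means projecting a 3D anchor point onto the 2D display. The sketch uses the standard pinhole camera model and assumes the point is already expressed in the camera's coordinate frame (x right, y down, z forward, in meters). The intrinsic values are made up for illustration, and a real spatial computing stack would also track the headset's pose so the label stays attached as the wearer moves.

```python
from typing import Optional, Tuple


def project_to_screen(point_cam: Tuple[float, float, float],
                      fx: float, fy: float, cx: float, cy: float
                      ) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-frame 3D point to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera: nothing to draw
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Hypothetical intrinsics for a 1280x720 display: 800-pixel focal length, centered.
label_px = project_to_screen((0.5, -0.2, 4.0), fx=800, fy=800, cx=640, cy=360)
print(label_px)
```

An animal 4 m ahead, 0.5 m to the right and 0.2 m up lands at roughly pixel (740, 320), so the overlay is drawn there.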

Although XR technologies aren't new, they are likely to gain traction in 2026. Spatial computing, the underlying technology that enables XR, might bring a new generation of AR and VR. AI and software improvements will drive new business use cases in fields like medicine and manufacturing. According to a report from Research Nester, the XR industry is expected to grow at a compound annual growth rate (CAGR) of 33.2% from 2026 to 2035, reaching about $4.4 trillion by 2035.

6. Brain-computer interfaces (BCIs)

Until recently, the notion of a BCI was the stuff of science fiction. In general terms, a BCI establishes a direct communication pathway between the electrical signals of the human brain and an external device, such as a computer or a computer-driven device like a prosthetic limb. The BCI detects brain activity using external or implanted sensors and translates the detected signals into commands, such as opening an application, moving an arm or forming a word. BCIs could be used to restore movement or speech for injured people and to enhance human abilities.
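Translating detected signals into commands is, at its core, a classification step. The sketch assumes features have already been extracted from the raw signals (for example, signal power per electrode) and uses a nearest-centroid classifier, one of the simplest possible decoders. The calibration data, feature values and command names are all hypothetical; real BCIs use far more channels and more sophisticated, often AI-based, decoders.

```python
import math
from typing import Dict, List

Features = List[float]


def train_centroids(calibration: Dict[str, List[Features]]) -> Dict[str, Features]:
    """Average the calibration feature vectors recorded for each command."""
    return {cmd: [sum(col) / len(col) for col in zip(*samples)]
            for cmd, samples in calibration.items()}


def decode(features: Features, centroids: Dict[str, Features]) -> str:
    """Return the command whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda cmd: math.dist(features, centroids[cmd]))


# Hypothetical two-electrode features recorded while the user imagines each action.
calibration = {
    "move_left":  [[8.0, 2.0], [7.5, 2.5], [8.5, 1.5]],
    "move_right": [[2.0, 8.0], [2.5, 7.5], [1.5, 8.5]],
    "rest":       [[3.0, 3.0], [3.5, 2.5], [2.5, 3.5]],
}
centroids = train_centroids(calibration)
print(decode([7.0, 3.0], centroids))
```

A new reading of `[7.0, 3.0]` sits closest to the `move_left` centroid at `[8.0, 2.0]`, so the decoder issues that command.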

Recent developments are bringing new attention to BCIs. New brain implants can support wireless power and high-bandwidth data on a single microscopic chip with better performance than previous BCI devices. Improved inflammation management techniques enhance signal quality, maintain device performance and extend device longevity. Wearable sensors that detect brain signals can fit between strands of hair. AI can help BCIs translate thoughts into speech, letting individuals with disabling conditions speak.

2026 could bring new, minimally invasive brain implants that detect brain signals with high precision and bandwidth while placing minimal stress on brain tissue. New noninvasive, even wearable, BCI devices could make the technology safer and more accessible. The future of BCIs will likely follow three principal directions: medical rehabilitation, consumer electronics and wearable devices.

BCI market growth will depend on clinical trial success and be tempered by current regulations. Even so, Statsnex reported that the global market for therapeutic BCI devices is expected to reach $8.7 billion by 2031, up from $2.5 billion in 2025.

7. Neuromorphic computing

Neuromorphic computing emulates the structure and function of the human brain and is highly effective for challenging tasks such as pattern recognition. It uses specialized neuromorphic chips to create computing environments out of artificial neurons and synapses.

Neuromorphic chips process data with high levels of parallelism, use event-driven behavior so that computation happens only when a data spike occurs and rely on low-power hardware designs. These traits make neuromorphic computing environments highly adaptive, help them develop strong contextual understanding and let them learn more efficiently, all characteristics of the human brain.
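Spike-driven behavior like this is commonly modeled with a leaky integrate-and-fire (LIF) neuron, a standard building block of spiking neural networks. The sketch is a simplified discrete-time version with made-up parameters, not the model any particular neuromorphic chip implements: the membrane potential leaks toward zero each step, accumulates weighted input spikes, and emits an output spike (then resets) when it crosses a threshold. The simulation steps through every time slot for clarity, whereas neuromorphic hardware does work only when spikes arrive.

```python
from typing import List


def lif_neuron(input_spikes: List[int], weight: float = 0.6,
               leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron; returns the output spike train."""
    potential = 0.0
    output = []
    for spike in input_spikes:
        potential = potential * leak + weight * spike  # leak, then integrate input
        if potential >= threshold:
            output.append(1)
            potential = 0.0  # reset after firing
        else:
            output.append(0)
    return output


print(lif_neuron([1, 1, 0, 0, 0, 1, 0, 0, 0, 0]))
```

Two spikes in quick succession push the neuron over its threshold, but the isolated spike later in the sequence leaks away without triggering output. This timing sensitivity is part of what makes spiking networks suited to event-based sensors.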

These characteristics make neuromorphic technology desirable in edge AI, robotics and IoT devices. Numerous companies produce neuromorphic chips, including BrainChip, IBM (NorthPole), Innatera, Intel (Loihi), Koniku, Qualcomm, Samsung, SynSense and Vivum Computing.

While still in relative infancy, neuromorphic computing has potential in areas such as advanced AI, deep learning, robotics, autonomous systems, BCIs, event-based input devices like cameras, and anomaly detection. The chips will integrate into low-power computing devices, such as edge AI systems, wearables and IoT devices, and will take advantage of advances in spiking neural networks for complex natural language processing and sophisticated pattern-matching tasks.

8. Neuro-symbolic reasoning

AI's abilities to contextualize and reason are evolving with advancements in neuro-symbolic AI. Compared with conventional AI, neuro-symbolic reasoning offers a more structured approach: it couples data-driven neural networks for pattern recognition with rule-based symbolic logic for representing knowledge and reasoning over it.

The practical limitations of AI models make neuro-symbolic reasoning more appealing. Traditionally, companies improved AI models by feeding them more data and training. However, AI companies have found that data and training alone yield diminishing improvements. Rather than relying on pattern recognition or logical rules alone, neuro-symbolic reasoning combines these two complementary paradigms of neural networks and symbolic systems.
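The division of labor between the two paradigms can be shown with a toy example. A stand-in for a neural network proposes labels with confidence scores, and a symbolic layer of explicit rules rejects any label that contradicts known facts before an answer is chosen. The scores, rules and function names are hypothetical; real neuro-symbolic systems integrate the two components much more tightly, but the sketch shows why the approach can reduce confident mistakes and make decisions explainable.

```python
from typing import Dict, List, Tuple

# Hypothetical symbolic knowledge: (label, fact, value the fact must have).
RULES: List[Tuple[str, str, bool]] = [
    ("penguin", "can_fly", False),
    ("eagle", "can_fly", True),
    ("sparrow", "can_fly", True),
]


def neural_scores(image_id: str) -> Dict[str, float]:
    """Stand-in for a neural classifier's output probabilities (made-up values)."""
    return {"penguin": 0.48, "eagle": 0.45, "sparrow": 0.07}


def neuro_symbolic_label(image_id: str,
                         facts: Dict[str, bool]) -> Tuple[str, List[str]]:
    """Keep the best-scoring label that no rule contradicts; explain rejections."""
    consistent: Dict[str, float] = {}
    explanations: List[str] = []
    for label, score in neural_scores(image_id).items():
        violated = [fact for rule_label, fact, required in RULES
                    if rule_label == label and fact in facts and facts[fact] != required]
        if violated:
            explanations.append(f"rejected {label}: contradicts {', '.join(violated)}")
        else:
            consistent[label] = score
    if not consistent:
        return "unknown", explanations
    return max(consistent, key=consistent.get), explanations


# Other sensors report the animal is flying, so a rule overrides the top neural guess.
print(neuro_symbolic_label("frame_042", facts={"can_fly": True}))
```

The neural stand-in narrowly prefers "penguin," but the flying observation rules it out, so the system answers "eagle" and records why. That explanation trail is the kind of interpretability the next list refers to.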

Neuro-symbolic reasoning brings the following benefits to AI:

- Reduced hallucinations.

- Increased speed and efficiency.

- Improved interpretation and explainability.

- Superior task handling, learning and adaptability to changing situations.

Neuro-symbolic reasoning will begin to displace single-approach AI models in agentic AI systems, letting agents use LLMs and other neural components for perception while symbolic components handle rules and logic. It will use logical chain of thought, with explicit conditions and logical steps, to improve and fine-tune LLM reasoning. Emerging models, such as neural theorem provers, connect symbolic logic and deep learning, enabling more accurate and explainable AI systems. Over the course of 2026, additional technologies will integrate logic into neural networks, providing more robust probabilistic reasoning.

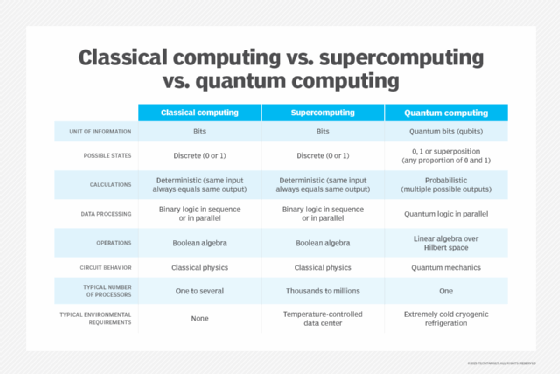

9. Quantum computing

Quantum computing uses principles of quantum mechanics -- such as entanglement and superposition -- in specialized hardware systems to let users solve complex problems that are impossible or impractical to solve with traditional computers. Quantum computers process information using quantum bits, or qubits. Unlike a classical bit, which is either zero or one, a qubit can exist in a superposition of both states; measuring it yields zero or one with probabilities set by that superposition. Combined with entanglement and interference, this lets quantum algorithms tackle certain problems far more efficiently than classical computers, with potential for advancements in areas such as drug research and cryptography.
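A single qubit can be simulated classically as a pair of complex amplitudes, which makes superposition and measurement concrete. The sketch below is a toy state-vector simulation written for illustration, not a quantum SDK. It applies a Hadamard gate to the |0> state to create an equal superposition, then samples measurements, which come out 0 or 1 about half the time each. Applying the gate a second time shows interference: the amplitudes recombine and the qubit returns to |0>.

```python
import math
import random
from typing import List, Tuple

State = Tuple[complex, complex]  # amplitudes for |0> and |1>


def hadamard(state: State) -> State:
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state: State) -> Tuple[float, float]:
    """Born rule: measurement probabilities are squared amplitude magnitudes."""
    a, b = state
    return abs(a) ** 2, abs(b) ** 2


def measure(state: State, shots: int, rng: random.Random) -> List[int]:
    """Sample outcomes of repeatedly preparing and measuring the same state."""
    p0, _ = probabilities(state)
    return [0 if rng.random() < p0 else 1 for _ in range(shots)]


plus = hadamard((1 + 0j, 0j))
print(probabilities(plus))            # approximately (0.5, 0.5)
print(probabilities(hadamard(plus)))  # approximately (1.0, 0.0): amplitudes interfere
shots = measure(plus, 1000, random.Random(7))
print(shots.count(0), shots.count(1))
```

Simulating n qubits this way requires 2^n amplitudes, which is why classical simulation breaks down quickly and why real quantum hardware is interesting.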

Current quantum computing hardware is experimental and still under development. Most systems can handle only a limited number of qubits, and errors are common, limiting the power and uses of current quantum computers.

In 2026, quantum hardware is expected to become more stable and reliable as developers refine and implement quantum error correction to enhance fault tolerance. These computers will likely support more qubits, enabling various developments, such as the following:

- Hybrid systems. Businesses might deploy quantum-classical hybrid systems that use traditional high-performance computing for most tasks. The quantum system would act as a specialized accelerator, similar to GPUs and NPUs, for complex problems.

- Algorithms. New algorithms and ML models for quantum-based AI will support the most challenging data analysis tasks, such as cyber anomaly detection and security.

- Sensory devices. Quantum technology could provide precise sensory devices for various fields, like healthcare, manufacturing and exploration.

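- Quantum cryptography. Quantum key distribution uses principles of physics rather than mathematics to exchange encryption keys and reveal eavesdropping, because measuring a quantum state disturbs it. This differs from post-quantum cryptography, which uses new mathematical algorithms designed to resist attacks from future quantum computers.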
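Quantum cryptography's ability to reveal eavesdropping can be illustrated with a toy, classical simulation of the BB84 key distribution protocol. The sender encodes random bits in randomly chosen bases, the receiver measures in his own random bases, and the two keep only the positions where their bases matched. An intercept-and-resend eavesdropper who must guess bases pushes the error rate in that kept key to roughly 25%, while an undisturbed channel shows none. This models only an ideal, noise-free channel, and `bb84_error_rate` is a hypothetical helper, not a QKD library.

```python
import random


def measure(bit: int, prep_basis: int, meas_basis: int, rng: random.Random) -> int:
    """Measuring in the preparation basis returns the bit; otherwise a coin flip."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def bb84_error_rate(n: int, eavesdrop: bool, seed: int = 1) -> float:
    """Fraction of mismatched bits in the sifted key (ideal, noise-free channel)."""
    rng = random.Random(seed)
    errors = kept = 0
    for _ in range(n):
        bit, alice_basis = rng.randint(0, 1), rng.randint(0, 1)
        sent_bit, sent_basis = bit, alice_basis
        if eavesdrop:
            # Eve measures in a guessed basis and resends what she saw in that basis.
            eve_basis = rng.randint(0, 1)
            sent_bit = measure(sent_bit, sent_basis, eve_basis, rng)
            sent_basis = eve_basis
        bob_basis = rng.randint(0, 1)
        bob_bit = measure(sent_bit, sent_basis, bob_basis, rng)
        if bob_basis == alice_basis:  # sifting: keep matching-basis positions only
            kept += 1
            errors += bob_bit != bit
    return errors / kept if kept else 0.0


print(bb84_error_rate(10_000, eavesdrop=False))  # 0.0
print(bb84_error_rate(10_000, eavesdrop=True))   # close to 0.25
```

In practice, the two parties compare a sample of their sifted bits; an error rate well above the channel's normal noise level signals interception, and they discard the key.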

Quantum computing could significantly affect economics, employment and intellectual property development. A report by StartUs Insights predicted the global quantum computing market will reach $5.3 billion by 2029. The report further noted that the quantum computing industry employs more than 1 million people worldwide, added 59,000 employees last year and has produced more than 296,000 patents.

10. Small modular reactors

The explosive growth of cloud platforms and AI is fueling demand for data center power. Data centers strain the power grid with the enormous energy requirements of GPUs, NPUs, TPUs, cooling systems and other IT infrastructure. Data center loads can exceed grid capacity, resulting in higher power costs, blackout risks and voltage problems for homes and businesses.

A Pew Research Center report noted that U.S. data centers consumed 183 terawatt-hours (TWh) of electricity in 2024, according to the International Energy Agency, and this figure is expected to grow by 133% to 426 TWh by 2030. One emerging answer to these power limitations is the development and deployment of small modular reactors, or SMRs.
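The consumption figures quoted above are easy to sanity-check. The snippet below recomputes the total growth from 183 TWh to 426 TWh and the implied annual growth rate, assuming steady compounding over the six years from 2024 to 2030. That smooth-growth assumption is ours, not a figure from the report.

```python
def total_growth(start: float, end: float) -> float:
    """Percentage increase from start to end."""
    return (end / start - 1) * 100


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, in percent, that turns start into end."""
    return ((end / start) ** (1 / years) - 1) * 100


print(f"{total_growth(183, 426):.0f}% total growth")
print(f"{implied_cagr(183, 426, 2030 - 2024):.1f}% per year")
```

This prints 133% total growth, matching the report, and an implied rate of roughly 15% per year.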

SMRs are compact, advanced nuclear reactors that use conventional fission to produce heat, which is then converted to electricity. Each factory-built module produces up to about 300 megawatts of electricity. The modules are transported to their sites, where crews assemble them and bring them online. SMRs incorporate the latest safety features and can use various coolants, such as water, molten salt or gas. SMRs can provide local, carbon-free energy for data centers while relieving the strain on local and regional power grids.
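To put module size in perspective, annual output is rated power times the hours in a year times a capacity factor, the fraction of the year a plant effectively runs at full power. The sketch assumes a 300-megawatt module, the upper end of the size range above, and a 90% capacity factor, an illustrative assumption close to what large U.S. nuclear plants achieve. It then estimates how many such modules would match the projected 2024-2030 growth in U.S. data center consumption cited earlier.

```python
import math

HOURS_PER_YEAR = 8760


def annual_twh(power_mw: float, capacity_factor: float) -> float:
    """Energy produced in a year, in terawatt-hours (1 TWh = 1,000,000 MWh)."""
    return power_mw * HOURS_PER_YEAR * capacity_factor / 1_000_000


per_module = annual_twh(300, 0.90)   # assumed module size and capacity factor
added_demand = 426 - 183             # projected increase in TWh, per the IEA figures
modules_needed = math.ceil(added_demand / per_module)
print(f"{per_module:.2f} TWh per module per year; about {modules_needed} modules")
```

Under these assumptions, each module yields about 2.37 TWh a year, so roughly 103 modules would be needed to cover the projected 243 TWh increase.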

Although some early SMRs are already in use, mainstream commercial ones are still in the design and development phase. A report from Research Nester said that the SMR market was valued at $6.7 billion in 2025 and could reach $10.9 billion by 2035.

Expect 2026 to see a dramatic acceleration in SMR testing and new executive orders from the Trump administration that facilitate faster licensing. Advances in modular fabrication should yield faster SMR construction and deployment.

11. Structural batteries

Batteries have been a principal means of energy storage since about 1800. Countless industrial and technological devices depend on batteries for power storage and delivery. But batteries have limitations: they add weight and can be a source of system failure.

Recently, designers have started reimagining the role of batteries by creating components that provide both energy storage and structural support. These structural batteries can reduce the weight and complexity of systems such as electric vehicles, aircraft and portable electronic devices. Because the battery is part of the structure, a device can carry more energy storage for the same weight, extending battery life or, in vehicles, increasing range.

Structural batteries use light, strong materials. For example, carbon fiber can serve as both a structural element and the component's negative electrode. The surrounding matrix often combines an epoxy resin with a polymer electrolyte, and the positive electrode is integrated within the composite, so the material itself can serve as a battery.

Advances are appearing quickly with this technology. A report by Markets and Markets predicted that the global structural battery market could reach a value of $190 million by 2027 as industry leaders bring early structural battery components to market.

The technology is evolving to increase energy density so composite materials can compete with the capacity of traditional batteries, and to address safety and physical issues such as swelling and crash tolerance. Structural batteries must also meet automotive safety regulations, aerospace standards and sustainability demands.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 30 years of technical writing experience in the PC and technology industry.