The need for common sense in AI systems

Building explainable and trustworthy AI systems is paramount. To get there, computer scientists Ron Brachman and Hector Levesque suggest infusing common sense into AI development.

When we imagine a future for fully autonomous AI systems operating in the real world, we must also imagine that AI can react to unanticipated events with common sense.

Ron Brachman and Hector Levesque take up this challenge in their book Machines like Us: Toward AI with Common Sense (MIT Press). Brachman, director of the Jacobs Technion-Cornell Institute and a professor in Cornell's department of computer science, and Levesque, a former professor in the University of Toronto's department of computer science, started working together on knowledge representation and reasoning research in the early 1980s.

"We see [AI] systems mess up in various ways because they simply haven't been exposed to everything that could possibly happen," Brachman said.

Quite often, human beings are faced with scenarios we have never encountered, Brachman said. Without a mechanism to make sense of what we're seeing and guide our decision-making -- often known as common sense in human cognition -- we'd be lost. To be safe and effective, AI systems also need a mechanism for making sense of new information.

In Machines like Us, Brachman and Levesque imagine a world where proactive explainability and trust are at the forefront of any AI system, arguing that programming commonsense knowledge and reasoning into AI is imperative. Without the ability to react to the unexpected, AI systems can not only fail in critical moments but also pose major risks to human safety.

The current lack of trust in AI

AI systems need to react to the unexpected in ways humans understand. When they don't, disaster can strike -- in increasingly devastating ways, as AI rollouts come to encompass life-or-death scenarios.

"We don't believe a system that purports to be autonomous should be released in the world without having common sense," Brachman said. "And yet, I think we're going to start seeing that, and we'll probably see the consequences."

In August 2023, California regulators approved robotaxi companies Cruise and Waymo to operate their self-driving cars in San Francisco. Just a few months later, however, Cruise recalled its entire fleet after an incident in which a pedestrian was struck by a car and thrown into the path of one of the company's robotaxis. The Cruise vehicle then dragged the pedestrian roughly 20 feet, failing to recognize that the human was still caught in the wheels.

Devastating accidents like this one highlight the importance of building AI that can react to the unexpected in commonsensical ways. Citing instances like road closures due to a parade or a crowded elementary school pickup, Brachman and Levesque argue that a self-driving car -- or any AI system operating in the real world, for that matter -- needs human-like common sense to react appropriately in situations it has never encountered.

"It's impossible to know in advance what's going to happen and to have it written down in a manual for [the AI machine] to learn," Levesque said. "You've got to use common sense on it."

The need for proactive, explainable AI

An essential element of explainable AI will be the ability to fully understand why AI models react the way they do. Moreover, users need to be able to trust that the AI will react in a consistent, comprehensible way.

"You want systems that are built to have reasons for what they do," Levesque said. "And we don't have that now."

Instead, AI systems today operate within a framework of reactive explainability, where humans attempt to give plausible explanations for their models' actions in the aftermath of an unanticipated event.

Brachman and Levesque are cautiously excited about the progress large language models (LLMs) have recently made in this area. LLMs are increasingly able to find connections across massive amounts of text -- something that Brachman and Levesque could only postulate that AI would be able to do when they published Machines like Us in early 2022.

Despite LLMs' promise, achieving fully explainable and trustworthy AI will require far greater levels of reasoning ability and transparency than LLMs currently offer. "There's so little understanding of how these systems come up with the particular answers that they come up with," Levesque said.

This is true even for the developers of these systems, who might not be able to explain why an LLM's response to the same prompt differs over time. This lack of understanding can be catastrophic for fully autonomous AI making critical decisions -- for instance, robotaxis in San Francisco.

Building trust with common sense

How do you build an autonomous, artificial system that can reason like a human? Brachman and Levesque turn to psychology and human cognition.

The goal is to create systems that have reasons to back up their behaviors, Levesque said. These reasons can take the form of principles, rules and commonsense knowledge -- not so different from a human's reasoning abilities -- that drive the system to make the right choice.

"It's not going to be enough for these systems to know the right things and to be able to sort of recite it when they're asked to," Levesque said. "It's got to be that those principles, whatever they are, actually guide the behavior in the right way."

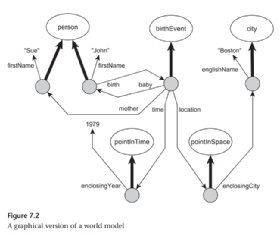

In their book, Brachman and Levesque propose programming AI with human psychology. Using a combination of commonsense knowledge bases, logical reasoning, world models and arithmetic, they sketch what an AI with common sense might look like.

Using agents to symbolize aspects of commonsense knowledge can transform real-world examples into something an AI system can process. These symbolic representations, or world models, enable AI systems to develop reasoning capabilities through computational processing. These reasoning abilities, in turn, can mimic the functioning of human common sense.
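To make the idea of symbolic knowledge plus reasoning more concrete, here is a minimal sketch in Python. It is not the architecture Brachman and Levesque propose; it is only a toy illustration of a system that keeps a reason for every conclusion it reaches. The fact names, the rules and the helper functions `forward_chain` and `explain` are all hypothetical, loosely based on the school pickup and parade examples mentioned earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A hypothetical commonsense rule: if all premises hold, conclude something."""
    name: str
    premises: frozenset
    conclusion: str


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be derived.

    Returns the full set of known facts and a map from each derived
    fact to the rule that produced it, so the system can say why.
    """
    known = set(facts)
    reasons = {}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and rule.premises <= known:
                known.add(rule.conclusion)
                reasons[rule.conclusion] = rule
                changed = True
    return known, reasons


def explain(fact, reasons, depth=0):
    """Return an indented trace of how a fact was reached.

    Facts with no recorded rule are treated as direct observations.
    """
    indent = "  " * depth
    rule = reasons.get(fact)
    if rule is None:
        return [f"{indent}{fact} (observed)"]
    lines = [f"{indent}{fact} (by rule '{rule.name}')"]
    for premise in sorted(rule.premises):
        lines.extend(explain(premise, reasons, depth + 1))
    return lines


# Illustrative rules only; a real knowledge base would be vastly larger.
RULES = [
    Rule("kids-are-unpredictable",
         frozenset({"school_pickup_time", "children_near_road"}),
         "sudden_crossing_likely"),
    Rule("be-cautious",
         frozenset({"sudden_crossing_likely"}),
         "reduce_speed"),
    Rule("parade-detour",
         frozenset({"road_closed", "crowd_ahead"}),
         "find_detour"),
]

if __name__ == "__main__":
    observed = {"school_pickup_time", "children_near_road"}
    known, reasons = forward_chain(observed, RULES)
    print("\n".join(explain("reduce_speed", reasons)))
```

The point of the sketch is the `reasons` map: the decision to slow down can be traced back, step by step, to what the system observed, which is the kind of built-in justification Levesque describes. A toy rule set like this covers only the situations someone wrote down, so it does not by itself address the open-ended, unanticipated cases that motivate the book.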

But these frameworks for computational common sense must account for the reality that human beings aren't perfect or consistent. Differences in beliefs and ethics can elicit different reactions to the same problem. Moreover, certain events transcend common sense; sometimes, there's no clear-cut right or wrong response to a given situation.

"Common sense is an interesting thing," Brachman said. "It only takes you so far."

Although common sense provides guardrails for how we interact with the world, that doesn't mean it's always sufficient or always works. If it did, subway stations wouldn't need signs reminding people not to try to retrieve something they dropped on a live track.

Building artificial intelligence that adequately considers the breadth of human intelligence will be key. Despite the flaws and complexities associated with human common sense, incorporating a foundational layer of analytic reasoning can still significantly improve the operational quality of AI systems.

"I think [the book is] a good start," Brachman said. "But there's so much more that would need to be done to make a robust, successful engine that can operate in the real world."

Olivia Wisbey is the associate site editor for TechTarget Enterprise AI. She graduated from Colgate University, where she served as a peer writing consultant at the university's Writing and Speaking Center.

About the authors

Ron Brachman is director of the Jacobs Technion-Cornell Institute at Cornell Tech in New York City and professor of computer science at Cornell University. During a long career in industry, he held leadership positions at Bell Labs, Yahoo and DARPA.

Hector J. Levesque is professor emeritus in the department of computer science at the University of Toronto. He is the author of Common Sense, the Turing Test, and the Quest for Real AI (MIT Press), and other books.