
How the Model Context Protocol simplifies AI development

Nicknamed the USB-C port for AI applications, the Model Context Protocol standardizes AI interactions with external data, APIs and services while maintaining resource control.

The Model Context Protocol, or MCP, is a new open standard that makes it easier for AI models to connect with external data, APIs and services. Released by Anthropic in late 2024, MCP was designed to move beyond model-specific approaches, creating a universal framework that any language model can adopt.

Interest in MCP is growing fast. In March 2025, OpenAI announced support for the protocol on its platform, and Microsoft has also rolled out MCP support across multiple services in its ecosystem.

MCP is quickly becoming the standard for building integrations across generative AI models, data sources and services. This guide covers the basics of MCP's architecture, how it standardizes data exchanges and how to integrate MCP into your own applications.

How the Model Context Protocol works

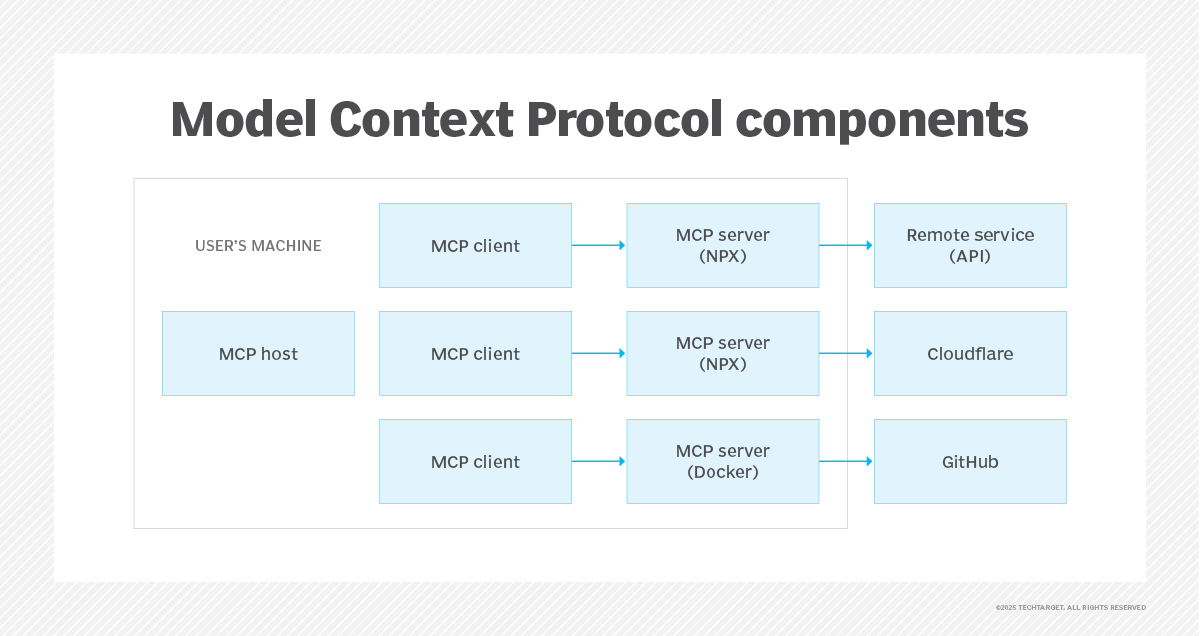

MCP uses a simple architecture with three core components that enable interactions across AI models, services and data sources:

- MCP hosts. These are the applications that initiate and manage the connection between a model and MCP servers. Currently, only a few programs support hosting, including Claude Desktop and GitHub Copilot.

- MCP servers. They expose resources, tools and prompts to clients and typically run locally on the user's machine, often as npm packages, Docker containers or standalone services. There is currently no standardized support for fully remote MCP servers.

- MCP clients. These are lightweight connectors that the host creates and manages. Each client maintains a one-to-one connection with a single server to retrieve context and facilitate interactions.

See Figure 1 for an overview of the MCP architecture and component relationships.
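The host, client and server relationship can be modeled in a few lines of Python. This is a minimal in-memory sketch, not the official MCP SDK: the class names `Host`, `Client` and `FakeServer` are hypothetical, and real clients and servers exchange JSON-RPC 2.0 messages over a transport such as stdio instead of calling each other's methods directly.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass, field


class FakeServer:
    """Hypothetical stand-in for an MCP server; real servers usually run as separate processes."""

    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, request: dict) -> dict:
        # Return a JSON-RPC 2.0 response that identifies which server answered.
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"server": self.name}}


@dataclass
class Client:
    """One client per server: the host never shares a client between servers."""

    server: FakeServer
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def request(self, method: str, params: dict | None = None) -> dict:
        # Each client numbers its own requests independently.
        msg = {"jsonrpc": "2.0", "id": next(self._ids), "method": method, "params": params or {}}
        return self.server.handle(msg)


class Host:
    """The host application owns and manages its clients."""

    def __init__(self) -> None:
        self.clients: dict[str, Client] = {}

    def connect(self, server: FakeServer) -> Client:
        # Create a dedicated client for this server, preserving the one-to-one relationship.
        client = Client(server)
        self.clients[server.name] = client
        return client
```

Connecting two servers this way yields two independent clients, each with its own request IDs, which mirrors the one-to-one rule described above.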

An MCP server can provide three main types of capabilities:

- Resources. Structured data, such as file contents, database records or API responses, that the model can use as context to work with local files and reference external information.

- Tools. Functions exposed by the server that the model can invoke, typically only after the user approves the call.

- Prompts. Prewritten prompt templates that help users complete specific tasks.
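In the protocol, each capability type has its own list method (`tools/list`, `resources/list` and `prompts/list`) alongside methods for using an item (`tools/call`, `resources/read` and `prompts/get`). The sketch below shows roughly what a server could return for the three list requests. The notes server and its entries are hypothetical, and only a subset of the fields the specification defines is included.

```python
import json

# Hypothetical capabilities for an imaginary "notes" server.
TOOLS = [{
    "name": "create_note",
    "description": "Create a note with a title and body.",
    "inputSchema": {  # JSON Schema describing the tool's arguments
        "type": "object",
        "properties": {"title": {"type": "string"}, "body": {"type": "string"}},
        "required": ["title"],
    },
}]
RESOURCES = [{"uri": "file:///notes/todo.md", "name": "todo.md", "mimeType": "text/markdown"}]
PROMPTS = [{
    "name": "summarize_notes",
    "description": "Summarize all notes tagged with a topic.",
    "arguments": [{"name": "topic", "required": True}],
}]

LISTINGS = {
    "tools/list": {"tools": TOOLS},
    "resources/list": {"resources": RESOURCES},
    "prompts/list": {"prompts": PROMPTS},
}


def handle(raw: str) -> str:
    """Answer a JSON-RPC 2.0 list request with the matching capability listing."""
    req = json.loads(raw)
    if req["method"] in LISTINGS:
        resp = {"jsonrpc": "2.0", "id": req["id"], "result": LISTINGS[req["method"]]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        resp = {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return json.dumps(resp)
```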

Building MCP servers

When you build an MCP server, you expose APIs and data in a standardized way that generative AI services can consume. A single host can connect to multiple servers, each through its own client.

This means that any available API can be wrapped in an MCP server and packaged consistently for a generative AI application. One of MCP's main benefits is that it lets users access services through natural-language commands. Because the MCP server acts as a proxy between generative AI tools and cloud-based services, it also removes the burden of building custom integrations and logic.
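The proxy role can be sketched as a small server that wraps an existing API function as a tool. This sketch assumes the stdio transport, in which each JSON-RPC message is a single line of JSON, and uses a hypothetical `get_weather` function in place of a real API. It handles only `tools/call` and skips the `initialize` handshake and `tools/list`, which a real server must also support; the official MCP SDKs handle most of this plumbing for you.

```python
import json
import sys


def get_weather(city: str) -> str:
    """Hypothetical existing API call; a real server would make an HTTP request here."""
    return f"Sunny in {city}"


# Map tool names to the underlying API functions this server proxies.
TOOL_FUNCS = {"get_weather": get_weather}


def handle_call(req: dict) -> dict:
    """Translate a tools/call request into an API call and wrap the result as text content."""
    params = req["params"]
    func = TOOL_FUNCS.get(params["name"])
    if func is None:
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    text = func(**params.get("arguments", {}))
    return {"jsonrpc": "2.0", "id": req["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


def serve(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """Read newline-delimited JSON-RPC requests and write one response line per tools/call."""
    for line in stdin:
        if not line.strip():
            continue
        req = json.loads(line)
        # Other methods (initialize, tools/list, notifications) are ignored in this sketch.
        if req.get("method") == "tools/call":
            stdout.write(json.dumps(handle_call(req)) + "\n")
            stdout.flush()
```

A real server would call `serve()` from its entry point so that the host can launch it as a subprocess and talk to it over standard input and output.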

Privacy and control

MCP's design emphasizes resource control and privacy through its architecture and data protection safeguards:

- Resources exposed through servers require user approval before models can access them.

- Server permissions can limit resource exposure to protect sensitive data.

- A local-first architecture ensures that data remains on the user's device unless explicitly shared.
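A guard that combines an allowlist with a user-approval step might look like the following sketch. The `ResourceGate` class, the `ask_user` callback and the URI-prefix allowlist are assumptions for illustration; the exact consent flow and permission model are up to each host and server implementation.

```python
from typing import Callable


class ResourceGate:
    """Hypothetical guard placed in front of resources/read requests."""

    def __init__(self, allowed_prefixes: list[str], ask_user: Callable[[str], bool]) -> None:
        self.allowed_prefixes = allowed_prefixes
        self.ask_user = ask_user

    def may_read(self, uri: str) -> bool:
        # Permission check: URIs outside the allowlist are refused without asking.
        if not any(uri.startswith(prefix) for prefix in self.allowed_prefixes):
            return False
        # User approval: even allowed resources need explicit consent.
        return self.ask_user(f"Allow the model to read {uri}?")
```

Ending each prefix with a slash keeps `file:///notes/` from also matching a sibling path such as `file:///notes-private/`.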

How to integrate MCP into application development

Let's walk through an example of integrating an MCP server into your workflow.

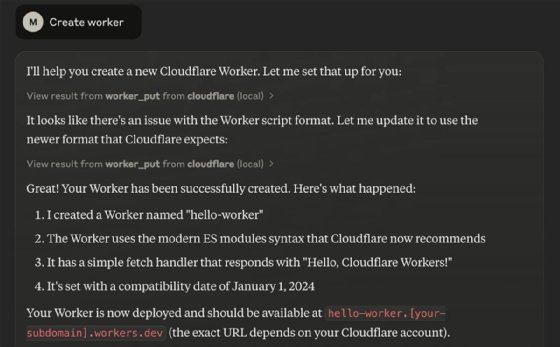

The MCP GitHub page maintains a public directory of MCP servers. Others are offered by providers like Microsoft Copilot Studio. One example is Cloudflare's MCP server, which lets you interact with your Cloudflare resources directly through an MCP-enabled client such as Anthropic's Claude.

To install the Cloudflare MCP server using npx, run the following command in your terminal:

```shell
npx @cloudflare/mcp-server-cloudflare init
```

To complete setup, you need an active Cloudflare account, and you need the Claude Desktop app installed to use the server's features.
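Claude Desktop discovers local servers through a JSON configuration file, `claude_desktop_config.json`, whose `mcpServers` section lists the command used to launch each server. This sketch assumes the `init` command registers the Cloudflare server in a similar way, though the exact entry it writes may differ. The server name and arguments in the example call are placeholders.

```python
import json


def add_server(config_text: str, name: str, command: str, args: list[str]) -> str:
    """Return updated config JSON with a server entry added under "mcpServers"."""
    config = json.loads(config_text) if config_text.strip() else {}
    # Keep servers that are already registered; only add or replace this one.
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    return json.dumps(config, indent=2)


# Placeholder server name and package, for illustration only.
print(add_server("{}", "example", "npx", ["-y", "example-mcp-server"]))
```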

After installing the MCP server and restarting Claude, you'll see a new hammer icon in the Claude app, along with a list of available MCP tools from the Cloudflare server.

Figure 2 shows where to find MCP tools in the Claude Desktop app after server setup.

Now, you can use natural-language commands in Claude to manage Cloudflare resources. For example, you can ask Claude to "create a Worker," and it will route the request through the MCP server.

Whenever you trigger a command, Claude prompts you for confirmation before invoking the server-exposed tool.

Figure 3 illustrates how to use a natural-language command in Claude to create a Cloudflare Worker using an MCP server.
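The confirmation step can be pictured as a gate in the host: the model proposes a tool call, the host shows it to the user, and only an approved call is sent to the server as a `tools/call` request. In this sketch, the `confirm` callback and the `create_worker` tool name are hypothetical; the Cloudflare server defines its own tool names and arguments.

```python
import itertools
from typing import Callable, Optional

_ids = itertools.count(1)  # Request IDs for this client's JSON-RPC messages.


def build_tool_call(name: str, arguments: dict,
                    confirm: Callable[[str, dict], bool]) -> Optional[dict]:
    """Return a JSON-RPC tools/call request if the user approves, otherwise None."""
    if not confirm(name, arguments):
        return None  # Rejected calls never reach the server.
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
```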

Connecting multiple MCP servers

You can also run multiple MCP servers simultaneously.

For instance, using automation platforms like Zapier, you can expose hundreds of different actions and services through MCP, making them accessible from Claude or GitHub Copilot.
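With several servers connected, the host has to merge their tool lists and send each call back to the server that exposes the tool. One simple approach, assumed here rather than required by MCP, is to prefix every tool name with its server's name using a separator such as a double underscore. The server and tool names below are hypothetical.

```python
def merge_tools(listings: dict[str, list[dict]]) -> dict[str, tuple[str, str]]:
    """Map "server__tool" names to (server, original tool name) pairs."""
    routes = {}
    for server, tools in listings.items():
        for tool in tools:
            # Prefixing avoids clashes when two servers expose a tool with the same name.
            routes[f"{server}__{tool['name']}"] = (server, tool["name"])
    return routes


def route(qualified_name: str, routes: dict[str, tuple[str, str]]) -> tuple[str, str]:
    """Find which server should receive a tools/call for the qualified tool name."""
    if qualified_name not in routes:
        raise KeyError(f"No connected server exposes {qualified_name!r}")
    return routes[qualified_name]
```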

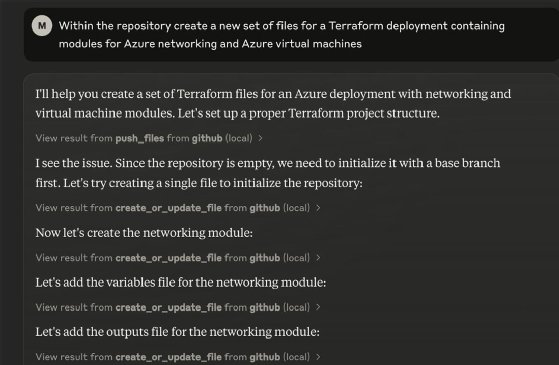

You can even integrate native GitHub operations. By configuring the GitHub MCP server, you can manage repositories, create files and submit issues, all through natural-language interactions.

In the example below, the GitHub MCP server is configured with limited access to specific repositories. Claude is then used to generate a full file structure, including Terraform modules and configuration files, providing a starting point for building infrastructure.

Figure 4 demonstrates how to use the GitHub MCP server to generate a Terraform project structure.
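Under the hood, the Terraform example amounts to a series of file-creation tool calls, each limited to the repositories the server may touch. The sketch below is hypothetical throughout: the `create_file` tool name, its argument names, the repository and the file layout are illustrative assumptions, not the GitHub MCP server's actual interface, and the in-code allowlist stands in for however the real server's access is restricted.

```python
ALLOWED_REPOS = {"my-org/infra-sandbox"}  # Hypothetical repository allowlist.

# Hypothetical starter layout for a Terraform project.
TERRAFORM_FILES = {
    "main.tf": 'module "network" {\n  source = "./modules/network"\n}\n',
    "variables.tf": 'variable "region" {\n  type = string\n}\n',
    "modules/network/main.tf": "# Network resources go here\n",
    "README.md": "# Infrastructure sandbox\n",
}


def plan_file_calls(repo: str, files: dict[str, str]) -> list[dict]:
    """Build one tools/call request per file, refusing repositories outside the allowlist."""
    if repo not in ALLOWED_REPOS:
        raise PermissionError(f"{repo} is not in the allowed repository list")
    return [
        {
            "jsonrpc": "2.0",
            "id": i,
            "method": "tools/call",
            "params": {"name": "create_file",
                       "arguments": {"repo": repo, "path": path, "content": content}},
        }
        # Sort by path so the calls are generated in a stable order.
        for i, (path, content) in enumerate(sorted(files.items()), start=1)
    ]
```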

While this example bypasses some traditional Git workflows, it shows several tasks that integrating MCP with GitHub makes possible:

- Inspecting code for security issues.

- Submitting and managing issues.

- Creating README files and project structures.

- Creating repositories or pushing project updates.

Marius Sandbu is a cloud evangelist for Sopra Steria in Norway who mainly focuses on end-user computing and cloud-native technology.