kras99 - stock.adobe.com

5 MCP security risks and mitigation strategies

MCP facilitates interactions between AI models and external services. But at what security cost?

Released in November 2024, Anthropic's Model Context Protocol has quickly become a standard for connecting AI systems to external tools and data sources.

While MCP is still in its infancy, many large technology vendors and a vast ecosystem of AI practitioners have already adopted it. Thousands of different MCP servers currently enable connection to different applications and services from a desktop client.

However, one of the significant concerns with MCP is the security risks it introduces. Many documented vulnerabilities exist, and the protocol lags in security mechanisms and centralized management.

Therefore, MCP users must understand how the protocol works, the risks involved and mitigation strategies for vulnerabilities. Five top MCP security risks stand out:

- Credential exposure.

- Unverified third-party MCP servers.

- Prompt injection attacks.

- Compromised servers.

- Malicious code and unintentional actions.

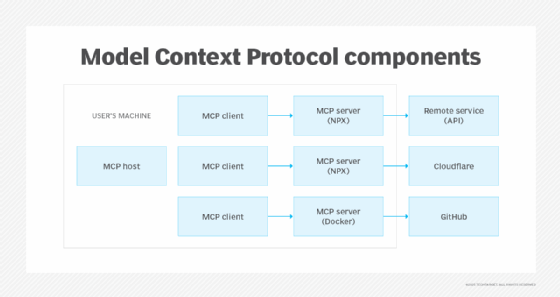

How MCP connects AI models with external services

MCP enables generative AI clients to communicate with third-party services that provide three main types of capabilities:

- Resources. File data or API responses that interact with local files.

- Tools. Model-controlled GPT functions, meaning that tools are exposed from servers to clients with the intention of the AI model being able to invoke them automatically.

- Prompts. Prewritten prompts that help the user do specific tasks.

All this logic runs entirely from an MCP server, a software component that is local to the machine it runs on.

For MCP to communicate with a third-party service, it needs to be able to authenticate with it, which often involves having some form of an API key or secret locally on the machine.

In March 2025, MCP added the OAuth specification, an authorization framework that enables an application to access external resources without credential sharing. This enables users to run remote MCP servers. However, not all users have implemented it yet; many still run servers with local API keys.

5 MCP security risks and mitigation strategies

MCP enables AI systems to access data, use third-party tools and execute commands. These abilities introduce serious security concerns. Learn these five MCP security risks and mitigation strategies.

1. Credential exposure

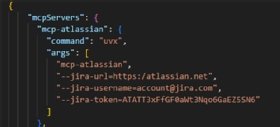

One leading risk with MCP is credential exposure. Often, MCP configuration involves API keys that are directly added to a config file, such as with the Claude desktop. Someone who compromised the client could access those keys and potentially access the Jira instance, like in Figure 2.

If the server supports it, using OAuth with remote MCP servers is one way to mitigate the risk of credential exposure. Users can also add a proxy component, such as Cloudflare or Azure API Management, that provides OAuth authentication in front of the MCP server.

If the server does not support OAuth, the only way to mitigate credential exposure risk is to distribute access keys in the configuration. However, users should avoid this as much as possible because it adds its own share of security vulnerabilities and management issues.

Also, there is no optimal way to distribute the MCP configuration to clients. One option could be to deploy a centralized virtual desktop infrastructure (VDI) machine, where end users can access an MCP-enabled client. This ensures that API keys are only available on centralized machines.

2. Unverified third-party MCP servers

Many publicly available MCP servers are created by community members and unverified sources.

While most of these technically work, they're often unmaintained and come with vulnerabilities -- some even intentionally malicious, set up to harvest tokens or API keys while appearing to function normally.

Users should only use official MCP servers from trusted vendors, such as the public GitHub server github/github-mcp-server. Many large technology companies now have their own list of verified MCP servers. For example, Microsoft maintains a list of MCP servers.

3. Prompt injection attacks

Another main security risk with MCP is prompt injection attacks and tool poisoning.

Attackers can smuggle hidden prompts or rogue instructions into a tool's metadata. Users won't notice anything unusual, but the LLM might treat those prompts as legitimate commands -- opening the door to data leaks or other unauthorized actions.

Much of this risk is avoided by using verified and trusted MCP servers and third-party tools.

4. Compromised servers

Another MCP security risk is when a malicious actor compromises a community MCP server and replaces the source with malicious content.

Since most MCP servers are installed using either a pip install or npm install command, users have limited options to determine if an MCP component has been compromised unless they use a third-party package analyzer.

5. Malicious code and unintentional actions

MCP can access file systems and run commands directly on the host from which it is executing. Therefore, another risk scenario occurs when MCP executes arbitrary code directly from a malware sample.

This can occur if a user is unaware of the risks involved in the MCP server's actions. For instance, once a user has an MCP server configured, they only have the option to block or allow a specific tool. If allowed, the AI model might do something unintentional, such as deleting a user or object.

There are a few things one can do to minimize this security risk.

One option is to ensure that MCP servers are running in an isolated environment with limited access to the machine, such as running the components within a Docker container. Some MCP servers have an option for that, or are listed in the Docker MCP hub.

Another mitigation strategy is to turn off commands and tools that enable read or execute permissions from an MCP server, as seen in Figure 4. Unfortunately, this must be done locally on each machine, often combined with an API key that only allows read access to a system or service.

Marius Sandbu is a cloud evangelist for Sopra Steria in Norway who mainly focuses on end-user computing and cloud-native technology.