What is an AI accelerator?

An AI accelerator is a type of hardware device that can efficiently support AI workloads. While AI apps and services can run on virtually any type of hardware, AI accelerators can handle AI workloads with much greater speed, efficiency and cost-effectiveness than generic hardware.

For that reason, organizations seeking to take advantage of AI should take time to understand the benefits of AI accelerators, how they work, their potential drawbacks and which types of AI accelerator hardware are currently available.

Keep reading for guidance on each of these topics as we discuss what AI accelerators are and the role they play in the modern AI ecosystem.

What is an AI accelerator?

It's sometimes said that AI accelerators are devices "specifically designed" for AI, but this is not necessarily the case. As we note below, one type of AI accelerator -- the GPU -- wasn't designed primarily for AI, although it has capabilities that make it a good choice for certain types of AI workloads.

AI accelerators fall within the hardware category of processing units, meaning that their primary purpose is to perform computing calculations (although some also have integrated short-term memory, so they can function as a type of RAM device, too). In this sense, AI accelerators are similar to central processing units, or CPUs, a general-purpose type of computer chip that handles most of the processing that takes place on a computer or server.

However, unlike CPUs, AI accelerators are optimized for tasks associated with AI workloads, like processing large quantities of data, model training and inference. It's possible to use a generic CPU for AI workloads as well, but doing so will typically take much longer because CPUs lack special capabilities that are important for many AI use cases.

How does an AI accelerator work?

There are multiple types of AI accelerators and each type is designed a bit differently. In general, however, the key characteristic that makes AI accelerators work is support for parallel computing.

Parallel computing (also known as parallel processing) means the ability to perform multiple calculations simultaneously. For example, during the process of AI model training, an algorithm powered by an AI accelerator might process hundreds or thousands of data points concurrently. It can do this because the accelerator features a large number of cores (meaning individual processing units that are integrated into a larger chip), and each core can handle a distinct operation.

In contrast, general-purpose CPUs process data sequentially, which means they have to complete one operation before moving on to the next one. Multicore CPUs, which are common in modern PCs and servers, can support parallel computing to a limited extent because each core can handle a different task simultaneously. But CPUs usually include no more than a few dozen cores, whereas AI accelerators typically feature hundreds or thousands of cores -- so their capacity for parallel computing is much greater.
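
To make the effect of core count concrete, here is a minimal Python sketch of an idealized model in which every operation is independent and each core completes one operation per round. The function name `rounds_needed` and the core counts are illustrative assumptions, not figures for any specific chip, and real hardware is also limited by memory bandwidth and scheduling overhead.

```python
def rounds_needed(num_operations: int, num_cores: int) -> int:
    """Rounds required when each core handles one independent operation per round."""
    if num_operations < 0 or num_cores < 1:
        raise ValueError("need num_operations >= 0 and num_cores >= 1")
    # Integer ceiling division: a partially filled final round still costs a full round.
    return (num_operations + num_cores - 1) // num_cores


if __name__ == "__main__":
    operations = 100_000  # hypothetical number of independent calculations
    for label, cores in [
        ("single core", 1),
        ("32-core CPU", 32),
        ("4,096-core accelerator", 4096),
    ]:
        print(f"{label}: {rounds_needed(operations, cores)} rounds")
    # single core: 100000 rounds
    # 32-core CPU: 3125 rounds
    # 4,096-core accelerator: 25 rounds
```

In this simplified model, the number of rounds falls roughly in proportion to the number of cores, which is why hardware with far more cores can finish highly parallel work much sooner.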

In addition to supporting parallel computing, some AI accelerators offer other types of hardware optimizations, such as processor architectures that reduce energy consumption during concurrent operations and integrated memory, which works more efficiently for AI workloads than the general-purpose RAM attached to computer motherboards.

Why is an AI accelerator important?

An AI accelerator is important because it can significantly improve the performance and efficiency of AI workloads.

Again, it's possible to support virtually any type of AI use case with general-purpose CPUs, just as it's possible to scrub a floor with a toothbrush. But an AI accelerator is to AI workloads what a power mop is to floor cleaning: a way to complete tasks faster and more efficiently.

That said, different AI workloads benefit from AI accelerators in different ways. For example, in the case of AI model training, the ability to process data in real time is not typically important because training often takes days or weeks, even when using specialized AI hardware. Developers therefore don't expect real-time results when performing training. They are, however, interested in reducing the total time necessary to complete training. This means that for training, an AI accelerator that offers a large number of cores -- and can therefore process very large quantities of data efficiently -- is more advantageous than an accelerator that is optimized to process data in real time.

Now consider AI inference, the type of operation in which a trained AI model interprets new data and makes decisions based on it. For inference, processing data in real time (or very close to it) can be critical because an AI application might need to support use cases -- like fraud detection during payment transactions or guiding a self-driving vehicle -- where instantaneous decision-making is essential. But the amount of data that accelerators need to work with during inference is typically much smaller than the data used for training. As a result, for inference use cases, having many cores in an accelerator is not as important as having powerful cores that can process new data very quickly.
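
The tradeoff between many cores and fast cores can be sketched with a toy model. The two profiles below are hypothetical (the names, core counts and per-operation times are assumptions chosen for illustration), and the model ignores memory, data transfer and batching effects that matter on real hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorProfile:
    name: str
    cores: int
    microseconds_per_operation: int  # time one core needs for one operation


def processing_time_us(profile: AcceleratorProfile, num_items: int) -> int:
    """Idealized time in microseconds: rounds of parallel work times per-operation time."""
    rounds = (num_items + profile.cores - 1) // profile.cores
    return rounds * profile.microseconds_per_operation


def faster_profile(a: AcceleratorProfile, b: AcceleratorProfile, num_items: int) -> AcceleratorProfile:
    """Return whichever profile finishes num_items sooner under this model."""
    return min((a, b), key=lambda p: processing_time_us(p, num_items))


# Hypothetical profiles, not real products.
many_slower_cores = AcceleratorProfile("many slower cores", cores=4000, microseconds_per_operation=4000)
fewer_faster_cores = AcceleratorProfile("fewer faster cores", cores=500, microseconds_per_operation=1000)

if __name__ == "__main__":
    training_items = 1_000_000  # large batch, total throughput matters
    inference_items = 1         # single request, latency matters
    print("training:", faster_profile(many_slower_cores, fewer_faster_cores, training_items).name)
    print("inference:", faster_profile(many_slower_cores, fewer_faster_cores, inference_items).name)
```

Under these assumed numbers, the many-core profile finishes the large training-style batch sooner, while the profile with fewer but faster cores responds sooner to a single inference-style request.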

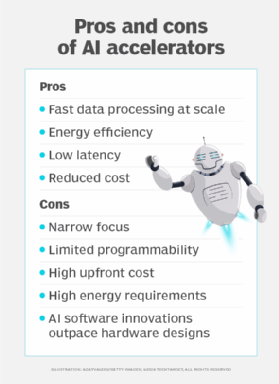

Pros of AI accelerator technology

Compared to general-purpose computing hardware, AI accelerators offer a range of benefits, including the following:

- Fast data processing at scale. The large number of cores in AI accelerators enables parallel computing, which in turn means that accelerators can process large data sets quickly.

- Energy efficiency. Compared to CPUs, AI accelerators often consume less electricity to process the same amount of data, thanks to optimizations like processor designs that minimize the amount of internal data movement that takes place during parallel computing.

- Low latency. The ability to move data quickly within an AI accelerator also minimizes latency, meaning the delay between when a processing task begins and when it is complete.

- Reduced cost. Although AI accelerators are often more expensive to purchase than CPUs, their total cost of ownership tends to be lower because they process data more quickly and use less energy to do so.
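
The reduced-cost point can be illustrated with a rough calculation. This sketch assumes a job must finish by a deadline, so slower hardware has to be bought in larger quantities. Every price, wattage and hour figure below is a hypothetical placeholder, and the model leaves out cooling, hosting, staffing and resale value.

```python
import math


def cost_to_finish(
    unit_price: float,
    unit_watts: float,
    unit_hours_for_job: float,
    deadline_hours: float,
    price_per_kwh: float,
) -> float:
    """Hardware plus electricity cost to finish one job before a deadline.

    unit_hours_for_job is how long a single device would need to do the
    whole job alone; the work is assumed to split evenly across devices.
    """
    units = math.ceil(unit_hours_for_job / deadline_hours)
    # Total energy depends on total device-hours, not on how many devices share them.
    energy_kwh = unit_watts * unit_hours_for_job / 1000
    return units * unit_price + energy_kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    cpu_cost = cost_to_finish(3_000, 300, 20_000, 1_000, 0.15)
    accelerator_cost = cost_to_finish(10_000, 400, 1_000, 1_000, 0.15)
    print(f"CPU servers: ${cpu_cost:,.0f}")
    print(f"Accelerator: ${accelerator_cost:,.0f}")
```

With these assumed inputs, the accelerator costs more per device but less overall, because far fewer devices and far fewer device-hours are needed to meet the same deadline.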

Cons of AI accelerator technology

On the other hand, AI accelerators can present some challenges and drawbacks:

- Narrow focus. As noted previously, the types of optimizations that are most important can vary across different AI workloads. As a result, an AI accelerator that excels at one task might underperform for another.

- Limited programmability. Varying AI needs might not be as much of an issue if users could modify the way accelerators work in order to tweak them for different use cases. But they typically can't. Most accelerators are difficult or impossible to reprogram at the hardware level. The exception is a field-programmable gate array (FPGA), a type of accelerator that is designed to be programmable by users -- but even in this case, customizability is limited.

- High upfront cost. The high purchase price of AI accelerators means that there is a significant upfront cost associated with acquiring them, even if they cost less in the long run.

- High energy requirements. Although AI accelerators typically consume energy more efficiently than CPUs when supporting AI workloads, they still require large quantities of electricity. This can place a strain on the servers and data centers that host accelerators, as well as the cooling systems that need to dissipate the heat they produce (which is a byproduct of their high energy consumption).

- AI software innovations outpace hardware designs. New AI software architectures, services and applications are constantly emerging, but AI-optimized hardware tends to evolve less quickly. This means that the accelerators available today may not excel at supporting the latest types of AI models or use cases.

AI accelerator examples and use cases

To gain a sense of how AI accelerators might be useful in the real world, consider examples like the following:

- An accelerator in an autonomous vehicle might optimize the inference necessary to process real-time environmental data and guide the vehicle.

- An accelerator in an edge computing location in a retail store could help detect fraudulent transactions very quickly, allowing retailers to identify fraud before criminals leave the location.

- Accelerator hardware hosted in a public cloud could be used by AI developers to train a large language model (LLM) without having to set up their own training hardware.

- An accelerator in a server that hosts an LLM-based chatbot could help the chatbot process user input quickly, which speeds up inference and improves chatbot responsiveness.

Main types of AI accelerators

Currently, the main types of AI accelerators include the following:

- GPUs. Graphics processing units were originally designed to render graphics and video, not support AI. However, because GPUs offer fairly large numbers of cores, they can be useful for AI workloads, too.

- FPGAs. FPGAs are a type of computing device whose internal logic can be modified by users, which is why they are called field programmable. FPGAs typically don't feature high core counts, so they're not ideal for workloads like model training. But their cores tend to be powerful. Combined with the ability to customize the way FPGAs process data, this makes them a good solution for AI workloads like inference.

- ASICs. Application-specific integrated circuits feature internal logic that is optimized for certain tasks, although unlike FPGAs, ASICs can't be modified by users. Typically, ASICs that are used for AI are optimized for very specific types of workloads, like image recognition.

- NPUs. Neural processing units (NPUs) are a type of AI accelerator optimized for neural network workloads. Their main benefit is that they offer high core counts and low energy requirements, making them better than GPUs in some cases for tasks like model training.

- TPUs. Tensor processing units (TPUs) are an AI accelerator product from Google. They're a type of NPU.

Note that there is some overlap between many of these categories of AI accelerators. For example, an NPU could be considered a type of ASIC because an NPU is essentially a chip optimized for applications involving neural networks. One could also argue that there is a gray area between GPUs and NPUs in some cases because some of the latest GPUs, such as those in the Nvidia Ampere product line, were designed with neural processing use cases in mind, not just video rendering.

Best AI accelerators

As we've noted, AI use case requirements vary widely, and different accelerators offer different pros and cons. For this reason, there is no one best AI accelerator.

The best way to go about selecting an accelerator is to think in terms of which accelerators are likely to offer the best tradeoff between performance and cost for a certain type of workload. For example:

- If you're seeking a budget GPU for basic AI tasks, the GeForce GTX 1660, which is available for under $200, is a good option.

- For a more powerful consumer-grade GPU for AI, consider the GeForce RTX 4090. With a price tag of nearly $2,000, it's not cheap, but it's within the realm of affordability for individuals who want to run AI workloads locally.

- The Nvidia A100 GPU, with pricing starting around $10,000, is among the most powerful options for enterprise-grade AI accelerator hardware.

In addition to purchasing AI accelerators and installing them in your own PCs or servers, it's possible to rent AI accelerator hardware using an infrastructure-as-a-service offering that makes accelerators available remotely over the internet. For example, although Google doesn't offer TPUs for sale, it provides access to TPU hardware via servers hosted in Google Cloud Platform. This approach may be ideal for organizations that need AI accelerators for temporary or periodic tasks -- like model training -- and don't want to purchase devices that will sit idle much of the time.
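
A simple break-even estimate can help frame the rent-versus-buy decision. In this sketch, the $10,000 purchase price echoes the A100 starting price mentioned above, while the $2.50 hourly rental rate is a hypothetical placeholder rather than any provider's actual pricing. Electricity, hosting and resale value are ignored.

```python
def break_even_hours(purchase_price: float, rental_price_per_hour: float) -> float:
    """Hours of use at which cumulative rental fees equal the purchase price."""
    if rental_price_per_hour <= 0:
        raise ValueError("rental_price_per_hour must be positive")
    return purchase_price / rental_price_per_hour


def cheaper_option(purchase_price: float, rental_price_per_hour: float, expected_hours: float) -> str:
    """Return 'rent' or 'buy' based only on purchase price versus rental fees."""
    rental_total = rental_price_per_hour * expected_hours
    return "rent" if rental_total < purchase_price else "buy"


if __name__ == "__main__":
    purchase_price = 10_000.0  # purchase price cited in the article
    hourly_rate = 2.50         # hypothetical cloud rental rate
    print(f"Break-even: {break_even_hours(purchase_price, hourly_rate):,.0f} hours")
    print("500 hours of training:", cheaper_option(purchase_price, hourly_rate, 500))
    print("8,000 hours of serving:", cheaper_option(purchase_price, hourly_rate, 8_000))
```

Under these assumptions, occasional workloads such as a short training run favor renting, while hardware that stays busy for thousands of hours can justify a purchase.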