What is embodied AI? How it powers autonomous systems

Embodied AI refers to artificial intelligence systems that can interact with and learn from their environments using a suite of technologies that include sensors, motors, machine learning and natural language processing. Some prominent examples of embodied artificial intelligence are autonomous vehicles, humanoid robots and drones.

Although the term embodied AI or embodied intelligence is relatively new, it's related to older ideas such as adaptive control systems, cybernetics and autonomous systems, some of which have been around for centuries. Embodiment also does not require a conventional body. For example, an autonomous security system using cameras and motion detectors does not have much of a physical body, but it can learn from how well its decisions mitigate security events on physical routers, hard drives and compute infrastructure, and adapt its monitoring strategies accordingly.

Embodied AI's ability to learn from its experience in the physical world sets it apart from cognitive AI, which learns from what people and data sources say about the world. Human cognitive intelligence characterizes how we summarize, abstract and synthesize stories about our experience of interacting in the physical world and with other humans, animals and machines. The stories we compose summarizing our understanding of these things are what cognitive AI processes.

Embodied AI's type of intelligence goes in a different direction that is more akin to a reflex than a concept: It learns to match its output to its sensory inputs.

Some kinds of embodied intelligence in the physical world span multiple bodies, such as swarms, flocks and herds of animals that synchronize their efforts. In embodied artificial intelligence, this kind of intelligence could apply to a swarm of drones, a fleet of vehicles in a warehouse or a collection of industrial control systems coordinating their efforts.

Embodied AI can respond to different kinds of sensory input, much as humans do with the five classic senses. It can, however, also use a multitude of senses outside human sensory experience. These abilities include detecting X-rays, ultraviolet and infrared light, and magnetic fields; knowing where things are using GPS; and understanding the performance of various enterprise systems or the inventory levels throughout a supply chain.

Many embodied AI systems, such as robots or autonomous cars, move, but movement is not a requirement. For example, an autonomous IT or security system might learn from the physical interactions of agents running on networking, storage and computing infrastructure that stays in place.

Elements of embodied AI systems

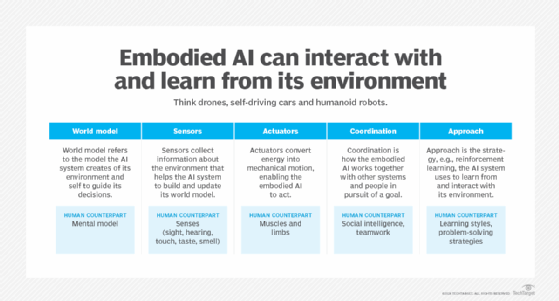

Embodied AI applies to any AI system that learns from interacting with its environment. More capable systems typically include many elements across the different dimensions of embodiment. Here are some of the most important ones:

- World model refers to the model the AI system creates of its environment and self, enabling it to make safer and more efficient decisions as it pursues its goal. Nvidia's Omniverse platform, for example, uses physics-based simulated world models to train more competent robotic and autonomous vehicle embodied AI systems.

- Sensors collect the information about the environment that helps the AI system build and update the world model.

- Actuators are the devices in the AI system that convert energy into mechanical motion, enabling the embodied AI to move through its environment and to change that environment or itself.

- Coordination refers to how the system works together with other systems and people.

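- Approach refers to the strategy that the AI system uses to learn from interacting with its environment. For example, reinforcement learning learns a policy that maximizes cumulative reward, while active inference seeks to minimize free energy, which is roughly a measure of how surprising the system's sensory inputs are given its world model.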
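As a rough illustration of how these elements fit together, the following Python sketch wires a sensor, an actuator and a reinforcement learning approach into one loop. It is a toy under stated assumptions, not a description of any real product: the `Room` class, the `TARGET` temperature, the reward function and all parameter values are hypothetical, and tabular Q-learning is used simply because it is one of the simplest reinforcement learning methods. The table of learned values stands in for a very crude world model.

```python
import random

TARGET = 21          # hypothetical comfort temperature
ACTIONS = (0, 1)     # 0 = heater off, 1 = heater on


class Room:
    """Hypothetical toy environment: a room that cools unless heated."""

    def __init__(self, temp=15):
        self.temp = temp

    def sense(self):
        """Sensor: read the current temperature."""
        return self.temp

    def act(self, heat_on):
        """Actuator: heating adds one degree, otherwise the room loses one."""
        self.temp += 1 if heat_on else -1
        self.temp = max(10, min(30, self.temp))  # keep within 10..30


def reward(temp):
    """Closer to the target is better; 0 is the best possible reward."""
    return -abs(temp - TARGET)


def train(episodes=200, steps=50, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn action values by trial and error (tabular Q-learning)."""
    rng = random.Random(seed)
    q = {(t, a): 0.0 for t in range(10, 31) for a in ACTIONS}
    for _ in range(episodes):
        room = Room(temp=rng.randint(10, 30))
        for _ in range(steps):
            state = room.sense()
            # Epsilon-greedy: mostly exploit what was learned, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            room.act(action)
            next_state = room.sense()
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate toward the observed reward plus discounted future value.
            target = reward(next_state) + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
    return q


def policy(q, temp):
    """Pick the action with the highest learned value for this temperature."""
    return max(ACTIONS, key=lambda a: q[(temp, a)])


if __name__ == "__main__":
    learned = train()
    for temp in (12, 20, 22, 28):
        print(temp, "->", "heat on" if policy(learned, temp) else "heat off")
```

The system is never told that heating raises the temperature. It discovers that relationship only by acting and sensing the result, which is the sense in which embodied AI learns from its environment rather than from descriptions of it.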

Spectrum of embodiment

As noted above, there is a spectrum of AI embodiment across different dimensions. At one end is something akin to humans, who have five senses and can move, effect complex changes, reason and adapt to the environment. At the other end is an autonomous building control system that might be designed only to learn how to optimize heating and cooling settings to keep occupants happy and reduce energy consumption. Here are some dimensions of embodiment to consider:

- Modalities refer to the number of senses and actuators the intelligence uses to perceive and act on its environment. Humans have five senses and hundreds of muscles. An AI system might just use one modality -- for example, an audio modality for listening or a special scientific instrument designed to improve drug discovery that is outside direct human experience.

- Mobility in humans is multidimensional and complex, especially when you factor in our ability to use machines to move, which gives us great flexibility. Humans can fly planes, drive cars, operate construction equipment and move pallets across a warehouse. Robots or building control systems might sit in place and have a single movement that does one thing well.

- World models, as noted, are critical to embodied intelligence. Humans can quickly shift across different mental models describing physics, social interactions, finance and enterprise systems. Simpler systems might see good performance through learning a model for doing one thing.

- Coordination has an internal and an external side. Internally, it is the ability to integrate cognitive and motor processes such as physical actions, reasoning and sensory inputs into a coherent response. Externally, it is the ability to work together with other systems and people. Humans have learned to broadly coordinate across families, governments, businesses and teams. Simple embodied AI systems might learn to work on their own.

Potential use cases of embodied AI

Here are some of the potential use cases of embodied AI:

- Humanoid robots in factories and homes.

- Autonomous vehicles.

- Autonomous mobile robots for moving goods around warehouses and factories.

- Factory automation improvements.

- Autonomous security systems that learn how their decisions improve networks, compute and storage.

- Supply chain management tools that automatically optimize product distribution.

- Chatbots that learn how their content and tone of voice improve customer experience.

The history of embodied AI

The term embodied AI is relatively new, but the fundamental concept of adaptive control systems dates back centuries. In the early days, it was all about designing analog control systems that could learn from their decisions. These days, the focus is on how neural networks can create better representations of the physical world.

1788

Adaptive control. James Watt adapted the centrifugal governor to steam engines, using mechanical feedback to regulate the flow of steam and hold engine speed steady. The design helped power the Industrial Revolution.

1943

Analog feedback. Norbert Wiener integrated a novel analog feedback system to improve the control of anti-aircraft guns in response to external stimuli.

1947

Cybernetics. Wiener and associates coined the term cybernetics to characterize the science of control in humans and machines. The term was a nod to the Greek word for "steersman."

1950

Cybernetic tortoise. U.K. researchers developed a turtlelike robot to study and improve how a robot could move around its environment.

1956

Adaptive business. Stafford Beer persuaded management at United Steel to fund a management cybernetics computer.

1966

Shakey the robot. Stanford Research Institute researchers began developing Shakey, a mobile robot that could perceive its surroundings and reason about the consequences of its actions.

1971

Scaling cybernetics. Beer helped Chile build a cybernetic system for managing the country's economy. The project was scrapped after a coup.

1973

Humanoids. Japanese researchers developed WABOT-1, the first humanoid robot.

1988

Autonomous vehicles. The autonomous vehicle system ALVINN, developed at Carnegie Mellon University, used a neural network to learn to steer from camera images of the road.

1991

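Intelligence without representation. Rodney Brooks published a paper on a "behavior-based robotics" approach to AI, which argued that intelligence can be built up from simple behaviors that interact directly with the world rather than from a central symbolic model of it.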

2004

DARPA Grand Challenge. The U.S. Defense Advanced Research Projects Agency hosted a competition to develop autonomous systems that could drive around the desert. This renewed interest in autonomous systems.

2015

Semantic segmentation. Researchers developed SegNet, an image analysis technique that used neural networks to decipher the meaning of visual data to improve autonomous systems.

2018

Simulation. Wayve researchers developed a new approach to help autonomous cars learn from simulation.

2020

Autonomous driving informed by feedback. Wayve researchers developed a new AI approach to learn from real-world experience with less reliance on pretrained models.

2023

Vision-language-action models. Wayve researchers developed new models that help cars communicate their interpretation of the world to humans. The first vision-language-action model that could simultaneously drive a car and converse in language opened up many new controllability and interpretability opportunities.

What's next for embodied AI

Embodied AI is a work in progress. What will its future hold? It will certainly be informed by improvements in generative AI, which can help interpret the stories humans tell about the world. However, embodied AI will also benefit from improvements to the sensors it uses to directly interpret the world and understand the impact of its decisions on the environment and itself.

The costs of sensors and compute are both coming down, and researchers are developing better algorithms for interpreting and adapting to the impact of embodied AI's decisions.

To be clear, we are still early in developing adaptable AI systems that learn from their environment. Progress will require innovations in neural network architectures for representing and learning about physical phenomena, as well as solutions to the many challenges associated with getting multiple agents to work together to achieve a goal.