AI deployments gone wrong: The fallout and lessons learned

AI's deployment landscape is littered with good intentions, crushed projects and unintended consequences due to misaligned business goals, mistrust in AI and weak management.

When AI implementations fail, the impact often ripples across the enterprise, creating losses that extend well beyond a single system. These failures rarely occur in isolation. Managing the fallout -- and charting a path to recovery -- starts with understanding the risks involved and the steps needed to address them.

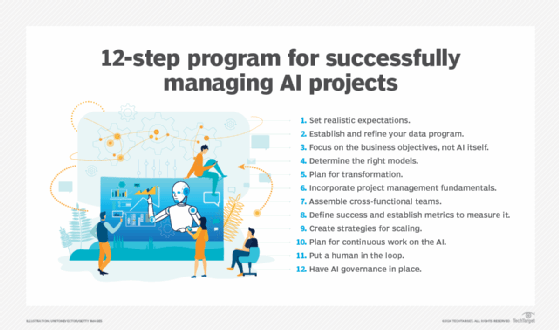

AI initiatives tend to break down in predictable ways. Some projects falter when real-world data and user behavior expose a model's blind spots; others never move beyond the pilot stage. Successful projects depend on clear business objectives, measurable ROI and strong governance. But as companies push toward autonomous, agentic AI systems in pursuit of measurable business outcomes, postmortems of failed deployments point to the usual suspects: an unclear or ill-defined business case, weak change management and a lack of trust in AI-generated output.

"You have to start with what problem you are trying to solve," said Mark Beccue, AI principal analyst at Omdia. "And what happens a lot with failures in AI is misalignment in that first step."

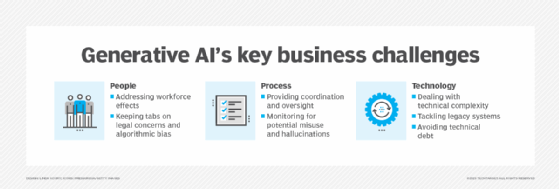

AI is an extremely disruptive, fast-moving technology, Beccue noted. Many enterprises view generative AI (GenAI) as a more centralized area of transformation, unlike the specialized tools that solve specific problems with predictive analytics. Responsibility often falls to IT departments because these teams excel at integrating disparate aspects of the business, such as digital transformation and compliance.

High cost of failure

An AI initiative should begin with an internal application before tackling higher-risk, customer-facing applications. Many companies took the high-risk plunge -- and paid a price. High-profile and lesser-known deployments occupy the graveyard of AI implementation failures. The following use cases expose what went wrong, the financial and reputational fallout, the lessons learned and how the business responded.

Drive-thru disasters

Fast-food chains are testing AI-powered voice ordering to cut labor costs, but early rollouts have hit a few speed bumps. Yum! Brands began piloting AI-powered voice-ordering systems at U.S. Taco Bell drive-thrus in 2023, expanding to more than 100 locations in 13 states by mid-2024. The initiative aimed to free up staff, accelerate order-taking and optimize back-of-the-house operations. But the Voice AI system misinterpreted customer orders in noisy, high-traffic environments.

In one viral incident, a customer ordered 18,000 cups of water, which the AI system dutifully entered. Customers also reported repeated upselling prompts, such as being offered items after already ordering them, which suggests gaps in contextual awareness and conversational state management.
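The kind of guardrail that appears to have been missing can be illustrated in a few lines of code. The following Python sketch is hypothetical and does not reflect how the Taco Bell system or any vendor's product works. The `OrderState` class, the `MAX_QTY_PER_ITEM` limit of 20 and the method names are all assumptions made for illustration. It shows two ideas: flagging implausible quantities for a human employee instead of entering them, and tracking what has already been ordered so the system does not offer it again.

```python
from dataclasses import dataclass, field

# Hypothetical sanity limit; a real deployment would tune this per menu item.
MAX_QTY_PER_ITEM = 20


@dataclass
class OrderState:
    """Tracks one drive-thru conversation (illustrative only)."""
    items: dict[str, int] = field(default_factory=dict)
    needs_human: bool = False

    def add_item(self, name: str, qty: int) -> bool:
        """Add an item, or flag the order for staff if the quantity is implausible."""
        new_total = self.items.get(name, 0) + qty
        if qty < 1 or new_total > MAX_QTY_PER_ITEM:
            self.needs_human = True  # hand off to an employee; do not enter the item
            return False
        self.items[name] = new_total
        return True

    def upsell_candidates(self, suggestions: list[str]) -> list[str]:
        """Filter out suggestions the customer has already ordered."""
        return [s for s in suggestions if s not in self.items]


order = OrderState()
order.add_item("taco", 2)
order.add_item("water", 18000)                    # rejected and flagged for staff
print(order.needs_human)                          # True
print(order.upsell_candidates(["taco", "soda"]))  # ['soda']
```

A check like this does not make speech recognition more accurate. It only limits how far a misheard or mischievous order can travel before a person sees it.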

Despite well-documented risks around accuracy, customer frustration and brand impact, Yum! Brands, which also owns KFC, Pizza Hut and Habit Burger & Grill, continues to invest in AI technologies. In February 2025, the company announced Byte by Yum!, a SaaS platform that supports core operational functions, including online and mobile app ordering, kitchen and delivery efficiency, and inventory and labor management.

The following month, Yum! Brands unveiled a strategic partnership with Nvidia to deploy its AI automated voice order-taking agents for drive-thrus and contact centers, alongside other AI initiatives. While earlier pilots exposed operational and reputational challenges, executives report that these systems, now operating at 300 to 500 locations, have processed more than 2 million orders even as questions remain around consistency, customer experience and long-term ROI.

McDonald's faced similar issues with its AI-powered voice technology, which it tested at roughly 100 locations globally. In October 2021, the fast-food giant partnered with IBM, which agreed to acquire McD Tech Labs (formerly Apprente, an AI voice technology startup) to develop and test an AI-based automated order-taking (AOT) technology, aligning with the natural language processing capabilities of the IBM Watson ecosystem.

Like the Taco Bell system, the AOT pilot project encountered problems with different dialects and failed to meet accuracy levels. McDonald's ended the AOT partnership with IBM and stopped testing the technology in restaurants in July 2024. As part of a broader AI strategy, McDonald's continues to explore AI-powered voice ordering and is investing in AI-driven tools, including Google Cloud services, to support predictive maintenance and optimize restaurant workflows.

Hiring horrors

McDonald's cooked up another high-profile AI misstep in mid-2025 when its McHire.com platform, powered by Paradox.ai (since acquired by Workday), potentially exposed personal data for about 64 million applicants. Security researchers found that a test administrator account with access to applicant data was protected only by a default username and password ("123456"). The data included transcripts from the hiring chatbot Olivia and other sensitive personal data. No Social Security information was accessed, according to Paradox.ai.

In some cases, businesses are choosing to implement AI tools instead of proven core technologies, leading to failed AI deployments and security stacks, reported Jacob Williams (aka MalwareJake), an enterprise risk management expert and vice president of research and development at security consultancy Hunter Strategy. In his blog "The Looming 2026 Challenge: Failed AI Deployments," Williams warned that businesses and security teams can be left exposed when proven tools are retired, and leaders funnel funds into "underwhelming AI projects" that fail to deliver.

Bias and blind spots

AI model training data containing harmful bias against demographic groups or gaps that cause algorithmic blind spots can result in significant financial and reputational damage.

Amazon trained its AI resume-screening tool on historical data and patterns collected over 10 years. Male job candidates dominated the training data for technology positions. As a result, the AI recruiting tool, which scored candidates on a five-star scale, encoded gender bias, penalizing resumes that included the word "women's," as first reported by Reuters. After years of research and development and an internal effort to neutralize gender bias in model behavior and underlying data, Amazon disbanded the team and abandoned the AI recruiting system before full deployment in 2018.
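One common way to surface this kind of skew before deployment is to compare selection rates across groups. The sketch below is a minimal illustration, not Amazon's method; the function names and the sample data are invented. It computes each group's selection rate and the ratio of the lowest rate to the highest. A frequently cited rule of thumb in U.S. employment practice, the four-fifths rule, treats a ratio below 0.8 as a signal that warrants closer review.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: share of candidates selected} from (group, selected) pairs."""
    totals, picked = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            picked[group] += 1
    return {group: picked[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("No candidates were selected in any group.")
    return min(rates.values()) / highest


# Invented sample: 10 candidates per group, with 6 and 3 selected respectively.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(sample)
ratio = impact_ratio(rates)
print(rates)
print(ratio < 0.8)  # True: below the four-fifths threshold, so flag for review
```

A low ratio does not prove a model is biased, and a passing ratio does not prove it is fair. The check is a cheap early warning that prompts a closer look at the training data.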

Zillow's AI-powered home-pricing model, used in its iBuying service Zillow Offers, which launched in 2018, suffered from algorithmic blind spots and flawed assumptions, and it struggled with market volatility during the COVID-19 pandemic. The system, which enabled Zillow to act as the primary purchaser and seller of homes, failed to account for rapid price swings across local markets, leading Zillow to overvalue properties in certain regions. The miscalculations resulted in more than $500 million in losses, prompting Zillow to exit the iBuying business in 2021. The closure of Zillow Offers resulted in a 25% reduction in Zillow's workforce.

Lessons learned

Flawed training data can embed and scale poor-quality or biased AI model outputs, damaging brand reputation, eroding trust and driving away customers. Far from rare, these failures are a recurring pattern in enterprise AI deployments. While many business leaders focus on preventing high-visibility AI failures, such as publicized errors in decision-making or chatbots generating racist or offensive content, less obvious risks often go unnoticed.

MIT researchers reviewed more than 300 publicly disclosed AI implementations in 2025 and found that most had yet to deliver a measurable profit-and-loss impact. Just 5% of the integrated AI pilots studied generated millions of dollars in value. But some projects were still in the proof-of-concept stage, which could account for the lack of P&L data.

Many projects stall in a proof-of-concept or pilot stage, leaving IT, data science and business teams with no clear accountability, economic model or scaling plan, and with data problems ranging from harmful bias to broken pipelines. "If you ask companies where they stumble in their AI efforts, the first thing is to make sure they are focusing on the right problem," Beccue said. "But the next biggest issue is data." Challenges can include data that's unreadable, biased, poorly formatted or trapped in silos.

Other commonly cited AI obstacles, including model quality, data limitations, legal issues and risk, aren't the main reasons AI initiatives stall, according to MIT's survey "The GenAI Divide: State of AI in Business 2025." The real problem is execution. Most AI tools fail to learn over time and remain poorly integrated into day-to-day workflows. The findings also challenged a popular assumption among enterprises: Businesses that attempted to build AI tools entirely in-house were twice as likely to fail as those that relied on external platforms.

Investment in AI implementations requires human-in-the-loop controls, comprehensive testing and assurance that AI tools align with business and ethical goals, not just cost reductions. Yet AI investments are still often viewed as a technology challenge for the CIO rather than a business issue that requires leadership across the organization.

"Tech alone just isn't enough," said Eric Buesing, a partner at McKinsey & Company. Scaling AI depends as much on change management as on the technology itself. That includes building trust among employees and customers, aligning incentives to succeed and preparing organizations for new roles and skills. Without that foundation, AI deployments can fail to deliver real impact.

"Adoption, efficiency and growth are the impact," Buesing advised. "And that change doesn't happen overnight. In many cases, the change management is the limiting factor for organizations moving from pilots to scale."

Kathleen Richards is a freelance journalist and industry veteran. She's a former features editor for TechTarget's Information Security magazine.