Evaluate model options for enterprise AI use cases

To successfully implement AI initiatives, enterprises must understand which AI models will best fit their business use cases. Unpack common forms of AI and best practices.

A near-universal technology belief among enterprises is that AI will play an important -- and rapidly growing -- role in business. Many have already started to experiment with AI, with mixed results.

Although the uncertainties created by early efforts have made senior management wary, companies aren't yet willing to put AI aside. Successful AI initiatives start by recognizing that there are multiple types of AI beyond generative AI, and often only one will be right for a given use case.

Understanding common forms of AI

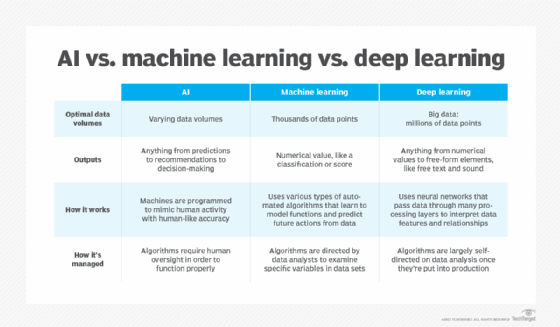

To understand AI's applications for enterprise use cases, it's helpful to be familiar with four key terms: machine learning (ML), deep learning, simple AI and generative AI.

In ML, an algorithm is trained to make predictions or decisions based on repositories of data and, after deployment, ongoing information and actions from users. ML features are often incorporated into business tools such as analytics and operations support. ML is the most common form of AI, both in terms of the number of products in use and the number of users.
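As a rough illustration of what "trained to make predictions" means, the sketch below fits a straight line to a handful of historical data points and uses it to forecast a new value. The data, the `fit_line` and `predict` names, and the ad-spend scenario are all made up for this example; real ML features in business tools use far richer models and data.

```python
# Minimal sketch: "training" here means estimating parameters from past data.
# All numbers are invented for illustration.

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted parameters to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical history: monthly ad spend (in $1,000s) vs. units sold.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units_sold = [12.0, 19.0, 31.0, 42.0, 48.0]

model = fit_line(ad_spend, units_sold)   # training step
forecast = predict(model, 6.0)           # prediction for an unseen input
```

The "ongoing information" mentioned above would correspond to appending new observations to the lists and refitting.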

Deep learning is a form of ML that involves training a model to analyze information -- sometimes broad public data, but often also private data -- using complex neural networks. Most generative AI models rely on deep learning, and deep learning applications and tools are often grouped with generative AI.

Simple AI uses rule-based systems or inference engines to automate basic tasks. The rules come from cooperation between a subject matter expert, who provides guidance, and a knowledge engineer, who builds the model. Like ML, simple AI is usually integrated with other software to improve that software's operation, and it is also a common form of AI.
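To make the rule-based idea concrete, here is a minimal sketch of a forward-chaining inference engine. The rules and fact names are hypothetical, standing in for guidance a subject matter expert might provide; commercial products implement this far more elaborately.

```python
# Hypothetical rules for order handling.
# Each rule: (set of facts that must all hold, fact to conclude).
RULES = [
    ({"order_over_10000"}, "needs_manager_approval"),
    ({"new_customer", "needs_manager_approval"}, "run_credit_check"),
    ({"item_out_of_stock"}, "notify_purchasing"),
]


def infer(initial_facts, rules):
    """Forward chaining: apply rules until no new facts can be derived."""
    facts = set(initial_facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule if all its conditions are known facts.
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


derived = infer({"order_over_10000", "new_customer"}, RULES)
```

Nothing here is learned from data: the behavior is fixed by the rules, which is why this kind of AI is predictable but limited.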

Generative AI is a form of AI typically based on deep learning. Building a generative AI model involves GPU-based training on a large data set, often using a type of model known as a transformer to build responses and optimize results. Large language models (LLMs) are a popular type of generative AI model that can create natural language responses to questions, but generative models are also capable of performing tasks such as creating images and other forms of data.

Generative AI predicts the optimal response to a question or prompt based on its training data, sometimes using an adversarial process to weed out incorrect responses. While this approach is highly flexible, it is also subject to errors, often referred to as hallucinations. Considerable effort is currently being spent on attempts to improve generative AI's error rate.

Generative AI in the enterprise

Recently, generative AI has been the hottest form of AI, largely because of its ability to generate readily understandable information.

Well-known generative AI tools include widely used LLMs trained on broad public data sets, such as OpenAI's ChatGPT, based on the GPT language model, and Google Bard. There is also a range of generative AI tools designed for image and code generation, among other specialized applications, many of which are also trained on public information. Adobe Sensei, Amazon CodeWhisperer, OpenAI's Dall-E and GitHub Copilot are examples of this type of generative AI.

Generative AI for private data

Among the most active and fast-changing spaces in generative AI is the realm of private data. VMware's recently announced private AI partnership with Nvidia is one prominent example; others include Amazon SageMaker's model generator and PwC's ChatPwC.

All the major public cloud providers -- Amazon, Google, IBM, Microsoft, Oracle and Salesforce -- offer cloud-based AI toolkits to facilitate building models that use private data. Some enterprises have expressed concerns over the sharing of company data with public generative AI tools such as ChatGPT. These cloud-based tools, meanwhile, pose no greater risk than any applications involving public cloud storage of company data.

Amid growing efforts to bring generative AI to private company data, new specialized and private AI tools are emerging, many based on open source LLMs. Companies that want to develop their own AI models might want to look at these tools and watch for development of new capabilities.

Deep learning models are the basis for many custom AI projects classified as generative AI. There are a number of powerful open source models and frameworks available, including the following:

- Apache MXNet.

- Keras.

- PyTorch.

- TensorFlow.

- TFLearn.

- Theano.

There are also specialized open source libraries and models, such as Fast.ai and Hugging Face Transformers for natural language processing, Stable Diffusion for image generation, and Detectron2 and OpenCV for image processing. These tools are used to build models and are suitable only for organizations whose staff includes developers very familiar with AI and ML principles, open source coding, and ML architectures. Many enterprises will find it difficult to use these tools in the absence of such expertise.

When integrated into broader software, ML tools can add an almost-human level of evaluation to applications. ML is commonly used to improve business analytics as well as basic image processing for recognition of real-world conditions. Self-driving and assisted-driving functions in vehicles, for example, are based on ML.

Simple AI tools are now almost totally integrated with other products, which means it will likely be challenging to adopt them unless you already use a product with AI features or are willing to change tools to employ one that does. In addition, AI features of this type are primitive in comparison to what's available in the other three model categories, so there's a risk of expecting too much.

Best practices for adopting AI in the enterprise

So, what should a company interested in adopting AI look for in terms of overall models and specific tools?

Public generative AI tools such as ChatGPT and Bard are useful for writing ad copy, creating simple documents and gathering information. But treat them as junior staff, always subjecting their output to a review by a senior person.
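One way to enforce that review step in software is a simple approval gate: AI-generated text cannot be released until a named person signs off. The sketch below is only an illustration under that assumption; the `Draft`, `review` and `publish` names and the sample text are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Draft:
    """AI-generated text that has not yet been approved for use."""
    text: str
    approved_by: Optional[str] = None
    notes: List[str] = field(default_factory=list)


def review(draft: Draft, reviewer: str, approve: bool, note: str = "") -> Draft:
    """Record a human reviewer's decision on a draft."""
    if note:
        draft.notes.append(f"{reviewer}: {note}")
    draft.approved_by = reviewer if approve else None
    return draft


def publish(draft: Draft) -> str:
    """Release text only if a named person has signed off."""
    if draft.approved_by is None:
        raise PermissionError("AI output requires human approval before use")
    return draft.text


# Stand-in for text returned by a generative AI tool.
draft = Draft(text="Our new widget cuts setup time in half.")

try:
    publish(draft)          # attempted release without review
    blocked = False
except PermissionError:
    blocked = True

review(draft, reviewer="senior.editor", approve=True,
       note="Verified claim with product team")
released = publish(draft)   # succeeds once a reviewer has approved
```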

Either private generative AI tools or deep learning features integrated into analytics software can handle business analytics applications. IBM's AI tools are highly regarded in this area, and vendors such as VMware are moving into the same space with a more general approach. Any generative AI tool offered as a web service on the public cloud is well suited to this, whether you integrate AI into your own software or use it as part of an analytics package you already have.

For real-time control, consider ML tools that can integrate with IoT devices, event processing or log analysis. Simple ML is best used as part of a broader application, but deep learning tools in open source form can help build more complex applications.

Be sure to put any tools you develop or select for real-time applications through extensive testing. A problem with either the tool or with your development can have serious consequences in time-sensitive applications, such as process control or security and operations monitoring.

Generating specialized content, such as code and images, is currently the most difficult task to match to a model and approach. For code generation, users tend to agree that the best approach is to use a co-pilot-style AI coding tool -- GitHub Copilot is a leading example -- with specialized training and a UI compatible with common integrated development environments, such as Visual Studio Code.

For more general content creation, specialized AI writing tools are strong contenders. But as mentioned above, they require careful human review to manage hallucinations. If it's important to build content based on your own data, then you'll need a generative model that you can train on your own document resources -- a form of private generative AI.

As a final warning, AI is not sentient -- despite what some have speculated. Generative AI and other deep learning models can and do generate errors in their responses. It's critical not to integrate AI into your enterprise IT environment without implementing sufficient controls over how the results are used. Failing to do so will almost certainly discredit the project, the project advocates and perhaps AI overall within your business.