Generative AI security risks: Best practices for enterprises

Despite its benefits, generative AI poses numerous -- and potentially costly -- security challenges for companies. Review possible threats and best practices to mitigate risks.

Since ChatGPT's public release in late 2022, generative AI has changed how organizations and individuals conduct business globally. GenAI tools support a wide range of business needs, such as writing marketing content, improving customer service, generating source code for software applications and producing business reports. The technology's numerous benefits -- especially reduced costs and enhanced work speed and quality -- have encouraged enterprises to use these tools in their work.

However, as with any new technology, implementing GenAI without careful consideration of cybersecurity issues could allow threat actors to exploit vulnerabilities in an organization's systems and processes. Effectively managing generative AI security risks requires a sound strategy and teamwork among chief information security officers, security teams and AI implementation groups.

Why generative AI poses security risks for businesses

GenAI is a major shift in technology. It can answer business questions in natural language, and it surpasses traditional search engines by answering queries directly instead of providing a list of links. But GenAI creates potential security issues that cybersecurity and AI leaders must address to avoid leaving implementations vulnerable to internal missteps or external attacks.

In addition, bad actors can use GenAI themselves to target businesses. Traditional security measures often fall short against cyberattacks enhanced by AI that enable attackers to execute breaches with little technical understanding while still achieving high-quality results. For example, GenAI features in development platforms such as GitHub Copilot and Cursor can be used to create functional malware and attack code. The speed and scale of GenAI tools offer both business benefits and the potential for misuse.

Key generative AI security risks for businesses

Understanding the potential risks of using GenAI in an enterprise context is crucial to benefiting from this technology while maintaining regulatory compliance and avoiding security breaches. Keep the following risks in mind when planning a generative AI deployment.

1. Sharing data with unauthorized third parties

In enterprise settings, employees should exercise caution when sharing corporate data with third-party entities, such as AI chatbots.

Employees often offload productivity tasks to an AI system to enhance efficiency, but they might overlook the risks associated with transferring sensitive information -- such as personally identifiable information (PII), medical records and financial details -- to third-party systems. For example, the Dutch Data Protection Authority discovered serious data breaches resulting from employees' use of conversational AI systems to summarize patient reports and obtain quick responses to work-related questions.

OpenAI's standard policy for ChatGPT is to keep users' records for 30 days to monitor for possible abuse, even if a user chooses to turn off chat history. For companies that integrate ChatGPT into their business processes, this means employees' ChatGPT accounts might contain sensitive information if they enable chat history. Thus, a threat actor who successfully compromises employees' ChatGPT accounts could potentially access any sensitive data included in those queries and the AI's responses.

2. Security vulnerabilities in AI tools

Like any other software, GenAI tools can contain vulnerabilities that expose companies to cyberthreats. Similarly, the data sets used to train the underlying machine learning model that powers the generative AI tool might also be vulnerable to security issues.

For instance, Protect AI's Huntr bug bounty program has documented more than three dozen security vulnerabilities across various open source AI and machine learning models. The affected tools -- including widely used tools such as ChuanhuChatGPT, Lunary and LocalAI -- contained critical flaws that enable remote code execution and sensitive information theft.

The two most severe vulnerabilities targeted Lunary, a production toolkit for LLMs.

- CVE-2024-7474. With a Common Vulnerability Scoring System (CVSS) base score of 9.1, this vulnerability allows authenticated users to view or delete external user accounts by manipulating the 'id' parameter in request URLs. Successful exploitation results in unauthorized access to sensitive data and potential data loss.

- CVE-2024-7475. A second critical flaw in lunary-ai/lunary version 1.3.2 permits attackers to modify Security Assertion Markup Language authentication configurations without proper authorization, which can compromise the entire authentication framework. This flaw also has a CVSS base score of 9.1.

3. Data poisoning and theft

Generative AI tools must be fed with massive amounts of data to work properly. This training data comes from various sources, many of which are publicly available on the internet and, in some cases, could include an enterprise's previous interactions with clients.

In a data poisoning attack, threat actors could manipulate the pre-training phase of the AI model's development by injecting malicious information into the training data set. These attacks force AI systems to produce incorrect outputs or behave according to the attacker's objectives, compromising the reliability of AI-dependent business processes.

Another data-related risk involves threat actors stealing the data set used to train a GenAI model. Without sufficient encryption and controls around data access, any sensitive information contained in a model's training data could become visible to attackers who obtain the data set.

4. Violating data privacy and compliance obligations

Employees using external GenAI tools or training in-house ones can inadvertently create data privacy and regulatory compliance issues. Organizations should create a strong GenAI security plan that all employees can follow to avoid the following:

- Leaking sensitive data. Using sensitive personal information to train GenAI models or uploading it into a chatbot can violate data privacy and protection regulations such as GDPR, the Payment Card Industry Data Security Standard and HIPAA, leading to fines and legal consequences.

- Breaching intellectual property and copyright laws. AI-powered tools are trained on massive amounts of data and are typically unable to accurately provide specific sources for their responses. Some of that training data might include copyrighted materials, such as books, magazines and academic journals. Using AI output based on copyrighted works without citation could subject enterprises to legal fines from the copyright holders.

- Not disclosing chatbot use. Many enterprises have begun integrating ChatGPT and other GenAI tools into their applications to answer customer inquiries. But doing so without informing customers in advance risks penalties under statutes such as California's bot disclosure law.

5. Deepfake content creation

Malicious actors use GenAI to create convincing videos, images and audio recordings that mimic real individuals, often to deceive or manipulate. These fabricated materials enable sophisticated fraud schemes, as demonstrated by the Hong Kong incident, in which attackers used deepfake video technology to steal $25 million from a multinational corporation through impersonation.

6. Automated social engineering

GenAI systems produce convincing phishing emails at an unprecedented scale, personalizing attacks based on publicly available information about the targets. The quality rivals that of human-crafted communications, making detection much more challenging for both employees and IT security systems.

Best practices to secure enterprise use of generative AI

To address the numerous security risks associated with GenAI, enterprises should keep the following strategies in mind when implementing generative AI tools.

1. Classify, anonymize and encrypt data before building or integrating GenAI

Enterprises should classify their data before feeding it to chatbots or using it to train GenAI models. Determine which data is acceptable for those use cases, and do not share any other information with AI systems.

Likewise, anonymize sensitive data in training data sets to avoid revealing sensitive information. For example, when using patient data to train your machine learning models, anonymize names, contact information and any other data that can reveal their identity.

Encrypt data sets for AI models and all connections to them, and protect the organization's most sensitive data with robust security policies and controls.

2. Train employees on generative AI security risks and create internal usage policies

Employee training is the most critical protective measure to mitigate the risk of generative AI-related cyberattacks. To implement GenAI responsibly, organizations must educate employees about the risks associated with using this technology.

Organizations can set guidelines for GenAI use at work by developing a security and acceptable use policy. Although specifics will vary from organization to organization, a general best practice is to require human oversight. Don't automatically trust content generated by AI; humans should review and edit everything AI tools create.

AI use and security policies should specifically mention what data can and cannot be included in queries to chatbots. For example, developers should never feed intellectual property, copyrighted materials, PII or protected health information into AI tools.

3. Vet generative AI tools for security

Conduct security audits and regular penetration testing exercises against GenAI tools to identify security vulnerabilities before deploying them into production.

Security teams can also train AI tools to recognize and withstand attack attempts by feeding them with examples of cyberattacks. This reduces the likelihood that a hacker will successfully exploit an organization's AI systems.

4. Govern employees' access to sensitive work data

Apply the principle of least privilege within enterprise environments, permitting only authorized personnel to access AI training data sets and the underlying IT infrastructure.

Using an identity and access management tool can help centralize and control employees' access credentials and rights. Likewise, implementing multifactor authentication (MFA) can help safeguard AI systems and data access.

5. Ensure underlying networks and infrastructure are secure

Deploy AI systems on a dedicated network segment. Using a separate network segment with restricted access to host AI tools enhances both security and availability.

For organizations hosting AI tools in the cloud, select a reputable cloud provider that implements strict security controls and has valid compliance certifications. Ensure all connections to and from the cloud infrastructure are encrypted.

6. Monitor compliance requirements, including regularly auditing vendors

Compliance regulations are constantly evolving, and with the uptick in enterprise AI adoption, organizations will likely see more compliance requirements related to GenAI technology.

Enterprises should closely monitor compliance regulations affecting their industry for any changes related to the use of AI systems. As part of this process, when using AI tools from a third-party vendor, regularly review the vendor's security controls and vulnerability assessments. This helps ensure any security weaknesses in the vendor's systems do not traverse into the enterprise's IT environment.

7. Develop an AI data leak incident response plan

Organizations should create and maintain specific procedures for responding to AI-related security incidents. An effective AI incident response plan should document the following key areas:

- Detection and reporting. Monitor AI tool activity across the organization on an ongoing basis and develop simple processes that encourage employees to report suspicious actions promptly. It's essential to include an automated alert for unusual data transfers, as well as a clear escalation order for potential incidents.

- Containment. Once a data leak is detected, restrict access to whatever AI tools and platforms have been affected as soon as possible. The sooner you act, the less likely it is that further data might have been leaked. Plus, original evidence of the attack can typically be preserved.

- Investigation. A thorough investigation should be done to determine the type of information that has been leaked, such as customer data. The effect on the organization's operations and reputation should be assessed, and the timing and nature of the attack should be recorded.

- Remediation. Execute data recovery procedures where feasible, including requesting the removal of exposed information from AI platforms. Update security policies based on incident findings, and implement comprehensive staff retraining programs to prevent similar occurrences.

- Prevention. Strengthen preventive measures through enhanced access controls such as MFA, deployment of AI-specific data loss prevention tools and regular security audits of AI tool implementations.

Future of generative AI security in the enterprise

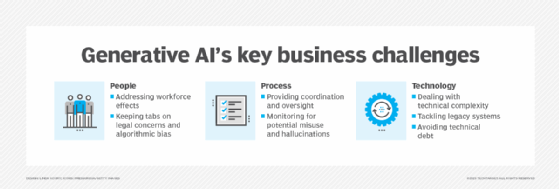

Using GenAI to support business operations is reshaping enterprise security, bringing both innovation and risk. As organizations continue to integrate GenAI into their infrastructure and systems, they must address the following challenges on an ongoing basis.

- Protecting sensitive data. AI systems might unintentionally expose confidential information through the retention of training data sets or prompt injection attacks. Companies like OpenAI have adopted data filtering mechanisms and enterprise-grade access controls. Organizations should develop data classification policies and apply tokenization to sensitive data inputs.

- AI governance. Enterprises should create comprehensive frameworks to ensure the responsible deployment of AI. For example, IBM's AI governance policies include bias detection algorithms and decision audit trails for automated processes. Financial institutions should use explainable AI for loan approvals, while healthcare organizations must establish oversight committees to review AI-assisted diagnoses before patient care decisions.

- Threat mitigation. Malicious actors exploit GenAI systems to aid them in creating sophisticated attacks, including voice cloning for executive impersonation and automated spear-phishing campaigns. Google's Advanced Threat Protection and Microsoft Defender integrate behavioral analysis to identify AI-generated malicious content. Organizations should deploy endpoint detection that recognizes synthetic media and establish verification protocols for high-stakes communications to ensure the integrity of these communications.

We should not forget that implementing GenAI in the business arena also requires combining encryption for data at rest and in transit, zero-trust access controls with regular permission audits and continuous monitoring of AI system outputs. The complex, evolving IT threat landscape requires adaptive security frameworks that treat AI systems as critical infrastructure requiring sophisticated protection strategies.

Editor's note: This article was updated in June 2025 to include additional best practices to manage generative AI security risks and to improve the reader experience.

Nihad A. Hassan is an independent cybersecurity consultant, expert in digital forensics and cyber open source intelligence, blogger, and book author. Hassan has been actively researching various areas of information security for more than 15 years and has developed numerous cybersecurity education courses and technical guides.