
How to become an MLOps engineer

Explore the key responsibilities and skills needed for a career in MLOps, which focuses on managing ML workflows throughout the model lifecycle.

Machine learning goes far beyond basic data analytics -- it can help businesses spot trends, find anomalies and resolve errors at scale. But implementing an ML model from concept to product is a challenging proposition.

A typical ML lifecycle involves gathering, preprocessing and ingesting data into an ML model that a developer then trains, tunes, deploys, monitors and analyzes. For enterprise AI initiatives, success with ML demands constant collaboration among data collection, data science, development and operations teams.

The demand for expertise in ML implementation has led to the emergence of ML operations (MLOps), a practice dedicated to moving ML models from inception to production. The technical experts who focus on organizing, coordinating, experimenting with and improving ML products and processes are known as MLOps engineers.

What is ML?

ML is a foundational technology in AI, the discipline that aims to simulate or model the way that humans think and learn using data-driven algorithms. ML models' ability to learn from their training data enables them to make predictions that can influence a business or its customers.

For example, a retail ML platform might use a given customer's purchase history and stated interests to learn what that customer likes, which then enables the company to make product offers to the customer that align with the customer's interests. In this example, the customer's purchase history and stated interests are part of the data driving the ML algorithm.

But ML use cases can also be far more sophisticated. A more complex example might involve a smart manufacturing system with many diverse sensors used to measure parameters of the system's operation, where an ML algorithm ingests the sensor data.

In this scenario, based on historical cause-and-effect patterns, the ML model can determine when certain sensor signals might indicate system problems, such as miscalibrations, quality or performance issues, or impending system failures. The ML algorithm can then generate reports and alerts for engineers, who can schedule downtime and preventive maintenance before a serious fault occurs.
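
The idea of flagging unusual sensor signals can be illustrated with a minimal sketch. It does not use a trained ML model. Instead, it swaps in a simple rolling z-score check as a stand-in. The function name `flag_anomalies`, the window size, the threshold and the sample temperature readings are all assumptions made for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate sharply from the preceding window.

    A rolling z-score is a simple statistical stand-in for a trained model;
    window and threshold are illustrative defaults, not recommended values.
    """
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical temperature readings with one obvious spike at index 6.
temps = [70.1, 70.3, 69.9, 70.2, 70.0, 70.1, 85.4, 70.2]
suspect_indices = flag_anomalies(temps)
```

In a production system, the flagged indices would feed the reports and alerts described above, and the detection logic would more likely be a model trained on historical fault data.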

What is MLOps?

As with many enterprise-class software platforms, there is a significant challenge involved in bringing ML platforms from inception to production -- and then managing and maintaining the platforms in production. MLOps is an emerging specialization designed to focus on ML platform deployment and ongoing support.

MLOps is primarily an operations discipline, and it often requires strong collaboration with varied stakeholders throughout the organization, including the following:

- Data scientists, who acquire and process the data used to train the ML algorithms and are often involved in initial model design and training.

- Developers, who design and build the ML software.

- IT operations staff, who provision, configure and maintain the IT infrastructure that will host the ML algorithms and data.

- Business stakeholders, who own or are responsible for the resulting ML platform and its impact on the business, such as generating revenue.

The goal of MLOps is to implement and operate an ML platform as a business product, rather than a kind of science experiment. MLOps creates a pipeline that brings stringent oversight and coordination to the ML lifecycle, which involves the following stages:

- Validate and prepare initial training and testing data sets.

- Ingest data into the model.

- Train and tune the model.

- Deploy the model in production.

- Secure any data that the model will process after deployment, such as customer purchase histories.

- Monitor the model for availability, performance and accuracy.

- Understand how and why the model works in the first place, a discipline known as explainability.
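
A few of these stages can be sketched as an ordered pipeline of small functions. This is a toy illustration under stated assumptions, not a real MLOps framework. The stage functions, the `raw_rows` input and the "model" (just an average purchase amount) are all hypothetical, and stages such as securing data, monitoring and explainability are omitted for brevity.

```python
from typing import Callable

# Each stage reads the shared state and returns a dict of updates to merge in.
Stage = Callable[[dict], dict]


def run_pipeline(stages: list[tuple[str, Stage]], context: dict) -> dict:
    """Run named stages in order and record which ones completed."""
    state = dict(context)
    completed = []
    for name, stage in stages:
        state.update(stage(state))
        completed.append(name)
    state["completed"] = completed
    return state


def validate(state: dict) -> dict:
    # Drop rows with missing values before training.
    return {"rows": [r for r in state["raw_rows"] if r["amount"] is not None]}


def train(state: dict) -> dict:
    # Placeholder "model": the mean purchase amount.
    amounts = [r["amount"] for r in state["rows"]]
    return {"model": {"mean_amount": sum(amounts) / len(amounts)}}


def deploy(state: dict) -> dict:
    # A real deployment would push an artifact; here we only set a flag.
    return {"deployed": True}


STAGES = [("validate", validate), ("train", train), ("deploy", deploy)]

result = run_pipeline(
    STAGES,
    {"raw_rows": [{"amount": 10.0}, {"amount": None}, {"amount": 30.0}]},
)
```

Keeping each stage as a separate, named step is what makes the process repeatable and auditable: the `completed` list records exactly which stages ran for a given release.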

MLOps can become especially important as organizations scale, which can involve developing and supporting dozens or even hundreds of ML platforms. A well-defined MLOps pipeline can improve ML release speed, enhance cross-team collaboration and strengthen regulatory adherence by documenting a repeatable ML release and management process.

What does an MLOps engineer do?

The operations specialty tasked with overseeing ML platform productization is called MLOps engineering.

MLOps engineers are highly skilled experts responsible for managing the workflow of an ML platform from design and development through production and beyond. They interact with developers, data scientists, operations staff and business leaders involved with the ML product. Their responsibilities are broad and fall into three areas: development, deployment, and management and monitoring.

From the development, or DevOps, perspective, MLOps engineers oversee ML models in the continuous integration/continuous delivery (CI/CD) pipeline. They review and approve feature requests, change requests and pull requests from the data science staff, and they ensure that testing succeeds and that all model artifacts are handled properly.

When it comes to deployment, MLOps engineers participate in training, tuning and testing the ML model, sometimes referred to as ML engineering. They deploy the ML model to production at the end of the CI/CD pipeline, employing tools such as Docker and Kubernetes to deploy ML components locally or in the cloud.

In terms of management and monitoring, MLOps engineers collaborate with other teams to refine the pipeline and correct faults in the workflow. To do so, they use monitoring tools to track metrics, such as resource use, error rates and response times, and set up reporting and alerting in response to issues or anomalies in the ML output. They also analyze reports and alerts along with monitoring data, log files and other metrics, and they create documentation on deployment, optimizations, changes and troubleshooting.
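
As a rough sketch of the alerting side of this work, the snippet below compares a snapshot of service metrics against fixed limits and returns alert messages. The metric names (`error_rate`, `p95_latency_ms`, `cpu_utilization`), the `Thresholds` class and the limit values are assumptions chosen for illustration. Real deployments would typically rely on a dedicated monitoring tool rather than hand-rolled checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Illustrative limits; appropriate values depend on the service."""
    max_error_rate: float = 0.05
    max_p95_latency_ms: float = 500.0
    max_cpu_utilization: float = 0.90


def check_metrics(metrics: dict, limits: Thresholds = Thresholds()) -> list[str]:
    """Return one alert message for each metric that exceeds its limit."""
    checks = [
        ("error_rate", limits.max_error_rate),
        ("p95_latency_ms", limits.max_p95_latency_ms),
        ("cpu_utilization", limits.max_cpu_utilization),
    ]
    alerts = []
    for name, limit in checks:
        value = metrics[name]
        if value > limit:
            alerts.append(f"{name}={value} exceeds limit {limit}")
    return alerts


# Hypothetical snapshot of a model-serving endpoint with a high error rate.
snapshot = {"error_rate": 0.12, "p95_latency_ms": 310.0, "cpu_utilization": 0.55}
alerts = check_metrics(snapshot)
```

The same pattern extends to model-quality metrics, such as prediction accuracy against labeled samples, which is where MLOps monitoring differs most from conventional application monitoring.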

ML engineering vs. MLOps engineering

Some organizations make a distinction between MLOps engineers and ML engineers. When such a distinction is made, ML engineers focus on building and training the ML model, while MLOps engineers focus on the workflows and pipelines needed to bring ML models to production. There can be significant overlap in these job responsibilities, and distinctions vary across organizations depending on the specifics of their projects and level of involvement with ML.

MLOps engineer skills and experience

MLOps engineers are the conduit between ML concepts and actual ML product deployment. They handle the complex technical, process and interpersonal mechanics of taking an ML platform from inception to deployment and ongoing maintenance.

Any MLOps engineer candidate would be expected to possess a broad suite of skills, including extensive experience in the following areas:

- Software development approaches and methodologies such as Agile, DevOps and CI/CD.

- Programming, especially Python, as well as foundational languages such as C++ or Java, and other popular data science languages such as R.

- Software testing and troubleshooting using Agile toolchains.

- Collaboration with diverse multidisciplinary teams and project stakeholders.

In some ways, this is similar to many other DevOps jobs. But the key to any MLOps role is a strong understanding of ML algorithms and models.

An MLOps engineer must be able to understand the ML model and its underlying data. This includes being able to explain how and why the model reaches its conclusions and knowing how to deploy and validate that model on an ongoing basis. Consequently, MLOps engineers require a complex skill set that encompasses programming and scripting, data science, statistical modeling, database construction and administration, and IT infrastructure and operations.

The entire field of ML and AI is still in a formative phase as AI products such as ChatGPT attract attention and provide tangible value to businesses. Consequently, the exact roles, responsibilities and skills associated with AI-related jobs are likely to differ from business to business and change over time. Job seekers should review the specific skill, experience and educational requirements for each job before applying for an MLOps engineer position.

How to become an MLOps engineer

Currently, there is no single pathway to becoming an MLOps engineer. This can open many opportunities for professional growth, but it can also cause confusion and frustration for employers and candidates alike.

It's fair to say that MLOps engineering is an advanced or senior role within the software development profession. It demands a broad array of skills that take time and investment to master. There is currently no formal educational requirement or standard for MLOps, but employers typically look for candidates with a degree and experience in related fields, including computer science, software engineering, data science, computational statistics or mathematics. The more of these areas where a candidate can demonstrate education and experience, the better.

From a more practical perspective, a successful MLOps engineering candidate will be able to demonstrate a core set of skills, such as the following:

- Knowledge of how and why ML models work -- typically the single most important element in MLOps.

- Ability to program, preferably in the languages used by the employer, and script for tasks such as provisioning, configuration and other automation.

- Ability to manage IT infrastructure, including servers, storage, networks and services.

- Ability to deploy and operate complex databases, such as SQL-based relational database systems.

- Knowledge of how to store, manage and protect data used to train and run ML platforms.

- Ability to deploy, monitor and manage software, preferably ML models.

It's worth noting that a successful candidate does not need to be highly experienced in all these areas. Many skills can be learned and refined through ongoing or directed learning and on-the-job experience.

The future of MLOps engineering

It can be difficult to speculate on the future of such a relatively new IT specialization, but ML practitioners at every level can consider some potential trends in MLOps engineering that might evolve over the next few years.

In the near term, it's likely that MLOps engineers will deepen their working relationship with data engineers to address data quality and bias issues that can potentially affect model behavior. Better data and clear bias mitigation strategies will improve organizations' compliance and regulatory postures, particularly as government legislation catches up with ML and AI technologies.

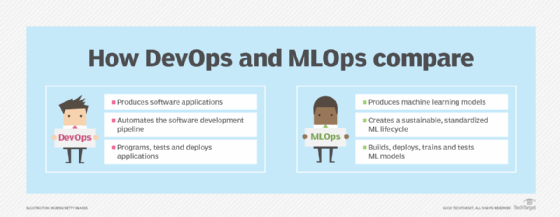

MLOps and DevOps might also converge. MLOps already draws heavily from basic DevOps principles. Data science and application development workflows could see more convergence, with better tools and platforms to bring more cross-team or cross-functional involvement in MLOps efforts. The future might see more scientists and nontechnical staff, such as business stakeholders, involved in ML. This could be further enhanced with the addition of automation in MLOps practices, which will bolster training, tuning, testing and deployment.

Data governance is already a well-established factor in business compliance. As ML becomes mainstream, ML models and their behavior might also become subject to governance principles, which MLOps engineers will need to understand and adopt.

Stephen J. Bigelow is a senior technology editor in TechTarget's Data Center and Virtualization Media Group.