
5 top RAG tools for 2025

The landscape of RAG tools and frameworks is constantly evolving, making it easier to combine generative AI services with enterprise data.

Organizations often want to combine generative AI with their own data to summarize content, synthesize information or answer questions.

AI chatbots don't automatically have access to enterprise data. Organizations that want to use their unstructured data with generative AI therefore often turn to retrieval-augmented generation (RAG). RAG is an AI framework that enables large language models (LLMs) to access external knowledge bases, which makes it key to building generative AI services that draw on enterprise content.

Although many organizations want to use RAG to enhance their generative AI services, finding the best RAG tool or framework to aid development and management can be difficult. Understanding five top contenders -- PostgreSQL with pgvector; Azure AI Search; GraphRAG; LightRAG; and txtAI -- can help organizations choose the right components to support RAG workflows in production environments.

What are the main components of RAG?

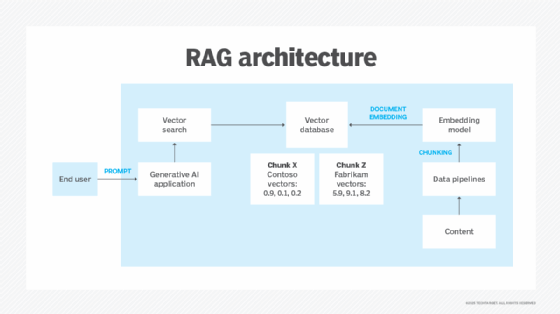

RAG makes unstructured data searchable using a search engine that retrieves the most relevant, proprietary content and provides that information to an LLM that generates output.

RAG architecture might vary depending on which framework or components an organization uses. Still, the main building blocks typically include four steps: embedding and vectorization, data chunking, search and similarity matching, and response generation.

1. Embedding and vectorization

The RAG system processes all unstructured data -- such as Word files, Excel sheets and text documents -- through an embedding model, which converts data into vector embeddings. Many RAG frameworks and vector databases have built-in features for this vectorization. These vectors are then stored in a vector store, such as Azure AI Search or PostgreSQL with pgvector.
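As a rough illustration of what converting data into vector embeddings means, the sketch below uses a toy hashing-based bag-of-words function. The name `toy_embed` and the dimension of 64 are assumptions made for this example; a production system would call a trained embedding model instead, and this toy version captures only word overlap, not meaning.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Map text to a fixed-length unit vector using the hashing trick.

    This is NOT a real embedding model. It only shows the shape of the
    data (a fixed-length list of floats) that a vector store holds.
    """
    vector = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # A stable hash (unlike the built-in hash()) keeps results reproducible.
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    # Normalize to unit length so vectors are comparable; leave all-zero input as is.
    return [v / norm for v in vector] if norm else vector


embedding = toy_embed("Quarterly sales report for the Nordic region")
print(len(embedding))  # 64
```

Whatever model is used, the important property is the same: every document, chunk and query ends up as a vector of the same length, so they can be compared with one another.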

2. Data chunking

The RAG system usually chunks data into smaller segments. For instance, a wiki page might be split into sub-topics, each represented as its own set of vectors. This leads to more precise search results than embedding the entire document as a single vector.
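A minimal sketch of chunking follows. It swaps in a simpler technique than the sub-topic splitting described above: fixed-size word windows with a small overlap, so that a sentence cut at a boundary still appears whole in one chunk. The function name `chunk_text` and the default sizes are assumptions for illustration.

```python
def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that overlap by `overlap` words."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, max_words=4, overlap=1):
    print(chunk)
```

Each chunk would then be embedded separately, which is what produces one vector per segment rather than one per document.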

3. Search and similarity matching

When a user submits a query, the prompt is converted into a vector using the same embedding model that was applied to the content. A similarity search is then run against the vector store to find the pieces of content most relevant to the prompt.
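The similarity search can be sketched with cosine similarity over an in-memory dictionary. The three-dimensional vectors, the chunk IDs and the `top_k` helper are made up for this example; real vector stores typically use approximate indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vector: list[float], store: dict[str, list[float]], k: int = 2):
    """Score every stored vector against the query and keep the k best."""
    scored = [(chunk_id, cosine_similarity(query_vector, vec))
              for chunk_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical store: chunk ID -> embedding of that chunk.
store = {
    "returns-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "careers-page": [0.0, 0.1, 0.9],
}
results = top_k([0.8, 0.2, 0.0], store, k=2)
print([chunk_id for chunk_id, _ in results])  # ['returns-policy', 'shipping-times']
```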

4. Response generation

The most relevant data chunks and the original prompt are passed to the LLM to generate a response for the user.
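One way to picture this step is as plain string assembly: the retrieved chunks are placed in the prompt ahead of the user's question. The `build_prompt` function and the instruction wording below are assumptions for illustration, and the call to an actual LLM is deliberately left out.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one prompt."""
    # Number the chunks so the model (and the reader) can refer back to them.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "What is the return window?",
    ["Returns are accepted within 30 days.", "Shipping takes 3-5 days."],
)
print(prompt)
```

The resulting string is what would be sent to the LLM, which then generates the answer shown to the user.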

5 RAG tools and frameworks to consider

From vector stores to knowledge graphs, RAG tools can help organizations access the frameworks and processes they need to build successful RAG systems. These five options provide a sampling for teams to consider.

Tools are listed in no particular order.

1. PostgreSQL with pgvector

Pgvector is an open source extension for PostgreSQL that lets users store and query vector embeddings directly in a database column. It's especially useful if the desired content already lives in PostgreSQL, enabling users to add semantic search without introducing a separate vector database.

The strength of pgvector lies in its simplicity and accessibility. It's free and open source, and if a user is already comfortable with PostgreSQL, it doesn't require learning new tools. Plus, many generative AI frameworks like LlamaIndex, Semantic Kernel and LangChain support it, which can ease integration needs.
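To give a sense of how little is needed, the sketch below holds the SQL for a pgvector-backed table and a nearest-neighbor query as Python strings. The table name `doc_chunks`, its columns and the three-dimensional vectors are hypothetical, and the `%s` placeholders assume a psycopg-style driver. Nothing here connects to a database; the strings would be passed to whichever PostgreSQL client an application already uses.

```python
# Enables the extension and creates a table with a vector column.
# The dimension in vector(3) must match the embedding model's output size.
CREATE_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS doc_chunks (
    id bigserial PRIMARY KEY,
    content text NOT NULL,
    embedding vector(3)
);
"""

# <=> is pgvector's cosine distance operator; smaller means more similar.
SEARCH_SQL = """
SELECT id, content
FROM doc_chunks
ORDER BY embedding <=> %s::vector
LIMIT %s;
"""


def to_vector_literal(values) -> str:
    """Format numbers as pgvector's text form, for example [0.1,0.2,0.3]."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


# Parameters for SEARCH_SQL: the query embedding and the number of rows wanted.
params = (to_vector_literal([0.1, 0.2, 0.3]), 5)
print(params)  # ('[0.1,0.2,0.3]', 5)
```

Because the embeddings sit in an ordinary table, the same query can be combined with regular SQL filters and joins on existing business data.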

2. Azure AI Search

Azure AI Search is a PaaS offering from Microsoft Azure that provides search capabilities built on search indexes rather than on relational tables, as PostgreSQL with pgvector does. It's designed for full-text and semantic search.

The service integrates deeply with the Azure ecosystem and offers native support for embedding models from Azure OpenAI. It can automatically vectorize content, simplifying the process of building RAG systems. For teams already working in Azure, AI Search offers a scalable and managed tool for adding vector search without managing infrastructure or separate components.

3. GraphRAG

GraphRAG is a Microsoft RAG framework that incorporates knowledge graphs into the retrieval process. Traditional RAG systems retrieve semantically close chunks of information, but can miss related information that is not present in those chunks.

Knowledge graphs expand the search context using neighboring nodes and relations, which can improve relevance without overwhelming the model with long context. Consider a question such as "Which companies acquired firms involved in AI in the past two years?" This is a compositional query: answering it means combining several facts, such as who acquired whom, what each acquired firm does and when the deal took place. A traditional RAG system struggles with such a query because those facts might sit in different chunks of data.

With a knowledge graph, it is much easier to understand relationships between different entities and answer these compositional queries. GraphRAG combines the speed of traditional RAG with the accuracy of knowledge graphs, making it a helpful framework for developing complex RAG systems.
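The toy example below shows why a graph makes the acquisition question tractable. It is not GraphRAG's API or data model; the triples, company names and function name are invented for illustration, and the "past two years" filter is left out to keep the sketch short.

```python
# Hypothetical (subject, relation, object) triples; none describe real companies.
TRIPLES = [
    ("AcmeCorp", "acquired", "VisionLabs"),
    ("AcmeCorp", "acquired", "PaperWorks"),
    ("Globex", "acquired", "SpeechAI"),
    ("Initech", "acquired", "BrickCo"),
    ("VisionLabs", "works_in", "AI"),
    ("SpeechAI", "works_in", "AI"),
    ("PaperWorks", "works_in", "Stationery"),
    ("BrickCo", "works_in", "Construction"),
]


def acquirers_of_firms_in(field: str, triples) -> list[str]:
    """Answer a two-hop question: who acquired a firm that works in `field`?"""
    # Hop 1: find every firm linked to the field.
    firms_in_field = {s for s, r, o in triples if r == "works_in" and o == field}
    # Hop 2: find every company with an "acquired" edge into one of those firms.
    return sorted({s for s, r, o in triples if r == "acquired" and o in firms_in_field})


print(acquirers_of_firms_in("AI", TRIPLES))  # ['AcmeCorp', 'Globex']
```

The two facts needed for each answer can come from entirely different documents. Once both are stored as edges, following them is a simple traversal rather than a search for one chunk that happens to contain everything.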

4. LightRAG

LightRAG, like GraphRAG, is a framework that combines knowledge graphs with hybrid search capabilities, which makes it well suited to understanding relationships between entities and answering compositional questions.

LightRAG is open source and supports multimodal data handling through MinerU integration, an open source tool for document parsing. This enables RAG capabilities and document parsing across formats such as images, Office documents, PDFs and tables. It also has a separate component called VideoRAG to handle video content.

Another advantage of LightRAG is that content is easier to update than in GraphRAG, which requires users to rebuild the entire graph. That rebuild takes substantial time, especially when there is a lot of content.
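The general idea of updating a graph in place, rather than rebuilding it, can be sketched as a set union of new edges with existing ones. This is only a conceptual illustration under that assumption, not LightRAG's actual implementation; the `TinyGraph` class and the sample triples are hypothetical.

```python
class TinyGraph:
    """A minimal edge-set graph that supports incremental merges."""

    def __init__(self) -> None:
        self.edges: set[tuple[str, str, str]] = set()

    def add_triples(self, triples) -> int:
        """Merge triples into the graph and return how many were new."""
        new_edges = set(triples) - self.edges
        self.edges |= new_edges  # existing edges are left untouched
        return len(new_edges)

    def neighbors(self, node: str) -> list[str]:
        """List the nodes reachable from `node` by one outgoing edge."""
        return sorted(o for s, _, o in self.edges if s == node)


graph = TinyGraph()
graph.add_triples([("Wiki", "mentions", "Invoices"), ("Wiki", "mentions", "Payroll")])

# A new document arrives: only the edge not seen before is added.
added = graph.add_triples([("Wiki", "mentions", "Payroll"), ("Wiki", "mentions", "Travel")])
print(added)  # 1
print(graph.neighbors("Wiki"))  # ['Invoices', 'Payroll', 'Travel']
```

The cost of an update in this model depends on the size of the new document, not on the size of the graph already built.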

5. TxtAI

TxtAI is slightly different from the other RAG tools and frameworks listed above. While, for instance, PostgreSQL with pgvector and Azure AI Search primarily serve as the vector store in a RAG system, txtAI is a more complete framework that provides both a vector database and tooling for semantic search. The open source tool also includes support for knowledge graphs, pipelines and the ability to build custom AI agents.

It can be seen as an alternative to LangChain, but unlike LangChain, txtAI also provides a native vector database.

How to choose the right RAG tool

When building a custom generative AI service that can access and use company data, organizations must first define their use case before choosing which tool or framework to use. The RAG system's architecture and use case needs will dictate what tool is the best fit.

For instance, for an e-commerce website that stores much of its content in a relational database management system (RDBMS) such as PostgreSQL, pgvector is a natural choice because it adds vector search to the database where the content already lives.

A framework like GraphRAG or LightRAG that works with knowledge graphs is a better choice for use cases that demand a deep understanding of the relationship between different chunks of data to answer more complex questions.

And, if an organization is simply looking for optimal RAG performance when using business data, a traditional search service like Azure AI Search is often the best choice.

These are just a few of the available frameworks -- cloud providers have many different search and vector stores. There are also numerous open source alternatives to consider.

Marius Sandbu is a cloud evangelist for Sopra Steria in Norway who mainly focuses on end-user computing and cloud-native technology.