AI risk management puts ML code, data science in context

Navrina Singh, CEO of Credo AI, discusses the limits of MLOps and algorithmic auditing in providing governance for responsible AI.

The rapid growth of AI has brought increased awareness that companies must get a handle on the legal and ethical risks AI presents, such as racially biased algorithms used in hiring, mortgage underwriting and law enforcement. It's a software problem that calls for a software solution, but the market for AI risk management tools and services is fledgling and highly fragmented.

Algorithmic auditing, a process for verifying that decision-making algorithms produce the expected outcomes without violating legal or ethical parameters, shows promise, but there's no standard for what audits should examine and report. Machine learning operations (MLOps) brings efficiency and discipline to software development and deployment, but it generally doesn't capture governance, risk and compliance (GRC) issues.

What's needed, said Navrina Singh, founder and CEO of Credo AI, is software that ties together an organization's responsible AI efforts by translating developer outputs into language and analytics that GRC managers can trust and understand.

Credo AI is a two-year-old startup that makes such software for standardizing AI governance across an organization. In the podcast, Singh explained what it does, how it differs from MLOps tools and what's being done to create standards for algorithmic auditing and other responsible AI methods.

Responsible AI risk management

Before starting Credo AI in 2020, Singh was a director of product development at Microsoft, where she led a team focused on the user experience of a new SaaS offering for enterprise chatbots. Before that, she held engineering and business development roles at Qualcomm, ultimately heading its global innovation program. She's active in promoting responsible AI as a member of the U.S. government's National AI Advisory Committee (NAIAC) and the Mozilla Foundation board of directors.

One of the biggest challenges in AI risk management is how to make the software products and MLOps reports of data scientists and other technical people understandable to non-technical users.

Navrina Singh

Emerging MLOps tools have an important role but don't handle the auditing stage, according to Singh. "What they do really well is looking at technical assets and technical measurements of machine learning systems and making those outputs available for data science and machine learning folks to take action," she said. "Visibility into those results is not auditing."

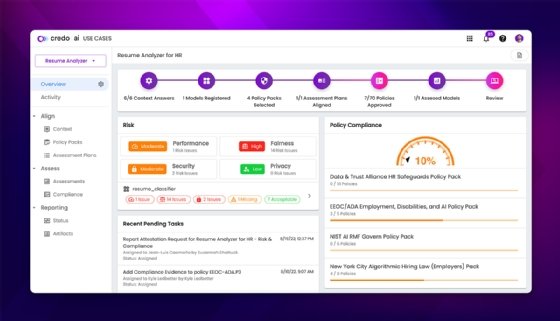

Credo AI tries to bridge the gap by translating such technical "artifacts" into risk and compliance scores that it then turns into dashboards and audit trails tailored to different stakeholders.

A repository of "trusted governance" includes the artifacts created by the data science and machine learning teams, such as test results, data sources, models and their output. The repository also includes non-technical information, such as who reviewed the systems, where the systems rank in the organization's risk hierarchy, and relevant compliance policies.
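As a rough illustration of the kind of record such a repository might hold, the sketch below pairs technical artifacts with the non-technical context described above and flattens them into a plain-language audit trail. It is a minimal sketch under stated assumptions, not Credo AI's actual data model: the class names, field names and `audit_trail` method are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """A technical output from the data science or ML team (hypothetical shape)."""
    kind: str   # e.g. "test_result", "data_source", "model", "model_output"
    name: str
    details: dict = field(default_factory=dict)


@dataclass
class GovernanceRecord:
    """Evidence for one AI system: technical artifacts plus non-technical context.

    All field names are assumptions made for illustration.
    """
    system: str
    risk_tier: str                                    # rank in the organization's risk hierarchy
    reviewers: list[str] = field(default_factory=list)
    policies: list[str] = field(default_factory=list)  # relevant compliance policies
    artifacts: list[Artifact] = field(default_factory=list)

    def audit_trail(self) -> list[str]:
        """Flatten the record into plain-language lines for a non-technical reader."""
        lines = [f"{self.system}: risk tier {self.risk_tier}"]
        lines += [f"reviewed by {r}" for r in self.reviewers]
        lines += [f"subject to policy: {p}" for p in self.policies]
        lines += [f"evidence ({a.kind}): {a.name}" for a in self.artifacts]
        return lines
```

A record built this way keeps who reviewed a system and which policies apply alongside the test results and models themselves, so a single lookup can serve both a data scientist and a compliance manager.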

"Our belief is that if you're able to create this comprehensive governance repository of all the evidence, then for different stakeholders, whether they're internal or external, they can be held to higher accountability standards," Singh said.

Customers include a Fortune 50 financial services provider that uses AI in fraud detection, risk scoring and marketing applications. Credo AI has been working with the company for two and a half years to optimize governance and create an overview of its risk profile. Government agencies have used the tool to govern their use of AI in hiring, chatbots for internal operations and object-detection apps to help soldiers in the field.

New York state of mind

In January, a new law goes into effect in New York City that prohibits employers from using automated employment decision tools unless the tools have undergone an annual audit for race, ethnicity and gender bias. The law also requires employers to post a summary of the bias audit on their websites.

Singh said much of Credo AI's recent business has come from companies scrambling to comply with the New York regulations, which she called a good local law. "It unfortunately failed to define what an audit is," she said.

The lack of standards or even generally accepted best practices for algorithmic auditing is widely viewed as the Achilles' heel of AI governance. The need will only grow as more local and national laws on responsible AI go into effect.

Singh said what many companies did in the past year was just a review of their AI process, not a true audit. "I think we need to get very clear on language because audits really need to happen against standards," she said.

Some promising AI governance standards that have auditing in their purview are in the works, according to Singh. One is the risk management framework that the National Institute of Standards and Technology, which administers NAIAC, is actively working on. The European Union's Artificial Intelligence Act is also taking a risk-based approach, she said. "The UK, on the other hand, has taken a very context-centric, application-centric approach."

Why is it so hard to come up with standards?

"It's hard because it's so contextual," Singh said. "You cannot have a peanut butter approach to regulating artificial intelligence. I would say there's going to be a couple more years before we see harmonization of regulations or emergence of holistic standards. I truly believe it's going to be sector specific, use-case specific."
