What is Docker?

Docker is an open source software platform used to create, deploy and manage applications in virtualized environments called containers. These containers are lightweight, portable and self-sufficient packages that include everything an application needs to run, such as code, libraries, runtime and system tools. By encapsulating the application and its dependencies, containers help it behave consistently across different environments.

Docker container technology debuted in 2013. At that time, Docker Inc. was formed to support a commercial edition of container management software and be the principal sponsor of an open source version. Mirantis acquired the Docker Enterprise business in November 2019.

Docker gives software developers a faster and more efficient way to build and test containerized portions of an overall software application. This lets developers in a team concurrently build multiple pieces of software. Each container includes all elements needed to create a software component and ensure it's built, tested and deployed smoothly. Docker enables portability so these packaged containers can be moved to different servers or development environments.

How Docker works

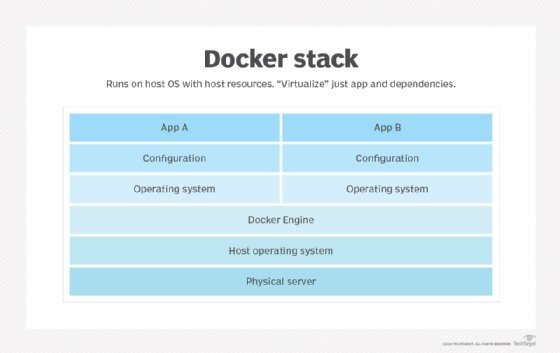

Docker packages, provisions and runs containers. Container technology is available through the operating system (OS): A container packages the application service or function with all the libraries, configuration files, dependencies, and other parts and parameters needed to operate. Each container shares the services of one underlying OS. Docker images contain all the dependencies needed to execute code inside a container, so containers that move between Docker environments with the same OS work with no changes.
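The packaging step described above is usually expressed as a Dockerfile. The following is a minimal sketch in Python that renders one for a hypothetical Python service. The base image `python:3.12-slim`, the file names `requirements.txt` and `app.py`, and the function name `render_dockerfile` are assumptions chosen for illustration, not part of any Docker tooling.

```python
import json


def render_dockerfile(base_image: str, dependency_file: str,
                      start_command: list[str]) -> str:
    """Return the text of a minimal Dockerfile for a hypothetical service."""
    lines = [
        f"FROM {base_image}",                       # OS userland and runtime
        "WORKDIR /app",
        f"COPY {dependency_file} .",                # dependency list first
        f"RUN pip install --no-cache-dir -r {dependency_file}",
        "COPY . .",                                 # application code
        f"CMD {json.dumps(start_command)}",         # exec-form start command
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("python:3.12-slim", "requirements.txt",
                            ["python", "app.py"]))
```

Saving the printed text as `Dockerfile` and running `docker build` in the same directory would produce an image that carries the runtime, the libraries and the code together, which is what lets the resulting container move between Docker hosts unchanged.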

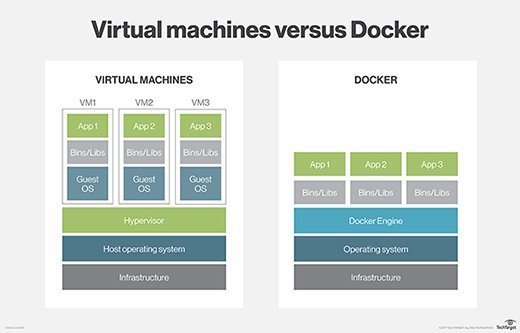

Docker uses resource isolation in the OS kernel to run multiple containers on the same OS. This is different from virtual machines (VMs), which encapsulate an entire OS with executable code on top of an abstracted layer of physical hardware resources.
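On Linux, this kernel-level isolation relies largely on namespaces and control groups. As a rough illustration, the sketch below lists the namespaces the current process belongs to by reading `/proc/<pid>/ns`. It assumes a Linux host and returns an empty result elsewhere; the function name `current_namespaces` is made up for this example.

```python
import os


def current_namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type (pid, net, mnt, ...) to its kernel identifier."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or no such process
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target names the namespace.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable for this process
    return namespaces


if __name__ == "__main__":
    for name, identifier in current_namespaces().items():
        print(f"{name:12s} {identifier}")
```

Running the script on the host and again inside a container typically shows different identifiers for most namespace types, even though both processes are served by the same kernel.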

Docker was created to work on the Linux platform. It was extended to offer greater support for non-Linux OSes, including Microsoft Windows and Apple macOS. Versions of Docker for Amazon Web Services (AWS), Google Cloud and Microsoft Azure are available.

Why is Docker so popular?

Docker has gained popularity due to its ability to streamline operations and transform software development and deployment. Here are some key reasons why Docker has gained widespread adoption:

- Ease of use. Docker makes it easy to use containers to create, deploy and manage applications. Its simple interface and easy-to-use command-line tools help developers learn and use Docker quickly in their workflows.

- Portability. Docker containers package an application along with its dependencies, ensuring consistent operation across various environments. Docker containers can run on any system that supports Docker, whether it's a developer's laptop, a test server or a cloud environment, such as AWS, Google Cloud or Azure. This portability lets developers create applications on their local machines and easily deploy them to production, eliminating concerns about environmental discrepancies.

- Lightweight and efficient. Docker containers are lightweight when compared to traditional VMs, as they share the host OS kernel. This leads to better resource use, enabling more containers to run on a single host, which is especially beneficial in cloud environments.

- Rapid development and deployment. Docker enables continuous integration/continuous delivery (CI/CD) practices, promoting faster development cycles. This lets developers quickly build, test and deploy applications, significantly reducing time to market.

- Community and ecosystem. Docker has a large and active community that contributes to a rich ecosystem of tools, libraries and resources. Community support makes it easier for developers to find answers to problems, share best practices and collaborate on projects.

- Integration with DevOps practices. Docker is well suited for modern DevOps practices as it fosters collaboration between development and operations teams. It also supports automation and orchestration tools, such as Kubernetes, which enhance its ability to manage containerized applications at scale.

Key use cases for Docker

While it's technically possible to use Docker for developing and deploying any kind of software application, it's most useful to accomplish the following:

- Continuously deploying software. Docker technology and strong DevOps practices make it possible to deploy containerized applications in a few seconds. This capability is unlike traditional, bulky, monolithic applications that take much longer. Updates or changes made to an application's code are executed and deployed quickly when using containers that are part of a larger CI/CD pipeline.

- Building a microservices-based architecture. When a microservices-based architecture is more advantageous than a traditional, monolithic application, Docker is ideal for the process of building out this architecture. Developers build and deploy multiple microservices, each inside their own container. Then, they integrate them to assemble a full software application with the help of a container orchestration tool, such as Docker Swarm.

- Migrating legacy applications to a containerized infrastructure. A development team wanting to modernize a preexisting legacy software application can use Docker to shift the app to a containerized infrastructure.

- Enabling hybrid cloud and multi-cloud applications. Docker containers operate the same way whether deployed on-premises or using cloud computing technology. Therefore, Docker lets applications be easily moved to various cloud vendors' production and testing environments. A Docker app that uses multiple cloud offerings can be considered hybrid cloud or multi-cloud.

- Jump-starting machine learning and data science. Docker is increasingly used in AI and machine learning (ML) projects. By packaging code, libraries and dependencies into containers, it provides a consistent environment for running experiments, training models and deploying applications, which is crucial for reproducibility in data science workflows.

- Performing testing and quality assurance (QA). Docker provides advantages for automated testing, letting teams quickly provision and dismantle isolated test environments. This capability ensures that tests are run reliably in clean states without the need for manual intervention. Docker's lightweight containers also start up fast, enabling the efficient execution of parallel tests, which accelerates the overall testing process.
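The provision-and-dismantle pattern in the testing use case can be sketched as a Python context manager that starts a container before a test and removes it afterward, even if the test fails. This is an illustrative sketch, not a recommended test harness: the names `throwaway_container` and `docker_cli` are hypothetical, and it assumes the `docker` CLI is installed when the default runner is used.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, Sequence

Runner = Callable[[Sequence[str]], None]


def docker_cli(args: Sequence[str]) -> None:
    """Default runner: invoke the docker CLI and fail on a nonzero exit."""
    subprocess.run(["docker", *args], check=True)


@contextmanager
def throwaway_container(image: str, name: str,
                        run: Runner = docker_cli) -> Iterator[str]:
    """Start a container for the duration of a test, then remove it."""
    run(["run", "--detach", "--name", name, image])
    try:
        yield name
    finally:
        # Always clean up so the next test starts from a clean state.
        run(["rm", "--force", name])
```

A test might wrap its body in `with throwaway_container("redis:7", "test-cache"):`. Passing a different `run` callable makes it possible to exercise the logic without a Docker daemon.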

Docker architecture: Components and tools

Docker Community Edition is open source, while Docker Enterprise Edition is a commercialized version that Docker Inc. originally offered and Mirantis acquired in November 2019. Docker consists of various components and tools that help create, verify and manage containers.

Main components and tools in the Docker architecture include the following:

- Content Trust. This security tool uses digital signatures to verify the integrity and publisher of images pulled from remote Docker registries, with signatures tied to specific image tags.

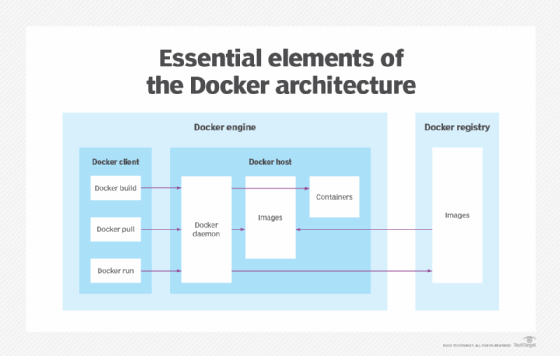

- Daemon. The Docker daemon is the server-side process that builds, runs and manages containers. Users don't work with it directly; the Docker client sends it requests through the Docker application programming interface (API). Two kinds of files drive much of its work. Dockerfiles are text files that contain the instructions for building a Docker image, and Docker Compose files define the services that make up a multicontainer application.

- Docker Build Cloud. This tool uses cloud infrastructure to enhance and speed up the build process. It integrates with CI/CD tools, such as GitHub Actions, to facilitate streamlined workflows for building and deploying applications.

- Docker client. The Docker client is the primary interface for users to interact with Docker. It lets users issue Docker commands to the Docker daemon using a CLI or through a graphical user interface (GUI). The client communicates with the daemon to manage containers, images and networks.

- Docker Compose. This tool is used to configure multicontainer application services, view container statuses, stream log output and run single-instance processes.

- Docker container. A container is a runnable instance of a Docker image. It encapsulates the application and its dependencies, providing an isolated environment for execution.

- Docker Desktop. Docker Desktop provides an easy-to-use interface for managing Docker containers on local machines. It includes tools for building, running and sharing containerized applications, making it a great choice for developers working in their local environments.

- Docker Engine. Docker Engine is the underlying technology that handles the tasks and workflows involved in building container-based applications. The engine creates a server-side daemon process that hosts images, containers, APIs, networks and storage volumes.

- Docker Hub. This software-as-a-service tool lets users publish and share container-based applications through a common library. The service has more than 100,000 publicly available applications, as well as public and private container registries.

- Docker image. A Docker image serves as a blueprint for a container. An image is a lightweight, standalone and executable package that includes everything needed to run a piece of software, including the code, runtime, libraries and environment variables. Images are built from a set of instructions defined in a Dockerfile.

- Docker Machine. Docker Machine is a tool used for provisioning and managing Docker hosts on various cloud platforms or local hypervisors, such as Oracle VirtualBox and VMware. It automates the process of creating and configuring Docker hosts on local or remote systems. Docker has since deprecated the tool.

- Docker Swarm. This tool is part of Docker Engine and supports cluster load balancing for Docker. Multiple Docker host resources are pooled together in Swarm to act as one, which lets users quickly scale container deployments to multiple hosts.

- Docker Trusted Registry (DTR). This is a repository that's similar to Docker Hub but with an extra layer of control and ownership over container image storage and distribution.

- Universal Control Plane (UCP). This is a web-based, unified cluster and application management interface.
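To make the client-daemon relationship concrete, here is a minimal sketch that does what the Docker client does under the hood: it sends an HTTP request to the daemon's API. It uses only the Python standard library and assumes the daemon listens on the common default Unix socket `/var/run/docker.sock`; the class and function names are hypothetical, and the function returns `None` when no daemon is reachable.

```python
import http.client
import json
import os
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default socket path


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path: str = DOCKER_SOCKET):
    """Ask the daemon for its version info; return None if unreachable."""
    if not os.path.exists(socket_path):
        return None
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        return None  # permission denied, daemon stopped, or bad reply
    finally:
        conn.close()


if __name__ == "__main__":
    info = daemon_version()
    print(info.get("Version") if info else "no Docker daemon reachable")
```

The `docker version` command reports the same server information, which shows that the CLI is one client among many possible ones for the same API.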

Docker advantages and disadvantages

Docker emerged as a de facto standard platform to quickly compose, create, deploy, scale and oversee containers across Docker hosts. In addition to efficient containerized application development, other benefits of Docker include the following:

- A high degree of portability so users can publish images to a registry, share them and run the resulting containers on various hosts.

- Lower resource use.

- Faster deployment compared to VMs.

- Version control for Docker images, making it easy to manage different versions of an application and roll back to previous versions if needed.

There are also potential challenges with Docker, such as the following:

- The number of containers possible in an enterprise can be difficult to manage efficiently.

- Container use is evolving from granular virtual hosting to orchestration of application components and resources. As a result, the distribution and interconnection of componentized applications are becoming major hurdles.

- Since containers share the host OS kernel, poorly managed containers can lead to security issues and vulnerabilities.

- Docker containers introduce some performance overhead due to factors such as virtualization, networking and storage drivers. While this overhead isn't that noticeable, it can be an issue in resource-intensive applications.

- Docker Engine primarily operates as a command-line tool, whereas Docker Desktop provides a GUI. However, managing large-scale deployments often relies on the command line or other management tools. This reliance on command-line syntax can be challenging for users who are more accustomed to graphical interfaces.

- As the Docker ecosystem evolves rapidly, documentation might lag behind the latest features and changes, which can potentially confuse users.

- Adopting Docker introduces a learning curve for teams unfamiliar with containerization concepts and tools, potentially requiring training and adjustment periods.

Docker security

A historically persistent issue with containers -- and Docker, by extension -- is security. Despite excellent logical isolation, containers still share the host's OS kernel. An attack or flaw in the underlying OS can potentially compromise all the containers running on top of the OS. Vulnerabilities can involve access and authorization, container images and network traffic among containers. Processes in Docker containers also run as root by default, and this setting is often carried over unchanged from third-party vendors' images.

Docker has regularly added security enhancements to the platform, such as image scanning, secure node introduction, cryptographic node identity, cluster segmentation and secure secret distribution. Secrets management and security auditing are also available outside Docker through tools such as Kubernetes, CISOfy Lynis, D2iQ and HashiCorp Vault. Various container security scanning tools have emerged from Aqua Security, SUSE Security and others.

Here are some best practices that can be used to improve Docker security:

- Running containers within a VM. Some organizations run containers within a VM, although containers don't require VMs. This doesn't solve the shared resource problem vector, but it does mitigate the potential effect of a security flaw.

- Using low-profile VMs. Another alternative is to use low-profile or micro VMs, which don't require the same overhead as a regular VM. Examples are Firecracker and Kata Containers; gVisor takes a related approach by placing a user-space kernel between the container and the host. Above all, the most common and recommended step to ensure container security is to not expose container hosts to the internet and only use container images from known sources.

- Using official and trusted images. It's important to always pull images from reputable sources, such as Docker Hub's official repositories, to minimize the risk of vulnerabilities.

- Avoiding root permissions. Running Docker containers as root simplifies initial setup by bypassing complex permission management, but it's a significant security risk with few valid reasons in production. Because a container's processes run as root by default unless the image or the run command specifies another user, organizations should explicitly configure containers to run as a nonroot user.

- Monitoring container log activities. Organizations should continuously monitor container activities and maintain logs to detect anomalies and respond to security incidents promptly.
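Several of these practices map to options on `docker run`. The sketch below assembles such a command in Python: it sets a nonroot user, makes the root file system read-only, drops Linux capabilities and blocks privilege escalation. The function name `hardened_run_args` and the default user and group ID of 1000 are assumptions for illustration, and the right set of options depends on what the application needs.

```python
def hardened_run_args(image: str, uid: int = 1000, gid: int = 1000) -> list[str]:
    """Build a `docker run` command line with restrictive defaults."""
    return [
        "docker", "run", "--rm",
        "--user", f"{uid}:{gid}",                  # run as a nonroot user
        "--read-only",                             # read-only root file system
        "--cap-drop", "ALL",                       # drop all Linux capabilities
        "--security-opt", "no-new-privileges",     # block privilege escalation
        image,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so no daemon is required.
    print(" ".join(hardened_run_args("nginx:stable")))
```

Some images need a writable path or a specific capability to start, so a command like this is a starting point to loosen deliberately rather than a universal setting.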

Docker alternatives, ecosystem and standardization

Over the years, Docker has become a foundational tool in containerization, but it's far from the only option available. The broader container ecosystem encompasses a range of third-party tools, competing platforms and open standards that have shaped the development, deployment and management of modern applications.

The following is an overview of key alternatives to Docker and the standardization initiatives that have emerged to promote compatibility and consistency across the container landscape:

- Docker alternatives. There are third-party tools that work with Docker for tasks such as container management and clustering. The Docker ecosystem includes a mix of open source projects and proprietary technologies, such as open source Kubernetes, Red Hat's proprietary OpenShift packaging of Kubernetes and Canonical's distribution of Kubernetes, referred to as pure upstream Kubernetes.

- Docker's competition with other container platforms. Docker competes with proprietary application containers, such as VMware vApp, and infrastructure abstraction tools, including Chef. Docker isn't the only container platform available, but it's still the biggest name in the container marketplace. Besides Docker, other proprietary and open source container platforms include Podman, containerd and nerdctl, Linux Containers, runc and Rancher Desktop.

- Role in container standardization. Docker also played a leading role in an initiative to more formally standardize container packaging and distribution named the Open Container Initiative, which was established to foster a common container format and runtime environment. More than 40 container industry providers are members of the Open Container Initiative, including AWS, Intel and Red Hat.
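One concrete piece of that common format is the way image content is referenced. In the Open Container Initiative image specification, each blob, such as a layer, is described by its media type, a content digest and its size in bytes. The following is a simplified sketch of building such a descriptor; the function name `describe_blob` is hypothetical, and real tools add more fields and validation.

```python
import hashlib

# Media type the OCI image specification uses for a gzip-compressed layer.
LAYER_MEDIA_TYPE = "application/vnd.oci.image.layer.v1.tar+gzip"


def describe_blob(blob: bytes, media_type: str = LAYER_MEDIA_TYPE) -> dict:
    """Return a descriptor that identifies a blob by its content."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


if __name__ == "__main__":
    print(describe_blob(b"example layer bytes"))
```

Because the digest is derived from the content itself, any compliant registry or runtime can verify that the bytes it received are the bytes the image refers to, regardless of which vendor's tool produced them.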

Docker company history

Docker has rapidly evolved from a platform-as-a-service component to a key technology in software development and deployment, focusing on providing developers with comprehensive tools and secure workflows. The following is a timeline of major events in Docker's history:

- March 2013. Docker was first released as an open source platform by the company then known as dotCloud, which later renamed itself Docker Inc. Docker Engine 1.0 launched in 2014. In 2016, Docker integrated its Swarm orchestration with Docker Engine in version 1.12. Docker's broader goal was to build up its business with containers as a service, but eventually, these plans were overtaken by the rise of Kubernetes.

- March 2017. Docker Enterprise was introduced. The company also donated its containerd container runtime utility to the Cloud Native Computing Foundation that year.

- November 2019. Mirantis acquired Docker products and intellectual property around Docker Engine. The acquisition included Enterprise, DTR, UCP and Docker CLI, as well as the commercial Docker Swarm product. Mirantis initially indicated it would shift its focus to Kubernetes and eventually end support for Docker Swarm, but it later reaffirmed its intent to support and develop new features for the platform. The remaining Docker Inc. company now focuses on Docker Desktop and Docker Hub.

- January 2022. Docker Desktop stopped being available for free use for enterprises. Enterprise use of Desktop requires a paid subscription plan. However, a free version is available to individuals and small businesses.

- October 2023. Docker partnered with LangChain, Neo4j and Ollama to release GenAI Stack. This let developers deploy a comprehensive generative AI (GenAI) setup with minimal effort, streamlining AI application development.

- January 2024. Docker introduced Docker Build Cloud, a cloud-based build service that accelerates image builds by as much as 39 times. It integrates seamlessly with Docker Desktop and supports native multiarchitecture builds, enhancing CI/CD workflows.

- February 2025. Don Johnson was appointed CEO of Docker, succeeding Scott Johnston, to lead the company's next phase of innovation and growth, with a strong focus on developer workflows, including AI/ML integration.

Meredith Courtemanche contributed to an earlier version of this definition.