GAN vs. transformer models: Comparing architectures and uses

Discover the differences between generative adversarial networks and transformers, plus how the two techniques might combine in the future to provide users with better results.

Generative adversarial networks, or GANs, hold considerable promise for generating media, such as realistic images and voices, video and 3D shapes, as well as drug molecules. They can also be used to upscale images to higher resolution, apply a new style to an existing image and optimize layouts in architecture. They were one of the most popular GenAI techniques until transformers were introduced a few years ago.

Transformers are a foundational technology underpinning many advances in large language models (LLMs), such as generative pre-trained transformers (GPTs). They're now expanding into multimodal AI applications, such as large vision models capable of correlating content as diverse as text, images, audio and robot instructions across numerous media types more efficiently than techniques like GANs can.

GANs and transformers can also be combined in various ways to generate content from a prompt, target adjustments to existing content or interpret content.

Let's explore the beginnings of each technique, their use cases and how researchers are combining the two techniques into various transformer-GAN combinations.

GAN architecture explained

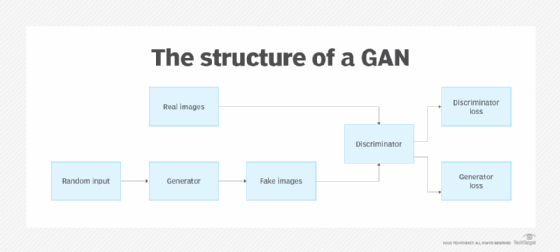

GANs were introduced in 2014 by Ian Goodfellow and associates to generate realistic-looking handwritten digits and faces. They combine the following two neural networks:

- A generator, which in image applications is typically a deconvolutional (transposed-convolution) neural network that creates content from random noise, optionally conditioned on a text or image prompt.

- A discriminator, typically a convolutional neural network (CNN) that identifies authentic vs. counterfeit images.

Before GANs, computer vision was mainly done with CNNs that captured lower-level features of an image, such as edges and color, and higher-level features representing entire objects, said Adrian Zidaritz, founder of the nonprofit organization Stronger Democracy through Artificial Intelligence. The novelty of the GAN architecture resulted from its adversarial approach in which one neural network proposes generated images, while the other one vetoes them if they don't come close to the authentic images from a given data set.
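
The adversarial setup described above can be written down as two opposing loss functions. The following is a minimal sketch in plain Python, assuming the discriminator outputs a probability that a sample is authentic. The function names and the sample probabilities are hypothetical, and the generator loss uses the commonly taught non-saturating variant rather than anything specified in this article.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities for authentic samples (ideally near 1).
    d_fake: discriminator probabilities for generated samples (ideally near 0).
    """
    real_term = sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: low when fakes are scored as authentic."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs for a batch of two real and two fake images.
confident = discriminator_loss([0.9, 0.8], [0.1, 0.2])
undecided = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(confident < undecided)  # a discriminator that separates real from fake has lower loss
print(generator_loss([0.9]) < generator_loss([0.1]))  # the generator wins when fakes pass
```

The two losses pull in opposite directions: whatever makes generated samples score higher lowers the generator's loss and raises the discriminator's, which is the "propose and veto" dynamic in numeric form.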

Today, researchers are exploring ways to build the generator and discriminator from other neural network models, including transformers.

Transformer architecture explained

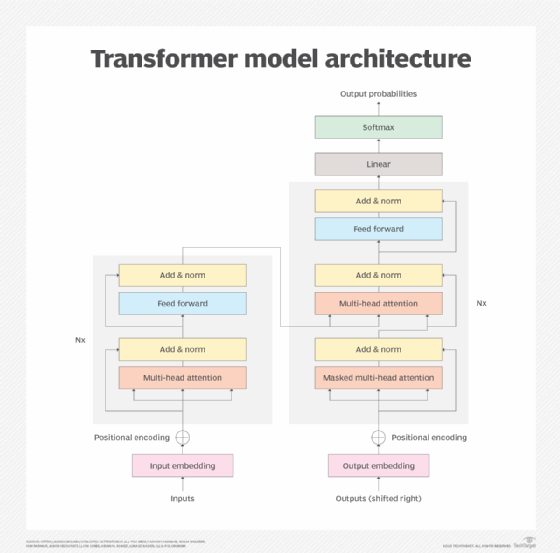

Transformers were introduced in 2017 by a team of Google researchers who were looking to build a more efficient translator. In a paper entitled "Attention Is All You Need," the researchers laid out a new technique to discern the meaning of words based on how they relate to other words in phrases, sentences and essays.

Earlier tools to interpret text frequently used one neural network to translate words into vectors using a previously constructed dictionary alongside another neural network to process a sequence of text, such as a recurrent neural network (RNN). In contrast, transformers essentially learn to interpret the meaning of words directly from processing large bodies of unlabeled text. The same approach can also be used to identify patterns in other kinds of data, such as protein sequences, chemical structures, computer code and IoT data streams. This lets researchers scale the LLMs driving recent advances -- and publicity -- in the field. Transformers can also find relationships between words that are far apart, which was impractical with RNNs.
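
The attention mechanism behind this can be illustrated with a small sketch of scaled dot-product self-attention. It is a simplified illustration in plain Python, not a full transformer layer: it assumes the queries, keys and values are the token vectors themselves, with no learned projections, no multiple heads and no positional encoding, and the toy vectors are hypothetical.

```python
import math


def softmax(row):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (a simplification).

    x: list of token vectors, all of the same dimension.
    Returns (outputs, weights), where weights[i][j] is how much token i attends to token j.
    """
    d = len(x[0])
    weights = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[j] for w, v in zip(w_row, x)) for j in range(d)]
        for w_row in weights
    ]
    return outputs, weights


# Three toy "tokens"; the first and third are identical but not adjacent.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
print(weights[0])
```

In this toy input, the first token gives the third token the same weight it gives itself, even though another token sits between them. The score depends only on how similar two vectors are, not on how far apart they sit in the sequence, which is why long-range relationships are tractable.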

Small snippets of an image can also be defined by the context of the entire image in which they appear, Zidaritz said. The idea of self-attention in natural language processing (NLP) becomes self-similarity in computer vision.
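
One common way to apply this idea in vision models is to cut an image into small patches and treat each patch like a token, so attention can relate any patch to any other. The sketch below shows only that splitting step, under the assumption of a single-channel image stored as a list of rows. The function name and the 4x4 example image are hypothetical.

```python
def image_to_patches(image, patch):
    """Split a 2D grid into flattened patch x patch tiles, in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [
                image[r][c]
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            ]
            patches.append(tile)
    return patches


# A 4x4 "image" whose pixel values are simply 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, 2)
print(len(patches))  # four 2x2 patches
print(patches[0])    # top-left patch, flattened
```

Each flattened patch can then play the role that a word vector plays in text, with attention comparing every patch against the rest of the image.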

Transformers also play an essential role in the development of multimodal AI that combines multiple modalities of data, including text, audio, video and sensor data. In these cases, the attention mechanism can find relationships and connections across multiple modalities of data.

GAN vs. transformer: Best use cases for each model

GANs are more flexible in their potential range of applications, according to Richard Searle, chief AI officer at data security platform vendor Fortanix. They're also useful where imbalanced data, such as a small number of positive cases compared to the volume of negative instances, can lead to numerous false-positive classifications. As a result, adversarial learning has shown promise in use cases where there's limited training data for discrimination tasks or in fraud detection where only a small number of transactions might represent fraud compared to more common ones. In a fraud scenario, for example, hackers constantly introduce new inputs to fool fraud detection algorithms. GANs tend to be better at adapting to and protecting against these kinds of techniques.
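
The false-positive problem with imbalanced data can be made concrete with some quick arithmetic. The figures below are hypothetical and chosen only for illustration: 10,000 transactions, 1% of them fraudulent, and a detector that catches 95% of fraud while wrongly flagging 5% of legitimate transactions.

```python
def precision_under_imbalance(n_total, positive_rate, tpr, fpr):
    """Return (true positives, false positives, precision) for a given class balance.

    positive_rate: fraction of all cases that are truly positive (e.g., fraud).
    tpr: fraction of positives the detector flags (true-positive rate).
    fpr: fraction of negatives the detector flags by mistake (false-positive rate).
    """
    positives = n_total * positive_rate
    negatives = n_total - positives
    true_pos = positives * tpr
    false_pos = negatives * fpr
    return true_pos, false_pos, true_pos / (true_pos + false_pos)


# Hypothetical scenario: all four inputs are illustrative assumptions.
true_pos, false_pos, precision = precision_under_imbalance(10_000, 0.01, 0.95, 0.05)
print(round(true_pos), round(false_pos), f"{precision:.1%}")
```

Under these assumptions, roughly 95 real fraud cases are flagged alongside roughly 495 legitimate transactions, so only about 16% of alerts point to actual fraud, even though the detector looks accurate on each class separately.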

Transformers are typically used where sequential input-output relationships must be derived, Searle said, and the number of possible combinations of features requires focused attention to provide local context. For this reason, transformers have established preeminence in NLP applications, as they can process content of widely varying length, from phrases to whole documents. Transformers are also good at suggesting the next move in applications like gaming, where a set of potential responses must be evaluated with respect to the conditional sequence of inputs.

One limitation of transformers is that they're not as efficient as GANs in terms of computation, memory or data. Compared to GANs, transformer models require considerably more IT resources both to train and to run, the latter step being known as inference. Hence, GANs are a better option for generating synthetic data based on existing data sets.

There's also active research into combining GANs and transformers into so-called GANsformers. The idea is to use a transformer to provide an attentional reference so the generator can increase the use of context to enhance content.

"The intuition behind GANsformers is that human attention is based on the specific local features of an object of interest, in addition to the latent global characteristics," Searle explained. The resulting improved representations are more likely to simulate both the global and local features a human might perceive in an authentic sample, such as a realistic face or computer-generated audio consistent with a human voice's tone and rhythm.

GANsformers could also help map linguistic concepts to specific areas of adjustments or types of adjustments to photos, videos or 3D models. In addition, they could help fill in or improve the rendering of details in generated images. For example, they could make sure humans have five fingers as opposed to the different numbers of fingers often found in many deepfakes. They could also fill in local features to create better deepfakes, such as the subtle color changes in skin tones associated with increased blood flow that aren't noticed by humans but are used to detect fake video content.

Are transformer-based networks stronger than GANs?

Awareness of transformers is growing thanks to their role in popular tools, such as ChatGPT, and their support for multimodal AI. But transformers won't necessarily replace GANs for all applications.

Searle said he expects to see more integration to create text, voice and image data with enhanced realism. "This may be desirable where improved contextual realism or fluency in human-machine interaction or digital content would enhance the user experience," he noted. For example, GANsformers might be able to generate synthetic data to pass the Turing test when confronted by both a human user and a trained machine evaluator. In the case of text responses, such as those furnished by a GPT system, the inclusion of idiosyncratic errors or stylistic traits could mask the true origin of an AI-derived output.

Conversely, improved realism might be problematic with deepfakes used to launch cyberattacks, damage brands or spread fake news. In these cases, GANsformers could provide better filters to detect deepfakes.

"The use of adversarial training and contextual evaluation could produce AI systems able to provide enhanced security, improved content filtering and defense against misinformation attacks using generative botnets," Searle said.

But Zidaritz believes transformers can potentially edge out GANs in many use cases since they can be applied to text and images more easily. "New GANs will continue to be developed, but their applications will be more limited than that of GPTs," he said. "It is also likely that we will see more GAN-like transformers and transformer-like GANs, in both of which the transformer with its self-attention or its self-similarity mechanism will be central."

It's important to note that there has been considerably more research and development on multimodal LLMs, which can sometimes achieve content-generation results like those of GANs. They also make it easier to translate human intent into results. While they might not be as computationally efficient as GANs, considerably higher levels of investment in LLM research are closing the gap.

Other promising machine learning techniques for generative models are starting to mature. Both variational autoencoders (VAEs) and diffusion methods can be applied to many existing GAN use cases. For example, both VAEs and diffusion figure prominently in Nvidia's new Cosmos platform for physical AI simulation.

Editor's note: This article was updated in January 2025 to improve the reader experience.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.