GANs vs. VAEs: What is the best generative AI approach?

The use of generative AI is taking off across industries. Two popular approaches are GANs, which are used to generate multimedia, and VAEs, used more for signal analysis.

Generative adversarial networks and variational autoencoders are two of the most popular approaches used to produce AI-generated content.

Here is a summary of their chief similarities and differences:

- Both GANs and VAEs are types of models that learn to generate new content. This content includes AI models, synthetic data in structured and unstructured formats, possible drug targets and realistic multimedia, such as voices and images.

- In general, GANs tend to be more widely used to generate multimedia. VAEs are used more to analyze and process signals in audio, images and other data to extract meaningful patterns and features.

- GANs and VAEs can be used in tandem to take advantage of their respective strengths. A VAE-GAN hybrid would combine the VAE's ability to generate meaningful representations of data with the GAN's talent for producing high-quality, realistic images.

Let's examine the history of GANs and VAEs, expand on their similarities and differences, and examine some use cases.

What are GANs and how do they work?

Ian Goodfellow and fellow researchers at the University of Montreal introduced GANs in 2014. They have shown tremendous promise in generating many types of realistic data. Yann LeCun, chief AI scientist at Meta, has written that GANs and their variations are "the most interesting idea in the last ten years in machine learning."

For starters, GANs have generated realistic speech, including matching voices and lip movements to produce better translations. They have also translated imagery from one form to another, such as between night and day scenes, and transferred dance moves between bodies. Combined with other AI techniques, they improve security and build better AI classifiers.

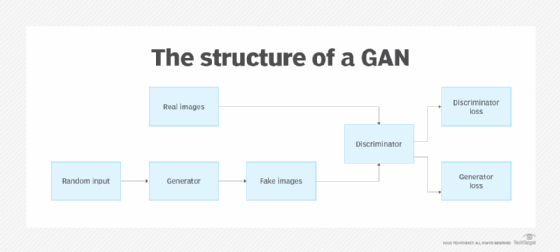

The mechanics of GANs involve the interplay of two neural networks that work together to generate and then classify data that is representative of reality. GANs generate content using a generator neural network that is tested against a second neural network, the discriminator, which determines whether the content looks "real." This feedback helps train a better generator network. The discriminator can also detect fake content or a piece of content that's not part of the domain. Over time, both neural networks improve, and the feedback helps them learn to generate data as close to reality as possible.
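The feedback loop described above is usually expressed as a pair of loss functions. The following is a minimal sketch, assuming the discriminator outputs a probability that a sample is real and that both losses are binary cross-entropy, which is one common choice rather than the only one. The function names and the example probabilities are illustrative assumptions, not part of any particular library.

```python
import math


def _mean(values):
    return sum(values) / len(values)


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = _mean([-math.log(p + eps) for p in d_real])
    fake_term = _mean([-math.log(1.0 - p + eps) for p in d_fake])
    return real_term + fake_term


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return _mean([-math.log(p + eps) for p in d_fake])


# Hypothetical discriminator outputs (probability that a sample is real).
separating = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
confused = discriminator_loss(d_real=[0.6, 0.5], d_fake=[0.5, 0.6])

# A discriminator that separates real from fake has the lower loss.
print(separating < confused)

# The generator's loss falls as the discriminator is fooled more often.
print(generator_loss([0.9]) < generator_loss([0.1]))
```

In a full training loop, the two losses would be minimized in alternation: one step updates the discriminator's weights, the next updates the generator's.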

What are VAEs and how do they work?

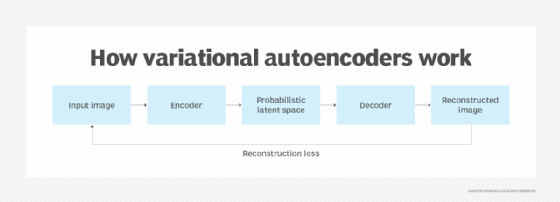

VAEs were also introduced in 2014. They were developed by Diederik Kingma, a research scientist at Google, and Max Welling, research chair in machine learning at the University of Amsterdam. Like GANs, VAEs promise to create more effective classification engines for various tasks but with different mechanics. At their core, VAEs build on neural network autoencoders made up of two neural networks: an encoder and a decoder. The encoder network learns to compress input data into a compact representation, while the decoder network learns to regenerate the original data from that representation.

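Traditionally, autoencoder techniques have been used to clean data, improve predictive analysis, compress data and reduce the dimensionality of data sets for other algorithms. VAEs take this further: In addition to minimizing errors between the raw signal and the reconstruction, they learn a probability distribution over the compressed representation, which makes it possible to sample new content from it.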
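A common way to write the VAE training objective is a reconstruction error plus a Kullback-Leibler (KL) term that keeps the encoder's output close to a standard normal distribution. The sketch below assumes a Gaussian latent space and a squared-error reconstruction term. The function names, the `beta` weight and the example vectors are assumptions for illustration, and the encoder and decoder networks themselves are left out.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


def squared_error(x, x_hat):
    """Reconstruction error between the input and the decoder's output."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Reconstruction error plus a weighted KL regularization term."""
    return squared_error(x, x_hat) + beta * kl_to_standard_normal(mu, log_var)


# Hypothetical encoder output for one input: a mean and log-variance
# for each of two latent dimensions.
mu, log_var = [0.5, -0.2], [-1.0, -0.5]
z = reparameterize(mu, log_var, random.Random(0))  # vector passed to the decoder

# Hypothetical input and decoder reconstruction.
x, x_hat = [1.0, 2.0, 3.0], [0.9, 2.1, 2.8]
print(vae_loss(x, x_hat, mu, log_var))
```

Sampling through `reparameterize` rather than directly from the distribution is what lets gradients flow back to the encoder during training. Generating new content then amounts to drawing `z` from a standard normal distribution and running only the decoder.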

"VAEs are extraordinarily strong in providing near-original content with just a reduced vector. It also allows us to generate inexistent content that can be used free of licensing," said Tiago Cardoso, group product manager at Hyland Software.

GANs vs. VAEs: How do they compare?

The biggest difference between GANs and VAEs is how they are applied. Pratik Agrawal, partner at Bain & Company, said that GANs are typically employed when dealing with any imagery or visual data. He finds VAEs work better for signal processing use cases, such as anomaly detection for predictive maintenance or security analytics applications.
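One way anomaly detection with an autoencoder-style model is often set up is to treat reconstruction error as an anomaly score: A model trained on normal signals reconstructs them well and reconstructs unusual ones poorly. The sketch below assumes the reconstruction errors have already been computed by some trained model. The threshold rule (mean plus three standard deviations), the function names and the sample values are illustrative assumptions.

```python
import statistics


def anomaly_threshold(baseline_errors, k=3.0):
    """Threshold = mean + k standard deviations of errors on normal data."""
    mean = statistics.mean(baseline_errors)
    spread = statistics.pstdev(baseline_errors)
    return mean + k * spread


def flag_anomalies(errors, threshold):
    """Mark each reading whose reconstruction error exceeds the threshold."""
    return [error > threshold for error in errors]


# Hypothetical reconstruction errors from known-good sensor readings.
baseline = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08]
threshold = anomaly_threshold(baseline)

# Hypothetical errors for new readings; the second is far above the baseline.
flags = flag_anomalies([0.11, 0.45, 0.09], threshold)
print(flags)
```

In a predictive maintenance setting, the flagged readings would be the ones routed to an engineer for inspection. The value of `k` trades off missed anomalies against false alarms.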

Here is a list of other similarities and differences.

Similarities

Unsupervised learning methods. GANs and VAEs can learn to identify patterns and generate new content using unlabeled data sets. This can streamline data preparation compared to supervised techniques.

Neural networks. Both techniques use neural networks but in different ways.

Flexibility. Both techniques work well with diverse data types, especially images, sounds and videos. They can also work with text, particularly when describing data features, but they are not as good as other techniques, such as transformers, for text generation.

Differences

Architecture. GANs are adversarial in that they pit two neural networks against each other. In contrast, VAEs learn a probabilistic representation to improve the process of encoding and then decoding data.

Training mechanism. GANs train two competing neural networks, often convolutional neural networks or recurrent neural networks: a generator that creates content and a discriminator that learns to distinguish fake samples from real ones. Each network's progress pushes the other to improve. VAEs train an encoder and a decoder together to encode data more efficiently and then reconstruct the original sample as accurately as possible.

Output quality. GANs are better at generating sharp, high-quality, realistic samples. VAEs tend to produce blurrier images but do better with time series data, such as speech, video or synthetic continuous IoT data streams.

Stability. GANs are less stable to train because small changes in settings or in the balance between the two networks can lead to large changes in results. VAEs can be trained more gradually, making fine-tuning the model easier.

Use in tandem

Researchers sometimes combine the techniques to achieve better results for a given use case. For example, a VAE-GAN can use the VAE's decoder as the GAN's generator and add a discriminator to improve the quality of samples generated by the VAE.

A VAE-GAN combination can also be used in hand pose estimation to predict hand and finger positions in relation to a fixed reference point. In this instance, the VAE generates a sequence of hand poses while the GAN discovers the relative depth or distance between hand joints. This helps track and analyze hand movements in space.

Another use case is using VAEs to learn the representations of brain waves combined with GANs to generate mental imagery associated with particular patterns.

Generative AI use cases

Generative AI techniques like GANs and VAEs can be deployed in a variety of use cases, including the following real-life applications:

- Deploying deepfakes to mimic people.

- Generating malware examples to train anomaly detectors.

- Generating synthetic traffic data to improve intrusion detection.

- Improving dubbing for movies.

- Creating photorealistic art in a particular style.

- Suggesting new drug compounds to test.

- Designing physical products and buildings.

- Optimizing new chip designs.

- Writing music in a specific style or tone.

- Creating synthetic data to train autonomous cars and robots.

Because both VAEs and GANs are neural networks, whose outputs can be hard to trace back to their inputs, their use in live business applications can be limited, Agrawal said. Data scientists and developers working with these techniques must tie results back to inputs and run sensitivity analyses. It's also essential to consider factors such as the sustainability of these tools and to address who runs them, how often they are maintained and the technology resources needed to update them.

It's worth noting that a variety of other techniques have recently emerged in generative AI, including diffusion models used to generate and optimize images; transformers, such as the GPT models behind OpenAI's ChatGPT, that are widely used in language generation; and neural radiance fields, a technique used to create realistic 3D media from 2D data.

Editor's note: This article was updated in March 2025 to include more information on the differences between VAEs and GANs and to add further use cases.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.