
Build an AI agent with LangChain in this beginner tutorial

LangChain is a popular choice amongst developers for building AI applications, including agents. Use this tutorial to get started with the framework.

LangChain can help build agents and other types of AI applications. However, developers must understand its installation and building procedures to use it successfully.

LangChain is an open source framework for connecting large language models (LLMs) with data, software utilities and other LLMs. It does this through various prebuilt tools and integrations that make it easy for developers to implement the functionality needed to build AI applications.

LangChain is particularly beneficial for agentic AI development. With LangChain, users can create an AI agent that can pass a human request to an LLM. The LLM processes the request and determines which tools or commands are needed to fulfill it. The agent then executes tasks automatically.

This LangChain tutorial for beginners covers the basics of LangChain installation and how to build an agent that can manage files on a local computer using LangChain's File System tool.

Install LangChain

Before building agents or other AI applications, developers must get comfortable with LangChain's simple installation process.

All commands in this article were tested on an Ubuntu 25.04 system, but most will work on any OS that supports Python 3. The only exceptions are the sudo apt commands used to install Python 3 and Pip, a package manager for Python. Apt is Ubuntu's package management utility; users running an OS other than Ubuntu must install these two packages using a system-supported installation method.

First, install Python 3 and Pip:

sudo apt update

sudo apt install python3 python3-pip

Next, use Pip to install the LangChain package:

pip install langchain
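
As a quick sanity check after installation, the following sketch looks up the installed version of a package using Python's standard importlib.metadata module. The helper name installed_version is illustrative and not part of LangChain; it returns None when the package is missing.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("langchain")
    if found is None:
        print("langchain is not installed; run: pip install langchain")
    else:
        print(f"langchain {found} is installed")
```

The same check works for the other packages installed later in this tutorial, such as langchain-community and langgraph.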

Test LangChain

To test that LangChain is properly set up, run some simple code that sends a query to an LLM from within a terminal. Developers can run the following commands directly in a Python console, although executing them inside a Jupyter Notebook might be more convenient and enable a more interactive approach.

First, install the LangChain package for the LLM in use. This tutorial uses Google's Gemini model, but LangChain supports a range of models.

Install the Gemini package:

pip install -qU "langchain[google-genai]"

Next, run the following commands to import the modules needed to interact with Gemini. These commands also prompt the user to enter a Gemini API key if one is not already set in the GOOGLE_API_KEY environment variable. Developers can create a key in Google AI Studio.

import getpass

import os

if not os.environ.get("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter API key for Google Gemini: ")

from langchain.chat_models import init_chat_model

model = init_chat_model("gemini-2.0-flash", model_provider="google_genai")

from langchain_core.messages import HumanMessage, SystemMessage

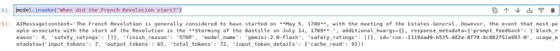

Next, pass a query to Gemini using the .invoke method. For example, the following command asks Gemini when the French Revolution started:

model.invoke("When did the French Revolution start?")

Figure 1 shows Gemini's output based on code running inside a Jupyter Notebook.
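
The .invoke method returns a message object rather than a plain string, and the reply text lives in its content attribute. In LangChain, content is usually a string but can also be a list of strings and dictionary blocks, so the sketch below normalizes both shapes. The helper name content_to_text is hypothetical, and the assumption that text blocks carry a "text" key follows the common block format rather than anything stated in this tutorial.

```python
from typing import Any


def content_to_text(content: Any) -> str:
    """Flatten a message's content (str or list of blocks) into plain text."""
    if isinstance(content, str):
        return content
    parts = []
    for block in content:
        if isinstance(block, str):
            parts.append(block)
        elif isinstance(block, dict) and block.get("type") == "text":
            # Assumed block shape: {"type": "text", "text": "..."}
            parts.append(block.get("text", ""))
    return "".join(parts)


# Usage with the tutorial's model (requires an API key, so it is commented out):
# response = model.invoke("When did the French Revolution start?")
# print(content_to_text(response.content))
```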

Build an AI agent with LangChain

The above tutorial showed how to use LangChain to send a message to an LLM and view the response. However, it didn't create an agent because the LLM wasn't connected to any tools that would enable it to carry out commands.

The following tutorial uses LangChain to connect to an LLM and integrate with a tool. Specifically, it uses the File System tool, which provides the commands necessary to manage files on a computer. With the tools selected in this tutorial, the agent will be able to read files, write files and list directory contents based on natural-language input from the user.

1. Integrate an LLM

Install the integration with the LLM that will guide the agent. This tutorial uses Gemini:

pip install -qU "langchain[google-genai]"

Next, set up an API key for the LLM:

import getpass

import os

if not os.environ.get("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter API key for Google Gemini: ")

from langchain.chat_models import init_chat_model

model = init_chat_model("gemini-2.0-flash", model_provider="google_genai")

2. Set up the tool

Install and configure the desired LangChain tool. As noted above, this tutorial's tool is File System, which enables interaction with files on a computer.

The File System tool is part of the langchain-community package. Install it with the following command:

pip install -qU langchain-community

Then, import the modules needed to use File System:

from langchain_community.agent_toolkits import FileManagementToolkit

Next, set a working directory, which maps File System onto a directory on the host computer's local file system. This tutorial uses a temporary directory, because it's not advisable to give the tool access to a directory containing important files without deploying security safeguards.

Set up the directory:

from tempfile import TemporaryDirectory

working_directory = TemporaryDirectory()
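
One detail worth knowing before continuing: a TemporaryDirectory object deletes its directory and everything in it when cleanup() is called or when a with block exits. The short side sketch below, which uses only the standard library, shows that lifecycle. The file name is an arbitrary example, and this code is separate from the tutorial's own working_directory.

```python
from pathlib import Path
from tempfile import TemporaryDirectory

with TemporaryDirectory() as tmp:
    scratch = Path(tmp)
    # Files written here live only as long as the with block.
    (scratch / "example.txt").write_text("hello")
    existed_inside = (scratch / "example.txt").exists()

# After the block exits, the directory and its contents are gone.
exists_after = scratch.exists()
print(existed_inside, exists_after)
```

In the tutorial, working_directory is created without a with block, so files the agent creates persist until working_directory.cleanup() is called or the Python session ends.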

Finally, enable the tool:

tools = FileManagementToolkit(
    root_dir=str(working_directory.name),
    selected_tools=["read_file", "write_file", "list_directory"],
).get_tools()
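
The root_dir argument is what confines the tools to the working directory. As a rough illustration of the idea, and not the toolkit's actual implementation, the sketch below resolves a requested path against a root and rejects anything that would land outside it. The function name resolve_inside_root and the example paths are hypothetical.

```python
from pathlib import Path


def resolve_inside_root(root: str, requested: str) -> Path:
    """Resolve `requested` under `root`, refusing paths that escape it."""
    root_path = Path(root).resolve()
    candidate = (root_path / requested).resolve()
    # relative_to raises ValueError when candidate is not under root_path.
    try:
        candidate.relative_to(root_path)
    except ValueError:
        raise ValueError(f"{requested!r} is outside {root_path}") from None
    return candidate


if __name__ == "__main__":
    print(resolve_inside_root(".", "notes/todo.txt"))
    try:
        resolve_inside_root(".", "../../etc/passwd")
    except ValueError as err:
        print("rejected:", err)
```

A check like this is only one layer of protection, which is why the tutorial also points the tool at a throwaway directory.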

3. Build an AI agent

With both the LangChain LLM integration and the tool set up, developers can now create an agent.

As a prerequisite, install LangGraph -- an AI agent framework LangChain developed that is not provided in the default LangChain package -- with the following command:

pip install -U langgraph

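Next, combine the LLM defined in the first section of this tutorial with the tools defined in the second section. The bind_tools call shows what binding looks like, but model_with_tools is not used afterward, because create_react_agent takes the unbound model and the tool list and binds them itself:

model_with_tools = model.bind_tools(tools)

from langgraph.prebuilt import create_react_agent

agent_executor = create_react_agent(model, tools)

The resulting agent_executor object is the AI agent.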
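
Conceptually, an agent of this kind runs a loop: the model either asks for a tool call or gives a final answer, and the agent executes requested tools and feeds the results back. The toy sketch below imitates that loop with a scripted stand-in for the LLM. Everything in it (fake_model, run_agent, the message dictionaries) is hypothetical and far simpler than what create_react_agent builds; it only shows the control flow.

```python
from typing import Callable, Dict, List


def list_directory(path: str) -> str:
    """Stand-in tool: pretend the directory is empty."""
    return f"No files found in {path}"


def fake_model(messages: List[dict]) -> dict:
    """Scripted stand-in for an LLM: call a tool once, then answer."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The tool reported: {last['content']}"}
    return {"role": "assistant", "tool": "list_directory", "args": {"path": "."}}


def run_agent(model: Callable[[List[dict]], dict],
              tools: Dict[str, Callable[..., str]],
              user_text: str,
              max_steps: int = 5) -> List[dict]:
    """Alternate between model decisions and tool execution until an answer."""
    messages = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        if "tool" not in reply:
            break  # The model produced a final answer.
        result = tools[reply["tool"]](**reply["args"])
        messages.append({"role": "tool", "content": result})
    return messages


if __name__ == "__main__":
    history = run_agent(fake_model, {"list_directory": list_directory}, "List files")
    for message in history:
        print(message)
```

The max_steps cap is a design choice in this sketch: it keeps a misbehaving model from requesting tools forever.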

4. Run the AI agent

Now, the agent can perform tasks.

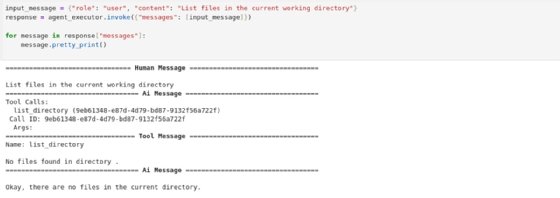

Submit a request to the agent by modifying the content value of the input_message variable. For example, the following code asks the agent to list files in the current working directory and then prints every message in the response:

input_message = {"role": "user", "content": "List files in the current working directory"}

response = agent_executor.invoke({"messages": [input_message]})

for message in response["messages"]:
    message.pretty_print()

Figure 2 shows what the output looks like.

Figure 2 shows that the tool found no files in the directory, which makes sense because there aren't any yet. The tool reported this finding to the LLM, which generated a response accordingly.

Next, submit a request asking the agent to create a file named sample-file.txt:

input_message = {"role": "user", "content": "Create a file named sample-file.txt"}

response = agent_executor.invoke({"messages": [input_message]})

for message in response["messages"]:
    message.pretty_print()

Figure 3 shows the agent creating the file as requested.

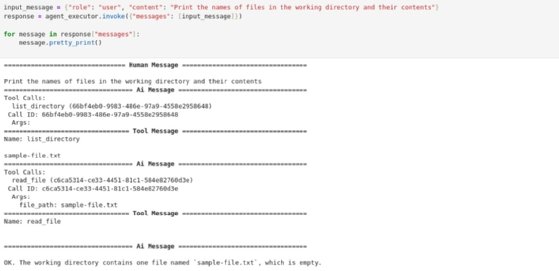

Next, ask the agent to state which files are in the directory:

input_message = {"role": "user", "content": "Print the names of files in the working directory and their contents"}

response = agent_executor.invoke({"messages": [input_message]})

for message in response["messages"]:
    message.pretty_print()

As the agent's response in Figure 4 indicates, the directory now contains a file named sample-file.txt.
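
Because each request in this section repeats the same steps, they can be wrapped in a small helper. The sketch below assumes only what the tutorial shows: the agent has an invoke method that accepts a messages list and returns a dictionary with a "messages" key. The ask function and the EchoAgent stub used to exercise it are hypothetical.

```python
from types import SimpleNamespace
from typing import Any, List


def ask(agent: Any, text: str) -> List[Any]:
    """Send one user request to the agent and return all resulting messages."""
    input_message = {"role": "user", "content": text}
    response = agent.invoke({"messages": [input_message]})
    return response["messages"]


class EchoAgent:
    """Stub standing in for agent_executor so the helper runs without an LLM."""

    def invoke(self, payload: dict) -> dict:
        user = payload["messages"][-1]
        reply = SimpleNamespace(content=f"Received: {user['content']}")
        return {"messages": [user, reply]}


if __name__ == "__main__":
    messages = ask(EchoAgent(), "List files in the current working directory")
    print(messages[-1].content)
```

With the real agent, looping over ask(agent_executor, "Create a file named sample-file.txt") and calling pretty_print() on each message should reproduce the output format shown in this section.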

5. Explore more complex use cases

The tutorial above demonstrated the basics of setting up LangChain and building AI agents. But it's a simple example where the agent carries out only basic tasks -- namely, managing files on a local file system.

This represents a small portion of what LangChain can do. To understand other use cases that LangChain AI agents can support, look at the complete list of LangChain tools. LangChain can integrate with various websites, search engines, software development tools and more, making it possible to automate a wide variety of tasks using AI agents.

Chris Tozzi is a freelance writer, research adviser and professor of IT and society. He has previously worked as a journalist and Linux systems administrator.