flash memory

What is flash memory?

Flash memory, also known as flash storage, is a type of nonvolatile memory that erases data in units called blocks and rewrites data at the byte level in NOR flash or at the page level in NAND flash. Flash memory is widely used for storage and data transfer in consumer devices, enterprise systems and industrial applications. Flash memory retains data for an extended period regardless of whether a flash-equipped device is powered on or off.

Flash memory is used in enterprise data center server, storage and networking technology as well as in a wide range of consumer devices, including USB flash drives -- also known as memory sticks -- SD cards, mobile phones, digital cameras, tablet computers, and PC cards in notebook computers and embedded controllers.

There are two types of flash memory: NAND and NOR. NAND flash-based solid-state drives (SSDs) are often used to accelerate the performance of I/O-intensive applications. NOR flash memory is often used to hold control code, such as the BIOS in a PC.

Flash memory is also used for in-memory computing to help speed performance and scalability of systems that manage and analyze large sets of data.

Origins of flash storage technologies

Dr. Fujio Masuoka is credited with inventing flash memory when he worked for Toshiba in the 1980s. Masuoka's colleague, Shoji Ariizumi, reportedly coined the term flash because the process of erasing all the data from a semiconductor chip reminded him of the flash of a camera.

Flash memory evolved from erasable programmable read-only memory (EPROM) to electrically erasable programmable read-only memory (EEPROM). Flash is technically a variant of EEPROM, but the industry reserves the term EEPROM for byte-level erasable memory and applies the term flash memory to larger block-level erasable memory.

How does flash memory work?

Flash memory stores information in memory cells that use floating-gate transistors to store and retrieve data. A high voltage traps electrons in the floating gate to store data, and when data needs to be wiped, the charge from the floating gate is released. To read the stored data, the charge on the floating gate is checked.

The following examines the various components and processes of flash memory:

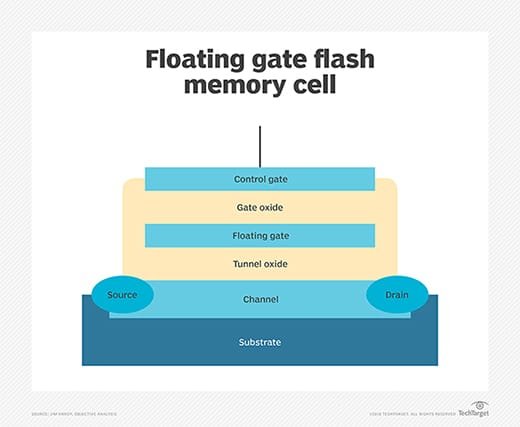

Structure. Flash memory architecture includes a memory array stacked with a multitude of flash cells. A basic flash memory cell consists of a storage transistor with a control gate and a floating gate, which is insulated from the rest of the transistor by a thin dielectric material or oxide layer. The floating gate stores the electrical charge and controls the flow of the electrical current.

Programming. Electrons are added to or removed from the floating gate to change the storage transistor's threshold voltage. Changing the voltage affects whether a cell is programmed as a zero or a one.

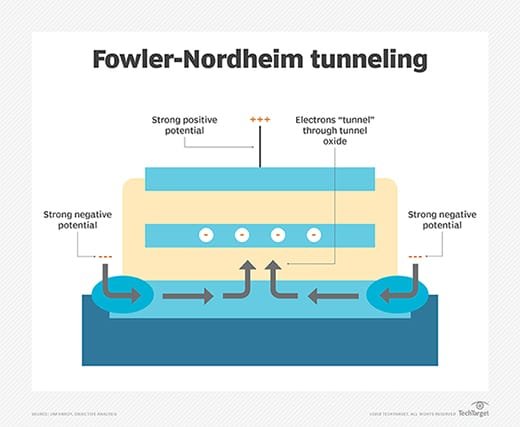

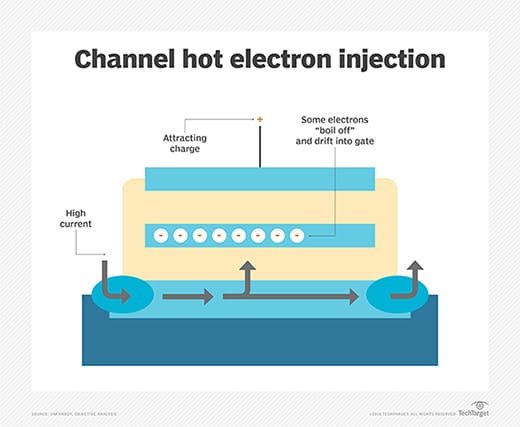

A process called Fowler-Nordheim tunneling removes electrons from the floating gate. Either Fowler-Nordheim tunneling or a phenomenon known as channel hot-electron injection traps the electrons in the floating gate.

Erasing and tunneling. With Fowler-Nordheim tunneling, data is erased via a strong negative charge on the control gate. This forces electrons into the channel, where a strong positive charge exists. The reverse happens when using Fowler-Nordheim tunneling to trap electrons in the floating gate. Electrons manage to forge through the thin oxide layer to the floating gate in the presence of a high electric field, with a strong negative charge on the cell's source and the drain as well as a strong positive charge on the control gate.

Channel hot-electron injection. Also known as hot-carrier injection, channel hot-electron injection lets electrons break through the gate oxide and change the threshold voltage of the floating gate. This breakthrough occurs when electrons acquire sufficient energy from the high current in the channel and the attracting charge on the control gate.

Electrical isolation and persistent storage. Electrons are trapped in the floating gate regardless of whether a device containing the flash memory cell is receiving power because of electrical isolation created by the oxide layer. This characteristic enables flash memory to provide persistent storage.
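The program, erase and read steps described above can be sketched as a toy model of a single floating-gate cell. This is a simplified illustration, not a device simulation: the voltage values, the class name `FloatingGateCell` and the convention that an erased cell reads as 1 are all assumptions made for the example.

```python
from dataclasses import dataclass

# Illustrative voltages only; real devices use process-specific values.
ERASED_VT = 1.0       # threshold voltage (V) with no trapped charge (assumed)
PROGRAMMED_VT = 5.0   # threshold voltage (V) with electrons trapped (assumed)
READ_REFERENCE = 3.0  # control-gate voltage applied during a read (assumed)


@dataclass
class FloatingGateCell:
    """Toy single-bit cell: trapped charge raises the threshold voltage."""
    threshold_voltage: float = ERASED_VT

    def program(self) -> None:
        # Tunneling or hot-electron injection traps electrons in the floating gate.
        self.threshold_voltage = PROGRAMMED_VT

    def erase(self) -> None:
        # Fowler-Nordheim tunneling removes the trapped electrons.
        self.threshold_voltage = ERASED_VT

    def read(self) -> int:
        # The transistor conducts only if the read voltage exceeds its threshold.
        conducts = READ_REFERENCE > self.threshold_voltage
        # Assumed convention: a conducting (erased) cell reads as 1.
        return 1 if conducts else 0


cell = FloatingGateCell()
print(cell.read())  # erased cell
cell.program()
print(cell.read())  # programmed cell
cell.erase()
print(cell.read())  # erased again
```

The model holds its state without any notion of power, which loosely mirrors how the isolated floating gate keeps its charge when the device is off.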

Examples of flash memory applications

Because of its small size, fast data access and non-volatile nature, flash memory is widely used in a variety of electronic devices.

The following are some popular use cases for flash memory:

- USB flash drives. USB flash drives, also known as thumb drives, use flash memory to store data. These drives are mainly used for storing and transferring data between different computers and electronic devices and have an integrated USB interface.

- SSDs. SSDs are storage devices that replace traditional mechanical hard disk drives (HDDs) with flash memory as the primary storage medium. SSDs are popular for laptops, desktop computers and servers because they provide faster data access, increased reliability and lower power usage.

- Memory cards. Memory cards come in many formats -- Secure Digital (SD) cards, microSD cards, memory sticks, CompactFlash cards -- and use flash memory for storage. Memory cards are commonly used to extend storage capacity in digital cameras, tablets and devices such as portable game consoles.

- Smartphones and tablets. Flash memory serves as the main storage in smartphones and tablets. It enables faster load times, effective data storage and quick access to media files.

- Wearable devices. Wearable technology uses flash memory to store user data, apps and operating systems. Examples include smartwatches, fitness trackers and smart glasses.

- Industrial robots. Many industries use industrial robots to take over labor-intensive processes. The instructions for their operations and tasks are typically programmed into their internal flash memory.

- Scientific instruments. Most modern scientific instruments, including electron microscopes, pH meters and electrical conductivity meters, contain their own flash memory to temporarily store data.

NOR vs. NAND flash memory

NOR and NAND flash memory differ in architecture and design characteristics. NOR flash uses no shared components and can connect individual memory cells in parallel, enabling random access to data. A NAND flash cell is more compact and has fewer bit lines, stringing together floating gate transistors to increase storage density.

NAND is better suited to serial rather than random data access. 3D NAND flash process geometries were developed in response to planar NAND reaching its practical scaling limit.

NOR flash is fast on data reads, but it's typically slower than NAND on erases and writes. NOR flash programs data at the byte level. NAND flash programs data in pages, which are larger than bytes, but smaller than blocks. For instance, a page might be 4 kilobytes (KB), while a block might range from 128 KB or 256 KB up to several megabytes in size. NAND flash consumes less power than NOR flash for write-intensive applications.
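The relationship between pages and blocks can be sketched in a few lines. The following is a minimal model, assuming the example sizes above (4 KB pages, 256 KB blocks); the class `NandBlock` and its methods are hypothetical names for illustration and don't correspond to any real controller interface.

```python
from typing import List, Optional

PAGE_SIZE = 4 * 1024       # 4 KB page, from the example above
BLOCK_SIZE = 256 * 1024    # 256 KB block, from the example above
PAGES_PER_BLOCK = BLOCK_SIZE // PAGE_SIZE  # 64 pages in each block


class NandBlock:
    """Hypothetical model: pages are programmed one at a time, erased together."""

    def __init__(self) -> None:
        # None marks an erased page that is ready to be programmed.
        self.pages: List[Optional[bytes]] = [None] * PAGES_PER_BLOCK
        self.erase_count = 0

    def program_page(self, index: int, data: bytes) -> None:
        if len(data) > PAGE_SIZE:
            raise ValueError("data does not fit in one page")
        if self.pages[index] is not None:
            # A programmed page cannot be overwritten in place.
            raise RuntimeError("page already programmed; erase the block first")
        self.pages[index] = data

    def read_page(self, index: int) -> Optional[bytes]:
        return self.pages[index]

    def erase(self) -> None:
        # Erasing always clears every page in the block at once.
        self.pages = [None] * PAGES_PER_BLOCK
        self.erase_count += 1


block = NandBlock()
block.program_page(0, b"hello")
try:
    block.program_page(0, b"world")  # rejected: page 0 is not erased
except RuntimeError as err:
    print(err)
block.erase()                        # clears all 64 pages
block.program_page(0, b"world")
print(PAGES_PER_BLOCK, block.erase_count)
```

The sketch shows why updating even a small amount of data in NAND can require erasing a whole block, which is one reason flash controllers usually write updates to a fresh page instead.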

NOR flash is more expensive to produce than NAND flash and tends to be used primarily in consumer and embedded devices for boot purposes and read-only applications for code storage. NAND flash is more suitable for data storage in consumer devices as well as enterprise server and storage systems due to its lower cost per bit to store data, greater density, and higher programming and erase speeds.

Devices, such as camera phones, can use both NOR and NAND flash -- in addition to other memory technologies -- to facilitate code execution and data storage.

Flash memory form factors

Flash-based media is based on a silicon substrate. Also known as solid-state devices, they're widely used in both consumer electronics and enterprise data storage systems.

The following three SSD form factors have been identified by the Solid State Storage Initiative, a project of the Storage Networking Industry Association:

- SSDs that fit into the same slots used by traditional electromechanical HDDs. SSDs have an architecture that's like an integrated circuit.

- Solid-state cards that reside on a printed circuit board and use a standard card form factor, such as Peripheral Component Interconnect Express (PCIe).

- Solid-state modules that fit in a dual inline memory module (DIMM) or small outline dual inline memory module using a standard HDD interface, such as the Serial Advanced Technology Attachment (SATA).

An additional subcategory is a hybrid hard drive that combines a conventional HDD with a NAND flash module. A hybrid hard drive is generally viewed to bridge the divide between rotating media and flash memory.

All-flash and hybrid flash memory

The advent of flash memory fueled the rise of all-flash arrays. These systems, which contain only SSDs, offer advantages in performance and potentially reduced operational costs compared to all disk-based storage arrays. The chief difference, aside from the media, is in the underlying physical architecture used to write data to a storage device.

In HDD-based arrays, each drive uses an actuator arm to position a read/write head over a specific track and sector on the disk. All-flash storage systems don't require moving parts to write data. The writes are made directly to the flash memory, and custom software handles data management.

A hybrid flash array blends disk and SSDs. Hybrid arrays use SSDs as a cache to speed access to frequently requested hot data, which subsequently is rewritten to back-end disk. Many enterprises commonly archive data from disk as it ages by replicating it to an external magnetic tape library.
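The caching behavior of a hybrid array can be illustrated with a small sketch. It assumes a least-recently-used (LRU) eviction policy, which the text above doesn't specify, and the class `HybridArray` is a hypothetical name; real arrays use vendor-specific tiering and caching logic.

```python
from collections import OrderedDict


class HybridArray:
    """Hypothetical model: a small SSD cache in front of a larger disk tier."""

    def __init__(self, cache_capacity: int) -> None:
        self.cache_capacity = cache_capacity
        self.ssd_cache = OrderedDict()  # hot data, ordered oldest to newest use
        self.disk = {}                  # back-end disk tier

    def write(self, key: str, data: bytes) -> None:
        # New writes land in the SSD cache first.
        self._cache_put(key, data)

    def read(self, key: str) -> bytes:
        if key in self.ssd_cache:
            self.ssd_cache.move_to_end(key)  # mark as recently used (hot)
            return self.ssd_cache[key]
        data = self.disk[key]                # slower path: fetch from disk
        self._cache_put(key, data)           # promote to the SSD cache
        return data

    def _cache_put(self, key: str, data: bytes) -> None:
        self.ssd_cache[key] = data
        self.ssd_cache.move_to_end(key)
        while len(self.ssd_cache) > self.cache_capacity:
            # Assumed LRU policy: the coldest entry is rewritten to disk.
            cold_key, cold_data = self.ssd_cache.popitem(last=False)
            self.disk[cold_key] = cold_data


array = HybridArray(cache_capacity=2)
for name in ("a", "b", "c"):
    array.write(name, name.encode())
print(sorted(array.ssd_cache), sorted(array.disk))
```

After the three writes, the two most recent items remain in the SSD cache and the oldest one has been moved to disk.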

Flash plus tape, also known as flape, describes a type of tiered storage in which primary data in flash is simultaneously written to a linear tape system.

In addition to flash memory arrays, the ability to insert SSDs in x86-based servers has increased the technology's popularity. This arrangement is known as server-side flash memory, and it lets companies sidestep the vendor lock-in associated with purchasing expensive and integrated flash storage arrays.

The drawback of placing flash in a server is that users must build the hardware system internally, including the purchase and installation of a storage management software stack from a third-party vendor.

Pros and cons of flash memory

The following are some advantages of flash memory:

- Flash is the least expensive form of semiconductor memory.

- Unlike dynamic random access memory (DRAM) and static RAM (SRAM), flash memory is non-volatile, offers lower power consumption and can be erased in large blocks.

- NOR flash offers increased random read speeds while NAND flash is fast with serial reads and writes.

- An SSD with NAND flash memory chips delivers significantly higher performance than traditional magnetic storage media, such as HDDs and tape.

- Flash drives consume less power and produce less heat than HDDs.

- Enterprise storage systems equipped with flash drives are capable of low latency, which is measured in microseconds or milliseconds.

The main disadvantages of flash memory are the wear-out mechanism and cell-to-cell interference as the dies get smaller. Bits can fail with excessively high numbers of program/erase cycles, which eventually break down the oxide layer that traps electrons. The deterioration can distort the manufacturer-set threshold value at which a charge is determined to be a zero or a one. Electrons could escape and get stuck in the oxide insulation layer, leading to errors and bit rot.

Anecdotal evidence suggests NAND flash drives aren't wearing out to the degree once feared. Flash drive manufacturers have improved endurance and reliability through error correction code algorithms, wear leveling and other technologies.

In addition, SSDs don't wear out without warning. They typically alert users in the same way a sensor might indicate an underinflated tire.
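Wear leveling, mentioned above, spreads program/erase cycles across blocks so that no single block wears out early. The following is a minimal sketch of that idea, assuming a simple policy that always reuses the least-erased free block; `WearLeveler` is a hypothetical name, and production flash controllers use more elaborate schemes.

```python
class WearLeveler:
    """Hypothetical allocator that always hands out the least-worn free block."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks
        self.free_blocks = set(range(num_blocks))

    def allocate(self) -> int:
        if not self.free_blocks:
            raise RuntimeError("no free blocks")
        # Pick the free block with the fewest erases; ties go to the lowest index.
        block = min(self.free_blocks, key=lambda b: (self.erase_counts[b], b))
        self.free_blocks.remove(block)
        return block

    def release(self, block: int) -> None:
        # Releasing a block erases it, which consumes one program/erase cycle.
        self.erase_counts[block] += 1
        self.free_blocks.add(block)


leveler = WearLeveler(num_blocks=4)
for _ in range(8):
    leveler.release(leveler.allocate())
print(leveler.erase_counts)  # [2, 2, 2, 2]
```

Without the leveling policy, the same eight write cycles could all land on one block; here they're spread evenly across the four blocks.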

NAND flash memory storage types

NAND flash semiconductor manufacturers have developed different types of memory suitable for a wide range of data storage use cases. The following chart explains the various NAND flash types.

Types of NAND flash memory storage

| Type | Description | Advantages | Disadvantages | Primary use |
| --- | --- | --- | --- | --- |
| Single-level cell (SLC) | Stores one bit per cell and two levels of charge. | Higher performance, endurance and reliability than other types of NAND flash. | More expensive than other types of NAND flash. | Enterprise storage, mission-critical applications. |
| Multi-level cell (MLC) | Can store multiple bits per cell and multiple levels of charge. The term MLC equates to two bits per cell. | Cheaper than SLC and enterprise MLC (eMLC), high density. | Lower endurance than SLC and eMLC, slower than SLC. | Consumer devices, enterprise storage. |
| eMLC | Typically stores two bits per cell and multiple levels of charge; uses special algorithms to extend write endurance. | Less expensive than SLC flash, greater endurance than MLC flash. | More expensive than MLC, slower than SLC. | Enterprise applications with high write workloads. |
| Triple-level cell (TLC) | Stores three bits per cell and multiple levels of charge. Also referred to as MLC-3, X3 or 3-bit MLC. | Lower cost and higher density than MLC and SLC. | Lower performance and endurance than MLC and SLC. | Mass storage consumer applications, such as USB drives and flash memory cards. |
| Vertical/3D NAND | Stacks memory cells on top of each other in three dimensions vs. traditional planar NAND technology. | Higher density, higher write performance and lower cost per bit vs. planar NAND. | Higher manufacturing cost than planar NAND; difficulty in manufacturing using production planar NAND processes; potentially lower data retention. | Consumer and enterprise storage. |
| Quad-level cell (QLC) | Uses a 64-layer architecture that's considered the next iteration of 3D NAND. | Stores four bits of data per NAND cell, potentially boosting SSD densities. | More data bits per cell can affect endurance; increased costs of engineering. | Mostly write once, read many use cases. |

Note: NAND flash wear-out is less of a problem in SLC flash than it is in less expensive types of flash, such as MLC and TLC, for which the manufacturers can set multiple threshold values for a charge.
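The bits-per-cell figures in the table translate directly into the number of charge levels a cell has to distinguish: n bits require 2^n levels, which matches the two levels listed for SLC. The short sketch below works through that arithmetic; the function names are illustrative only.

```python
# Bits per cell for each NAND type, taken from the table above.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits: int) -> int:
    # Each extra bit doubles the number of states a cell must distinguish.
    return 2 ** bits


def cells_needed(capacity_bits: int, bits: int) -> int:
    # Ceiling division: cells required to hold the requested number of bits.
    return -(-capacity_bits // bits)


for name, bits in BITS_PER_CELL.items():
    # Columns: type, charge levels per cell, cells needed to store 12 bits.
    print(name, charge_levels(bits), cells_needed(12, bits))
```

Storing the same 12 bits takes 12 SLC cells but only 3 QLC cells, while each QLC cell has to resolve 16 charge levels instead of 2. That narrower margin between levels is one way to see why denser cell types trade away endurance and performance.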

NOR flash memory types

The two main types of NOR flash memory are parallel NOR and serial NOR, the latter also known as serial peripheral interface (SPI) NOR. NOR flash was originally available only with a parallel interface. Parallel NOR offers high performance, security and additional features. Its primary uses include industrial, automotive, networking and telecom systems and equipment.

NOR cells are connected in parallel for random access. The configuration is geared toward the random reads associated with microprocessor instructions and toward executing code in portable electronic devices, almost exclusively of the consumer variety.

Serial NOR flash has a lower pin count and smaller packaging, making it less expensive than parallel NOR. Use cases for serial NOR include personal and ultra-thin computers, servers, HDDs, printers, digital cameras, modems and routers.

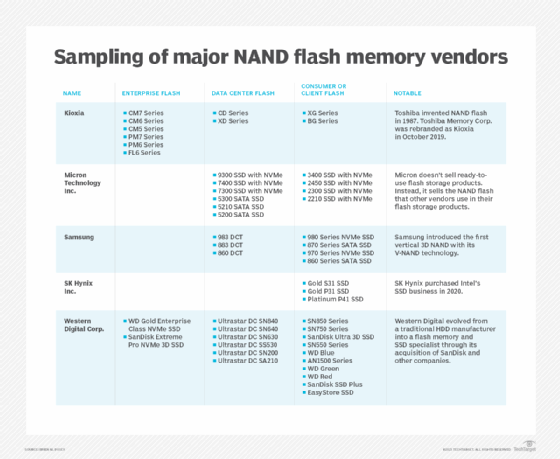

Vendor breakdown of enterprise NAND flash memory products

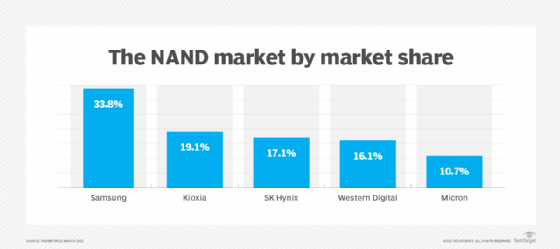

Major manufacturers of NAND flash memory chips include Kioxia -- formerly Toshiba Memory Corp. -- Micron Technology Inc., Samsung, SK Hynix Inc. and Western Digital Corp. Intel, once a leading NAND flash manufacturer, agreed in 2020 to sell its NAND and SSD business to SK Hynix.

There was a NAND flash shortage in 2016, disrupting the market. The shortfall caused SSD prices to rise and lead times to lengthen. The demand outstripped supply largely due to soaring demand from smartphone makers. In 2018, signs began showing that the shortage was near its end.

Other turmoil has also influenced the market. In September 2017, leading flash supplier Toshiba agreed to sell its chip-making unit to a group of corporate and institutional investors led by Bain Capital. Toshiba sold the flash business as part of its effort to cover financial losses and to avoid being delisted from the Tokyo Stock Exchange.

Recently, consumer demand in the electronic device market has fallen due to high inflation, post-pandemic instability and supply-chain bottlenecks, causing NAND memory pricing to plummet because of an overstock. According to market intelligence firm TrendForce, the prices for NAND memory fell by 10 to 15 percent in the first quarter of 2023. However, a rebound is anticipated if the demand stays stable.

Leading NOR vendor products

Major manufacturers of NOR flash memory include Cypress Semiconductor Corp., GigaDevice, Macronix International Co. Ltd., Microchip Technology Inc., Micron Technology Inc. and Winbond Electronics Corp.

The following are some of the features and offerings of the NOR manufacturers:

- Cypress Semiconductor acquired NOR flash provider Spansion in 2015. The Cypress NOR portfolio includes FL-L, FL-S, FS-S and FL1-K products.

- GigaDevice is a NOR flash memory designer that also develops microcontrollers, some of which are based on the Arm architecture and others on RISC-V, or reduced instruction set computer V, architecture.

- Macronix OctaFlash uses multiple banks to enable write access to one bank and read from another. Macronix MX25R Serial NOR is a low-power version that targets internet of things (IoT) applications.

- Microchip NOR is branded as SPI Serial Flash and Serial Quad I/O Flash. The vendor's parallel NOR products include the Multi-Purpose Flash devices and Advanced Multi-Purpose Flash devices families.

- Micron sells Serial NOR Flash and Parallel NOR Flash as well as Micron Xccela high-performance NOR flash for automotive and IoT applications.

- The Winbond serial NOR product line is branded as SpiFlash Memories and includes the W25X and W25Q SpiFlash Multi-I/O Memories. In 2017, Winbond expanded its line of Secure Flash NOR for additional uses, including system-on-a-chip design to support AI, IoT and mobile applications.
