The future of generative AI: 10 trends to follow in 2026

GenAI's outlook focuses on ROI expectations, power demands, data quality, agentic orchestration, plug-and-play LLMs, ethical practices, stepped-up security and human oversight.

Generative AI's adoption rate among businesses has been meteoric since the introduction of ChatGPT in November 2022. Going into 2026, more than 80% of enterprises will have tested or deployed GenAI-enabled applications, Gartner reports, compared to less than 5% in 2023. With the exception of some select business sectors and applications reporting a measurable return on investment, ROI remains a concern among many decision-makers responsible for the successful implementation of AI initiatives within their organizations.

As GenAI technologies mature and investments mount due to the rise of agentic AI, physical AI and AI data centers, 2026 could see positive ROI across organizations. Learn about these 10 GenAI trends that could shape the future of business operations.

1. Heightened ROI expectations

Returns on AI investments can vary drastically. PwC's "2025 Global CEO Survey" found that 56% of surveyed executives reported efficiency gains from their GenAI deployments, 34% saw profitability increases and 32% reported revenue increases. Meanwhile, MIT's report "The GenAI Divide: State of AI in Business 2025" cites $30 to $40 billion in GenAI business investments, but "95% of organizations are getting zero return," while just 5% of "integrated AI pilots are extracting millions in value."

Business executives are sending a clear message that their tolerance for experimentation without tangible benefits has run out. "[Business leaders] are looking for metrics and proof that their GenAI investments are delivering returns," said Brian Jackson, principal research director at Info-Tech Research Group.

2. AI as seamless as electricity

AI has progressed from a tool that simply takes orders to perform a single task to agentic systems that use reason to orchestrate processes and autonomously execute complex tasks. "It has just become part of the process," noted Adnan Masood, chief AI architect at digital transformation consultancy UST, who called AI's evolution the "industrialization of intelligence."

A case in point is code creation. Microsoft CTO Kevin Scott has predicted that AI will write 95% of code by 2030. "Top-tier developers," Scott said, "will use AI as a force multiplier to enable them to operate with greater efficiency and scope."

Gone are the days when executives ask, "Should we try this? Should we test this?" Now they're asking, "How do we run this reliably for hundreds of users?" said Manjeet Rege, director of the University of St. Thomas Center for Applied Artificial Intelligence. "The era of AI pilots is over, the era of AI operations has begun," he said. "GenAI is dissolving into the enterprise stack. Users will access it through ERP forms and CRM workflows, supply chain screens, ticketing systems and not by going [to a GenAI tool]. It is becoming like electricity. You don't see it, you use it. You use what's built on top of it."

3. Mainstreaming agentic AI

Many technology leaders are predicting a boom in the number of AI agents at work in 2026. In August 2025, Gartner predicted that enterprise applications integrated with task-specific AI agents will increase from just 5% to 40% by the end of 2026.

"Agentic AI is the next platform shift," Rege acknowledged. "We're moving from generation on-demand to action on behalf of the user. The leap from generative AI to agentic AI is the leap from answers to outcomes. Agents are taking goals instead of prompts, then splitting tasks into subtasks [to perform] and triggering business processes without human intervention to achieve desired outcomes."

The key to this trend is natural language processing provided by GenAI. "The language engine is critical," explained Arthur Carvahlo, associate professor of information systems and analytics at Miami University's Farmer School of Business. "With generative AI, we can establish our goals with natural language. I can now tell agents very explicitly what I want them to do in natural language. Before we had to use math to tell machines what we wanted them to do."

4. Humans at the steering wheel

Although businesses will augment and automate more of their workflows with GenAI and eventually agentic AI, humans won't be pushed aside. "The role of humans becomes more central, not less," Rege conjectured. "It elevates the value of human judgment" to establish AI guardrails and validate outcomes. "The real skill of the next decade," he added, "will be AI stewardship."

Human guardrails will be a necessity, but maintaining them will require fluency in AI. A September 2025 Boston Consulting Group report found that "fluency in AI is becoming essential across roles, alongside systems thinking, problem framing and sound judgment." With agent-led orchestration, the report notes, "AI takes on end-to-end execution and humans steer strategy and oversight."

5. Spotlight on ethical and responsible AI principles

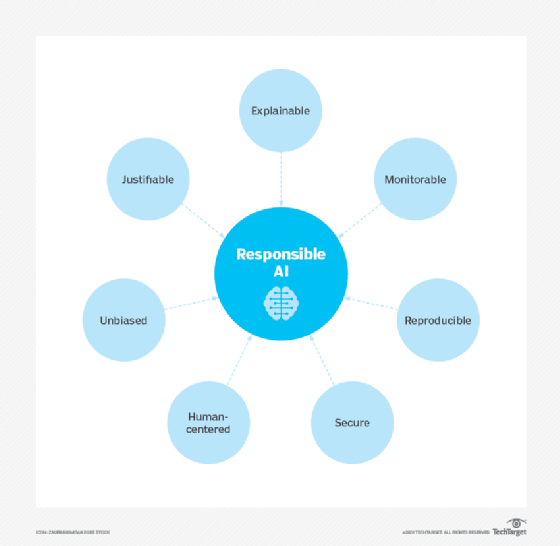

GenAI is not infallible. It can produce hallucinations and share dangerous content. There are legal accusations of copyright infringement and data privacy violations as developers use data from all across the internet to train and run GenAI models. Such concerns put a spotlight on responsible AI -- an approach to developing and deploying AI in ways that are fair, accurate, transparent, accountable, explainable and ethical.

A 2025 report, "Implementing Responsible AI in the Generative AI Age," from consultancy HCLTech and MIT Technology Review Insights, reveals that 87% of business executives surveyed recognize the critical importance of adopting responsible AI principles. Yet 85% say they are unprepared for adoption, citing implementation issues such as complexity, a shortage of staff expertise, problems managing operational risks, regulatory compliance and inadequate resource allocation.

Responsible AI is essential to earning user trust, noted Sreekanth Menon, senior vice president of the global AI practice and innovation at consultancy Genpact. "Responsible AI [RAI] is not going anywhere," he said. "In fact, enterprises around the world increased their investment in RAI for safer implementation of AI across the board. Now, with the rise of agentic AI use cases, the risk vector has broadened."

Among the 400 CIOs, CTOs, IT directors and other technology leaders surveyed by the IEEE in September 2025, 44% cite AI ethical practices as the top skill for AI-related hires in 2026 -- ahead of skills in data analysis, machine learning, data modeling and software development.

6. GenAI's uphill security battle

Hackers attack GenAI systems through prompt injections, data poisoning and model inversions. They can also exploit APIs to overload systems or extract proprietary models via query-based attacks. And they can use social engineering to trick users into sharing GenAI-generated content that contains embedded threats. In a 2025 Gartner survey, 54% of cybersecurity respondents said their organizations had experienced an attack on enterprise AI applications in the prior 12 months.

"Bigger breaches are on the horizon," acknowledged Sagar Samtani, director of Indiana University's Kelley School of Business Data Science and Artificial Intelligence Lab. "Now that AI is integrated into more of the tech stack, cybersecurity is more and more of a concern."

Hackers' capabilities are outpacing enterprise defenses, Samtani added, despite attempts by businesses to secure workflows, add security layers and establish the right permission controls. "No matter what the technology is," he explained, "adversaries are always going to have the advantage because they're not bound by laws, regulations, codes of ethics, working-hour limits or PTO [paid time off]. They're able to work around the clock, and there are so many of them with a wide range of motivations."

7. More limited GenAI model choices

When ChatGPT 5 debuted, OpenAI offered an interactive model selector for users to choose which model to use. But reasoning models are much more expensive to run, Info-Tech's Jackson reported, so vendors are taking model control away from users to help control costs. "AI providers are trying to find more ways to deliver queries through less expensive models," he said. "So the average user at a company using Gemini isn't going to have to worry whether to use the Flash version or the Deep Think version. There will be an agent that decides that for them to get the best answer at the best price."
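
The routing Jackson describes can be pictured as a small dispatcher that sends each prompt to the cheapest model tier likely to handle it. The sketch below is purely illustrative: the tier names, the per-token prices and the keyword-based complexity check are all assumptions made for this example, not any vendor's actual routing logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                  # hypothetical tier label
    usd_per_1k_tokens: float   # made-up price, for illustration only


# Hypothetical tiers; names and prices are assumptions, not vendor figures.
FAST = ModelTier("fast", 0.0005)
REASONING = ModelTier("reasoning", 0.0100)

# Crude stand-in for a real complexity classifier (simple substring match).
REASONING_HINTS = ("prove", "step by step", "analyze", "plan", "debug")


def route(prompt: str, long_prompt_words: int = 200) -> ModelTier:
    """Pick the cheaper tier unless the prompt looks like it needs reasoning."""
    text = prompt.lower()
    needs_reasoning = (
        len(text.split()) > long_prompt_words
        or any(hint in text for hint in REASONING_HINTS)
    )
    return REASONING if needs_reasoning else FAST


def estimated_cost(prompt: str, expected_tokens: int) -> float:
    """Estimated spend in USD for a request of the given token size."""
    tier = route(prompt)
    return tier.usd_per_1k_tokens * expected_tokens / 1000
```

In this toy setup, a short factual question goes to the inexpensive tier, while a prompt asking the model to analyze data and plan next steps is escalated. A production router would more plausibly rely on a learned classifier or on the model's own confidence than on keywords.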

8. Greater demand for interpretability

Businesses know the value of understanding and explaining how AI models make decisions or generate outputs. "There is a question of ROI and how to make sure you get value out of these models, and that means you need to quantify risk -- there is some level of risk in AI systems -- so you need to quantify that and you need to know what it can and can't do," said Shayan Mohanty, chief data and AI officer at consultancy Thoughtworks.

Businesses need to know "what is actually happening beneath the hood," he added. "It's something [they're] going to have to contend with in the future. If you believe your enterprise is going to be AI-enabled at some level, and most companies are making a bet that their companies will transform because of AI, then you have to be able to inspect those AI systems the way you do with code."

9. Satisfying GenAI's hunger pangs

GenAI models require significant compute power, making them "power-hungry monsters," said Karen Panetta, dean of graduate education at Tufts University's School of Engineering and an IEEE fellow. "They need high-performance computers, and those computers need power." As a result, data centers are proliferating along with sustainability concerns, she added.

Data centers will generate around 2.5 billion metric tons of CO2 emissions worldwide by 2030, about three times more than they would emit without GenAI, according to research by Morgan Stanley.

Hyperscalers are building more efficient and sustainable data centers by using smarter cooling technologies and renewable energy sources, as well as placing them in geographic regions where environmental features support sustainability objectives, Panetta said.

10. Cost management in GenAI strategies

As GenAI and agentic AI deployments increase, Mohanty said token consumption could explode, and costs could rise exponentially. "Token costs are coming down, but the total volume of tokens is being generated as fast, if not faster," he explained, advocating for applying FinOps to GenAI strategies.
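
Mohanty's point comes down to simple arithmetic: spend is unit price multiplied by volume, so a falling price lowers the bill only if volume grows more slowly than the price drops. The sketch below projects yearly spend under fixed annual change factors. Every figure in it (the starting price, the starting volume, the halving of price and the tripling of volume) is a hypothetical chosen for illustration, not a number from the article.

```python
from typing import List


def project_spend(
    price_per_million: float,   # USD per million tokens in year 0 (assumed)
    tokens_millions: float,     # millions of tokens consumed in year 0 (assumed)
    price_change: float,        # yearly price multiplier, e.g. 0.5 = price halves
    volume_growth: float,       # yearly volume multiplier, e.g. 3.0 = volume triples
    years: int,
) -> List[float]:
    """Return total spend for year 0 through `years`, inclusive."""
    spend = []
    for _ in range(years + 1):
        spend.append(price_per_million * tokens_millions)
        price_per_million *= price_change
        tokens_millions *= volume_growth
    return spend


# Price halves each year while volume triples: spend still grows 1.5x a year.
print(project_spend(10.0, 1000.0, 0.5, 3.0, 3))
# [10000.0, 15000.0, 22500.0, 33750.0]
```

Under these assumed factors the bill rises 50% a year despite cheaper tokens, which is the kind of trend a FinOps practice would aim to surface early.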

GenAI technologies to know in 2026

GenAI technology trends to watch include building and upgrading infrastructure that supports GenAI, the tendency for businesses to adopt language models rather than build their own and the availability of domain-specific, open-source and sovereign AI models for certain tasks.

Data infrastructure

GenAI requires a large volume of high-quality data. GenAI and agentic AI also need access to data, requiring businesses to build the infrastructure necessary to get their AI programs and AI agents talking to their unstructured and structured data.

"There is this question on how exactly to design data products with AI consumption as a first-class citizen," Mohanty said. "That's a new notion that no one has a handle on. This is top of mind for all CIOs, CDOs and CTOs. They'll have a number of agents running around their organizations, but in order for them to be effective, they have to have access to internal data. And the question is how that works mechanically and how do they govern that. It requires new reference architecture."

Rege noted that "the winners in 2026 will be those who treat data as infrastructure and not a byproduct. Data is a competitive advantage."

Vendor-built language models

For decades, businesses have had to determine whether to build their own technologies, buy them or pursue a combination of the two. GenAI is no different. A recent KPMG survey found that 50% of organizations were buying or leasing their GenAI from vendors, 29% were pursuing a mix of building, buying and partnering and 12% were building GenAI models in-house.

"The ones who adopted a vendor solution are successful most of the time," Jackson reported. Businesses struggle with training their own large language models, which can frequently stymie success, he explained. But businesses that leave the heavy work to vendors and tweak only "where they have the most to gain from customization," such as with AI agents, report greater levels of success, he added.

Domain-specific models

Among the emerging technologies for 2026 are domain-specific GenAI models that are trained on data from a specific industry or professional discipline to perform specialized tasks within those sectors or professions. Domain-specific AI models have emerged in cybersecurity, law and healthcare, Jackson said.

Open-source AI models

Many open-source models are quickly improving, making them worth watching in 2026, said Carvahlo. Like open-source software, open-source AI model code, architecture and sometimes even training data are publicly available for anyone to use, modify and distribute under an open-source license.

Sovereign AI

"Countries are starting to think about AI as a strategic economic differentiator," Jackson said, explaining that some countries want to develop and control their own AI systems to ensure they always have access to AI capabilities and are free from the control of other governments. The topic might not dominate discussions in the U.S., but many other countries see it as important. Jackson said an Info-Tech 2026 trends report found that 39.6% of IT leaders surveyed were exclusively using sovereign AI, 20.9% were using sovereign AI and foreign AI, 14.2% were actively assessing sovereign AI models, 12.6% had no immediate plans for adoption, 9.2% said it was not a consideration and 3.6% said no sovereign options were available.

Mary K. Pratt is an award-winning freelance journalist with a focus on covering enterprise IT and cybersecurity management.