AI agent vs. chatbot: Breaking down the differences

Despite using similar AI technologies, AI agents automate tasks while chatbots converse with users. Understanding their differences is key to using the right AI for business needs.

While AI agents and chatbots rely on some of the same underlying technologies -- including large language models, or LLMs -- they are fundamentally different in most other respects. They address different use cases and excel in distinct areas.

An AI agent is an autonomous system that uses AI to guide its ability to complete tasks and automate workflows. A chatbot is a software program that interacts with human users conversationally.

While a chatbot might be better than an AI agent for a particular use case -- or vice versa -- most businesses will benefit from adopting both types of AI to address diverse use cases. AI agents and chatbots don't need to be either/or technologies. However, understanding their differences is a critical first step when choosing the right AI-powered tool for enterprise use cases.

AI agent vs. chatbot: Key differences

Although modern AI agents and chatbots often use generative AI and LLM technologies, the tasks they use them for, how they interact with users, and the types of use cases they excel at are all different.

AI agents and chatbots diverge in four areas: capabilities, components, risks and management challenges, and integrations.

1. Capabilities

Chatbots primarily interpret and respond to natural language input. In contrast, AI agents support a broader range of capabilities, like automating various business processes.

This doesn't mean that every AI agent can do almost anything. Agents are usually designed to support specific use cases, such as managing business accounting records or automating software application installation.

Chatbots are primarily helpful for tasks that center on answering questions or sharing information, such as helping an employee find information within a corporate database or assisting a customer in troubleshooting a problem by suggesting fixes. In contrast, AI agents can, in principle, take on any task that can be completed using digital tools, although a given agent typically handles only the tasks it was designed for.

2. Components

Most modern chatbots require two basic components: an LLM or other type of AI model capable of interpreting and responding to user prompts, and an interface -- such as a website or a voice recognition system -- that enables users to interact with the chatbot.

AI agents are more complex. In addition to requiring access to an LLM and an end-user interface, agents also integrate with software applications and utilities. This enables them to carry out tasks autonomously.

3. Risks and management challenges

Due to their expanded capabilities and complexity, AI agents often have greater security risks and management challenges.

Since chatbots primarily engage in chats, their main risks are hallucinations and AI bias -- i.e., when a chatbot's output is incorrect, misleading or unethical. Chatbots can also potentially share sensitive information with an unauthorized user.

Because they also rely on LLMs, AI agents face the same risks of hallucination, bias and exposure of sensitive data. However, AI agents can cause greater damage because they act autonomously, especially if they receive malicious prompts or instructions. Beyond exposing sensitive data, agents can destroy information or disrupt critical services.
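One common way to limit this kind of damage is to check every action an agent proposes before it runs. The following is a minimal sketch of that idea, not a complete security control. The tool names, the `ToolRequest` type and the `authorize` function are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass

# Hypothetical tool names; a real agent would list its own tools here.
READ_ONLY_TOOLS = {"read_file", "list_files"}
DESTRUCTIVE_TOOLS = {"delete_file", "restart_service"}


@dataclass
class ToolRequest:
    """An action the LLM has asked the agent to carry out."""
    tool: str
    target: str


def authorize(request: ToolRequest, human_approved: bool = False) -> str:
    """Decide whether a requested action may run.

    Read-only tools run freely, destructive tools need explicit human
    approval, and anything not on either list is denied by default.
    """
    if request.tool in READ_ONLY_TOOLS:
        return "allow"
    if request.tool in DESTRUCTIVE_TOOLS:
        return "allow" if human_approved else "needs_approval"
    return "deny"
```

With a gate like this, a malicious prompt that persuades the LLM to request `delete_file` still cannot delete anything until a person approves the request, and a request for an unknown tool is rejected outright.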

4. Integrations

Both AI agents and chatbots can integrate with external tools and services. Agents tend to rely more extensively on integrations because the ability to connect to software tools and utilities is central to how AI agents work. In addition, standardized protocols, such as the Model Context Protocol, have made it easier for developers to integrate AI agents with a variety of external resources and with each other.

For chatbots, integrating with external resources -- beyond querying private databases to answer questions or look up data -- is often less important because chatbots don't need to run commands or carry out actions. They only need the ability to chat.

Similarities between AI agents and chatbots

Despite being fundamentally different types of AI, chatbots and AI agents share some notable similarities:

- Use of LLMs. AI agents and chatbots typically use LLMs to interpret user prompts and generate responses.

- Ability to perform multi-step actions. Chatbots and AI agents both carry out multi-step activities. Chatbots can engage in conversations involving multiple user prompts, whereas AI agents can work through various steps or perform iterative actions.

- User interfaces. Although AI agent and chatbot interfaces can vary, both typically offer some way for human users to engage with them and issue requests, often using text or voice.

- Business benefits. AI agents and chatbots help businesses create value mainly by reducing the time staff must invest in manual tasks. Chatbots do this by automating conversations and information retrieval, while AI agents do it through workflow automation.

AI agent and chatbot functionalities and overlap

AI agents and chatbots have their own techniques for completing their primary goals. These functionalities can sometimes overlap, leading to chatbots with agentic qualities or agents with chatbot qualities.

How AI agents work

Most modern AI agents include two key components: an LLM and one or more software tools or applications. Some agents also have access to special data resources, such as a user's personal folders on a PC.

Agents use an LLM to interpret prompts from human users and decide how to fulfill those requests using the tools and data available through the agent. The LLM specifies the commands or API calls to execute, and agents then carry out those instructions.

For example, an AI agent designed to manage data on a local file system might have access to OS utilities for reading, writing and copying files. If a user sends the agent a prompt like "List all PDF files that I created yesterday," the LLM would tell the agent to run the commands necessary to search for and display a list of files.
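The flow described above, in which the LLM chooses an action and the agent executes it, can be sketched in a few lines. This is an illustrative sketch under stated assumptions: `fake_llm` is a hard-coded stand-in for a real LLM call, and `list_files`, `TOOLS` and `run_agent` are hypothetical names. To stay short, it omits the "created yesterday" date filter from the example prompt.

```python
from pathlib import Path
from typing import Callable


def list_files(directory: str, suffix: str) -> list[str]:
    """Tool: return the sorted names of files in `directory` ending in `suffix`."""
    return sorted(
        p.name
        for p in Path(directory).iterdir()
        if p.is_file() and p.name.lower().endswith(suffix)
    )


# Registry of the tools this agent is allowed to use.
TOOLS: dict[str, Callable[..., list[str]]] = {"list_files": list_files}


def fake_llm(prompt: str, directory: str) -> dict:
    """Stand-in for an LLM: turn a prompt into a structured tool request.

    A real agent would send the prompt and tool descriptions to a model;
    this stub only recognizes prompts that mention PDFs.
    """
    if "pdf" in prompt.lower():
        return {"tool": "list_files",
                "args": {"directory": directory, "suffix": ".pdf"}}
    return {"tool": None, "args": {}}


def run_agent(prompt: str, directory: str) -> list[str]:
    """Ask the (fake) LLM what to do, then execute the chosen tool."""
    plan = fake_llm(prompt, directory)
    tool = TOOLS.get(plan["tool"])
    if tool is None:
        return []  # The model did not select a known tool.
    return tool(**plan["args"])
```

Calling `run_agent("List all PDF files", some_directory)` returns the PDF file names in that directory. The point of the split is that the model only specifies what to run, while the agent code does the running.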

Agents have been around in various forms for decades. Until recently, however, most were guided by rule-based systems, meaning they relied on built-in logic that told them how to react to requests by following preformulated scripts.

Modern agents, by contrast, use LLMs, which are more flexible because they can interpret a wide range of user prompts. For this reason, agentic AI has recently become a popular technology, with many businesses seeking to use AI agents to automate complex, variable-dependent tasks.

How chatbots work

Chatbots use natural language processing techniques to interpret input and generate replies.

There are many types of chatbots: smartphone virtual assistants capable of responding to voice input; text-based virtual customer service chatbots that offer support on websites; and interfaces -- like the ChatGPT website or Claude Desktop -- that enable users to interact directly with an LLM.

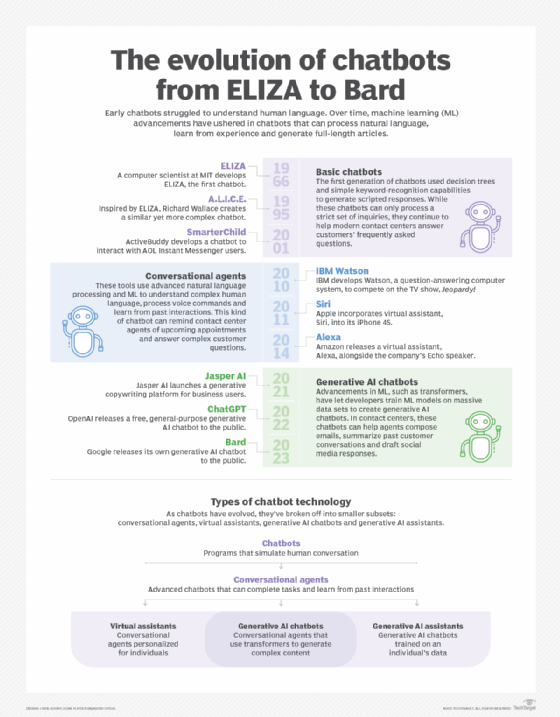

Like AI agents, chatbots have a long history. Most historians consider Eliza, a software program developed in the mid-1960s that could carry out conversations by emulating a psychotherapist, to be the first chatbot.

Historically, most chatbots relied on libraries of prebuilt responses to engage in conversations. As a result, the topics a chatbot could discuss were narrow. Today's chatbots often use LLMs, making them capable of discussing a wide range of topics. That said, some organizations might deliberately restrict the content that an LLM-powered chatbot can discuss to help reduce the risk that it will give users incorrect or irrelevant information.
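To illustrate why chatbots built on prebuilt responses could only cover narrow topics, here is a minimal sketch of the approach. The rules and reply text are invented for illustration and do not come from any real product.

```python
import re

# Each rule pairs a keyword pattern with a canned reply (all made up).
RULES = [
    (re.compile(r"\b(hours|open)\b", re.IGNORECASE),
     "We are open 9 a.m. to 5 p.m., Monday through Friday."),
    (re.compile(r"\b(refund|return)\b", re.IGNORECASE),
     "You can request a refund within 30 days of purchase."),
]

FALLBACK = "Sorry, I can't help with that topic."


def reply(message: str) -> str:
    """Return the first canned response whose pattern matches the message."""
    for pattern, response in RULES:
        if pattern.search(message):
            return response
    # Nothing matched: the bot has no prebuilt answer for this topic.
    return FALLBACK
```

Any message that falls outside the listed keywords gets the fallback reply, which is the narrowness the paragraph above describes. An LLM-powered chatbot replaces the fixed rule list with a model that can generate a reply to almost any input.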

Can a system have both agentic and chatbot qualities?

In some cases, the line between AI agents and chatbots can blur. This happens when the functionality of both a chatbot and an agent becomes available in the same tool.

For example, consider a customer service chatbot whose primary purpose is to answer questions and provide information. However, the chatbot can also carry out limited actions, like resetting a user's password or canceling an order -- tasks that go beyond conversation. Because of these action-taking features, the chatbot could also be considered an agent.

That said, tools that offer both chatbot and agent capabilities usually focus more on one type of functionality than the other, and they can thus be categorized based on their primary function. A chatbot that can perform a narrow set of actions beyond holding a conversation could still be considered a chatbot because chatting is its primary purpose.
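The customer service example can be sketched as a chatbot that falls back to conversation unless a message matches one of a small, fixed set of actions. This is a hypothetical sketch: the function names, keywords and reply strings are assumptions, the action functions only return text rather than touching a real account system, and simple keyword matching stands in for the intent detection an LLM would perform.

```python
def reset_password(user_id: str) -> str:
    """Placeholder action; a real system would call an account service."""
    return f"Password reset link sent to user {user_id}."


def cancel_order(user_id: str) -> str:
    """Placeholder action; a real system would call an order service."""
    return f"Most recent order for user {user_id} canceled."


# The narrow set of actions this chatbot may perform, keyed by keyword.
ACTIONS = {"password": reset_password, "cancel": cancel_order}


def answer_question(message: str) -> str:
    """Placeholder for the conversational path (for example, an LLM reply)."""
    return "Here is some information that might help."


def handle(message: str, user_id: str) -> tuple[str, str]:
    """Route a message to an action if a keyword matches, else just chat."""
    text = message.lower()
    for keyword, action in ACTIONS.items():
        if keyword in text:
            return ("action", action(user_id))
    return ("chat", answer_question(message))
```

Most messages take the `"chat"` path, and only two narrow requests trigger an action, which is why a tool like this would usually still be categorized as a chatbot.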

The term AI assistant sometimes refers to chatbots that perform basic actions in response to specific user requests. This separates them from AI agents, which are capable of more complex actions and can take initiative on their own.

Choosing between AI agents and chatbots

So, which type of AI-powered tool -- an AI agent or a chatbot -- is best for addressing a particular business need? The answer depends on four key factors:

1. Does the use case only require conversational AI?

If so, a chatbot is best in most cases. For use cases that require the ability to perform actions, rather than just share information, an agent might be necessary.

2. What development resources are available?

Implementing AI agents often requires more software development resources due to their greater complexity. Chatbots tend to be simpler to deploy, not just because they have fewer components but also because it's easier to find off-the-shelf chatbot tools, eliminating the need to build them in-house.

3. What are the security risks?

For use cases involving highly sensitive data or systems, chatbots might pose less risk because they have a lower potential to cause damage. That said, chatbots still come with security risks, especially if they are off-the-shelf tools. Organizations must invest in security and privacy guardrails, whether they deploy a chatbot or an agent.

4. What are the future needs?

Because AI agents can perform a wide range of tasks, they're easier to adapt over time in response to changing needs or increased complexity. In contrast, chatbots will always be limited to their primary conversation function, making them less ideal for use cases that might require additional functionality in the future.

Chris Tozzi is a freelance writer, research adviser and professor of IT and society. He has previously worked as a journalist and Linux systems administrator.