What is reinforcement learning from human feedback (RLHF)?

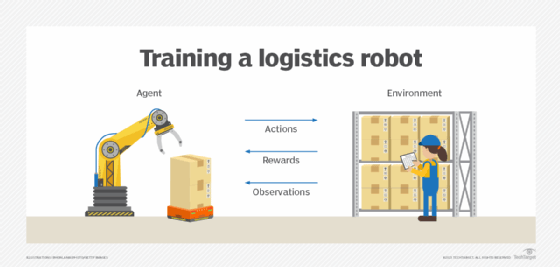

Reinforcement learning from human feedback (RLHF) is a machine learning (ML) approach that combines reinforcement learning techniques, such as rewards and comparisons, with human guidance to train an artificial intelligence (AI) agent.

Machine learning is a vital component of AI. ML trains the AI agent on a particular function, running billions of calculations and getting the agent to learn from them. Automation speeds up the training process and makes it faster than human training.

However, there are times when human feedback is vital to fine-tune interactive or generative AI. Using human feedback for generated text can better optimize the model and make it more efficient, logical and helpful.

In RLHF, human testers and users provide direct feedback to optimize the language model more accurately than self-training alone. RLHF is primarily used in natural language processing (NLP) for AI agent understanding in applications such as chatbot and conversational agents, text to speech and summarization.

This article is part of

What is GenAI? Generative AI explained

Why is RLHF important?

In regular reinforcement learning, AI agents learn from their actions through a reward function that assigns numerical scores to model outputs based on how well they align with human preferences. But the agent is essentially teaching itself, and the rewards often aren't easy to define or measure, especially with complex tasks, such as NLP. The result is an easily confused chatbot that makes no sense to the user.

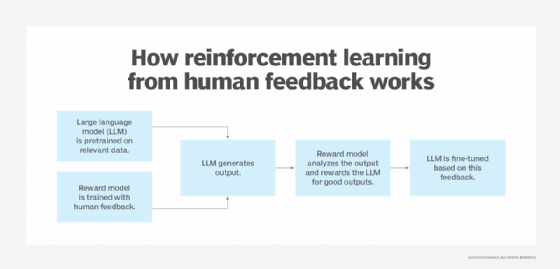

The goal of RLHF is to train language models to generate text that's both engaging and factually accurate. It does this by first creating a reward model that can predict how humans will rate the quality of the output-generated text. The reward model is used to train an ML model that can predict the human ratings of the text.

Next, the RLHF system fine-tunes the reward model by rewarding the language model for generating text the reward model rates highly. Conversely, the reward model can encourage the language model to reject certain questions or inputs. For example, language models often refuse to generate any content that advocates violence or is racist, sexist or homophobic.

How does RLHF work?

RLHF is an iterative process where human feedback is collected on an ongoing basis and used for continuous improvement of the large language model (LLM). RLHF training is done in three phases:

- Initial phase. The first phase involves selecting an existing model as the main LLM to determine and label correct behavior. A pretrained model requires less training data overall so training can be done faster.

- Human feedback. After training the initial model, human testers use various metrics to evaluate its performance. For example, human trainers provide a quality or accuracy score to various model-generated outputs. The AI system then evaluates its performance based on human feedback to create rewards for reinforcement learning.

- Reinforcement learning. The reward model is fine-tuned with outputs from the main LLM, and the LLM receives a quality score from testers. The LLM uses this feedback to improve its performance on future tasks.

What are the specific uses of RLHF?

One example of a model that uses RLHF is OpenAI's GPT-4, which powers ChatGPT, a generative AI tool that creates new content, such as chat and conversation, based on prompts. A good generative AI application should read and sound like a natural human conversation. This means NLP is necessary for the AI agent to understand how human language is spoken and written.

The GPT-3 and GPT-4 LLMs behind ChatGPT are trained using RLHF to generate conversational, real-life responses for the person making the query. They're trained on a massive amount of data to predict the next word to form a sentence. ChatGPT learns human expectations and creates humanlike responses.

Training an LLM this way goes beyond having the tool predict the next word; it helps construct an entire coherent sentence. This sets ChatGPT apart from simple chatbots, which typically provide prewritten, canned answers to questions. Because of its human-based training, ChatGPT is able to understand the intent of the question and provide natural-sounding answers that help with decision-making.

Google Gemini also uses RLHF training to refine Gemini's outputs. Its RLHF training is conducted alongside supervised fine-tuning. This is a different technique that shows the LLM examples of high-quality outputs and responses so it adjusts accordingly.

What are the benefits of RLHF?

RLHF is often used as an alternative to reinforcement learning due to the benefits of human feedback. These benefits include the following:

- Human values. AI models become cognizant of what's acceptable and ethical human behavior when humans are in the loop, as humans correct unacceptable outputs.

- Safety. RLHF steers AI models away from producing unsafe outputs. This is particularly important for systems used in autonomous vehicles and healthcare applications that could affect human safety.

- User experience. Human feedback helps an AI model understand human preferences better than preference data alone. Giving consistent feedback based on changing preferences lets the model recommend relevant products based on current preferences and trends.

- Data labeling tasks. Businesses using RLHF save time and money because human annotators aren't needed to label large training data sets when human feedback can clarify the contents of training data.

- Model knowledge. Not only are gaps in training data filled with the help of people, but AI models are also exposed to new real-world knowledge, such as business processes and workflows, as part of AI research efforts.

What are the challenges and limitations of RLHF?

RLHF also has challenges and limitations, including the following:

- Subjectivity and human error. The quality of feedback can vary among users and testers. When generating answers to advanced inquiries, people with the proper background in complex fields, such as science or medicine, should be the ones providing feedback. However, finding experts can be expensive and time-consuming.

- Wording of questions. The quality of an AI tool's answers depends on the queries. If a question is too open-ended or the user isn't clear with their instructions, the LLM might not fully understand the request. In training, proper wording is necessary for an AI agent to decipher user intent and understand context.

- Training bias. RLHF is prone to problems with machine learning bias. Asking a factual question, such as "What does 2+2 equal?" gives one answer. However, more complex questions, such as those that are political or philosophical in nature, can have several answers. AI defaults to its training answer, causing bias and a narrow scope that leaves out other answers or information.

- Scalability. Because this process uses human feedback, it's time-consuming and resource-intensive to scale the process to train bigger, more sophisticated models. Automating the feedback process might help solve this issue.

Implicit language Q-learning implementation

LLMs can be inconsistent in their accuracy for some user-specified tasks. A method of reinforcement learning called implicit language Q-learning (ILQL) addresses this.

Traditional Q-learning algorithms use language to help the model understand the task. ILQL is a type of reinforcement learning algorithm that's used to teach a model to perform a specific task, such as training a customer service chatbot to interact with a customer.

ILQL is an algorithm to teach models to perform complex tasks with the help of human feedback. Using human input in the learning process, models can be trained more efficiently than by self-learning alone.

In ILQL, the model receives a reward based on the outcome and human feedback. The model then uses this reward to update its Q-values, which determine the best action to take in the future. In traditional Q-learning, the model receives a reward only for the action outcome.

RLHF vs. reinforcement learning from AI feedback

Reinforcement learning from AI feedback (RLAIF) is different from reinforcement learning with human feedback. RLHF relies on human feedback to fine-tune output. RLAIF, on the other hand, incorporates feedback from other AI models. With RLAIF, a pretrained LLM is used to train the new model, automating and simplifying the feedback process. The downside to this practice is that, without human feedback, the new model could lack empathy, ethical considerations and other human-based capabilities.

Use cases where RLAIF might be the better approach include social media sentiment analysis and working with large volumes of customer satisfaction data because AI models analyze big data faster than humans. RLHF is better suited for smaller applications that require nuances humans can provide, such as healthcare applications.

What's next for RLHF?

RLHF technology is expected to improve as current limitations are slowly fixed. Expected improvements include the following:

- Improvement of feedback collection. There are various ways to make the feedback collection process more efficient, such as crowdsourcing in which feedback tasks are distributed among a large pool of individuals.

- Multimodal opportunities. Multimodal AI models examine different forms of inputs, such as images or audio, in addition to text. They're use is expected to increase. RLHF can provide feedback to these various types of output, such as improving detail in images.

- User-specific reward mechanisms. RLHF will continue to make AI more aware of user-specific preferences and lead to better reward mechanisms that adhere to specific users' needs.

- New learning techniques. New techniques for RLHF are expected to continue. For example, transfer learning takes human feedback on one task and applies it to other similar tasks.

- Explainability. RLHF will help advance explainable AI efforts, providing more transparent outputs and explaining the steps they performed when training models.

- New regulations. AI governance regulations and frameworks are increasing worldwide, making compliance important. Existing and future regulations will help ensure RLHF is conducted responsibly and ethically.

RLHF is one of many techniques ML professionals use. Learn more about popular ML certifications and courses that cover various reinforcement and supervised learning techniques.