The history of artificial intelligence: Complete AI timeline

From the Turing test's introduction to ChatGPT's celebrated launch, AI's historical milestones have forever altered the lifestyles of consumers and operations of businesses.

Artificial intelligence, or at least the modern concept of it, has been with us for several decades, but only in the recent past has AI captured the collective psyche of everyday business and society.

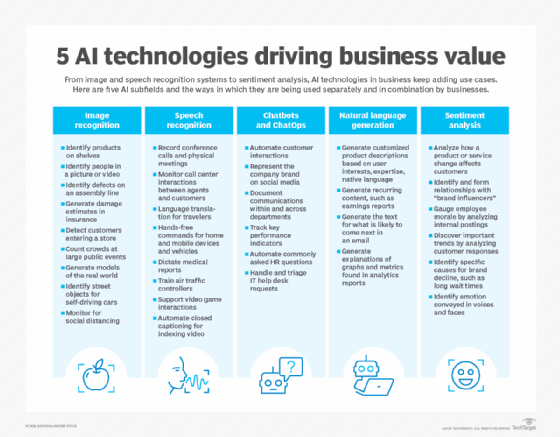

AI is about the ability of computers and systems to perform tasks that typically require human cognition. Our relationship with AI is symbiotic. Its tentacles reach into every aspect of our lives and livelihoods, from early detections and better treatments for cancer patients to new revenue streams and smoother operations for businesses of all shapes and sizes.

AI can be considered big data's great equalizer in collecting, analyzing, democratizing and monetizing information. The deluge of data we generate daily is essential to training and improving AI systems for tasks such as automating processes more efficiently, producing more reliable predictive outcomes and providing greater network security.

Take a stroll along the AI timeline

The introduction of AI in the 1950s very much paralleled the beginnings of the Atomic Age. Though their evolutionary paths have differed, both technologies are viewed as posing an existential threat to humanity.

Through the years, artificial intelligence and the splitting of the atom have received somewhat equal treatment from Armageddon watchers. In their view, humankind is destined to destroy itself in a nuclear holocaust spawned by a robotic takeover of our planet. The anxiety surrounding generative AI (GenAI) has done little to quell their fears.

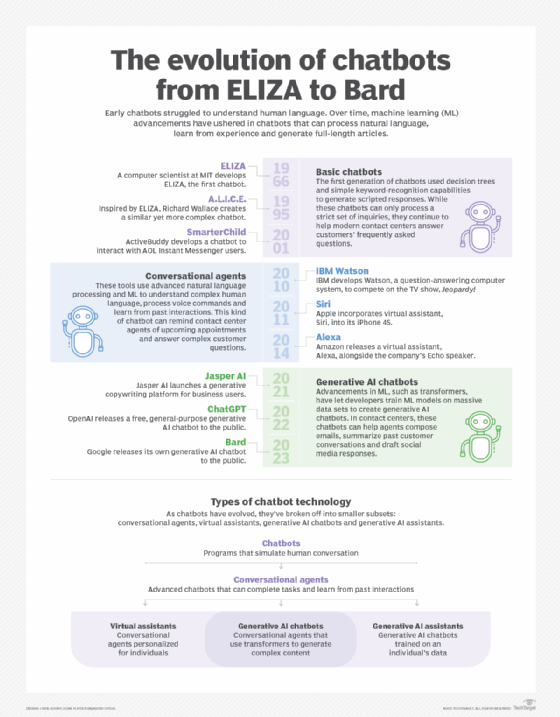

Perceptions about the darker side of AI aside, artificial intelligence tools and technologies have made incredible strides since the advent of the Turing test in 1950 -- despite intermittent roller-coaster rides caused mainly by funding fits and starts for AI research. Many of these breakthrough advancements have flown under the radar, visible mostly to academic, government and scientific research circles until the past decade or so, when AI was practically applied to the wants and needs of the masses. AI-powered products and services such as Apple's Siri and Amazon's Alexa, online shopping, social media feeds and self-driving cars have forever altered the lifestyles of consumers and operations of businesses.

Through the decades, some of the more notable developments include the following:

- Neural networks and the coining of the terms artificial intelligence and machine learning in the 1950s.

- Eliza, the chatbot with cognitive capabilities, and Shakey, the first mobile intelligent robot, in the 1960s.

- AI winter followed by AI renaissance in the 1970s and 1980s.

- Speech and video processing in the 1990s.

- IBM Watson, personal assistants, facial recognition, deepfakes, autonomous vehicles, GPT content and image creation, and lifelike GenAI clones from the 2000s onward.

1950

Alan Turing published "Computing Machinery and Intelligence," introducing the Turing test and opening the doors to what would be known as AI.

1951

Marvin Minsky and Dean Edmonds developed the first artificial neural network (ANN) called SNARC using 3,000 vacuum tubes to simulate a network of 40 neurons.

1952

Arthur Samuel developed the Samuel Checkers-Playing Program, the world's first self-learning program to play games.

1956

John McCarthy, Marvin Minsky, Nathaniel Rochester and Claude Shannon coined the term artificial intelligence in their proposal for the Dartmouth workshop, which was held that summer and is widely recognized as a founding event in the AI field.

1958

Frank Rosenblatt developed the perceptron, an early ANN that could learn from data and became the foundation for modern neural networks.

John McCarthy developed the programming language Lisp, which was quickly adopted by the AI industry and gained enormous popularity among developers.

1959

Arthur Samuel coined the term machine learning in a seminal paper explaining that the computer could be programmed to outplay its programmer.

Oliver Selfridge published "Pandemonium: A Paradigm for Learning," a landmark contribution to machine learning that described a model that could adaptively improve itself to find patterns in events.

1964

Daniel Bobrow developed STUDENT, an early natural language processing (NLP) program designed to solve algebra word problems, while he was a doctoral candidate at MIT.

1965

Edward Feigenbaum, Bruce G. Buchanan, Joshua Lederberg and Carl Djerassi developed the first expert system, Dendral, which assisted organic chemists in identifying unknown organic molecules.

1966

Joseph Weizenbaum created Eliza, one of the more celebrated computer programs of all time, capable of engaging in conversations with humans and making them believe the software had humanlike emotions.

Stanford Research Institute developed Shakey, the world's first mobile intelligent robot that combined AI, computer vision, navigation and NLP. It's the grandfather of self-driving cars and drones.

1968

Terry Winograd created SHRDLU, the first multimodal AI that could manipulate and reason out a world of blocks according to instructions from a user.

1969

Arthur Bryson and Yu-Chi Ho described a backpropagation learning algorithm to enable multilayer ANNs, an advancement over the perceptron and a foundation for deep learning.

Marvin Minsky and Seymour Papert published the book Perceptrons, which described the limitations of simple neural networks and caused neural network research to decline and symbolic AI research to thrive.

1973

James Lighthill released the report "Artificial Intelligence: A General Survey," which caused the British government to significantly reduce support for AI research.

1980

Symbolics Lisp machines were commercialized, signaling an AI renaissance. Years later, the Lisp machine market collapsed.

1981

Danny Hillis designed parallel computers for AI and other computational tasks, an architecture similar to modern GPUs.

1984

Marvin Minsky and Roger Schank coined the term AI winter at a meeting of the Association for the Advancement of Artificial Intelligence, warning the business community that AI hype would lead to disappointment and the collapse of the industry. That collapse came three years later.

1985

Judea Pearl introduced Bayesian networks for causal analysis, providing statistical techniques for representing uncertainty in computers.

1988

Peter Brown et al. published "A Statistical Approach to Language Translation," paving the way for one of the more widely studied machine translation methods.

1989

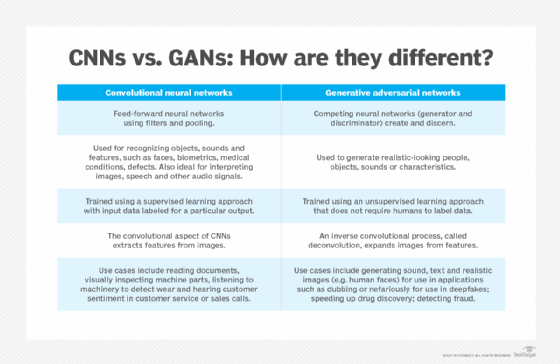

Yann LeCun, Yoshua Bengio and Patrick Haffner demonstrated how convolutional neural networks (CNNs) can be used to recognize handwritten characters, showing that neural networks could be applied to real-world problems.

1997

Sepp Hochreiter and Jürgen Schmidhuber proposed the Long Short-Term Memory recurrent neural network, which could process entire sequences of data such as speech or video.

IBM's Deep Blue defeated Garry Kasparov in a historic chess rematch, the first defeat of a reigning world chess champion by a computer under tournament conditions.

2000

University of Montreal researchers published "A Neural Probabilistic Language Model," which suggested a method to model language using feedforward neural networks.

2006

Fei-Fei Li started working on the ImageNet visual database, introduced in 2009, which became a catalyst for the AI boom and the basis of an annual competition for image recognition algorithms.

IBM Watson originated with the goal of beating a human on the iconic quiz show Jeopardy! In 2011, the question-answering computer system defeated the show's all-time (human) champion, Ken Jennings.

2009

Rajat Raina, Anand Madhavan and Andrew Ng published "Large-Scale Deep Unsupervised Learning Using Graphics Processors," presenting the idea of using GPUs to train large neural networks.

2011

Jürgen Schmidhuber, Dan Claudiu Cireșan, Ueli Meier and Jonathan Masci developed the first CNN to achieve "superhuman" performance by winning the German Traffic Sign Recognition competition.

Apple released Siri, a voice-powered personal assistant that can generate responses and take actions in response to voice requests.

2012

Geoffrey Hinton, Ilya Sutskever and Alex Krizhevsky introduced a deep CNN architecture that won the ImageNet challenge and triggered the explosion of deep learning research and implementation.

2013

China's Tianhe-2 doubled the world's top supercomputing speed at 33.86 petaflops, retaining the title of the world's fastest system for the third consecutive time.

DeepMind introduced deep reinforcement learning, a CNN that learned from rewards and mastered games through repetition, surpassing human expert levels.

Google researcher Tomas Mikolov and colleagues introduced Word2vec to automatically identify semantic relationships between words.

2014

Ian Goodfellow and colleagues invented generative adversarial networks, a class of machine learning frameworks used to generate photos, transform images and create deepfakes.

Diederik Kingma and Max Welling introduced variational autoencoders to generate images, videos and text.

Facebook developed the deep learning facial recognition system DeepFace, which identifies human faces in digital images with near-human accuracy.

2016

DeepMind's AlphaGo defeated top Go player Lee Sedol in Seoul, South Korea, drawing comparisons to the Kasparov chess match with Deep Blue nearly 20 years earlier.

Uber started a self-driving car pilot program in Pittsburgh for a select group of users.

2017

Stanford researchers published work on diffusion models in the paper "Deep Unsupervised Learning Using Nonequilibrium Thermodynamics." The technique provides a way to reverse-engineer the process of adding noise to a final image.

Google researchers developed the concept of transformers in the seminal paper "Attention Is All You Need," inspiring subsequent research into tools that could automatically parse unlabeled text into large language models (LLMs).

British physicist Stephen Hawking warned, "Unless we learn how to prepare for, and avoid, the potential risks, AI could be the worst event in the history of our civilization."

2018

Developed by IBM, Airbus and the German Aerospace Center DLR, Cimon was the first robot sent into space to assist astronauts.

OpenAI released GPT (Generative Pre-trained Transformer), paving the way for subsequent LLMs.

Groove X unveiled a home mini-robot called Lovot that could sense and affect mood changes in humans.

2019

Microsoft launched the Turing Natural Language Generation generative language model with 17 billion parameters.

Google AI and Langone Medical Center's deep learning algorithm outperformed radiologists in detecting potential lung cancers.

2020

The University of Oxford developed an AI test called Curial to rapidly identify COVID-19 in emergency room patients.

OpenAI released the GPT-3 LLM, consisting of 175 billion parameters, to generate humanlike text.

Nvidia announced the beta version of its Omniverse platform to create 3D models in the physical world.

DeepMind's AlphaFold system won the Critical Assessment of Protein Structure Prediction protein-folding contest.

2021

OpenAI introduced the Dall-E multimodal AI system that can generate images from text prompts.

The University of California, San Diego, created a four-legged soft robot that functioned on pressurized air instead of electronics.

2022

Google software engineer Blake Lemoine was fired for revealing secrets of Lamda and claiming it was sentient.

DeepMind unveiled AlphaTensor "for discovering novel, efficient and provably correct algorithms."

Intel claimed its FakeCatcher real-time deepfake detector was 96% accurate.

OpenAI released ChatGPT on Nov. 30 to provide a chat-based interface to its GPT-3.5 LLM, signaling the democratization of AI for the masses.

2023

OpenAI announced the GPT-4 multimodal LLM that processes both text and image prompts. Microsoft integrated ChatGPT into its search engine Bing, and Google released its GenAI chatbot Bard.

Elon Musk, Steve Wozniak and thousands more signatories urged a six-month pause on training "AI systems more powerful than GPT-4."

2024

Generative AI tools continued to evolve rapidly with improved model architectures, efficiency gains and better training data. Intuitive interfaces drove widespread adoption, even amid ongoing concerns about issues such as bias, energy consumption and job displacement.

Google's rebranding of its Bard GenAI chatbot got off to a rocky start, as the newly named Gemini generated heavy criticism for producing inaccurate images of multiple historic figures, including George Washington and other Founding Fathers, as well as numerous other factual inaccuracies. Google's debut of AI Overviews, which provides quick summaries of topics and links to documents for deeper research, intensified concerns over its search engine monopoly and raised additional questions among traditional and online publishers over the control of intellectual property.

The European Parliament adopted the Artificial Intelligence Act with provisions to be applied over time, including codes of practice, banning AI systems that pose "unacceptable risks" and transparency requirements for general-purpose AI systems.

Colorado became the first state to enact a broad-based regulation on AI usage, known as the Colorado Artificial Intelligence Act, requiring developers of AI systems "to use reasonable care to protect consumers from any known or reasonably foreseeable risks of algorithmic discrimination in the high-risk system." The California legislature passed several AI-related bills, defining AI and regulating the largest AI models, GenAI training data transparency, algorithmic discrimination and deepfakes in election campaigns. More than half of U.S. states have proposed or passed some form of targeted legislation citing the use of AI in political campaigns, schooling, crime data, sexual offenses and deepfakes.

Delphi launched GenAI clones, offering users the ability to create lifelike digital versions of themselves, ranging from likenesses of company CEOs sitting in on Zoom meetings to celebrities answering questions on YouTube.

2025 and beyond

Corporate spending on generative AI is expected to surpass $1 trillion in the coming years. Bloomberg predicts that GenAI products "could add about $280 billion in new software revenue driven by specialized assistants, new infrastructure products and copilots that accelerate coding."

Riding the coattails of GenAI, artificial intelligence's continuing technological advancements and influences are in the early innings of applications in business processes, autonomous systems, manufacturing, healthcare, financial services, marketing, customer experience, workforce environments, education, agriculture, law, IT systems and management, cybersecurity, and ground, air and space transportation.

Gartner reported that by 2026, organizations that "operationalize AI transparency, trust and security will see their AI models achieve a 50% improvement in terms of adoption, business goals and user acceptance." Yet Gartner analyst Rita Sallam revealed at July's Data and Analytics Summit that corporate executives are "impatient to see returns on GenAI investments … [and] organizations are struggling to prove and realize value." As a result, the research firm predicted that at least 30% of GenAI projects will be abandoned by the end of 2025 because of "poor data quality, inadequate risk controls, escalating costs or unclear business value."

Today's tangible developments -- some incremental, some disruptive -- are advancing AI's ultimate goal of achieving artificial general intelligence. Along these lines, neuromorphic processing shows promise in mimicking human brain cells, enabling computer programs to work simultaneously instead of sequentially. Amid these and other mind-boggling advancements, issues of trust, privacy, transparency, accountability, ethics and humanity have emerged and will continue to clash and seek levels of acceptability among business and society.

The current patchwork of targeted U.S. state and local legislation already proposed or enacted might well lead to a broad-based bipartisan national law that specifically regulates, or reins in, the development, deployment and application of AI.

Editor's note: This article was updated to reflect the latest developments and trends in artificial intelligence.

Ron Karjian is an industry editor and writer at TechTarget covering business analytics, artificial intelligence, data management, security and enterprise applications.