What are autonomous AI agents and which vendors offer them?

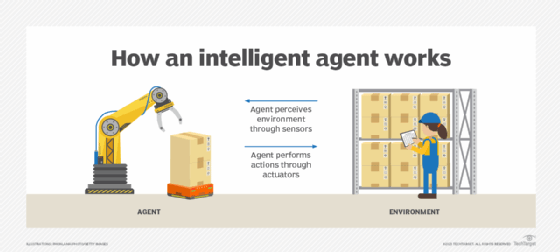

Autonomous artificial intelligence (AI) agents are intelligent systems that can perform tasks for a user or system without human intervention. They're a specific type of intelligent agent characterized by their ability to operate independently, make decisions and take actions without requiring ongoing human guidance.

A conventional software agent is a goal-oriented program that reacts to its environment in limited autonomous ways to perform a function for an end user or another program. Intelligent agents are typically more advanced: They can perceive their environment, process data and make decisions with some level of adaptability. Autonomous AI agents, by comparison, are designed to operate independently with a higher level of adaptability, which enables them to make more complex decisions with little to no human influence.

Agents can typically activate and run themselves without input from human users. They can also be used to initiate or monitor other programs and applications. Autonomous AI agents typically use large language models (LLMs) and external sources like websites or databases. They can also continuously improve using self-learning techniques. Autonomous AI agents can operate in dynamic environments, making them ideal for complex tasks like enterprise customer service.

Agent-based computing and modeling have existed for decades, but with recent innovations in generative AI, researchers, vendors and hobbyists are building more autonomous AI agents. While these efforts are still in their early stages, the long-term goal is to enhance efficiency, streamline workflows and advance processes. For example, autonomous AI agents could be used in tandem with robotic process automation (RPA) bots to execute simple tasks and eventually collaborate on whole processes.

Features of autonomous AI agents

Common features of autonomous AI agents include the following:

- Increased autonomy. Autonomous AI agents have increased levels of autonomy compared to other agents. This means they can operate in more complex environments.

- Adaptability. Autonomous AI agents can react to and learn from their environment, meaning they can interpret data, navigate complex obstacles and adjust their behavior.

- Access to tools. Autonomous AI agents can also access tools and data sources, such as customer interaction data, external databases, the web and sensor data.

- Memory storage. Autonomous AI agents with built-in memory can learn from their past experiences.

- Social skills. Autonomous AI agents can understand naturally written human language, as they're typically built on LLMs and natural language processing (NLP).
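
To make the tool-access and memory features more concrete, the following is a minimal Python sketch, not a reference implementation. The `Agent` class, its method names and the `lookup_order` function are hypothetical names chosen for illustration, and the in-memory dictionary stands in for an external database.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy agent holding a tool registry and a memory of past tool calls."""

    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    memory: List[str] = field(default_factory=list)

    def register_tool(self, name: str, fn: Callable[[str], str]) -> None:
        # Access to tools: make a callable available to the agent by name.
        self.tools[name] = fn

    def use_tool(self, name: str, query: str) -> str:
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        result = self.tools[name](query)
        # Memory storage: record the call so later steps can refer back to it.
        self.memory.append(f"{name}({query!r}) -> {result}")
        return result


def lookup_order(order_id: str) -> str:
    """Hypothetical stand-in for a query against an external database."""
    orders = {"A100": "shipped", "A101": "processing"}
    return orders.get(order_id, "not found")


agent = Agent()
agent.register_tool("lookup_order", lookup_order)
status = agent.use_tool("lookup_order", "A100")
print(status)
print(agent.memory)
```

In a production agent, the tool would typically be chosen by an LLM rather than hard-coded, and memory would likely live in a persistent store rather than a Python list.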

How do autonomous agents work?

Autonomous AI agents must reliably perform complex context-dependent tasks, maintain long-term memory and address ethical situations and inherent biases.

Autonomous AI agents typically operate using a combination of technologies, such as machine learning (ML), NLP and real-time data analysis. The agents produce responses based on LLMs and the data used to train them.

In operation, autonomous AI agents generally go through the following steps:

- The process typically starts with a data collection stage, where the agent gathers data from various sources. This includes data from customer interactions, external databases, sensors or other tools such as web searches, application programming interfaces (APIs) or other agents so it can better perceive its environment. This step is important for the agent to properly understand the context of each task it's given and for it to make properly informed decisions.

- The next step is a decision-making process where the agent analyzes the collected data and begins identifying patterns and predicting outcomes using ML processes.

- After the agent makes a decision, it moves on to an execution step, where it takes one or more sequential actions to reach its goal.

- Finally, in a learning step, the agent updates its knowledge base and refines its behavior based on the outcome. This helps the agent improve over time.
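
The four steps above can be sketched as a simple loop. This is an illustrative toy under stated assumptions: the `ThermostatAgent` class and the `run` function are hypothetical, a fixed rule stands in for the ML model in the decision step, and the environment is simulated as a one-degree temperature change per action.

```python
from typing import Dict, List, Tuple


class ThermostatAgent:
    """Toy agent that tries to keep a room near a target temperature."""

    def __init__(self, target: float) -> None:
        self.target = target
        # Knowledge base of (before, action, after) records.
        self.history: List[Tuple[float, str, float]] = []

    def collect(self, sensor_reading: float) -> Dict[str, float]:
        # Step 1: gather data about the environment.
        return {"temperature": sensor_reading}

    def decide(self, observation: Dict[str, float]) -> str:
        # Step 2: analyze the data and choose an action.
        # Assumption: a fixed rule replaces the ML model here.
        error = self.target - observation["temperature"]
        if error > 0.5:
            return "heat"
        if error < -0.5:
            return "cool"
        return "idle"

    def execute(self, action: str, temperature: float) -> float:
        # Step 3: act on the (simulated) environment.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        return temperature + delta

    def learn(self, before: float, action: str, after: float) -> None:
        # Step 4: store the outcome so future decisions could draw on it.
        self.history.append((before, action, after))


def run(agent: ThermostatAgent, start: float, steps: int) -> List[str]:
    """Run the collect, decide, execute, learn cycle a fixed number of times."""
    temperature = start
    actions: List[str] = []
    for _ in range(steps):
        observation = agent.collect(temperature)
        action = agent.decide(observation)
        new_temperature = agent.execute(action, temperature)
        agent.learn(temperature, action, new_temperature)
        temperature = new_temperature
        actions.append(action)
    return actions


agent = ThermostatAgent(target=21.0)
actions = run(agent, start=18.0, steps=4)
print(actions)
```

The learning step here only records outcomes. A real agent would use such records to adjust its decision logic, for example by retraining or fine-tuning a model.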

Types of AI autonomous agents

AI autonomous agents are classified as follows, according to their range of functions and capabilities and their degree of intelligence:

- Model-based reflex agents. These agents use their current perception and memory to build a comprehensive view of their environments. They can store data in memory, and the model is updated as the agent receives new information.

- Goal-based agents. These agents expand on the information that model-based agents store by also including goal information or information about desirable situations.

- Utility-based agents. These agents are similar to goal-based agents but add a utility measurement that rates possible scenarios based on desired results. They then choose the action that maximizes desired outcomes. Examples of rating criteria include progress toward a goal, probability of success and required resources.

- Learning agents. Using an additional learning algorithm, these agents can gradually improve and become more knowledgeable about their environment. Feedback on performance is measured and determines how the agent's behavior should change over time.
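
The difference between goal-based and utility-based agents can be illustrated with a short Python sketch. Everything in it is an assumption made for illustration: the `Option` fields, the customer service options, the `cost_weight` value and the utility formula itself, which simply rewards probability of success and penalizes required resources.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Option:
    name: str
    reaches_goal: bool
    success_probability: float  # 0.0 to 1.0
    cost: float                 # resources required, in arbitrary units


def goal_based_choice(options: List[Option]) -> Optional[Option]:
    """Return the first option that satisfies the goal, ignoring quality."""
    for option in options:
        if option.reaches_goal:
            return option
    return None


def utility(option: Option, cost_weight: float = 0.1) -> float:
    """Hypothetical utility: reward likely success, penalize resource use."""
    if not option.reaches_goal:
        # Never preferred over an option that reaches the goal.
        return float("-inf")
    return option.success_probability - cost_weight * option.cost


def utility_based_choice(options: List[Option]) -> Option:
    """Return the option with the highest utility score."""
    return max(options, key=utility)


options = [
    Option("escalate_to_human", True, 0.99, cost=8.0),
    Option("send_faq_link", True, 0.60, cost=1.0),
    Option("issue_refund", True, 0.90, cost=3.0),
    Option("do_nothing", False, 0.0, cost=0.0),
]

goal_pick = goal_based_choice(options)
utility_pick = utility_based_choice(options)
print(goal_pick.name if goal_pick else None)
print(utility_pick.name)
```

With these made-up numbers, the goal-based agent settles for the first option that reaches the goal, while the utility-based agent picks the option with the best balance of success probability and cost.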

Pros and cons of autonomous AI agents

Autonomous AI agents offer the following benefits:

- Cost. AI generally does well with repetitive tasks and can free up human operators to work on more complex or creative tasks.

- Customer experience. Autonomous AI agents enable more personalized and responsive interactions when put in customer-facing positions.

- Task automation. Compared with other agent-based software, they can automate more of the tasks that would otherwise require a human.

- Response quality. They can provide fast, comprehensive responses.

However, these types of agents also come with some challenges, including the following:

- Compute requirements. The training and operation of autonomous AI agents can be complex and require more computing resources than other types of agents.

- Data privacy and ethics. ML models can sometimes exhibit biases and produce inaccurate results and, depending on their use, might have access to user data. Ensuring that user data is protected and that ethical decision-making processes are followed can be difficult for some organizations.

- Multiagent dependencies. Agents in a multiagent system are often built on the same underlying models, so they commonly share the same issues or weaknesses.

- Technical complexity. Creating and deploying AI agents requires specific skills and expertise, which might be difficult for smaller organizations to acquire.

- True understanding. Autonomous AI agents often struggle in scenarios requiring deep contextual understanding, mainly because of underlying LLM and compute limitations.

Examples and uses of autonomous agents

Innovator and venture capitalist Yohei Nakajima generated heightened interest in autonomous AI agents when he created BabyAGI in March 2023 and published the code on GitHub as a proof of concept. Since then, many other open source autonomous AI agent tools have been released, including the following:

- AgentGPT. This platform enables users to perform various tasks, including simple research and deploying autonomous AI agents.

- DeepWisdom MetaGPT. This framework available on GitHub can be used for code generation, project planning and prototyping.

- Forethought AutoChain. This developer platform makes its code available via GitHub.

- LlamaIndex. This open source data orchestration framework can be used for building LLM applications.

- Microsoft Prompt flow. Available on GitHub as a hosted Microsoft project, this platform can be used to develop LLM-based AI applications.

Vendors

Several vendors, including the following, also offer autonomous AI products:

- OpenAI released its Assistants API in November 2023 to support AI-assisted tasks running in the cloud and on mobile devices.

- Salesforce announced Agentforce 2.0 in December 2024. It is a platform of autonomous bots for service, sales, e-commerce and marketing. This version includes the proprietary Atlas Reasoning Engine, which helps Agentforce retrieve data, reason and act.

- UiPath released Autopilot in 2023. Its RPA infrastructure blends generative and specialized AI to run automated processes across the enterprise.

History of autonomous AI agents

One of the earliest examples of an autonomous AI agent dates back to Stanford Research Institute's development of Shakey the Robot in 1966. The focus was on creating an entity that could respond to assigned tasks by setting appropriate goals, perceiving the environment, generating a plan to achieve those goals and executing the plan while adapting to the environment. Shakey was designed to operate as an embedded system over an extended period, performing a range of different but related tasks.

Scaling this approach was difficult. Until recently, most methods for building autonomous agents required manual knowledge engineering efforts involving explicit coding of low-level skills and models to drive agent behavior. ML was gradually used to learn focused elements such as object recognition or obstacle avoidance for mobile robots.

Recent innovations in applying LLMs to understand tasks have yielded an entirely different and more automated approach. The focus now is on synthesizing and executing a solution to a task instead of supporting a continuously operating agent that dynamically sets its own goals. These newer agents are also designed to use LLMs for planning and problem-solving.

These innovations are a departure from previous work on enterprise assistants that lacked adaptability, including expert systems, autonomous agents, intelligent agents, decision support systems, RPA bots, cognitive agents and self-learning algorithms. Autonomous agents that use LLMs are getting better at dynamic learning and adaptability, understanding context, making predictions and interacting in a more human-like manner. Agents, therefore, can operate with minimal human intervention and adapt to new information and environments in real time.

Autonomous AI's future

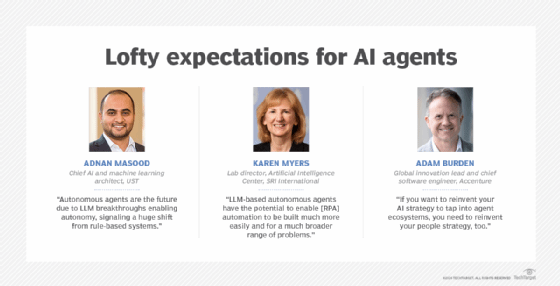

Autonomous AI can be viewed as an evolution of RPA, which is focused on point tasks. LLM-based autonomous agents have the potential to make a broader range of automation capabilities easier to build.

AI might eventually be powered by autonomous agents, leading to fully autonomous AI operating systems that support more adaptable systems aligned with company goals, compliance, legal standards and human values.

When the time comes to deploy chatbot or LLM agent technologies more broadly without involving a human for validation, caution is necessary. Agents aren't yet fully reliable and can still generate inaccurate responses, meaning precautions and checks must be in place.
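
One possible form for such a check is a gate that routes risky actions to a human before they run. The Python sketch below is only an illustration of that idea: the `ProposedAction` fields, the 0.8 confidence threshold and the `approve` callback are all hypothetical, and a real deployment would need its own risk criteria.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0
    reversible: bool   # whether the action can be undone afterward


def needs_human_review(action: ProposedAction, min_confidence: float = 0.8) -> bool:
    """Flag actions that are irreversible or that the agent is unsure about."""
    return (not action.reversible) or action.confidence < min_confidence


def dispatch(action: ProposedAction, approve: Callable[[ProposedAction], bool]) -> str:
    """Execute low-risk actions directly; send the rest to a human reviewer."""
    if needs_human_review(action):
        return "executed_after_review" if approve(action) else "rejected"
    return "executed"


def always_reject(action: ProposedAction) -> bool:
    """Hypothetical reviewer that rejects everything, used for demonstration."""
    return False


routine = ProposedAction("reply with store hours", confidence=0.95, reversible=True)
risky = ProposedAction("issue a $500 refund", confidence=0.95, reversible=False)

print(dispatch(routine, approve=always_reject))
print(dispatch(risky, approve=always_reject))
```

Here the routine reply runs without review, while the irreversible refund is held until a person approves it.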

Businesses need to prioritize data security and ethical AI practices. They must also develop mechanisms to ensure the decisions these autonomous agents make align with organizational values and adhere to legal standards. That requires addressing issues surrounding algorithmic fairness, accountability, auditability, transparency, explainability, security and bias mitigation.

On the dark side, autonomous AI agents might fuel more resilient, dynamic and self-replicating malicious bots to launch denial-of-service attacks, hack enterprise systems, drain bank accounts and undertake disinformation campaigns. Businesses must also consider how human workers are affected, including roles and responsibilities. Humans will have an important role in creating, testing and managing agents and deciding when and where internal agents should be allowed to run independently.

Many enterprises today are rolling out chatbots that handle multiple tasks. A future goal is to develop an ecosystem of domain-specific agents that are optimized for different tasks. An Accenture "Technology Vision 2024" report found that 96% of executives worldwide agreed that AI agent ecosystems will represent "a significant opportunity for their organizations in the next three years."

Adam Burden, global innovation lead and chief software engineer at Accenture, said, "We believe that over the next decade, we will see the rise of entire agent ecosystems, large networks of interconnected AI that will push enterprises to think about their intelligence and automation strategy in a fundamentally different way."

He sees a future in which billions of autonomous agents connect with each other and perform tasks, significantly altering the landscape of commerce and customer care and amplifying everyone's abilities. AI, for example, is currently used to detect manufacturing flaws, but connected agents eventually could enable fully automated, lights-out production of goods at factories without requiring humans on-site. "This shift," Burden noted, "is driving intense interest in autonomous AI agents now."

As the technologies continue to evolve, the quality of autonomous AI agents will continue to improve.