
Technology outlook: Service providers cite four key trends

What's on tap for 2024? The partner ecosystem expects to help customers scale generative AI projects, work with smaller models and play a bigger role at the edge.

IT service providers said they are looking at 2024 as a year for scaling generative AI, focusing on customers' data challenges, and pushing more workloads to the edge.

The technology outlook, based on interviews with consultants, systems integrators and industry analysts, emphasizes the wider and more effective use of promising technologies. Partners' enterprise clients have yet to emerge from the investigation stage of generative AI, nor have they fully exploited older developments such as edge compute. Service providers, in their advisory capacity, can help customers advance the state of the art.

Another important theme: finding the best way to harness related technologies to achieve business goals. Expect IT service providers to play a pivotal role in orchestrating AI models, curated datasets, data pipelines, cloud resources and the increasingly potent edge.

Read on for the details on four technology trends poised to occupy the partner ecosystem in 2024.

Scaling GenAI

Enterprise use of generative AI in 2023 was largely experimental as organizations learned about the technology and its challenges, explored use cases and pursued some initial deployments. But IT services companies are preparing for broader generative AI adoption.

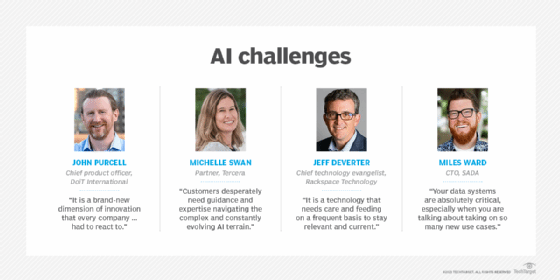

"2024 is about scale for us," said Jeff DeVerter, chief technology evangelist at Rackspace Technology, a multi-cloud solutions provider based in San Antonio. "There are solutions we are now delivering in the tens. And we're looking forward to doing them in the hundreds, concurrently and in a global fashion."

Gearing up for expected customer demand, Rackspace is partnering with Dell Technologies and NVIDIA to build private cloud infrastructure for deploying generative AI. Rackspace also works with AWS, Microsoft Azure and Google Cloud for public cloud projects. Rackspace has cataloged more than 500 AI use cases across more than a dozen industries, DeVerter said.

"The tidal wave of AI, the great catalyst of transformation, is only going to accelerate," he added.

John Purcell, chief product officer at DoiT International, a cloud cost management technology and service provider based in Santa Clara, Calif., also anticipates a generative AI transition in 2024 following a year of generative AI exploration in 2023.

"It is a brand-new dimension of innovation that every company in the tech space and every company that touches customers had to react to and start experimenting with to understand what role it should play," he said.

Accordingly, customers contacting DoiT in the first half of 2023 wanted to learn how to get started with generative AI. In the second half of the year, customers sought to identify how they could harness the technology to meet business goals.

Next year, customers will move on to "operationalizing" generative AI within their organizations, Purcell said. This stage will see the technology transform "from experiment to where we start to see it show up in the way that customers provide services to their customers," he added.

Customers in the coming year will also need to work out a strategy for not only adopting generative AI but also sustaining it over time, according to DeVerter. He refers to this phase as the industrialization of generative AI, which includes devising processes for the ongoing optimization and refinement of AI models.

"It is a technology that needs care and feeding on a frequent basis to stay relevant and current," he said.

Consultancies focusing on AI will see a significant surge of attention in the coming year, said Michelle Swan, partner at Tercera, a company that invests in cloud professional services firms.

"Customers desperately need guidance and expertise navigating the complex and constantly evolving AI terrain," she said, noting that consultants can help them differentiate between genuine capabilities and marketing hype.

Pursuing smaller language models

As more customers take a deeper dive into generative AI, they might begin to look beyond the large language models from providers such as Anthropic and OpenAI.

GP Bullhound, a technology advisory and investment firm, predicts that the next wave of models will be smaller. While bigger, in terms of parameter count, has long been considered better, LLMs are now bumping into financial and performance limitations, according to the company's "Technology Predictions 2024" report.

"Today, the cost of these large language models is quite significant," said Alec Dafferner, partner and head of U.S. Advisory at GP Bullhound.

Other issues with LLMs include slow response times and exponentially growing compute requirements, said Robert Engels, CTO AI for Capgemini Insights and Data, who also heads the consulting company's AI Futures Lab.

"It is not sustainable," he noted during a December webinar on technology trends. "We will see an inflection point in 2024 toward smaller models."

Sector and domain focus

Smaller models let organizations address specific tasks and fine-tune models at lower cost and with higher quality, Engels said. He said businesses will be able to deploy sector-specific models that capture a "tribal language" within medicine or engineering, for instance, or domain-specific models for a particular category of software coding.

Arun Ramchandran, president and global head of consulting and generative AI practice at Hexaware, said he expects to see increasing demand for smaller models focused on specific fields such as the legal industry. Hexaware, headquartered in Mumbai with North American operations based in Iselin, N.J., is a technology and business process services company.

"There will be a trend toward more domain-specific, private smaller models," Ramchandran said.

Those models will offer higher performance and greater efficiency, compared with the larger foundation models, he added.

David Guarrera, principal at EY Americas Technology Consulting and leader of EY's generative AI initiatives, also noted the trend toward much smaller language models -- specifically open source models, many of which stem from Meta's Llama 2 model.

Such derivatives can get organizations 60% to 80% of where they want to go for most applications, he said. The large foundation models cover more ground, but the gap is closing.

In the meantime, the open source models are easier to fine-tune and tailor. They also cost less to run because they are smaller -- a crucial consideration as businesses transition from prototyping to enterprise generative AI deployment, he noted.

"As soon as we really begin thinking about cost at scale, the discussion of open source models is going to very naturally follow," Guarrera said.

The price/performance calculus

A business must determine whether to use a foundation model suitable for many use cases or a smaller model that offers 80% coverage, can be tailored to its specific use case and costs an order of magnitude less.

"That's going to be a calculation many organizations will start to make," Guarrera said.

While enterprises next year may start paying more attention to Llama derivatives such as Stanford Alpaca, Koala and Vicuna, they might also find that the large language models themselves shrink.

"The size of those will come down," Dafferner said.

For example, GP Bullhound predicts that OpenAI's GPT-5 will be smaller than GPT-4, which saw parameters soar compared with its predecessor.

But while the size of the foundation models might shrink, their influence in 2024 probably won't.

"Almost everyone we are working with is using some sort of large foundation model," Guarrera said, noting his company does most of its client work with GPT-4.

"The large language models cover tasks that we need to cover," Engels added.

Focusing on data management

Data is a critical hurdle on the path toward greater scale and the eventual industrialization of generative AI. Industry executives expect to see customers focus on data as they move toward enterprise deployment of generative AI.

"Without a good, solid data plan, there is no AI," DeVerter said.

But organizations might stumble when it comes to doing the prep work for AI deployment -- consolidating and cleansing data so it can be used to train a model, he noted.

Guarrera said establishing good data practices and a data-first culture has been an essential goal for the past 20 years. The latest wave of AI technology, however, raises the stakes for getting the data house in order.

"It has become even more important as customers are rushing to say, 'We have to capture the value of AI right now,'" he said.

In addition to data quality and culture, organizations will also be looking to modernize their data infrastructure.

"Your data systems are absolutely critical, especially when you are talking about taking on so many new use cases," said Miles Ward, CTO at SADA, a business and technology consulting firm recently acquired by Insight Enterprises.

Flexibility will be the watchword for such systems as businesses look to use generative AI across a range of corporate functions and business processes. In that context, organizations will move from static, perpetual-license data warehouse software to cloud data warehouses, Ward said. Cloud data warehouses, which autoscale based on changing query volumes and traffic patterns, offer greater flexibility than traditional warehouses.

Other technologies to watch in 2024

Augmented reality/virtual reality. The consumer headset market is expected to grow next year with Apple's entry into the market. But AR will also enter the business mainstream in areas such as manufacturing and supply chain management, noted Jim Remsik, founder and CEO of Flagrant, an IT services company.

Post-quantum cryptography. This field will reach a "pivotal moment" in 2024, according to Julian van Velzen, CTIO and head of Capgemini's Quantum Lab. He cited the expected release next year of the National Institute of Standards and Technology's final standards for quantum-resistant algorithms. The standards address concerns that quantum computers will be able to break conventional public-key encryption algorithms.

Industry clouds. These platforms, geared to vertical markets, are just making their way into enterprises, but Gartner forecasts significant expansion. The market research company projects more than 70% of enterprises will use industry cloud platforms by 2027, compared with 15% in 2023.

Growing edge computing

Edge computing is hardly new -- it turned up on TechTarget Editorial's 2019 top tech trends list. What's different heading into 2024 is that edge devices are more powerful and the tools for managing such devices at scale are more readily available. As a result, industry executives predict a resurgence of the edge next year, with the potential for running more workloads, including AI.

Businesses have spent the past few decades "instrumenting" everything at the edge, said Juan Orlandini, CTO of North America at Insight Enterprises, a solutions integrator based in Chandler, Ariz. In retail, for example, everything from refrigerator doors to store shelves to gas pumps has an IP address. But the ability to make full use of those connected devices has been lacking.

"What we haven't had is the capability to really do intelligent things with them," Orlandini said.

Innovation in edge devices

Obstacles have included insufficient compute resources, reliance on non-IT personnel to maintain devices and expensive bandwidth. The edge, however, is in for significant changes in 2024 and beyond. Orlandini said several factors are converging to propel the shift.

For one, edge compute devices have evolved to pack more power in a smaller space. The same amount of power that once required five to seven full racks of equipment can now fit into half a rack, Orlandini said. In addition, newer devices manage themselves. Vendors such as Dell, Lenovo and HPE provide offerings that make devices easier to manage in fleet deployments of hundreds or even tens of thousands of devices, he noted.

"Managing that large number of devices was a science experiment if you did it in the past," Orlandini said. "It is now becoming a little bit more commoditized."

Bandwidth has also become more affordable. Technologies such as Secure Access Service Edge have improved the industry's understanding of security at the edge, he added.

Smaller AI models residing at the edge

The more-capable edge appears well-timed with the shrinkage of generative AI models. The smaller, specialized models require less hardware, which makes them more suitable for deployment at the periphery, Engels said.

"We will see integration of these models at the edge," he noted.

AI models sized for edge deployment, generative AI or otherwise, can address latency problems with workloads, Orlandini said. A manufacturing plant that wants to use AI for quick decision-making should, ideally, not have to rely on a model deployed in a centralized IT resource, such as a public cloud or centralized data center.

"Running AI models at the edge is now becoming very possible," he said. "You want to have local autonomy to make those very important decisions."

Ward said he believes a central system is probably more efficient for building and training an AI model, while an edge system can handle inferencing as real-world data runs through the model. He expects to see a balancing act play out next year as organizations weigh the advantages of centralized versus edge systems.

Ward framed the task ahead as "trying to understand how edge computing and cloud computing work together to build the experience that customers expect."