How the channel can help fight bias in AI applications

Customers' AI applications pose the risk of producing prejudiced results. Channel firms, however, can step in to educate organizations and reduce harmful biases within AI systems.

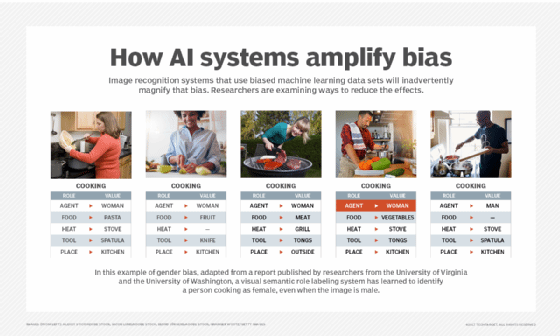

Run a Google search on "bias in AI" and you'll find all kinds of stories about what can -- and does -- happen when systems become automated and the human element is removed. Of course, today, AI and machine learning are embedded in myriad technologies, and while they no doubt play a positive role, biased data is often problematic.

As AI applications become more prevalent, channel firms can play a role in helping customers mitigate algorithmic bias. Biased AI ranked as the second-biggest AI-related ethical concern in Deloitte's 2018 "State of AI in the Enterprise" study, behind AI's power to help create and spread false information.

"Today, algorithms are commonly used to help make many important decisions, such as granting credit, detecting crime, and assigning punishment," the report notes. "Biased algorithms, or machine-learning models trained on biased data, can generate discriminatory or offensive results."

Consequently, emerging technologies like AI no longer spark purely technical discussions; they are factoring into C-suite discussions across organizations, said Vinodh Swaminathan, principal, innovation and enterprise solutions, at KPMG. KPMG is a global professional services provider with U.S. headquarters in New York City.

"Leaders are looking for trusted advisors who can provide independent perspectives [on] how responsibly customers have deployed AI in their enterprise," Swaminathan said.

Considerations when deploying AI applications

Not surprisingly, vendors said AI bias does not inhibit organizations from deploying AI systems. The key is ensuring the data being put into a system is vetted and any bias is flagged.

Manoj Madhusudhanan

"Existing data is biased, because [an] existing system is biased," said Manoj Madhusudhanan, global head of cognitive technologies at Wipro Technologies, an IT services firm based in Bengaluru, India. Wipro developed a machine learning and AI platform called Holmes. "So, when we apply AI to such a system, bias will get into the decision-making. It can result in discrimination against a gender, race, region, religion or decisions [that] are harmful for society."

Algorithms make conclusions based on data, and checkpoints should be applied within AI applications to minimize the bias. This can be done by humans monitoring the context and algorithms and fine-tuning the parameters, Madhusudhanan said. This is a practice known as the "human in the loop."

"The algorithm should decide most things, but a human needs to validate inputs, findings and interim conclusions at intervals in the process," he said. Otherwise, the bias will be amplified.
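
The checkpoint idea described above can be illustrated with a minimal Python sketch. It is not Wipro's implementation; the `Decision` record, the `route_for_review` function, the 0.9 confidence cutoff and the every-tenth-case audit interval are all hypothetical choices made for illustration. The sketch lets the algorithm decide most cases but sends low-confidence decisions, plus a periodic sample, to a human reviewer.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """One automated decision (hypothetical record layout)."""
    case_id: str
    outcome: str
    confidence: float  # model's confidence in the outcome, 0.0 to 1.0


def route_for_review(decisions, min_confidence=0.9, audit_every=10):
    """Split decisions into auto-approved and human-review queues.

    A decision goes to a human when the model is unsure
    (confidence below min_confidence) or when it falls on a
    periodic audit slot (every audit_every-th case). Both
    thresholds are assumptions, not values from the article.
    """
    automatic, needs_review = [], []
    for position, decision in enumerate(decisions, start=1):
        low_confidence = decision.confidence < min_confidence
        audit_slot = position % audit_every == 0
        if low_confidence or audit_slot:
            needs_review.append(decision)
        else:
            automatic.append(decision)
    return automatic, needs_review


if __name__ == "__main__":
    batch = [Decision(str(i), "approve", 0.95) for i in range(1, 11)]
    batch[2] = Decision("3", "deny", 0.5)  # an uncertain case
    automatic, needs_review = route_for_review(batch)
    print("Sent to a human:", [d.case_id for d in needs_review])
```

The periodic audit slot matters because a biased model can be confidently wrong; reviewing only low-confidence cases would never surface those decisions.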

Ashim Bose

Businesses must establish appropriate governance to ensure that their AI applications are reliable and ethically sound, Swaminathan stressed. "Only [when] leaders are confident that these technologies can be monitored and mitigated for things like bias will companies be able to truly realize the promise of AI to transform their businesses," he said.

Trust in the recommendations derived from AI-based algorithms for decision support and augmentation is one of the drivers of adoption, noted Ashim Bose, worldwide leader for analytics and AI products at DXC Technology. "Transparency in decision-making is paramount to building that trust," he said. "AI algorithms need to have transparency built into them, along with the ability to detect unconscious bias in the data."

How to help customers address AI bias

Wipro and its partners work with customers across their entire automation journey, Madhusudhanan said. "Fighting bias is a crucial part of this journey, including [the] initial consultation, where we help them create their own 'human in the loop' checkpoints and analysis of their data sets to see what potential biases may come out."

However, he added that there's no technology product right now that can fight against bias in AI applications. "Instead, it requires diligence by humans."

Vinodh Swaminathan

Swaminathan agreed that channel partners can play a role, but it's up to their customers "to be confident that these AI technologies can be monitored, controlled and managed to mitigate early security and reputation risks."

Education plays a role, and vendors like IBM and DXC Technology are working to both highlight and address AI bias. One of the ways to address the problem is to build AI forensics tools to profile an AI algorithm, according to DXC. Ironically, "it turns out that machine learning is one of the best ways to protect against the unintended consequences of machine learning," DXC stated.

Developing an AI service to mitigate bias risks

Recently, KPMG introduced AI in Control -- a framework to help partners and businesses evaluate AI models. The framework uses tools, including IBM Watson OpenScale, to assess the models around four "trust anchors": Integrity, Free from Bias, Explainability, and Agility and Robustness, Swaminathan said.

"With this solution, businesses can easily understand as well as be able to explain how algorithms, including the data it was trained on, came to a certain decision, to both internal and external stakeholders," he said.

For example, AI in Control can monitor a Line of Credit Loan Scoring model for bias specifically against a protected group such as females or transgender individuals, Swaminathan said. "Thresholds and other parameters can be defined to escalate if unfair outcomes are noticed. Watson OpenScale can also be used to explain the decisions made by a model, including which data elements and features were key to making decisions."
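
To make the threshold idea concrete, here is a minimal Python sketch of one common fairness check, the disparate impact ratio. It does not reproduce how AI in Control or Watson OpenScale compute their metrics; the function names, the sample data and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb used in U.S. employment guidance) are assumptions for illustration only.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact(decisions, protected, reference):
    """Ratio of the protected group's approval rate to the reference group's."""
    rates = approval_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return rates[protected] / rates[reference]


def should_escalate(decisions, protected, reference, threshold=0.8):
    """Flag the model for human attention when the ratio drops below threshold.

    The 0.8 default is an assumed cutoff, not a value from the article.
    """
    return disparate_impact(decisions, protected, reference) < threshold


if __name__ == "__main__":
    # Made-up loan decisions: 30% approval for one group, 50% for the other.
    sample = (
        [("female", True)] * 30 + [("female", False)] * 70
        + [("male", True)] * 50 + [("male", False)] * 50
    )
    ratio = disparate_impact(sample, "female", "male")
    print(f"Disparate impact ratio: {ratio:.2f}")
    print("Escalate:", should_escalate(sample, "female", "male"))
```

A single ratio is a coarse signal. In practice the threshold, the choice of reference group and the minimum sample size per group would all need to be agreed with the business and reviewed by a human before any escalation rule is trusted.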

For companies to fully realize the potential of AI applications, they need to recognize that this is a big productivity step change and they may need help along the way, he said. Partners can be beneficial by using tools to help assess and detect bias in the data used to train AI models.

There is great demand for AI skills in the marketplace, and this is an area where third-party providers with the right skills, methodology and experience can help, Bose said.

"DXC Technology has a set of offerings around information governance where we help clients with the ethical use of their data and the insights derived from it," he said. "This is done in synergy with setting up data and AI platforms for our clients and developing their AI apps, so we look at this holistically."