4 types of machine learning models explained

Rigorous experimentation is key to building machine learning models. Learn about the main types of ML models and the factors that go into picking and training them for a specific task.

Artificial intelligence is built on the foundation of machine learning (ML) models. These models are software programs designed to classify data, identify data patterns, spot anomalies in data sets, make predictions about past data and synthesize new data. They do this all without the need for case-by-case programming, such as is required for a traditional software application.

ML models are trained to see, recognize and understand their respective tasks using an extensive set of example or training data. When trained models receive live data from the business, they can associate extensive and complex data to render useful suggestions, make predictions and generate other outputs. Models can also learn, using feedback on accuracy or usefulness to refine and optimize their performance for future decision-making.

But ML models aren't ubiquitous. One model doesn't fit all business needs. Although ML models are designed and built for specific business purposes, they're typically based on several foundational model types. As businesses of all sizes pursue and adopt AI, it's essential for leaders to recognize the varied types of ML models in use, understand the basics of ML model ROI and know the fundamentals of ML model development.

ML models vs. algorithms

Machine learning discussions often use the terms algorithm and model interchangeably. While the two ideas are related, they represent different parts of the ML spectrum:

- ML algorithms. A machine learning algorithm is a method or set of instructions that represents things such as a mathematical formula, a process or a recipe. There are many common algorithms with ML use cases, such as linear regression, K-means clustering and decision trees. Once an appropriate algorithm is selected and developed into software, it can be trained on example data to learn its intended task.

- ML models. An ML model is the practical representation of the trained algorithm or algorithms that can make operational decisions using live business data -- it's the algorithm after it's been trained.

It's important to distinguish between models and algorithms. Software developers build algorithms, but they only become models when trained and validated to perform properly and deliver accurate business outputs. This distinction reveals a key weakness in machine learning: The precision of algorithms doesn't necessarily translate into accurate business outcomes.

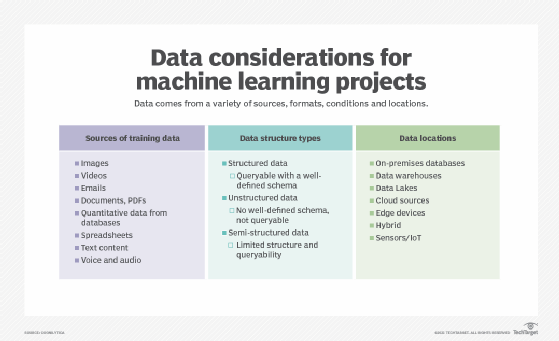

A suitable ML model requires the proper algorithm and training data. A business can experiment with algorithms to find the best one for a business task, and that algorithm must be trained with an ample volume of quality data. ML model development requires trial-and-error experimentation.

Types of ML models

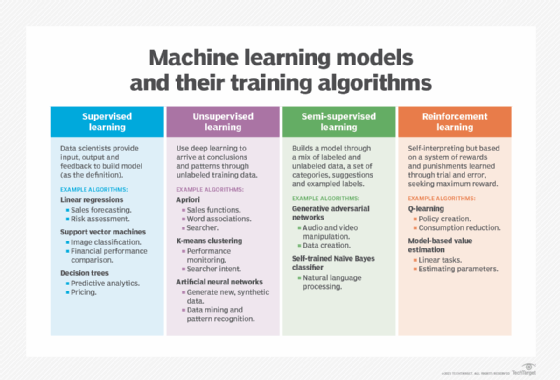

There are four types of machine learning models, each distinguished by their approach to learning and adaptation:

Supervised learning

A supervised learning model is trained from a curated and labeled data set. Each training data point has a meaningful role in eliciting a known or specific output from the model. Consequently, supervised learning is used for models that require accurate predictions based on production data, such as identifying spam emails.

These models typically use algorithms that specialize in data classification, such as decision trees and linear regression. They're highly efficient, though limited in scope and capability; frequent retraining might be required to maintain accuracy.

Unsupervised learning

An unsupervised learning model is trained from an unlabeled data set. The model doesn't directly correlate outputs to training inputs, so there are no predefined or known good outputs from the model.

Unsupervised learning models can find groupings, patterns and relationships within diverse, seemingly unrelated data. This makes them useful when the model must find or identify unknown or unexpected activity in production data, such as identifying anomalous behavior in network traffic patterns. These models can use grouping and data reduction algorithms, such as hierarchical clustering and principal component analysis.

Semisupervised learning

A semisupervised learning model uses a mix of supervised and unsupervised approaches. It's an optimal choice for models that demand a well-defined foundation of training data. These models rely on a small, well-curated source of labeled data and a large volume of quality unlabeled data, and they can apply a mix of underlying algorithms. This approach delivers better performance than supervised learning alone. Semisupervised learning models are used in demanding situations, such as speech recognition systems.

Reinforcement learning

A reinforcement learning model learns by trial and error, using interactions with the production environment to obtain feedback to optimize performance and accuracy. Reinforcement learning models are some of the most autonomous ones available.

One example of a reinforcement learning model is a user satisfaction rating generated in response to a model's output. Another example is using metrics that measure the difference between intended and actual outputs, letting the model adjust its behavior for subsequent outputs. Reinforcement learning models are commonly used for advanced AI tasks, such as autonomous vehicles and AI companions.

Types of ML algorithms

Every ML model is designed and built using algorithms, which are sets of instructions expressed as software. There are many well-established algorithms that predate ML and AI but have direct applications in ML model development.

Supervised learning models specialize in categorizing and correlating inputs and outputs. They use classification and regression algorithms, such as the following:

- Decision trees.

- K-nearest neighbors (K-NN).

- Lasso regression.

- Linear regression.

- Logistic regression.

- Naive bayes.

- Random forests.

- Ridge regression.

- Support vector machines (SVMs).

Unsupervised learning models use clustering, data reduction and association algorithms, such as the following:

- Apriori algorithm.

- Density-based spatial clustering of applications with noise or DBSCAN.

- Hierarchical clustering.

- K-means.

- Principal component analysis.

- T-distributed stochastic neighbor embedding.

Semisupervised learning models use a mix of supervised and unsupervised algorithms to couple a small, curated data set with much larger unlabeled training data sources. Reinforcement learning also applies a mix of algorithms, but it relies on interaction with the environment, such as user feedback, to grade responses and refine actions over time. Algorithms specific to reinforcement learning models include the following:

- Policy gradient methods.

- Q-learning.

- State-action-reward-state-action.

The choice of algorithm depends on the type of model required. In some cases, developers need to do proof-of-concept testing on several algorithms to find one that offers optimal accuracy and performance with lower compute demands and costs.

ML models ROI

Machine learning models can represent a significant investment for a business. It takes resources to design, build, train, test, deploy, integrate, operate and maintain a model in an IT environment. This is true whether the model is in the local data center or a cloud infrastructure. Business leaders must justify the investment through an ROI calculation such as the following:

ROI = (total returns – total costs) ÷ total costs × 100

The ROI calculation process involves the following steps:

- Set measurable baselines and goals. It's impossible to gauge ROI accurately without understanding current baselines, such as customer acquisition costs, lead generation rates, number of tasks automated and productivity rates. Set measurable goals against those baselines. For example, the ML project goal might be to increase existing productivity rates by 10%. This first step defines the ML model project's value to the business.

- Identify total costs. Calculate all direct and indirect costs for the project. The spectrum of costs can include data, infrastructure, developer, tool, integration, compliance checks and other workflow costs. Some costs are more subjective and intangible than others, so it's common for business leaders to collaborate with data science, IT, software and other project team members for a comprehensive cost evaluation.

- Identify total returns. Calculate the direct and indirect returns or benefits of the project. The scope of this evaluation can include cost savings, such as lower support costs or more productivity; revenue growth, such as higher sales; and intangible returns, such as faster decision-making, better customer experience, reduced employee costs and enhanced risk mitigation.

- Calculate ROI. Perform the ROI calculation. If the costs are lower than the returns, the ROI should be positive for the business.

The following are several ways to improve the accuracy of the ROI calculation:

- Apply metrics. Rely on business KPIs, such as productivity rates, to measure the returns of an ML model. The model itself can be monitored using metrics such as accuracy and precision, while technical metrics must be translated into business impacts before being applied to an ROI calculation.

- Use testing to validate benefits. Some business outcomes can't be assigned solely to the ML model. The model's integration, use of human approvals and other interventions can affect its benefits. For example, if a model triples productivity, but it still takes the same amount of time for human approvals, the benefits might be muted. Use statistical testing or A/B testing to gauge the model's real benefits.

- Repeat the ROI calculation regularly. ROI calculations aren't one-time efforts; they're ongoing processes that should be performed on a regular basis. For example, a model's accuracy can change over time, resulting in changes to its benefits that will affect the ROI. Changing ROI is a basis for updating, retraining and refining the model.

Choosing an ML algorithm

There are many algorithms available for ML models, and they can be adapted or created for specific business use cases. This flexibility has facilitated the adoption and evolution of ML, but it can also make the choice of underlying algorithm challenging for AI architects and developers. The following are some factors involved in selecting a suitable algorithm:

- The problem. Developers must determine what the ML model needs to do and what problem it's solving to determine what type of algorithm will work best. Developers must translate the business need into a model type and then select an algorithm that will provide adequate performance, computing resources and accuracy. For example, regression works well with supervised learning models for continuous outcomes and classification for categorical tasks. Clustering and data reduction tasks are suited to unsupervised learning models. Neural networks and deep learning support complex tasks, such as image and speech recognition.

- The data set. How much data is involved? Simpler algorithms and models, such as SVM and K-NN, perform well with smaller data sets but struggle with larger ones. Other algorithms and models, such as neural networks, are better suited to larger data sets. Overloading a simpler model with excess data can lead to poor performance and marginal accuracy.

- Data quality. Some models and algorithms, such as SVM, are suited for high-quality, well-curated data sets. Other models, such as decision trees, aren't as picky about data quality. Data quality can be enhanced through preprocessing, such as feature scaling and other data quality assurance tasks. However, this can add time and cost to the model's creation.

- Explainability. Simpler algorithms, such as linear regression, are easier to understand and explain, and their outcomes can be predicted or repeated with high confidence. More complex algorithms can be more difficult to explain and ensure reliable repeatability. This can affect a business's compliance posture and should be discussed with compliance leaders.

- Training time. Models must be trained, and that takes time. Simpler models, often using smaller data sets, can train faster than complex ones that use enormous data sets. This can be an issue when developers must experiment with different models and data sets, resulting in repeated training delays or models that must be periodically retrained.

- Computing costs. Consider the infrastructure and computing costs involved in model operation, especially if the model must scale up over time with additional users and production data. Simpler models and algorithms impose lower infrastructure demands and costs than complex models that require extensive training.

Even algorithms with similar purposes can provide different levels of accuracy and performance depending on the business task and data sources. It's common for model developers to try several different algorithms and compare the resulting behavior to determine the model that offers the best combination of benefits for the business.

7 ML model development considerations

Machine learning models do far more than create code. They represent significant business investments that are expected to enhance business outcomes. The following eight issues should be considered when doing this development:

- Data availability. It's training that turns an ML algorithm into an ML model, and training takes data -- often vast volumes of data -- that must be obtained from various sources. Is there enough data to support the intended use case? If not, the model might not perform with the level of accuracy needed.

- Data quality. The old IT axiom, garbage in, garbage out, certainly applies to ML models. The data used to train a model must be accurate, complete, relevant and often reviewed, tagged and preprocessed to ensure adequate data quality. This demands support from data science experts, along with more time and computing investment.

- Explainability and bias. ML model development must include clear explanation of how the model works and arrives at its decisions. Explainability must extend to the training data and its provenance. A business might have to prove the model provides repeatable and reliable outputs for given inputs, and that its algorithms and data sources have been vetted for bias. This could become a competitive advantage as more global ML and AI regulations emerge.

- Hyperparameter tuning. Hyperparameters are configuration settings that control a model's learning process, such as the learning rate, the number of layers in a neural network and the batch size of training data. Hyperparameter tuning can optimize the time it takes to train a model, the depth of its learning and the accuracy of its outcomes. Developers must have a strategy for adjusting these settings to provide optimal model performance.

- Scalability. Will the model maintain its accuracy and performance as it's used more? Increasing user requests and production data volume will demand more computing and network resources. Model deployment and maintenance must include a plan to monitor and scale the model to ensure its continued reliability in response to growing demand.

- Security. ML models access significant amounts of data, some of which might be sensitive or contain personally identifiable information. Similarly, the outcomes and data the ML model produces must remain secure, ensuring that only authorized users can view or use them. All of this demands careful attention to data security, data privacy and model design with proper safeguards.

- Monitoring and maintenance. Metrics should be used to monitor a model's performance, while KPIs are applied to measure the model's outcomes. Compare monitoring results against an established baseline and use those differences over time to justify fine-tuning or retraining the model.

Tools and frameworks for ML model development

Machine learning models are built using tools and frameworks that align with existing software development paradigms, such as DevOps and machine learning operations, or MLOps. Developers rely on tools and frameworks to build, train, manage and support the entire ML software development process. The following are some commonly used model-building and training tools:

- Apache MXNet.

- Extreme Gradient Boosting.

- Hugging Face Transformers.

- Keras.

- PyTorch.

- Scikit-learn.

- TensorFlow.

Data management and analysis tools include the following:

- Deepchecks.

- Fiddler AI.

- Matplotlib.

- NumPy.

- Pandas.

- Seaborn.

The following are some complete ML model lifecycle and comprehensive cloud provider tools:

- Amazon SageMaker.

- BentoML.

- LakeFS DVC.

- Google Cloud AI Platform.

- Microsoft Azure ML Studio.

- MLflow.

- TensorFlow Extended.

Editor's note: These lists were compiled based on Informa TechTarget's independent research. They're in alphabetical order and aren't ranked.

Future trends in ML models

Machine learning models are the foundation of AI systems. As AI applications expand across industries and use cases, the underlying models will have to become more powerful and effective. Some expected future trends include the following:

- Autonomy. As models gain acceptance and trust, they'll become more autonomous, trusted to access more data, perform more complex processing and deliver more accurate outcomes without human intervention. However, the actions resulting from more autonomous models, such as an AI system deciding to open or close an industrial valve, might still require a human in the loop.

- Efficiency. The architectural designs, programming languages, development tools and data sets used to build and train ML models will become more powerful and sophisticated. They will deliver more capabilities using less computing power and energy.

- Explainability and transparency. As ML and AI become essential for everyday business, there will be an increased emphasis on compliance. ML models, and the AI platforms that use the models, must be well-understood. Explainability means the models' outputs are repeatable and can be predicted reliably; humans must know how the models work. Transparency ensures the algorithms and training data used to build the model are clear and well-documented, so the model can withstand industry and regulatory scrutiny.

- Bias and ethics. Another aspect of future compliance will involve bias and ethics. Businesses that design, build, train, deploy and host ML and AI systems will need to ensure that the algorithms and data used to create the models are bias-free. Bias mitigation ensures that the models' outcomes are fair and don't discriminate against any users. Businesses will be responsible for ensuring the ethical use of these systems, as well.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 30 years of technical writing experience in the PC and technology industry.