Why you need an AI ethics committee

Businesses are using AI in many new ways. Learn how an AI ethics committee can help mitigate the risk of implementing enterprise AI.

AI progress is skyrocketing, and ethics is top of mind for many.

As AI technologies, such as ChatGPT and Dall-E, have garnered attention, big tech companies have slashed AI ethics boards. Just over a week after the release of GPT-4, Microsoft axed its entire ethics and society team as part of a larger round of layoffs affecting 10,000 employees. In 2022, Google engineer Blake Lemoine was fired from Google's Ethical AI team for claiming that the Language Model for Dialogue Applications, or LaMDA, system was sentient.

In an interview with Bloomberg after his firing, Lemoine expressed concern about bias in the construction of powerful AI tools. At the top, only a handful of corporate decision-makers sculpt AI. At the bottom, training data sets are often significantly limited. He worried that these tools would harm people's ability to communicate empathetically and shut some people out of conversations entirely.

Lemoine's questions, posed over a year ago, are more relevant than ever. ChatGPT is one of the fastest-growing consumer applications in history. It took five days for ChatGPT to reach 1 million users, according to statistics from Exploding Topics. Businesses are rushing to incorporate the latest AI systems into their workflows. Organizations such as the Center for Humane Technology are wary of the technology and its potential risks. Governments are beginning to regulate the tech as well. For example, Italy temporarily banned the application. The EU is proposing new copyright rules for generative AI. China's government has also drafted a rule that makes generative AI providers responsible for the output their systems create.

Why is alignment important?

AI alignment is the field of study concerned with making sure AI systems pursue the goals their programmers intend. At its simplest, alignment makes sure an AI system functions as intended. At its most complex, alignment aims to build systems that act in line with human rights and values, as embedded by a programmer.

OpenAI, the creator of ChatGPT, has made alignment and AI ethics a top concern, but it isn't the only company that develops these tools. As companies develop or procure third-party AI tools, they need a committee in place to ensure each tool is sustainable, aligns with the social values of the business and reduces the risks that AI systems pose.

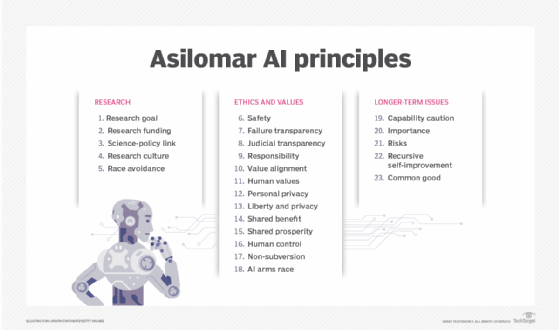

Organizations of all types recognize the importance of AI alignment and develop standards defining and reinforcing it. For example, the Future of Life Institute -- a nonprofit organization concerned with reducing catastrophic technological risk -- developed guidelines for the ethical development of AI called the Asilomar AI Principles.

What are the ethical risks of advanced AI systems?

Some of the main ethical risks of misaligned AI systems are the following:

- Privacy and data protection. A lack of privacy, misuse of personal data and security problems are just some of the ways privacy and data protection can become an issue for AI systems. For example, a brief security glitch in ChatGPT let users view other users' chat histories. OpenAI also has access to user content, meaning any input, file upload or feedback provided to an OpenAI service.

- Reliability. AI systems can struggle with data quality, accuracy and data integrity. For example, ChatGPT was trained on a broad range of internet text that ends in September 2021, and that text contains outdated and false information.

- Transparency. Bias, discrimination and a lack of accountability and transparency could be serious issues for AI systems. For example, an AI tool designed to approve people for health insurance might make an unfair, biased decision because some groups are under- or overrepresented in the data set. As disclaimers on many AI providers' websites state, large language models tend to exacerbate and amplify biases present in their training data sets. In many AI systems, the exact decision-making process is not technically explainable. These systems are called black box AI.

- Safety. Misaligned AI could cause physical harm in certain contexts. For example, in self-driving cars, a misaligned system could cause the car to crash and endanger the driver or others on the road. And a medical system used to help with diagnoses could recommend harmful treatment by misunderstanding the patient's context and needs.

- Data rights. AI uses data and work generated by real people to inform its outputs -- sometimes without their consent. For example, Reddit wants to be paid for helping train large AI models from OpenAI and Google because it was one of many sources crawled without consent and used to train tools that are now popular.
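The transparency risk above, where some groups are under- or overrepresented in a data set, can be checked with simple arithmetic before a model is ever trained. The following is a minimal Python sketch under stated assumptions: the function `representation_gaps`, the record format and the 50/50 reference population are all hypothetical, chosen only to illustrate the idea. A real review would use the organization's own data and a reference population it can justify.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares):
    """Compare each group's share of a data set with a reference share.

    Returns {group: dataset_share - reference_share} for every group in
    reference_shares. Negative values mean the group is underrepresented
    relative to the reference; groups missing from the data set get a
    share of 0.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("records must not be empty")
    return {
        group: counts.get(group, 0) / total - reference
        for group, reference in reference_shares.items()
    }


# Hypothetical toy data: 80 applicants from group "A", 20 from group "B".
applicants = [{"group": "A"}] * 80 + [{"group": "B"}] * 20

# Hypothetical reference population in which each group makes up 50%.
gaps = representation_gaps(applicants, "group", {"A": 0.5, "B": 0.5})
for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.2f}")  # prints "A: +0.30" then "B: -0.30"
```

A large negative gap, like group B's here, is the kind of signal that might prompt an ethics committee to ask whether the data set can support fair decisions for that group.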

How should an AI ethics committee work?

Ethics committees should be tasked with reviewing an AI product once it has been developed or procured. The committee should look for ethical risks, propose changes to mitigate them and then review the product again once the changes have been implemented.

The amount of power an ethics advisory board has depends on the value the business places on ethics as a whole. In some cases, product development and IT procurement teams might be required to consult the committee when introducing new technologies. In other cases, consulting it is only a suggestion. Depending on how much direct business influence it has, the committee may or may not have the power to veto product proposals. In some cases, a company might assign a C-level employee to lead the committee, which limits the committee's power and lets the business take ethical risks when that executive deems them appropriate.

Who should be on an AI ethics committee?

The specific composition of an AI ethics committee will vary from organization to organization, but it's important that all stakeholders in the project are fairly represented. The board needs an array of people with different specialties and experiences, such as the following:

- Ethicists. These can be people with advanced degrees in philosophy or in the ethical principles of a specific field, such as healthcare or criminal justice. They have the training to spot complex ethical issues and the ability to guide the group through an objective assessment of the system. They can pose informed ethical questions that others might not think of.

- Lawyers. They can weigh in on the legal permissibility of AI systems. They can also assess the efficacy of any technical tools or metrics used to evaluate the fairness of the system.

- Engineers. AI engineers and technologists can help the less technically adept members of the board understand the nuances of the system and how its technical underpinnings may contribute to ethical risk. For example, these members might weigh in on whether to create synthetic demographic data to train an ethical AI system.

- Business strategists. They can assess the business risk of an AI system and help with the operational aspect of addressing those risks. In the event of serious risk, business experts can decide how and when to mitigate it based on the needs of the business.

- Subject matter experts (SMEs). SMEs can act as bias scouts during data collection. Ethics committees may use bias mitigation tools -- such as IBM's AI Fairness 360 open source toolkit and the open source Python toolbox FairML -- on chosen data sets. SMEs can help choose the data sets and point out biases early, before the data sets are run through the tool.

- Public representatives. They represent the social context in which the model will be deployed. They can also surface key biases early in the training process.
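Bias toolkits such as AI Fairness 360 report group fairness metrics, and one common metric is disparate impact: the rate of favorable outcomes for one group divided by the rate for another. The sketch below computes that ratio by hand in plain Python rather than calling either toolkit, so it does not reflect the API of AI Fairness 360 or FairML. The helper names, group labels and decisions are hypothetical, and the 0.8 threshold is the widely used "four-fifths" rule of thumb, not a universal legal standard.

```python
def selection_rate(outcomes):
    """Fraction of favorable outcomes, where 1 = approved and 0 = denied."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    return sum(outcomes) / len(outcomes)


def disparate_impact(outcomes_by_group, unprivileged, privileged):
    """Unprivileged group's selection rate divided by the privileged group's.

    1.0 means both groups receive favorable outcomes at the same rate.
    Ratios below 0.8 are often flagged under the "four-fifths" rule of thumb.
    """
    privileged_rate = selection_rate(outcomes_by_group[privileged])
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return selection_rate(outcomes_by_group[unprivileged]) / privileged_rate


# Hypothetical approval decisions from a model, grouped by a protected attribute.
decisions = {
    "A": [1, 1, 1, 0, 1, 1, 0, 1, 1, 1],  # 8 of 10 approved
    "B": [1, 0, 0, 1, 0, 1, 0, 0, 1, 0],  # 4 of 10 approved
}

ratio = disparate_impact(decisions, unprivileged="B", privileged="A")
print(f"disparate impact: {ratio:.2f}")  # prints "disparate impact: 0.50"
print("flag for review" if ratio < 0.8 else "within rule-of-thumb range")
```

A number like this does not settle whether a system is fair; it gives ethicists, lawyers and SMEs on the committee a concrete starting point for discussing which groups, outcomes and thresholds matter in context.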