Distributed Component Object Model (DCOM)

What is Distributed Component Object Model (DCOM)?

Distributed Component Object Model (DCOM) is an extension to Component Object Model (COM) that enables software components to communicate with each other across different computers on a local area network (LAN), on a wide area network (WAN) or across the internet. Microsoft created DCOM to distribute COM-based applications in a way not possible with COM alone.

Over the years, COM has provided the foundation for numerous Microsoft products and technologies. COM defines a binary interoperability standard for creating reusable software components that can interact with each other at runtime. This includes a standard protocol and wire format that COM objects use to interact when running on different computers. COM standardizes how components call each other's functions and defines a base interface, IUnknown, through which components interact without requiring an intermediary system component.
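To make the idea of a base interface more concrete, the following is a minimal Python model of the contract IUnknown defines: QueryInterface to ask an object for another interface, and AddRef/Release for reference counting. It is only an illustration. Real COM objects expose these methods through a C-compatible function table, and the class names and string interface IDs used here (`IUnknownModel`, `Calculator`, `"ICalculator"`) are hypothetical.

```python
# Illustrative Python model of COM's IUnknown contract (not real COM).
# Class names and string interface IDs are hypothetical stand-ins.

class IUnknownModel:
    """Every COM object supports these three operations."""

    def __init__(self):
        self._ref_count = 1  # the creator holds the first reference

    def query_interface(self, iid):
        # Hand back the object if it implements the requested interface,
        # taking a new reference the way QueryInterface does.
        if iid in self.supported_iids():
            self.add_ref()
            return self
        return None  # real COM would return E_NOINTERFACE

    def add_ref(self):
        self._ref_count += 1
        return self._ref_count

    def release(self):
        self._ref_count -= 1
        if self._ref_count == 0:
            self.destroy()  # last reference gone: free the object
        return self._ref_count

    def supported_iids(self):
        return {"IUnknown"}

    def destroy(self):
        pass


class Calculator(IUnknownModel):
    """A hypothetical component exposing a hypothetical ICalculator."""

    def __init__(self):
        super().__init__()
        self.destroyed = False

    def supported_iids(self):
        return {"IUnknown", "ICalculator"}

    def add(self, a, b):
        return a + b

    def destroy(self):
        self.destroyed = True


calc = Calculator()
icalc = calc.query_interface("ICalculator")  # reference count is now 2
print(icalc.add(2, 3))                       # 5
print(calc.query_interface("IPrinter"))      # None
icalc.release()                              # reference count 1
calc.release()                               # reference count 0, destroyed
print(calc.destroyed)                        # True
```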

DCOM extends COM by enabling clients and components to communicate even if they reside on different machines. To make this possible, DCOM replaces the local interprocess communication that COM uses with a network protocol. In effect, DCOM provides a longer wire than COM, yet neither the client nor the component is aware of the difference.
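The "longer wire" idea can be sketched as a proxy on the client side and a stub on the server side. The client calls the same method whether it holds the real object or a proxy, and the proxy turns the call into bytes that the stub turns back into a method call. This is a simplified sketch under stated assumptions: JSON stands in for DCOM's actual wire format, a plain function call stands in for the network, and every class name is hypothetical.

```python
import json

# Sketch of location transparency. JSON replaces the real wire format
# and a direct function call replaces the network (both assumptions).


class Greeter:
    def greet(self, name):
        return f"Hello, {name}"


class Stub:
    """Server side: decodes a request and invokes the real object."""

    def __init__(self, target):
        self.target = target

    def handle(self, request_bytes):
        request = json.loads(request_bytes.decode("utf-8"))
        method = getattr(self.target, request["method"])
        result = method(*request["args"])
        return json.dumps({"result": result}).encode("utf-8")


class GreeterProxy:
    """Client side: same method as Greeter, but forwards the call as bytes."""

    def __init__(self, send):
        self._send = send  # callable that carries bytes to the server

    def greet(self, name):
        request = json.dumps({"method": "greet", "args": [name]})
        reply = self._send(request.encode("utf-8"))
        return json.loads(reply.decode("utf-8"))["result"]


def client_code(greeter):
    # Identical client logic for a local object and a remote proxy.
    return greeter.greet("world")


local = Greeter()
remote = GreeterProxy(Stub(Greeter()).handle)
print(client_code(local))   # Hello, world
print(client_code(remote))  # Hello, world
```

Because `client_code` only depends on the `greet` method, swapping the local object for a proxy requires no change in the client, which is the property the paragraph above describes.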

What is DCOM architecture?

The DCOM wire protocol is based on Distributed Computing Environment/Remote Procedure Call (DCE/RPC), which provides a standard for converting in-memory data structures into network packets. The DCOM protocol uses RPCs to expose application objects, while handling the low-level details of a network protocol. The client can call the component's methods as if the component were local, without handling any of the networking itself.
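Converting in-memory data into packets and back is called marshaling. DCE/RPC does this with an encoding called Network Data Representation (NDR). The sketch below is NOT NDR; it is a deliberately simpler, hypothetical layout (a little-endian 32-bit integer, a 64-bit float and a length-prefixed UTF-8 string) that only shows the general round trip from a record to bytes and back.

```python
import struct

# Simplified marshaling sketch. The field layout and function names are
# hypothetical and much simpler than the NDR encoding DCE/RPC uses.

# "<" = little-endian with no padding: int32 id, float64 balance,
# uint32 length of the UTF-8 name that follows.
HEADER = struct.Struct("<idI")


def marshal_account(account_id, balance, name):
    encoded_name = name.encode("utf-8")
    return HEADER.pack(account_id, balance, len(encoded_name)) + encoded_name


def unmarshal_account(buffer):
    account_id, balance, name_len = HEADER.unpack_from(buffer, 0)
    start = HEADER.size
    name = buffer[start:start + name_len].decode("utf-8")
    return account_id, balance, name


packet = marshal_account(42, 99.5, "Ada")
print(len(packet))                # 19 (4 + 8 + 4 header bytes + 3 name bytes)
print(unmarshal_account(packet))  # (42, 99.5, 'Ada')
```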

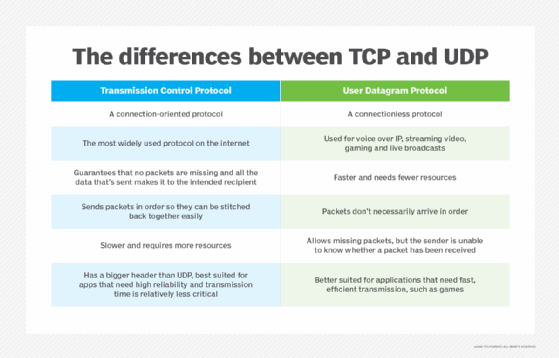

DCOM applications do not require a specific network transport protocol for carrying out client-component communications. DCOM can transparently use a variety of network protocols, including User Datagram Protocol, NetBIOS, TCP/IP and Internetwork Packet Exchange/Sequenced Packet Exchange. DCOM also provides a security framework that can be used on any of these protocols, whether connectionless or connection-oriented.
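Transport independence usually comes from putting the RPC layer behind a small send/receive abstraction. The sketch below, which is hypothetical and uses in-memory stand-ins rather than real sockets, shows one RPC helper working unchanged over a connectionless, message-based transport (in the spirit of UDP) and a connection-oriented byte stream (in the spirit of TCP). The stream version must add its own message framing, here an assumed 4-byte length prefix, because a byte stream does not preserve message boundaries.

```python
import struct
from collections import deque

# Hypothetical transports; both are in-memory stand-ins for real networks.


class DatagramTransport:
    """Connectionless stand-in: each send() arrives as one whole message."""

    def __init__(self):
        self._messages = deque()

    def send(self, payload):
        self._messages.append(bytes(payload))

    def receive(self):
        return self._messages.popleft()


class StreamTransport:
    """Connection-oriented stand-in: one continuous byte stream, so each
    message is framed with a 4-byte little-endian length prefix."""

    def __init__(self):
        self._buffer = bytearray()

    def send(self, payload):
        self._buffer += struct.pack("<I", len(payload)) + payload

    def receive(self):
        (length,) = struct.unpack_from("<I", self._buffer, 0)
        message = bytes(self._buffer[4:4 + length])
        del self._buffer[:4 + length]  # consume the framed message
        return message


def call_remote(transport, request):
    # The RPC layer only uses send()/receive(), so any transport works.
    transport.send(request)
    # Loopback stand-in: read back the bytes just sent instead of a reply.
    return transport.receive()


for transport in (DatagramTransport(), StreamTransport()):
    print(call_remote(transport, b"GetBalance(42)"))  # b'GetBalance(42)'
```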

DCOM also includes a marshaling mechanism that enables a component to inject code on the client side. This code serves as a proxy object that can intercept multiple method calls from the client and bundle them into a single RPC. In addition, DCOM includes a distributed garbage collection mechanism that uses periodic ping messages to detect clients that have terminated or lost their network connection and automatically releases the references those clients held, helping to free up memory on the host systems. The garbage collection process is transparent to the application.
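Both mechanisms in the paragraph above can be sketched in a few lines. The first class is a hypothetical custom proxy that queues calls and sends them as one batch, so several method calls cost a single round trip. The second is a hypothetical server-side table that releases references held by clients whose keep-alive pings have stopped, similar in spirit to DCOM's ping-based garbage collection. All names and the timeout value are assumptions, and an injectable clock is used so the example runs instantly.

```python
import time

# Hypothetical sketches; names and the timeout value are assumptions.


class BatchingProxy:
    """Queues calls locally and sends them together in one round trip."""

    def __init__(self, send_batch):
        self._send_batch = send_batch  # performs ONE round trip per batch
        self._pending = []

    def set_value(self, key, value):
        self._pending.append(("set_value", key, value))  # queued, not sent

    def flush(self):
        batch, self._pending = self._pending, []
        return self._send_batch(batch)


class PingTable:
    """Drops references held by clients that stopped pinging."""

    def __init__(self, timeout_seconds, clock=time.monotonic):
        self._timeout = timeout_seconds
        self._clock = clock
        self._last_ping = {}   # client_id -> time of last ping
        self.references = {}   # client_id -> set of object ids held

    def add_reference(self, client_id, object_id):
        self.references.setdefault(client_id, set()).add(object_id)
        self.ping(client_id)

    def ping(self, client_id):
        self._last_ping[client_id] = self._clock()

    def collect(self):
        # Release every reference held by a client whose pings timed out.
        now = self._clock()
        expired = [c for c, t in self._last_ping.items()
                   if now - t > self._timeout]
        for client_id in expired:
            del self._last_ping[client_id]
            self.references.pop(client_id, None)
        return expired


round_trips = []
proxy = BatchingProxy(lambda batch: round_trips.append(batch) or len(batch))
proxy.set_value("a", 1)
proxy.set_value("b", 2)
print(proxy.flush(), len(round_trips))  # 2 1 (two calls, one round trip)

fake_now = [0.0]
table = PingTable(timeout_seconds=6.0, clock=lambda: fake_now[0])
table.add_reference("client-1", "obj-7")
table.add_reference("client-2", "obj-9")
fake_now[0] = 5.0
table.ping("client-2")            # client-1 has gone silent
fake_now[0] = 10.0
print(table.collect())            # ['client-1']
print(sorted(table.references))   # ['client-2']
```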

Since its release, DCOM has remained part of Windows operating systems. However, it was never embraced outside the Microsoft ecosystem to the degree once anticipated. In addition, the discovery of a security vulnerability led Microsoft to implement hardening changes in DCOM, which were rolled out in three stages: the first in 2021, the second in 2022 and the final stage in 2023.

DCOM is Microsoft's approach to a network-wide environment for program and data objects. In many ways, it is similar to Common Object Request Broker Architecture (CORBA), introduced by the Object Management Group. Like DCOM, CORBA provides a specification for distributed objects. However, because of security and scalability concerns, neither approach achieved the universal and widespread use that was once hoped for with the rise of the internet.
