context window

What is a context window?

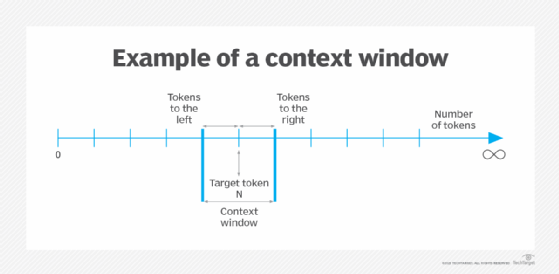

A context window is the textual range around a target token that a large language model (LLM) can process when it generates output. The LLM works over the sequence inside the context window, analyzing the passage and the dependencies among its words, and uses that analysis to produce relevant responses. The process of breaking a textual sequence into pieces, or tokens, is called tokenization.
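
To make tokenization concrete, here is a minimal sketch that splits a sentence into word and punctuation tokens. It is a toy: production LLM tokenizers typically use subword schemes such as byte-pair encoding, so their token counts will differ. The function name `toy_tokenize` is a hypothetical helper, not part of any library.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (illustrative only)."""
    # \w+ matches a run of letters, digits or underscores;
    # [^\w\s] matches a single punctuation character.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = toy_tokenize("A context window limits what the model can read.")
print(tokens)
print(len(tokens))  # number of tokens this sentence would occupy in the window
```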

As a natural language processing concept, the context window is relevant to artificial intelligence (AI) in general, as well as to machine learning and prompt engineering techniques, among others. For example, the words or characters in an English sentence can be segmented into multiple tokens. In generative AI, positional encoding then records where each token sits within that textual sequence.
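
As an illustration of positional encoding, the sketch below computes the sinusoidal scheme introduced with the original transformer architecture. This is only one possible scheme, chosen here as an assumption for the example; many current LLMs use learned or rotary position encodings instead. The name `sinusoidal_position` and the tiny `d_model` value are illustrative.

```python
import math


def sinusoidal_position(pos: int, d_model: int) -> list[float]:
    """Return the sinusoidal encoding vector for one token position."""
    vec = []
    for i in range(d_model):
        # Each pair of dimensions (2k, 2k+1) shares one frequency.
        angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
        # Even dimensions use sine, odd dimensions use cosine.
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec


# Each position in the sequence gets its own distinct vector.
for position in range(3):
    print(position, [round(x, 3) for x in sinusoidal_position(position, d_model=4)])
```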

The context window size is the number of tokens both preceding and following a specific word or character, known as the target token, and it determines the boundaries within which the AI remains effective. The context window holds the user prompts and AI responses from the recent conversation history. The AI cannot access history that falls outside the context window, so it may generate incomplete or inaccurate output when it needs that information.

In addition, AI interprets the tokens along the context length to create new responses to the current user input or the input target token.
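
The effect of a fixed window on conversation history can be sketched as follows. The example assumes a simple policy of keeping the most recent messages that fit and dropping older ones; real chat tools may use other strategies, such as summarizing old turns. Whitespace word counts stand in for a real tokenizer, and `fit_to_window` is a hypothetical helper.

```python
def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window."""
    kept: list[str] = []
    used = 0
    # Walk backward from the newest message so recent context is kept first.
    for message in reversed(messages):
        cost = len(message.split())  # crude stand-in for a real tokenizer
        if used + cost > max_tokens:
            break  # this message and everything older falls outside the window
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My name is Dana.",
    "I live in Lisbon.",
    "What is the weather like?",
]
print(fit_to_window(history, max_tokens=9))
```

With a limit of nine tokens, the oldest message is dropped, so a model given only the remaining text could no longer answer a question about the user's name.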

Why are context windows important in large language models?

A context window is a critical factor in assessing the performance of LLMs and determining their further applications. The ability to provide fast, pertinent responses based on the tokens around the target in the text history is one measure of the model's performance. A higher token limit lets the model process more data at once and is often treated as a sign of a more capable model.

Context windows also cap how much text an AI response can contain, which helps avoid overly lengthy replies and keeps the generated text readable. The AI tool generates each response within these defined limits, which supports real-time conversation.

Similarly, a context window covers the text to both the left and the right of the target token, so the AI tool works with the tokens surrounding that target. This avoids unnecessary checks on the rest of the conversation history and helps keep responses relevant.
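
The two-sided window described above can be sketched in a few lines. This example assumes a symmetric window of `size` tokens on each side of the target; `surrounding_tokens` is a hypothetical helper for illustration.

```python
def surrounding_tokens(
    tokens: list[str], target_index: int, size: int
) -> tuple[list[str], list[str]]:
    """Return up to `size` tokens to the left and to the right of the target."""
    # max(0, ...) stops the left slice from wrapping around at the start.
    left = tokens[max(0, target_index - size):target_index]
    # Slicing past the end of a list is safe in Python; it just returns fewer items.
    right = tokens[target_index + 1:target_index + 1 + size]
    return left, right


words = "the quick brown fox jumps over the lazy dog".split()
left, right = surrounding_tokens(words, target_index=4, size=2)
print(left, words[4], right)
```

Tokens farther than `size` positions from the target, such as "lazy" and "dog" here, are never examined.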

Benefits of large context windows

Large context windows have several benefits. Some of the most notable benefits include the following:

- Saves time. The generative AI tool pinpoints the data on either side of the target token and skips data that is irrelevant to it. A well-defined context window, especially a larger one, can expedite operations because more of the needed material is available in a single pass.

- Accepts large inputs. A large context window is a strong indicator of an LLM's capacity to handle many tokens at once. LLMs can support semantic searches in a vector database using word embeddings, ultimately generating relevant responses through an understanding of the terms related to the target token.

- Provides detailed analysis. A context window operates to the left and right of the target token to analyze the data in depth. Assigning importance scores to tokens enables the summarization of an entire document. Scrutiny of many tokens boosts research, learning and AI-based enterprise operations.

- Allows for token adjustment. The transformer architecture behind LLMs uses mechanisms such as attention heads to better capture contextual dependencies. In long context-length use cases, an LLM can selectively focus on the relevant side of the target token to avoid extraneous responses. Optimizing token usage in this way helps lengthy text to be processed quickly while preserving its relevance.
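
The attention mechanism mentioned in the last item can be sketched for a single query. This minimal example assumes scaled dot-product attention with hand-picked two-dimensional vectors; a real attention head learns its query and key projections, works over every token in the window at once and runs alongside many other heads. The name `attention_weights` is illustrative.

```python
import math


def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Softmax of scaled dot products between one query and each key."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Three context tokens: aligned with, orthogonal to, and opposed to the query.
weights = attention_weights([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
print([round(w, 3) for w in weights])
```

The weights sum to one, and the token whose key best matches the query receives the largest share, which is how the model focuses on the more relevant parts of its context.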

Comparing context window sizes of leading LLMs

Context window sizes differ among LLMs. Examples include the following:

- GPT-3. Generative Pre-trained Transformer (GPT) is the family of large language models behind OpenAI's ChatGPT. The context window size for GPT-3 is 2,049 tokens. The training data for the GPT models listed here ends in or before September 2021.

- GPT-3.5-turbo. OpenAI's GPT-3.5-turbo has a context window of 4,097 tokens. Another version, GPT-3.5-turbo-16k, can handle a larger number of tokens; it has a 16,385-token limit.

- GPT-4. GPT-4, which is available in ChatGPT, offers a context window size of up to 8,192 tokens. GPT-4-32k has a larger context window of up to 32,768 tokens.

- Claude. Anthropic's AI tool Claude offers a token limit of about 9,000. Claude is in the beta stage, and the API is available to a limited number of users.

- Claude 2. Anthropic announced that Claude 2 offers a larger context window of up to 100,000 tokens. Users can input an entire document of approximately 75,000 words in a single prompt through the Claude 2 API.

- Large Language Model Meta AI (Llama). Meta AI released the Llama family of open source LLMs. Within that family, the Code Llama models are trained on sequences of 16,000 tokens and, according to Meta's paper on arXiv, show improvements on inputs of up to 100,000 tokens.
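
To show how these limits matter in practice, the sketch below checks which models could hold a given request. It uses the token limits quoted in the list above and assumes that the prompt and the reply share one window. The dictionary keys are labels chosen for this example and are not guaranteed to match any vendor's API identifiers; `models_that_fit` is a hypothetical helper.

```python
# Token limits as quoted in the list above; they may not reflect current offerings.
CONTEXT_LIMITS = {
    "gpt-3": 2049,
    "gpt-3.5-turbo": 4097,
    "gpt-3.5-turbo-16k": 16385,
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
    "claude-2": 100000,
}


def models_that_fit(prompt_tokens: int, reply_tokens: int) -> list[str]:
    """List models whose window can hold the prompt plus the expected reply."""
    needed = prompt_tokens + reply_tokens
    return [name for name, limit in CONTEXT_LIMITS.items() if needed <= limit]


# A 9,000-token document plus room for a 1,000-token answer.
print(models_that_fit(prompt_tokens=9000, reply_tokens=1000))
```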

Criticisms of large context windows

There are some issues to consider with large context windows, including the following:

- Accuracy declines. AI hallucination, in which a model generates inaccurate or fabricated information, can become more likely when the model must distinguish among the many tokens in a large context window. A Stanford study shows that AI performance degrades with large inputs, resulting in inaccurate information.

- More time and energy are required. Large context windows require the model to process far more data, which increases response time. Reading longer inputs and generating output from them requires more processing power and consumes more electricity.

- Costs increase. Computational costs rise steeply with context length. With standard self-attention, cost grows roughly with the square of the number of tokens, so doubling the context length can increase computational costs by a factor of about four. Higher pricing is a direct result of larger context lengths.
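
The factor of four can be checked with a little arithmetic. The sketch below assumes a cost that grows with the square of the token count, as in standard self-attention, and ignores every other cost in the model, so it is a rough estimate rather than a pricing formula. The name `relative_attention_cost` is a hypothetical helper.

```python
def relative_attention_cost(context_length: int, baseline_length: int) -> float:
    """Estimate attention cost relative to a baseline, assuming quadratic scaling."""
    return (context_length / baseline_length) ** 2


# Doubling the window quadruples the estimated attention cost.
print(relative_attention_cost(8192, 4096))
# Quadrupling the window multiplies it by sixteen.
print(relative_attention_cost(32768, 8192))
```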