What is memory management in a computer environment?

Memory management is the process of controlling and coordinating a computer's main memory. It ensures that blocks of memory space are properly managed and allocated so the operating system (OS), applications and other running processes have the memory they need to carry out their operations.

Why is memory management necessary?

Every computer has a main memory that stores the data that is accessed by its various devices and processes. Many of these processes are executed simultaneously, so to ensure that they all perform optimally they must be kept in the main memory during execution. Because these processes all compete for the limited amount of memory available, the memory must be appropriately managed.

Memory management strives to optimize memory usage by subdividing the available memory among different processes and the OS. The goal is to ensure that the central processing unit (CPU) can efficiently and quickly access the instructions and data it needs to execute the various processes. As part of this activity, memory management takes into account the capacity limitations of the memory device itself, deallocating memory space when it is no longer needed or extending that space through virtual memory.

Memory management also helps minimize fragmentation, in which free memory becomes split into pieces that are too small or too scattered to satisfy allocation requests, so memory resources are used inefficiently. In addition, it helps maintain data integrity while processes execute.

Finally, memory management ensures that the memory allocated to a process is not corrupted by another process. Such corruption might cause the system to behave in an unpredictable or undesirable way.

What are the 3 areas of memory management?

Memory management operates at three levels: hardware, operating system and program/application. The management capabilities at each level work together to optimize memory availability and efficiency.

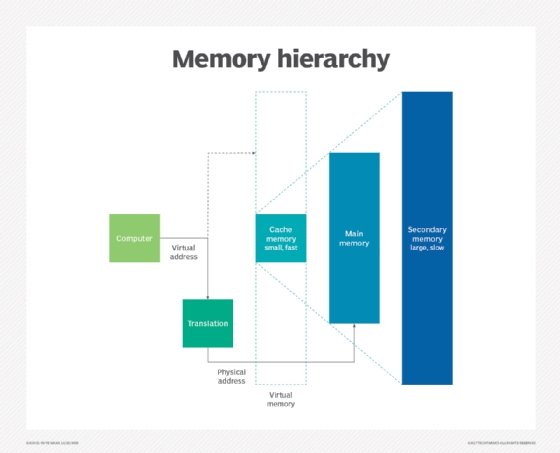

Memory management at the hardware level. At the hardware level, memory management is concerned with the physical components that store data, such as the random access memory (RAM) chips and CPU memory caches (L1, L2 and L3). Most of the management that occurs at the physical level is handled by the memory management unit (MMU), which controls the processor's memory and caching operations. One of the MMU's most important roles is to translate the logical addresses used by the running processes to the physical addresses on the memory devices. The MMU is typically integrated into the processor, although it might be deployed as a separate integrated circuit.

Memory management at the OS level. At the OS level, memory management involves the allocation (and constant reallocation) of specific memory blocks to individual processes and programs as the demands for CPU resources change. To accommodate the allocation process, the OS continuously moves processes between memory and storage devices (hard disk or SSD), while tracking each memory location and its allocation status.

The OS also determines which processes will get memory resources and when those resources will be allocated. As part of this operation, an OS might use swapping -- a method of moving information back and forth between the primary and secondary memory to accommodate more processes.

The OS is also responsible for handling processes when the computer runs out of physical memory space. When that happens, the OS turns to virtual memory, a type of pseudo-memory allocated from a storage drive that's been set up to emulate the computer's main memory. If memory demand exceeds the physical memory's capacity, the OS can automatically allocate virtual memory to a process as it would physical memory. However, the use of virtual memory can impact application performance because secondary storage is much slower than a computer's main memory.
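To see why relying on virtual memory can slow an application, the following minimal sketch counts how often a process has to fetch a block of its memory (a page) from secondary storage, an event usually called a page fault, for a given amount of physical memory. The first-in, first-out eviction policy, the function name `count_page_faults` and the sample access sequence are illustrative assumptions, not a description of how any particular OS behaves.

```python
from collections import deque


def count_page_faults(references, frame_count):
    """Count fetches from secondary storage under a simple FIFO policy.

    references  -- sequence of page numbers the process touches, in order
    frame_count -- how many pages fit in physical memory at once
    """
    resident = deque()  # pages currently in physical memory, oldest first
    faults = 0
    for page in references:
        if page in resident:
            continue  # already in main memory: fast access
        faults += 1  # must be read from the much slower storage drive
        if len(resident) == frame_count:
            resident.popleft()  # evict the page that was loaded first
        resident.append(page)
    return faults


accesses = [1, 2, 3, 4, 1, 2]
faults_small_memory = count_page_faults(accesses, 3)  # every access faults: 6
faults_large_memory = count_page_faults(accesses, 4)  # only first touches fault: 4
```

With room for only three pages, every access in this sequence goes to storage; with room for four, the last two accesses are served from main memory.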

Memory management at the program/application level. Memory management at this level is implemented during the application development process and controlled by the application itself, rather than being managed centrally by the OS or MMU. This type of memory management ensures the availability of adequate memory for the program's objects and data structures. It achieves this via the following:

- Memory allocation. When the program requests memory for an object or data structure, the memory is allocated to that component either manually or automatically. If manual, the developer must explicitly program the allocation into the code. If automatic, a memory manager handles the allocation, using a component called an allocator to assign the necessary memory to the object. The allocator might use one of several techniques to assign memory blocks on request: first fit, in which it allocates the first free block that's large enough for the request; the buddy system, in which it allocates blocks only in certain sizes (typically powers of two); or sub-allocators, which hand out small blocks of memory to an application from a larger block received from the system memory manager. The memory manager might be built into the programming language or available as a separate language module.

- Recycling. When a program no longer needs the memory space that has been allocated to it, that space is released and reassigned (i.e., recycled). This task can be done manually by the programmer or automatically by the memory manager; the automatic approach is often called garbage collection.
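The allocation and recycling steps above can be sketched with a toy first-fit allocator. This is a simplified illustration over a simulated address range, not the allocator of any real language runtime; the class name `FirstFitAllocator`, its methods and the sizes used are all hypothetical.

```python
class FirstFitAllocator:
    """Toy first-fit allocator over a simulated memory of `size` units."""

    def __init__(self, size):
        # Free list of (start, length) holes, kept sorted by start address.
        self.free = [(0, size)]
        self.allocated = {}  # start address -> length of the block

    def allocate(self, length):
        """Return the start of the first hole that fits, or None if none does."""
        for i, (start, hole) in enumerate(self.free):
            if hole >= length:
                if hole == length:
                    del self.free[i]  # hole is used up entirely
                else:
                    self.free[i] = (start + length, hole - length)
                self.allocated[start] = length
                return start
        return None

    def release(self, start):
        """Recycle a block: return it to the free list and merge adjacent holes."""
        length = self.allocated.pop(start)
        self.free.append((start, length))
        self.free.sort()
        merged = []
        for hole_start, hole_length in self.free:
            if merged and merged[-1][0] + merged[-1][1] == hole_start:
                merged[-1] = (merged[-1][0], merged[-1][1] + hole_length)
            else:
                merged.append((hole_start, hole_length))
        self.free = merged


heap = FirstFitAllocator(100)
a = heap.allocate(30)  # placed at address 0
b = heap.allocate(50)  # placed at address 30
c = heap.allocate(30)  # fails: only 20 units remain free
heap.release(a)        # the first block is recycled
d = heap.allocate(25)  # reuses the recycled space at address 0
```

In a language with manual memory management, the calls to `release` would be written by the developer; with automatic management, the memory manager would decide when a block is no longer needed.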

Memory management via swapping

Swapping is a common approach to memory management. It refers to the OS temporarily swapping a process out of the main memory and into secondary storage so the memory is available to other processes. The OS will then swap the original process back into memory at the appropriate time.

A process that needs to be executed must reside in the main memory. If this process is of higher priority than other processes, the memory manager will swap out the low-priority processes into the secondary memory, allowing the higher-priority process to be loaded and executed from the main memory. Once the higher-priority process has been executed, the low-priority processes are brought back into the main memory and their execution continues.
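The priority-based behavior described above can be sketched as follows. This is a deliberately simplified model: the function `load_process`, the process dictionaries, the convention that a larger number means higher priority, and the capacity of 100 units are all assumptions made for illustration.

```python
def load_process(memory, swap_space, capacity, new_proc):
    """Load new_proc, swapping out lower-priority processes if memory is full.

    Each process is a dict with 'name', 'size' and 'priority' keys.
    Returns True if the process was loaded, False if it cannot fit.
    """
    used = sum(p["size"] for p in memory)
    # Candidate victims: processes with lower priority, lowest priority first.
    victims = sorted(
        (p for p in memory if p["priority"] < new_proc["priority"]),
        key=lambda p: p["priority"],
    )
    reclaimable = sum(p["size"] for p in victims)
    if capacity - used + reclaimable < new_proc["size"]:
        return False  # would not fit even after swapping; change nothing
    for victim in victims:
        if capacity - used >= new_proc["size"]:
            break  # enough room has been freed
        memory.remove(victim)      # swap out to secondary storage
        swap_space.append(victim)
        used -= victim["size"]
    memory.append(new_proc)
    return True


CAPACITY = 100
memory, swap_space = [], []
editor = {"name": "editor", "size": 40, "priority": 1}
backup = {"name": "backup", "size": 50, "priority": 0}
compiler = {"name": "compiler", "size": 50, "priority": 5}

load_process(memory, swap_space, CAPACITY, editor)
load_process(memory, swap_space, CAPACITY, backup)
load_process(memory, swap_space, CAPACITY, compiler)  # forces a swap-out
in_memory_during = [p["name"] for p in memory]
swapped_during = [p["name"] for p in swap_space]

# The high-priority process finishes, so the swapped-out one is brought back.
memory.remove(compiler)
load_process(memory, swap_space, CAPACITY, swap_space.pop())
in_memory_after = [p["name"] for p in memory]
```

Here only the lowest-priority process is swapped out, because removing it already frees enough room for the newcomer.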

Swapping makes it possible to run more processes than would otherwise fit in memory at once. It also helps keep memory resources well utilized, and allows computers with a small main memory to run a set of processes whose combined size exceeds the available memory.

That said, swapping is not the only memory management technique. Other techniques are simpler, albeit less flexible. One is monoprogramming, which divides the memory into two parts: one for the OS and the other for a single user program. The OS, which is protected from the user program by a fence register, keeps track of the first and last locations available for allocating memory to the user program.
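As a rough illustration of how a fence register protects the OS, the sketch below checks every address a user program wants to touch against a boundary value. In real systems this check is done in hardware; the constants `FENCE` and `MEMORY_SIZE`, the exception `FenceViolation` and the function `check_user_access` are hypothetical names chosen for this example.

```python
class FenceViolation(Exception):
    """Raised when a user program touches memory reserved for the OS."""


# Hypothetical layout: the OS occupies addresses 0 .. FENCE - 1 and the
# user program may use addresses FENCE .. MEMORY_SIZE - 1.
FENCE = 0x4000
MEMORY_SIZE = 0x10000


def check_user_access(address):
    """Return the address if the user program may access it, else raise."""
    if not FENCE <= address < MEMORY_SIZE:
        raise FenceViolation(f"address {address:#x} is outside the user area")
    return address


first_user_address = check_user_access(0x4000)  # first location past the fence
try:
    check_user_access(0x3FFF)  # last location belonging to the OS
    os_area_protected = False
except FenceViolation:
    os_area_protected = True
```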

Memory management techniques

Apart from swapping and monoprogramming, other memory management techniques include the following:

- Single contiguous memory management scheme.

- Multiple partitioning.

- Paging.

- Segmentation.

The first two techniques above are types of contiguous memory management schemes; the latter two are types of noncontiguous memory management schemes.

In a single contiguous scheme, a program occupies one contiguous block of storage locations, meaning memory locations with consecutive addresses. The main memory is divided into two contiguous areas, one holding the OS and the other holding a user process. This memory management scheme is easy to implement and manage. The disadvantage is that any part of the user area the process doesn't need remains unused, leading to wastage. Also, it won't work if the program is larger than the available memory space.

The other type of contiguous memory management is multiple partitioning. This method allows multiple programs to run concurrently, with the OS dividing the available memory into multiple partitions so the programs can execute in a multiprogramming environment. The partitions can be either fixed-sized, with each partition holding a single process, or dynamic (variable-sized), with a process occupying only as much memory as it requires when loaded for execution. Both approaches are relatively easy to implement and manage, and both suffer from memory fragmentation: fixed partitions waste the space left over inside a partition when a process is smaller than the partition (internal fragmentation), while dynamic partitions leave scattered free gaps between processes (external fragmentation).
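The fragmentation that partitioning suffers from can be made concrete with a little arithmetic. The sketch below uses made-up sizes: with fixed partitions, space is wasted inside each partition (often called internal fragmentation), and with dynamic partitions, free memory can end up in separate holes that are individually too small (often called external fragmentation). The function names and numbers are illustrative assumptions.

```python
def internal_fragmentation(partition_size, process_sizes):
    """Total unused space when each process gets one fixed-size partition."""
    if any(size > partition_size for size in process_sizes):
        raise ValueError("a process does not fit in a fixed partition")
    return sum(partition_size - size for size in process_sizes)


def can_load(holes, process_size):
    """Dynamic partitions: a process needs one hole big enough to hold it."""
    return any(length >= process_size for _start, length in holes)


# Fixed partitions of 100 units holding processes of 60, 90 and 30 units.
wasted = internal_fragmentation(100, [60, 90, 30])  # 40 + 10 + 70

# Dynamic partitions: 300 units held three 100-unit processes; the first and
# third have exited, leaving two separate holes as (start, length) pairs.
holes = [(0, 100), (200, 100)]
total_free = sum(length for _start, length in holes)
fits = can_load(holes, 150)  # 200 units are free, but not in one piece
```

In the second case, 200 units are free in total, yet a 150-unit process cannot be loaded because contiguous schemes require a single hole of sufficient size.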

In noncontiguous memory management schemes, programs don't necessarily occupy adjacent (contiguous) blocks of physical memory. One scheme is paging. In this memory management method, the main memory is divided into fixed-size blocks called frames, and each process's memory is divided into blocks of the same size called pages, so that any page can be placed in any free frame. This makes it easier to swap processes in and out and makes efficient use of memory resources.
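A minimal sketch of how a logical address might be mapped to a physical one under paging is shown below. It assumes a 4,096-byte page size and a per-process page table represented as a plain dictionary from page number to frame number; real hardware performs this lookup in the MMU, and the names `PAGE_SIZE`, `translate` and `page_table` are illustrative.

```python
PAGE_SIZE = 4096  # assumed page (and frame) size in bytes


def translate(page_table, logical_address):
    """Map a logical address to a physical address using a page table."""
    page, offset = divmod(logical_address, PAGE_SIZE)
    if page not in page_table:
        # The page is not in main memory; an OS would load it from storage.
        raise LookupError(f"page fault: page {page} is not in memory")
    frame = page_table[page]
    return frame * PAGE_SIZE + offset


# Consecutive pages of the process sit in scattered, noncontiguous frames.
page_table = {0: 5, 1: 2, 2: 9}
physical = translate(page_table, 4100)  # page 1, offset 4 -> frame 2
```

Logical address 4100 falls in page 1 at offset 4; because page 1 lives in frame 2, the physical address is 2 × 4096 + 4 = 8196.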

Segmentation is similar to paging in that it is also a noncontiguous memory management method. However, it divides a program's memory into variable-sized blocks called segments, each of which is placed in its own region of physical memory. These segments correspond to a program's natural divisions (functions, subroutines, stack, etc.), which can be helpful to programmers in terms of visibility and program execution. On the flip side, segmentation is more complex than paging and contiguous memory management.
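For comparison with paging, the following sketch translates a (segment, offset) pair using a segment table that stores a base address and a length limit for each segment. The table contents, segment names and the function `translate_segment` are hypothetical and chosen only to illustrate the variable-sized nature of segments.

```python
def translate_segment(segment_table, segment, offset):
    """Map (segment, offset) to a physical address, enforcing the segment limit."""
    base, limit = segment_table[segment]
    if not 0 <= offset < limit:
        raise IndexError(f"offset {offset} is outside segment {segment!r}")
    return base + offset


# Each entry is (base address, limit); segments differ in size.
segment_table = {
    "code": (1000, 400),
    "data": (3000, 250),
    "stack": (5000, 200),
}

code_address = translate_segment(segment_table, "code", 120)  # 1000 + 120
```

Unlike a page table, each entry carries its own length, which is part of why segmentation is more complex to manage than paging.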
